diff --git a/.github/workflows/create-caches.yml b/.github/workflows/create-caches.yml

index 951718af1b..e21286a407 100644

--- a/.github/workflows/create-caches.yml

+++ b/.github/workflows/create-caches.yml

@@ -13,10 +13,10 @@ jobs:

id: vars

run: |

if [ "$RUNNER_OS" = "macOS" ]; then

- echo "::set-output name=ENV_FILE::environment-mac.yaml"

+ echo "::set-output name=ENV_FILE::environment-mac.yml"

echo "::set-output name=PYTHON_BIN::/usr/local/miniconda/envs/ldm/bin/python"

elif [ "$RUNNER_OS" = "Linux" ]; then

- echo "::set-output name=ENV_FILE::environment.yaml"

+ echo "::set-output name=ENV_FILE::environment.yml"

echo "::set-output name=PYTHON_BIN::/usr/share/miniconda/envs/ldm/bin/python"

fi

- name: Checkout sources

diff --git a/.github/workflows/test-dream-conda.yml b/.github/workflows/test-dream-conda.yml

index 6c51ebe718..b426275b26 100644

--- a/.github/workflows/test-dream-conda.yml

+++ b/.github/workflows/test-dream-conda.yml

@@ -19,10 +19,10 @@ jobs:

run: |

# Note, can't "activate" via github action; specifying the env's python has the same effect

if [ "$RUNNER_OS" = "macOS" ]; then

- echo "::set-output name=ENV_FILE::environment-mac.yaml"

+ echo "::set-output name=ENV_FILE::environment-mac.yml"

echo "::set-output name=PYTHON_BIN::/usr/local/miniconda/envs/ldm/bin/python"

elif [ "$RUNNER_OS" = "Linux" ]; then

- echo "::set-output name=ENV_FILE::environment.yaml"

+ echo "::set-output name=ENV_FILE::environment.yml"

echo "::set-output name=PYTHON_BIN::/usr/share/miniconda/envs/ldm/bin/python"

fi

- name: Checkout sources

diff --git a/README.md b/README.md

index 6300b1beb7..2c962dba8d 100644

--- a/README.md

+++ b/README.md

@@ -2,6 +2,15 @@

# InvokeAI: A Stable Diffusion Toolkit

+_Note: This fork is rapidly evolving. Please use the

+[Issues](https://github.com/invoke-ai/InvokeAI/issues) tab to

+report bugs and make feature requests. Be sure to use the provided

+templates. They will help aid diagnose issues faster._

+

+_This repository was formally known as lstein/stable-diffusion_

+

+# **Table of Contents**

+

[![discord badge]][discord link]

@@ -19,7 +28,7 @@

[discord badge]: https://flat.badgen.net/discord/members/htRgbc7e?icon=discord

[discord link]: https://discord.gg/ZmtBAhwWhy

[github forks badge]: https://flat.badgen.net/github/forks/invoke-ai/InvokeAI?icon=github

-[github forks link]: https://useful-forks.github.io/?repo=lstein%2Fstable-diffusion

+[github forks link]: https://useful-forks.github.io/?repo=invoke-ai%2FInvokeAI

[github open issues badge]: https://flat.badgen.net/github/open-issues/invoke-ai/InvokeAI?icon=github

[github open issues link]: https://github.com/invoke-ai/InvokeAI/issues?q=is%3Aissue+is%3Aopen

[github open prs badge]: https://flat.badgen.net/github/open-prs/invoke-ai/InvokeAI?icon=github

diff --git a/backend/server.py b/backend/server.py

index b5a04a6e9b..408fcf0f4c 100644

--- a/backend/server.py

+++ b/backend/server.py

@@ -103,6 +103,8 @@ socketio = SocketIO(

engineio_logger=engineio_logger,

max_http_buffer_size=max_http_buffer_size,

cors_allowed_origins=cors_allowed_origins,

+ ping_interval=(50, 50),

+ ping_timeout=60,

)

@@ -186,17 +188,50 @@ def handle_request_capabilities():

socketio.emit("systemConfig", config)

-@socketio.on("requestAllImages")

-def handle_request_all_images():

- print(f">> All images requested")

- paths = list(filter(os.path.isfile, glob.glob(result_path + "*.png")))

- paths.sort(key=lambda x: os.path.getmtime(x))

+@socketio.on("requestImages")

+def handle_request_images(page=1, offset=0, last_mtime=None):

+ chunk_size = 50

+

+ if last_mtime:

+ print(f">> Latest images requested")

+ else:

+ print(

+ f">> Page {page} of images requested (page size {chunk_size} offset {offset})"

+ )

+

+ paths = glob.glob(os.path.join(result_path, "*.png"))

+ sorted_paths = sorted(paths, key=lambda x: os.path.getmtime(x), reverse=True)

+

+ if last_mtime:

+ image_paths = filter(lambda x: os.path.getmtime(x) > last_mtime, sorted_paths)

+ else:

+

+ image_paths = sorted_paths[

+ slice(chunk_size * (page - 1) + offset, chunk_size * page + offset)

+ ]

+ page = page + 1

+

image_array = []

- for path in paths:

+

+ for path in image_paths:

metadata = retrieve_metadata(path)

- image_array.append({"url": path, "metadata": metadata["sd-metadata"]})

- socketio.emit("galleryImages", {"images": image_array})

- eventlet.sleep(0)

+ image_array.append(

+ {

+ "url": path,

+ "mtime": os.path.getmtime(path),

+ "metadata": metadata["sd-metadata"],

+ }

+ )

+

+ socketio.emit(

+ "galleryImages",

+ {

+ "images": image_array,

+ "nextPage": page,

+ "offset": offset,

+ "onlyNewImages": True if last_mtime else False,

+ },

+ )

@socketio.on("generateImage")

@@ -275,6 +310,7 @@ def handle_run_esrgan_event(original_image, esrgan_parameters):

"esrganResult",

{

"url": os.path.relpath(path),

+ "mtime": os.path.getmtime(path),

"metadata": metadata,

},

)

@@ -343,6 +379,7 @@ def handle_run_gfpgan_event(original_image, gfpgan_parameters):

"gfpganResult",

{

"url": os.path.relpath(path),

+ "mtime": os.path.mtime(path),

"metadata": metadata,

},

)

@@ -635,14 +672,19 @@ def generate_images(generation_parameters, esrgan_parameters, gfpgan_parameters)

and step < generation_parameters["steps"] - 1

):

image = generate.sample_to_image(sample)

- path = save_image(

- image, generation_parameters, intermediate_path, step_index

- )

+

+ metadata = parameters_to_generated_image_metadata(generation_parameters)

+ command = parameters_to_command(generation_parameters)

+ path = save_image(image, command, metadata, intermediate_path, step_index=step_index, postprocessing=False)

step_index += 1

socketio.emit(

"intermediateResult",

- {"url": os.path.relpath(path), "metadata": generation_parameters},

+ {

+ "url": os.path.relpath(path),

+ "mtime": os.path.getmtime(path),

+ "metadata": metadata,

+ },

)

socketio.emit("progressUpdate", progress)

eventlet.sleep(0)

@@ -670,6 +712,11 @@ def generate_images(generation_parameters, esrgan_parameters, gfpgan_parameters)

first_seed = first_seed or seed

this_variation = [[seed, all_parameters["variation_amount"]]]

all_parameters["with_variations"] = prior_variations + this_variation

+ all_parameters["seed"] = first_seed

+ elif ("with_variations" in all_parameters):

+ all_parameters["seed"] = first_seed

+ else:

+ all_parameters["seed"] = seed

if esrgan_parameters:

progress["currentStatus"] = "Upscaling"

@@ -702,7 +749,6 @@ def generate_images(generation_parameters, esrgan_parameters, gfpgan_parameters)

postprocessing = True

all_parameters["gfpgan_strength"] = gfpgan_parameters["strength"]

- all_parameters["seed"] = first_seed

progress["currentStatus"] = "Saving image"

socketio.emit("progressUpdate", progress)

eventlet.sleep(0)

@@ -735,7 +781,11 @@ def generate_images(generation_parameters, esrgan_parameters, gfpgan_parameters)

socketio.emit(

"generationResult",

- {"url": os.path.relpath(path), "metadata": metadata},

+ {

+ "url": os.path.relpath(path),

+ "mtime": os.path.getmtime(path),

+ "metadata": metadata,

+ },

)

eventlet.sleep(0)

diff --git a/docker-build/Dockerfile b/docker-build/Dockerfile

index dd6d898ce5..f3d6834c93 100644

--- a/docker-build/Dockerfile

+++ b/docker-build/Dockerfile

@@ -47,7 +47,7 @@ RUN git clone https://github.com/TencentARC/GFPGAN.git

WORKDIR /GFPGAN

RUN pip3 install -r requirements.txt \

&& python3 setup.py develop \

- && ln -s "/data/GFPGANv1.3.pth" experiments/pretrained_models/GFPGANv1.3.pth

+ && ln -s "/data/GFPGANv1.4.pth" experiments/pretrained_models/GFPGANv1.4.pth

WORKDIR /stable-diffusion

RUN python3 scripts/preload_models.py

diff --git a/docs/CHANGELOG.md b/docs/CHANGELOG.md

new file mode 100644

index 0000000000..f0d61aeb97

--- /dev/null

+++ b/docs/CHANGELOG.md

@@ -0,0 +1,137 @@

+# **Changelog**

+

+## v1.13 (in process)

+

+- Supports a Google Colab notebook for a standalone server running on Google hardware [Arturo Mendivil](https://github.com/artmen1516)

+- WebUI supports GFPGAN/ESRGAN facial reconstruction and upscaling [Kevin Gibbons](https://github.com/bakkot)

+- WebUI supports incremental display of in-progress images during generation [Kevin Gibbons](https://github.com/bakkot)

+- Output directory can be specified on the dream> command line.

+- The grid was displaying duplicated images when not enough images to fill the final row [Muhammad Usama](https://github.com/SMUsamaShah)

+- Can specify --grid on dream.py command line as the default.

+- Miscellaneous internal bug and stability fixes.

+

+---

+

+## v1.12 (28 August 2022)

+

+- Improved file handling, including ability to read prompts from standard input.

+ (kudos to [Yunsaki](https://github.com/yunsaki)

+- The web server is now integrated with the dream.py script. Invoke by adding --web to

+ the dream.py command arguments.

+- Face restoration and upscaling via GFPGAN and Real-ESGAN are now automatically

+ enabled if the GFPGAN directory is located as a sibling to Stable Diffusion.

+ VRAM requirements are modestly reduced. Thanks to both [Blessedcoolant](https://github.com/blessedcoolant) and

+ [Oceanswave](https://github.com/oceanswave) for their work on this.

+- You can now swap samplers on the dream> command line. [Blessedcoolant](https://github.com/blessedcoolant)

+

+---

+

+## v1.11 (26 August 2022)

+

+- NEW FEATURE: Support upscaling and face enhancement using the GFPGAN module. (kudos to [Oceanswave](https://github.com/Oceanswave)

+- You now can specify a seed of -1 to use the previous image's seed, -2 to use the seed for the image generated before that, etc.

+ Seed memory only extends back to the previous command, but will work on all images generated with the -n# switch.

+- Variant generation support temporarily disabled pending more general solution.

+- Created a feature branch named **yunsaki-morphing-dream** which adds experimental support for

+ iteratively modifying the prompt and its parameters. Please see[ Pull Request #86](https://github.com/lstein/stable-diffusion/pull/86)

+ for a synopsis of how this works. Note that when this feature is eventually added to the main branch, it will may be modified

+ significantly.

+

+---

+

+## v1.10 (25 August 2022)

+

+- A barebones but fully functional interactive web server for online generation of txt2img and img2img.

+

+---

+

+## v1.09 (24 August 2022)

+

+- A new -v option allows you to generate multiple variants of an initial image

+ in img2img mode. (kudos to [Oceanswave](https://github.com/Oceanswave). [

+ See this discussion in the PR for examples and details on use](https://github.com/lstein/stable-diffusion/pull/71#issuecomment-1226700810))

+- Added ability to personalize text to image generation (kudos to [Oceanswave](https://github.com/Oceanswave) and [nicolai256](https://github.com/nicolai256))

+- Enabled all of the samplers from k_diffusion

+

+---

+

+## v1.08 (24 August 2022)

+

+- Escape single quotes on the dream> command before trying to parse. This avoids

+ parse errors.

+- Removed instruction to get Python3.8 as first step in Windows install.

+ Anaconda3 does it for you.

+- Added bounds checks for numeric arguments that could cause crashes.

+- Cleaned up the copyright and license agreement files.

+

+---

+

+## v1.07 (23 August 2022)

+

+- Image filenames will now never fill gaps in the sequence, but will be assigned the

+ next higher name in the chosen directory. This ensures that the alphabetic and chronological

+ sort orders are the same.

+

+---

+

+## v1.06 (23 August 2022)

+

+- Added weighted prompt support contributed by [xraxra](https://github.com/xraxra)

+- Example of using weighted prompts to tweak a demonic figure contributed by [bmaltais](https://github.com/bmaltais)

+

+---

+

+## v1.05 (22 August 2022 - after the drop)

+

+- Filenames now use the following formats:

+ 000010.95183149.png -- Two files produced by the same command (e.g. -n2),

+ 000010.26742632.png -- distinguished by a different seed.

+

+ 000011.455191342.01.png -- Two files produced by the same command using

+ 000011.455191342.02.png -- a batch size>1 (e.g. -b2). They have the same seed.

+

+ 000011.4160627868.grid#1-4.png -- a grid of four images (-g); the whole grid can

+ be regenerated with the indicated key

+

+- It should no longer be possible for one image to overwrite another

+- You can use the "cd" and "pwd" commands at the dream> prompt to set and retrieve

+ the path of the output directory.

+

+---

+

+## v1.04 (22 August 2022 - after the drop)

+

+- Updated README to reflect installation of the released weights.

+- Suppressed very noisy and inconsequential warning when loading the frozen CLIP

+ tokenizer.

+

+---

+

+## v1.03 (22 August 2022)

+

+- The original txt2img and img2img scripts from the CompViz repository have been moved into

+ a subfolder named "orig_scripts", to reduce confusion.

+

+---

+

+## v1.02 (21 August 2022)

+

+- A copy of the prompt and all of its switches and options is now stored in the corresponding

+ image in a tEXt metadata field named "Dream". You can read the prompt using scripts/images2prompt.py,

+ or an image editor that allows you to explore the full metadata.

+ **Please run "conda env update" to load the k_lms dependencies!!**

+

+---

+

+## v1.01 (21 August 2022)

+

+- added k_lms sampling.

+ **Please run "conda env update" to load the k_lms dependencies!!**

+- use half precision arithmetic by default, resulting in faster execution and lower memory requirements

+ Pass argument --full_precision to dream.py to get slower but more accurate image generation

+

+---

+

+## Links

+

+- **[Read Me](../readme.md)**

diff --git a/docs/features/CLI.md b/docs/features/CLI.md

index aab695bbcd..4a1580fc0d 100644

--- a/docs/features/CLI.md

+++ b/docs/features/CLI.md

@@ -8,20 +8,23 @@ hide:

## **Interactive Command Line Interface**

-The `dream.py` script, located in `scripts/dream.py`, provides an interactive interface to image

-generation similar to the "dream mothership" bot that Stable AI provided on its Discord server.

+The `dream.py` script, located in `scripts/dream.py`, provides an interactive

+interface to image generation similar to the "dream mothership" bot that Stable

+AI provided on its Discord server.

Unlike the `txt2img.py` and `img2img.py` scripts provided in the original

-[CompVis/stable-diffusion](https://github.com/CompVis/stable-diffusion) source code repository, the

-time-consuming initialization of the AI model initialization only happens once. After that image

-generation from the command-line interface is very fast.

+[CompVis/stable-diffusion](https://github.com/CompVis/stable-diffusion) source

+code repository, the time-consuming initialization of the AI model

+initialization only happens once. After that image generation from the

+command-line interface is very fast.

-The script uses the readline library to allow for in-line editing, command history (++up++ and

-++down++), autocompletion, and more. To help keep track of which prompts generated which images, the

-script writes a log file of image names and prompts to the selected output directory.

+The script uses the readline library to allow for in-line editing, command

+history (++up++ and ++down++), autocompletion, and more. To help keep track of

+which prompts generated which images, the script writes a log file of image

+names and prompts to the selected output directory.

-In addition, as of version 1.02, it also writes the prompt into the PNG file's metadata where it can

-be retrieved using `scripts/images2prompt.py`

+In addition, as of version 1.02, it also writes the prompt into the PNG file's

+metadata where it can be retrieved using `scripts/images2prompt.py`

The script is confirmed to work on Linux, Windows and Mac systems.

@@ -56,21 +59,24 @@ dream> q

-The `dream>` prompt's arguments are pretty much identical to those used in the Discord bot, except

-you don't need to type "!dream" (it doesn't hurt if you do). A significant change is that creation

-of individual images is now the default unless `--grid` (`-g`) is given. A full list is given in

+The `dream>` prompt's arguments are pretty much identical to those used in the

+Discord bot, except you don't need to type "!dream" (it doesn't hurt if you do).

+A significant change is that creation of individual images is now the default

+unless `--grid` (`-g`) is given. A full list is given in

[List of prompt arguments](#list-of-prompt-arguments).

## Arguments

-The script itself also recognizes a series of command-line switches that will change important

-global defaults, such as the directory for image outputs and the location of the model weight files.

+The script itself also recognizes a series of command-line switches that will

+change important global defaults, such as the directory for image outputs and

+the location of the model weight files.

### List of arguments recognized at the command line

-These command-line arguments can be passed to `dream.py` when you first run it from the Windows, Mac

-or Linux command line. Some set defaults that can be overridden on a per-prompt basis (see [List of

-prompt arguments] (#list-of-prompt-arguments). Others

+These command-line arguments can be passed to `dream.py` when you first run it

+from the Windows, Mac or Linux command line. Some set defaults that can be

+overridden on a per-prompt basis (see [List of prompt arguments]

+(#list-of-prompt-arguments). Others

| Argument ![]() | Shortcut

| Shortcut ![]() | Default

| Default ![]() | Description |

| ----------------------------------------- | ----------------------------------------- | ---------------------------------------------- | ---------------------------------------------------------------------------------------------------- |

@@ -90,157 +96,150 @@ prompt arguments] (#list-of-prompt-arguments). Others

| `--seamless` | | `False` | Create interesting effects by tiling elements of the image. |

| `--embedding_path ` | | `None` | Path to pre-trained embedding manager checkpoints, for custom models |

| `--gfpgan_dir` | | `src/gfpgan` | Path to where GFPGAN is installed. |

-| `--gfpgan_model_path` | | `experiments/pretrained_models/GFPGANv1.3.pth` | Path to GFPGAN model file, relative to `--gfpgan_dir`. |

+| `--gfpgan_model_path` | | `experiments/pretrained_models/GFPGANv1.4.pth` | Path to GFPGAN model file, relative to `--gfpgan_dir`. |

| `--device ` | `-d` | `torch.cuda.current_device()` | Device to run SD on, e.g. "cuda:0" |

+| `--free_gpu_mem` | | `False` | Free GPU memory after sampling, to allow image decoding and saving in low VRAM conditions |

+| `--precision` | | `auto` | Set model precision, default is selected by device. Options: auto, float32, float16, autocast |

#### deprecated

These arguments are deprecated but still work:

-

-| Argument | Shortcut | Default | Description |

-| ------------------ | -------- | ------- | --------------------------------------------------------------- |

-| `--weights ` | | `None` | Pth to weights file; use `--model stable-diffusion-1.4` instead |

-| `--laion400m` | `-l` | `False` | Use older LAION400m weights; use `--model=laion400m` instead |

+| Argument | Shortcut | Default | Description |

+|--------------------|------------|---------------------|--------------|

+| --weights | | None | Pth to weights file; use `--model stable-diffusion-1.4` instead |

+| --laion400m | -l | False | Use older LAION400m weights; use `--model=laion400m` instead |

-

+**A note on path names:** On Windows systems, you may run into

+ problems when passing the dream script standard backslashed path

+ names because the Python interpreter treats "\" as an escape.

+ You can either double your slashes (ick): C:\\\\path\\\\to\\\\my\\\\file, or

+ use Linux/Mac style forward slashes (better): C:/path/to/my/file.

-!!! note

+## List of prompt arguments

- On Windows systems, you may run into problems when passing the dream script standard backslashed

- path names because the Python interpreter treats `\` as an escape. You can either double your

- slashes (ick): `C:\\path\\to\\my\\file`, or use Linux/Mac style forward slashes (better):

- `C:/path/to/my/file`.

+After the dream.py script initializes, it will present you with a

+**dream>** prompt. Here you can enter information to generate images

+from text (txt2img), to embellish an existing image or sketch

+(img2img), or to selectively alter chosen regions of the image

+(inpainting).

-### List of prompt arguments

+### This is an example of txt2img:

-After the `dream.py` script initializes, it will present you with a **`dream>`** prompt. Here you

-can enter information to generate images from text (txt2img), to embellish an existing image or

-sketch (img2img), or to selectively alter chosen regions of the image (inpainting).

+~~~~

+dream> waterfall and rainbow -W640 -H480

+~~~~

-#### txt2img

+This will create the requested image with the dimensions 640 (width)

+and 480 (height).

-!!! example

+Here are the dream> command that apply to txt2img:

- ```bash

- dream> "waterfall and rainbow" -W640 -H480

- ```

+| Argument | Shortcut | Default | Description |

+|--------------------|------------|---------------------|--------------|

+| "my prompt" | | | Text prompt to use. The quotation marks are optional. |

+| --width | -W | 512 | Width of generated image |

+| --height | -H | 512 | Height of generated image |

+| --iterations | -n | 1 | How many images to generate from this prompt |

+| --steps | -s | 50 | How many steps of refinement to apply |

+| --cfg_scale | -C | 7.5 | How hard to try to match the prompt to the generated image; any number greater than 1.0 works, but the useful range is roughly 5.0 to 20.0 |

+| --seed | -S | None | Set the random seed for the next series of images. This can be used to recreate an image generated previously.|

+| --sampler | -A| k_lms | Sampler to use. Use -h to get list of available samplers. |

+| --grid | -g | False | Turn on grid mode to return a single image combining all the images generated by this prompt |

+| --individual | -i | True | Turn off grid mode (deprecated; leave off --grid instead) |

+| --outdir | -o | outputs/img_samples | Temporarily change the location of these images |

+| --seamless | | False | Activate seamless tiling for interesting effects |

+| --log_tokenization | -t | False | Display a color-coded list of the parsed tokens derived from the prompt |

+| --skip_normalization| -x | False | Weighted subprompts will not be normalized. See [Weighted Prompts](./OTHER.md#weighted-prompts) |

+| --upscale | -U | -U 1 0.75| Upscale image by magnification factor (2, 4), and set strength of upscaling (0.0-1.0). If strength not set, will default to 0.75. |

+| --gfpgan_strength | -G | -G0 | Fix faces using the GFPGAN algorithm; argument indicates how hard the algorithm should try (0.0-1.0) |

+| --save_original | -save_orig| False | When upscaling or fixing faces, this will cause the original image to be saved rather than replaced. |

+| --variation |-v| 0.0 | Add a bit of noise (0.0=none, 1.0=high) to the image in order to generate a series of variations. Usually used in combination with -S and -n to generate a series a riffs on a starting image. See [Variations](./VARIATIONS.md). |

+| --with_variations | -V| None | Combine two or more variations. See [Variations](./VARIATIONS.md) for now to use this. |

- This will create the requested image with the dimensions 640 (width) and 480 (height).

+Note that the width and height of the image must be multiples of

+64. You can provide different values, but they will be rounded down to

+the nearest multiple of 64.

-Those are the `dream` commands that apply to txt2img:

-| Argument

| Description |

| ----------------------------------------- | ----------------------------------------- | ---------------------------------------------- | ---------------------------------------------------------------------------------------------------- |

@@ -90,157 +96,150 @@ prompt arguments] (#list-of-prompt-arguments). Others

| `--seamless` | | `False` | Create interesting effects by tiling elements of the image. |

| `--embedding_path ` | | `None` | Path to pre-trained embedding manager checkpoints, for custom models |

| `--gfpgan_dir` | | `src/gfpgan` | Path to where GFPGAN is installed. |

-| `--gfpgan_model_path` | | `experiments/pretrained_models/GFPGANv1.3.pth` | Path to GFPGAN model file, relative to `--gfpgan_dir`. |

+| `--gfpgan_model_path` | | `experiments/pretrained_models/GFPGANv1.4.pth` | Path to GFPGAN model file, relative to `--gfpgan_dir`. |

| `--device ` | `-d` | `torch.cuda.current_device()` | Device to run SD on, e.g. "cuda:0" |

+| `--free_gpu_mem` | | `False` | Free GPU memory after sampling, to allow image decoding and saving in low VRAM conditions |

+| `--precision` | | `auto` | Set model precision, default is selected by device. Options: auto, float32, float16, autocast |

#### deprecated

These arguments are deprecated but still work:

-

-| Argument | Shortcut | Default | Description |

-| ------------------ | -------- | ------- | --------------------------------------------------------------- |

-| `--weights ` | | `None` | Pth to weights file; use `--model stable-diffusion-1.4` instead |

-| `--laion400m` | `-l` | `False` | Use older LAION400m weights; use `--model=laion400m` instead |

+| Argument | Shortcut | Default | Description |

+|--------------------|------------|---------------------|--------------|

+| --weights | | None | Pth to weights file; use `--model stable-diffusion-1.4` instead |

+| --laion400m | -l | False | Use older LAION400m weights; use `--model=laion400m` instead |

-

+**A note on path names:** On Windows systems, you may run into

+ problems when passing the dream script standard backslashed path

+ names because the Python interpreter treats "\" as an escape.

+ You can either double your slashes (ick): C:\\\\path\\\\to\\\\my\\\\file, or

+ use Linux/Mac style forward slashes (better): C:/path/to/my/file.

-!!! note

+## List of prompt arguments

- On Windows systems, you may run into problems when passing the dream script standard backslashed

- path names because the Python interpreter treats `\` as an escape. You can either double your

- slashes (ick): `C:\\path\\to\\my\\file`, or use Linux/Mac style forward slashes (better):

- `C:/path/to/my/file`.

+After the dream.py script initializes, it will present you with a

+**dream>** prompt. Here you can enter information to generate images

+from text (txt2img), to embellish an existing image or sketch

+(img2img), or to selectively alter chosen regions of the image

+(inpainting).

-### List of prompt arguments

+### This is an example of txt2img:

-After the `dream.py` script initializes, it will present you with a **`dream>`** prompt. Here you

-can enter information to generate images from text (txt2img), to embellish an existing image or

-sketch (img2img), or to selectively alter chosen regions of the image (inpainting).

+~~~~

+dream> waterfall and rainbow -W640 -H480

+~~~~

-#### txt2img

+This will create the requested image with the dimensions 640 (width)

+and 480 (height).

-!!! example

+Here are the dream> command that apply to txt2img:

- ```bash

- dream> "waterfall and rainbow" -W640 -H480

- ```

+| Argument | Shortcut | Default | Description |

+|--------------------|------------|---------------------|--------------|

+| "my prompt" | | | Text prompt to use. The quotation marks are optional. |

+| --width | -W | 512 | Width of generated image |

+| --height | -H | 512 | Height of generated image |

+| --iterations | -n | 1 | How many images to generate from this prompt |

+| --steps | -s | 50 | How many steps of refinement to apply |

+| --cfg_scale | -C | 7.5 | How hard to try to match the prompt to the generated image; any number greater than 1.0 works, but the useful range is roughly 5.0 to 20.0 |

+| --seed | -S | None | Set the random seed for the next series of images. This can be used to recreate an image generated previously.|

+| --sampler | -A| k_lms | Sampler to use. Use -h to get list of available samplers. |

+| --grid | -g | False | Turn on grid mode to return a single image combining all the images generated by this prompt |

+| --individual | -i | True | Turn off grid mode (deprecated; leave off --grid instead) |

+| --outdir | -o | outputs/img_samples | Temporarily change the location of these images |

+| --seamless | | False | Activate seamless tiling for interesting effects |

+| --log_tokenization | -t | False | Display a color-coded list of the parsed tokens derived from the prompt |

+| --skip_normalization| -x | False | Weighted subprompts will not be normalized. See [Weighted Prompts](./OTHER.md#weighted-prompts) |

+| --upscale | -U | -U 1 0.75| Upscale image by magnification factor (2, 4), and set strength of upscaling (0.0-1.0). If strength not set, will default to 0.75. |

+| --gfpgan_strength | -G | -G0 | Fix faces using the GFPGAN algorithm; argument indicates how hard the algorithm should try (0.0-1.0) |

+| --save_original | -save_orig| False | When upscaling or fixing faces, this will cause the original image to be saved rather than replaced. |

+| --variation |-v| 0.0 | Add a bit of noise (0.0=none, 1.0=high) to the image in order to generate a series of variations. Usually used in combination with -S and -n to generate a series a riffs on a starting image. See [Variations](./VARIATIONS.md). |

+| --with_variations | -V| None | Combine two or more variations. See [Variations](./VARIATIONS.md) for now to use this. |

- This will create the requested image with the dimensions 640 (width) and 480 (height).

+Note that the width and height of the image must be multiples of

+64. You can provide different values, but they will be rounded down to

+the nearest multiple of 64.

-Those are the `dream` commands that apply to txt2img:

-| Argument ![]() | Shortcut

| Shortcut ![]() | Default

| Default ![]() | Description |

-| ----------------------------------------- | ----------------------------------------- | ---------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |

-| `"my prompt"` | | | Text prompt to use. The quotation marks are optional. |

-| `--width ` | `-W` | `512` | Width of generated image |

-| `--height ` | `-H` | `512` | Height of generated image |

-| `--iterations ` | `-n` | `1` | How many images to generate from this prompt |

-| `--steps ` | `-s` | `50` | How many steps of refinement to apply |

-| `--cfg_scale ` | `-C` | `7.5` | How hard to try to match the prompt to the generated image; any number greater than 0.0 works, but the useful range is roughly 5.0 to 20.0 |

-| `--seed ` | `-S` | `None` | Set the random seed for the next series of images. This can be used to recreate an image generated previously. |

-| `--sampler ` | `-A` | `k_lms` | Sampler to use. Use `-h` to get list of available samplers. |

-| `--grid` | `-g` | `False` | Turn on grid mode to return a single image combining all the images generated by this prompt |

-| `--individual` | `-i` | `True` | Turn off grid mode (deprecated; leave off `--grid` instead) |

-| `--outdir ` | `-o` | `outputs/img_samples` | Temporarily change the location of these images |

-| `--seamless` | | `False` | Activate seamless tiling for interesting effects |

-| `--log_tokenization` | `-t` | `False` | Display a color-coded list of the parsed tokens derived from the prompt |

-| `--skip_normalization` | `-x` | `False` | Weighted subprompts will not be normalized. See [Weighted Prompts](./OTHER.md#weighted-prompts) |

-| `--upscale ` | `-U ` | `-U 1 0.75` | Upscale image by magnification factor (2, 4), and set strength of upscaling (0.0-1.0). If strength not set, will default to 0.75. |

-| `--gfpgan_strength ` | `-G ` | `-G0` | Fix faces using the GFPGAN algorithm; argument indicates how hard the algorithm should try (0.0-1.0) |

-| `--save_original` | `-save_orig` | `False` | When upscaling or fixing faces, this will cause the original image to be saved rather than replaced. |

-| `--variation ` | `-v` | `0.0` | Add a bit of noise (0.0=none, 1.0=high) to the image in order to generate a series of variations. Usually used in combination with `-S` and `-n` to generate a series a riffs on a starting image. See [Variations](./VARIATIONS.md). |

-| `--with_variations ` | `-V` | `None` | Combine two or more variations. See [Variations](./VARIATIONS.md) for now to use this. |

+### This is an example of img2img:

-!!! note

+~~~~

+dream> waterfall and rainbow -I./vacation-photo.png -W640 -H480 --fit

+~~~~

- The width and height of the image must be multiples of 64. You can provide different

- values, but they will be rounded down to the nearest multiple of 64.

+This will modify the indicated vacation photograph by making it more

+like the prompt. Results will vary greatly depending on what is in the

+image. We also ask to --fit the image into a box no bigger than

+640x480. Otherwise the image size will be identical to the provided

+photo and you may run out of memory if it is large.

-#### img2img

+In addition to the command-line options recognized by txt2img, img2img

+accepts additional options:

-!!! example

+| Argument | Shortcut | Default | Description |

+|--------------------|------------|---------------------|--------------|

+| --init_img | -I | None | Path to the initialization image |

+| --fit | -F | False | Scale the image to fit into the specified -H and -W dimensions |

+| --strength | -s | 0.75 | How hard to try to match the prompt to the initial image. Ranges from 0.0-0.99, with higher values replacing the initial image completely.|

- ```bash

- dream> "waterfall and rainbow" -I./vacation-photo.png -W640 -H480 --fit

- ```

+### This is an example of inpainting:

- This will modify the indicated vacation photograph by making it more like the prompt. Results will

- vary greatly depending on what is in the image. We also ask to --fit the image into a box no bigger

- than 640x480. Otherwise the image size will be identical to the provided photo and you may run out

- of memory if it is large.

+~~~~

+dream> waterfall and rainbow -I./vacation-photo.png -M./vacation-mask.png -W640 -H480 --fit

+~~~~

-Repeated chaining of img2img on an image can result in significant color shifts in the output,

-especially if run with lower strength. Color correction can be run against a reference image to fix

-this issue. Use the original input image to the chain as the the reference image for each step in

-the chain.

+This will do the same thing as img2img, but image alterations will

+only occur within transparent areas defined by the mask file specified

+by -M. You may also supply just a single initial image with the areas

+to overpaint made transparent, but you must be careful not to destroy

+the pixels underneath when you create the transparent areas. See

+[Inpainting](./INPAINTING.md) for details.

-In addition to the command-line options recognized by txt2img, img2img accepts additional options:

+inpainting accepts all the arguments used for txt2img and img2img, as

+well as the --mask (-M) argument:

-| Argument

| Description |

-| ----------------------------------------- | ----------------------------------------- | ---------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |

-| `"my prompt"` | | | Text prompt to use. The quotation marks are optional. |

-| `--width ` | `-W` | `512` | Width of generated image |

-| `--height ` | `-H` | `512` | Height of generated image |

-| `--iterations ` | `-n` | `1` | How many images to generate from this prompt |

-| `--steps ` | `-s` | `50` | How many steps of refinement to apply |

-| `--cfg_scale ` | `-C` | `7.5` | How hard to try to match the prompt to the generated image; any number greater than 0.0 works, but the useful range is roughly 5.0 to 20.0 |

-| `--seed ` | `-S` | `None` | Set the random seed for the next series of images. This can be used to recreate an image generated previously. |

-| `--sampler ` | `-A` | `k_lms` | Sampler to use. Use `-h` to get list of available samplers. |

-| `--grid` | `-g` | `False` | Turn on grid mode to return a single image combining all the images generated by this prompt |

-| `--individual` | `-i` | `True` | Turn off grid mode (deprecated; leave off `--grid` instead) |

-| `--outdir ` | `-o` | `outputs/img_samples` | Temporarily change the location of these images |

-| `--seamless` | | `False` | Activate seamless tiling for interesting effects |

-| `--log_tokenization` | `-t` | `False` | Display a color-coded list of the parsed tokens derived from the prompt |

-| `--skip_normalization` | `-x` | `False` | Weighted subprompts will not be normalized. See [Weighted Prompts](./OTHER.md#weighted-prompts) |

-| `--upscale ` | `-U ` | `-U 1 0.75` | Upscale image by magnification factor (2, 4), and set strength of upscaling (0.0-1.0). If strength not set, will default to 0.75. |

-| `--gfpgan_strength ` | `-G ` | `-G0` | Fix faces using the GFPGAN algorithm; argument indicates how hard the algorithm should try (0.0-1.0) |

-| `--save_original` | `-save_orig` | `False` | When upscaling or fixing faces, this will cause the original image to be saved rather than replaced. |

-| `--variation ` | `-v` | `0.0` | Add a bit of noise (0.0=none, 1.0=high) to the image in order to generate a series of variations. Usually used in combination with `-S` and `-n` to generate a series a riffs on a starting image. See [Variations](./VARIATIONS.md). |

-| `--with_variations ` | `-V` | `None` | Combine two or more variations. See [Variations](./VARIATIONS.md) for now to use this. |

+### This is an example of img2img:

-!!! note

+~~~~

+dream> waterfall and rainbow -I./vacation-photo.png -W640 -H480 --fit

+~~~~

- The width and height of the image must be multiples of 64. You can provide different

- values, but they will be rounded down to the nearest multiple of 64.

+This will modify the indicated vacation photograph by making it more

+like the prompt. Results will vary greatly depending on what is in the

+image. We also ask to --fit the image into a box no bigger than

+640x480. Otherwise the image size will be identical to the provided

+photo and you may run out of memory if it is large.

-#### img2img

+In addition to the command-line options recognized by txt2img, img2img

+accepts additional options:

-!!! example

+| Argument | Shortcut | Default | Description |

+|--------------------|------------|---------------------|--------------|

+| --init_img | -I | None | Path to the initialization image |

+| --fit | -F | False | Scale the image to fit into the specified -H and -W dimensions |

+| --strength | -s | 0.75 | How hard to try to match the prompt to the initial image. Ranges from 0.0-0.99, with higher values replacing the initial image completely.|

- ```bash

- dream> "waterfall and rainbow" -I./vacation-photo.png -W640 -H480 --fit

- ```

+### This is an example of inpainting:

- This will modify the indicated vacation photograph by making it more like the prompt. Results will

- vary greatly depending on what is in the image. We also ask to --fit the image into a box no bigger

- than 640x480. Otherwise the image size will be identical to the provided photo and you may run out

- of memory if it is large.

+~~~~

+dream> waterfall and rainbow -I./vacation-photo.png -M./vacation-mask.png -W640 -H480 --fit

+~~~~

-Repeated chaining of img2img on an image can result in significant color shifts in the output,

-especially if run with lower strength. Color correction can be run against a reference image to fix

-this issue. Use the original input image to the chain as the the reference image for each step in

-the chain.

+This will do the same thing as img2img, but image alterations will

+only occur within transparent areas defined by the mask file specified

+by -M. You may also supply just a single initial image with the areas

+to overpaint made transparent, but you must be careful not to destroy

+the pixels underneath when you create the transparent areas. See

+[Inpainting](./INPAINTING.md) for details.

-In addition to the command-line options recognized by txt2img, img2img accepts additional options:

+inpainting accepts all the arguments used for txt2img and img2img, as

+well as the --mask (-M) argument:

-| Argument ![]() | Shortcut | Default | Description |

-| ----------------------------------------- | ----------- | ------- | ------------------------------------------------------------------------------------------------------------------------------------------ |

-| `--init_img ` | `-I` | `None` | Path to the initialization image |

-| `--init_color ` | | `None` | Path to reference image for color correction |

-| `--fit` | `-F` | `False` | Scale the image to fit into the specified -H and -W dimensions |

-| `--strength ` | `-f` | `0.75` | How hard to try to match the prompt to the initial image. Ranges from 0.0-0.99, with higher values replacing the initial image completely. |

+| Argument | Shortcut | Default | Description |

+|--------------------|------------|---------------------|--------------|

+| --init_mask | -M | None |Path to an image the same size as the initial_image, with areas for inpainting made transparent.|

-#### Inpainting

-!!! example

+# Command-line editing and completion

- ```bash

- dream> "waterfall and rainbow" -I./vacation-photo.png -M./vacation-mask.png -W640 -H480 --fit

- ```

+If you are on a Macintosh or Linux machine, the command-line offers

+convenient history tracking, editing, and command completion.

- This will do the same thing as img2img, but image alterations will only occur within transparent

- areas defined by the mask file specified by `-M`. You may also supply just a single initial image with

- the areas to overpaint made transparent, but you must be careful not to destroy the pixels

- underneath when you create the transparent areas. See [Inpainting](./INPAINTING.md) for details.

+- To scroll through previous commands and potentially edit/reuse them, use the up and down cursor keys.

+- To edit the current command, use the left and right cursor keys to position the cursor, and then backspace, delete or insert characters.

+- To move to the very beginning of the command, type CTRL-A (or command-A on the Mac)

+- To move to the end of the command, type CTRL-E.

+- To cut a section of the command, position the cursor where you want to start cutting and type CTRL-K.

+- To paste a cut section back in, position the cursor where you want to paste, and type CTRL-Y

-Inpainting accepts all the arguments used for txt2img and img2img, as well as the `--mask` (`-M`)

-argument:

+Windows users can get similar, but more limited, functionality if they

+launch dream.py with the "winpty" program:

-| Argument

| Shortcut | Default | Description |

-| ----------------------------------------- | ----------- | ------- | ------------------------------------------------------------------------------------------------------------------------------------------ |

-| `--init_img ` | `-I` | `None` | Path to the initialization image |

-| `--init_color ` | | `None` | Path to reference image for color correction |

-| `--fit` | `-F` | `False` | Scale the image to fit into the specified -H and -W dimensions |

-| `--strength ` | `-f` | `0.75` | How hard to try to match the prompt to the initial image. Ranges from 0.0-0.99, with higher values replacing the initial image completely. |

+| Argument | Shortcut | Default | Description |

+|--------------------|------------|---------------------|--------------|

+| --init_mask | -M | None |Path to an image the same size as the initial_image, with areas for inpainting made transparent.|

-#### Inpainting

-!!! example

+# Command-line editing and completion

- ```bash

- dream> "waterfall and rainbow" -I./vacation-photo.png -M./vacation-mask.png -W640 -H480 --fit

- ```

+If you are on a Macintosh or Linux machine, the command-line offers

+convenient history tracking, editing, and command completion.

- This will do the same thing as img2img, but image alterations will only occur within transparent

- areas defined by the mask file specified by `-M`. You may also supply just a single initial image with

- the areas to overpaint made transparent, but you must be careful not to destroy the pixels

- underneath when you create the transparent areas. See [Inpainting](./INPAINTING.md) for details.

+- To scroll through previous commands and potentially edit/reuse them, use the up and down cursor keys.

+- To edit the current command, use the left and right cursor keys to position the cursor, and then backspace, delete or insert characters.

+- To move to the very beginning of the command, type CTRL-A (or command-A on the Mac)

+- To move to the end of the command, type CTRL-E.

+- To cut a section of the command, position the cursor where you want to start cutting and type CTRL-K.

+- To paste a cut section back in, position the cursor where you want to paste, and type CTRL-Y

-Inpainting accepts all the arguments used for txt2img and img2img, as well as the `--mask` (`-M`)

-argument:

+Windows users can get similar, but more limited, functionality if they

+launch dream.py with the "winpty" program:

-| Argument ![]() | Shortcut | Default | Description |

-| ----------------------------------------- | ---------- | ------- | ------------------------------------------------------------------------------------------------ |

-| `--init_mask ` | `-M` | `None` | Path to an image the same size as the initial_image, with areas for inpainting made transparent. |

+~~~

+> winpty python scripts\dream.py

+~~~

-## Command-line editing and completion

+On the Mac and Linux platforms, when you exit dream.py, the last 1000

+lines of your command-line history will be saved. When you restart

+dream.py, you can access the saved history using the up-arrow key.

-If you are on a Macintosh or Linux machine, the command-line offers convenient history tracking,

-editing, and command completion.

+In addition, limited command-line completion is installed. In various

+contexts, you can start typing your command and press tab. A list of

+potential completions will be presented to you. You can then type a

+little more, hit tab again, and eventually autocomplete what you want.

-- To scroll through previous commands and potentially edit/reuse them, use the ++up++ and ++down++

- cursor keys.

-- To edit the current command, use the ++left++ and ++right++ cursor keys to position the cursor,

- and then ++backspace++, ++delete++ or ++insert++ characters.

-- To move to the very beginning of the command, type ++ctrl+a++ (or ++command+a++ on the Mac)

-- To move to the end of the command, type ++ctrl+e++.

-- To cut a section of the command, position the cursor where you want to start cutting and type

- ++ctrl+k++.

-- To paste a cut section back in, position the cursor where you want to paste, and type ++ctrl+y++

+When specifying file paths using the one-letter shortcuts, the CLI

+will attempt to complete pathnames for you. This is most handy for the

+-I (init image) and -M (init mask) paths. To initiate completion, start

+the path with a slash ("/") or "./". For example:

-Windows users can get similar, but more limited, functionality if they launch `dream.py` with the

-"winpty" program:

-

-```batch

-winpty python scripts\dream.py

-```

-

-On the Mac and Linux platforms, when you exit `dream.py`, the last 1000 lines of your command-line

-history will be saved. When you restart `dream.py`, you can access the saved history using the

-++up++ key.

-

-In addition, limited command-line completion is installed. In various contexts, you can start typing

-your command and press tab. A list of potential completions will be presented to you. You can then

-type a little more, hit tab again, and eventually autocomplete what you want.

-

-When specifying file paths using the one-letter shortcuts, the CLI will attempt to complete

-pathnames for you. This is most handy for the `-I` (init image) and `-M` (init mask) paths. To

-initiate completion, start the path with a slash `/` or `./`, for example:

-

-```bash

-dream> "zebra with a mustache" -I./test-pictures

+~~~

+dream> zebra with a mustache -I./test-pictures

-I./test-pictures/Lincoln-and-Parrot.png -I./test-pictures/zebra.jpg -I./test-pictures/madonna.png

-I./test-pictures/bad-sketch.png -I./test-pictures/man_with_eagle/

```

diff --git a/docs/features/UPSCALE.md b/docs/features/UPSCALE.md

index f68bac987c..1d9e7e0335 100644

--- a/docs/features/UPSCALE.md

+++ b/docs/features/UPSCALE.md

@@ -4,37 +4,42 @@ title: Upscale

## Intro

-The script provides the ability to restore faces and upscale. You can apply these operations

-at the time you generate the images, or at any time to a previously-generated PNG file, using

-the [!fix](#fixing-previously-generated-images) command.

+The script provides the ability to restore faces and upscale. You can apply

+these operations at the time you generate the images, or at any time to a

+previously-generated PNG file, using the

+[!fix](#fixing-previously-generated-images) command.

## Face Fixing

-The default face restoration module is GFPGAN. The default upscale is Real-ESRGAN. For an alternative

-face restoration module, see [CodeFormer Support] below.

+The default face restoration module is GFPGAN. The default upscale is

+Real-ESRGAN. For an alternative face restoration module, see [CodeFormer

+Support] below.

-As of version 1.14, environment.yaml will install the Real-ESRGAN package into the standard install

-location for python packages, and will put GFPGAN into a subdirectory of "src" in the

-stable-diffusion directory. (The reason for this is that the standard GFPGAN distribution has a

-minor bug that adversely affects image color.) Upscaling with Real-ESRGAN should "just work" without

-further intervention. Simply pass the --upscale (-U) option on the dream> command line, or indicate

-the desired scale on the popup in the Web GUI.

+As of version 1.14, environment.yaml will install the Real-ESRGAN package into

+the standard install location for python packages, and will put GFPGAN into a

+subdirectory of "src" in the InvokeAI directory. (The reason for this is

+that the standard GFPGAN distribution has a minor bug that adversely affects

+image color.) Upscaling with Real-ESRGAN should "just work" without further

+intervention. Simply pass the --upscale (-U) option on the dream> command line,

+or indicate the desired scale on the popup in the Web GUI.

-For **GFPGAN** to work, there is one additional step needed. You will need to download and copy the

-GFPGAN [models file](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth)

-into **src/gfpgan/experiments/pretrained_models**. On Mac and Linux systems, here's how you'd do it

-using **wget**:

+For **GFPGAN** to work, there is one additional step needed. You will need to

+download and copy the GFPGAN

+[models file](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.4.pth)

+into **src/gfpgan/experiments/pretrained_models**. On Mac and Linux systems,

+here's how you'd do it using **wget**:

```bash

-> wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth src/gfpgan/experiments/pretrained_models/

+> wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.4.pth src/gfpgan/experiments/pretrained_models/

```

-Make sure that you're in the stable-diffusion directory when you do this.

+Make sure that you're in the InvokeAI directory when you do this.

-Alternatively, if you have GFPGAN installed elsewhere, or if you are using an earlier version of

-this package which asked you to install GFPGAN in a sibling directory, you may use the

-`--gfpgan_dir` argument with `dream.py` to set a custom path to your GFPGAN directory. _There are

-other GFPGAN related boot arguments if you wish to customize further._

+Alternatively, if you have GFPGAN installed elsewhere, or if you are using an

+earlier version of this package which asked you to install GFPGAN in a sibling

+directory, you may use the `--gfpgan_dir` argument with `dream.py` to set a

+custom path to your GFPGAN directory. _There are other GFPGAN related boot

+arguments if you wish to customize further._

!!! warning "Internet connection needed"

@@ -52,13 +57,14 @@ You will now have access to two new prompt arguments.

`-U : `

-The upscaling prompt argument takes two values. The first value is a scaling factor and should be

-set to either `2` or `4` only. This will either scale the image 2x or 4x respectively using

-different models.

+The upscaling prompt argument takes two values. The first value is a scaling

+factor and should be set to either `2` or `4` only. This will either scale the

+image 2x or 4x respectively using different models.

-You can set the scaling stength between `0` and `1.0` to control intensity of the of the scaling.

-This is handy because AI upscalers generally tend to smooth out texture details. If you wish to

-retain some of those for natural looking results, we recommend using values between `0.5 to 0.8`.

+You can set the scaling stength between `0` and `1.0` to control intensity of

+the of the scaling. This is handy because AI upscalers generally tend to smooth

+out texture details. If you wish to retain some of those for natural looking

+results, we recommend using values between `0.5 to 0.8`.

If you do not explicitly specify an upscaling_strength, it will default to 0.75.

@@ -66,18 +72,19 @@ If you do not explicitly specify an upscaling_strength, it will default to 0.75.

`-G : `

-This prompt argument controls the strength of the face restoration that is being applied. Similar to

-upscaling, values between `0.5 to 0.8` are recommended.

+This prompt argument controls the strength of the face restoration that is being

+applied. Similar to upscaling, values between `0.5 to 0.8` are recommended.

-You can use either one or both without any conflicts. In cases where you use both, the image will be

-first upscaled and then the face restoration process will be executed to ensure you get the highest

-quality facial features.

+You can use either one or both without any conflicts. In cases where you use

+both, the image will be first upscaled and then the face restoration process

+will be executed to ensure you get the highest quality facial features.

`--save_orig`

-When you use either `-U` or `-G`, the final result you get is upscaled or face modified. If you want

-to save the original Stable Diffusion generation, you can use the `-save_orig` prompt argument to

-save the original unaffected version too.

+When you use either `-U` or `-G`, the final result you get is upscaled or face

+modified. If you want to save the original Stable Diffusion generation, you can

+use the `-save_orig` prompt argument to save the original unaffected version

+too.

### Example Usage

@@ -102,60 +109,69 @@ dream> a man wearing a pineapple hat -I path/to/your/file.png -U 2 0.5 -G 0.6

process is complete. While the image generation is taking place, you will still be able to preview

the base images.

-If you wish to stop during the image generation but want to upscale or face restore a particular

-generated image, pass it again with the same prompt and generated seed along with the `-U` and `-G`

-prompt arguments to perform those actions.

+If you wish to stop during the image generation but want to upscale or face

+restore a particular generated image, pass it again with the same prompt and

+generated seed along with the `-U` and `-G` prompt arguments to perform those

+actions.

## CodeFormer Support

This repo also allows you to perform face restoration using

[CodeFormer](https://github.com/sczhou/CodeFormer).

-In order to setup CodeFormer to work, you need to download the models like with GFPGAN. You can do

-this either by running `preload_models.py` or by manually downloading the

-[model file](https://github.com/sczhou/CodeFormer/releases/download/v0.1.0/codeformer.pth) and

-saving it to `ldm/restoration/codeformer/weights` folder.

+In order to setup CodeFormer to work, you need to download the models like with

+GFPGAN. You can do this either by running `preload_models.py` or by manually

+downloading the

+[model file](https://github.com/sczhou/CodeFormer/releases/download/v0.1.0/codeformer.pth)

+and saving it to `ldm/restoration/codeformer/weights` folder.

-You can use `-ft` prompt argument to swap between CodeFormer and the default GFPGAN. The above

-mentioned `-G` prompt argument will allow you to control the strength of the restoration effect.

+You can use `-ft` prompt argument to swap between CodeFormer and the default

+GFPGAN. The above mentioned `-G` prompt argument will allow you to control the

+strength of the restoration effect.

### Usage:

-The following command will perform face restoration with CodeFormer instead of the default gfpgan.

+The following command will perform face restoration with CodeFormer instead of

+the default gfpgan.

` -G 0.8 -ft codeformer`

### Other Options:

-- `-cf` - cf or CodeFormer Fidelity takes values between `0` and `1`. 0 produces high quality

- results but low accuracy and 1 produces lower quality results but higher accuacy to your original

- face.

+- `-cf` - cf or CodeFormer Fidelity takes values between `0` and `1`. 0 produces

+ high quality results but low accuracy and 1 produces lower quality results but

+ higher accuacy to your original face.

-The following command will perform face restoration with CodeFormer. CodeFormer will output a result

-that is closely matching to the input face.

+The following command will perform face restoration with CodeFormer. CodeFormer

+will output a result that is closely matching to the input face.

` -G 1.0 -ft codeformer -cf 0.9`

-The following command will perform face restoration with CodeFormer. CodeFormer will output a result

-that is the best restoration possible. This may deviate slightly from the original face. This is an

-excellent option to use in situations when there is very little facial data to work with.

+The following command will perform face restoration with CodeFormer. CodeFormer

+will output a result that is the best restoration possible. This may deviate

+slightly from the original face. This is an excellent option to use in

+situations when there is very little facial data to work with.

` -G 1.0 -ft codeformer -cf 0.1`

## Fixing Previously-Generated Images

-It is easy to apply face restoration and/or upscaling to any previously-generated file. Just use the

-syntax `!fix path/to/file.png `. For example, to apply GFPGAN at strength 0.8 and upscale 2X

-for a file named `./outputs/img-samples/000044.2945021133.png`, just run:

+It is easy to apply face restoration and/or upscaling to any

+previously-generated file. Just use the syntax

+`!fix path/to/file.png `. For example, to apply GFPGAN at strength 0.8

+and upscale 2X for a file named `./outputs/img-samples/000044.2945021133.png`,

+just run:

-~~~~

+```

dream> !fix ./outputs/img-samples/000044.2945021133.png -G 0.8 -U 2

-~~~~

+```

-A new file named `000044.2945021133.fixed.png` will be created in the output directory. Note that

-the `!fix` command does not replace the original file, unlike the behavior at generate time.

+A new file named `000044.2945021133.fixed.png` will be created in the output

+directory. Note that the `!fix` command does not replace the original file,

+unlike the behavior at generate time.

### Disabling:

-If, for some reason, you do not wish to load the GFPGAN and/or ESRGAN libraries, you can disable them

-on the dream.py command line with the `--no_restore` and `--no_upscale` options, respectively.

+If, for some reason, you do not wish to load the GFPGAN and/or ESRGAN libraries,

+you can disable them on the dream.py command line with the `--no_restore` and

+`--no_upscale` options, respectively.

diff --git a/docs/help/SAMPLER_CONVERGENCE.md b/docs/help/SAMPLER_CONVERGENCE.md

new file mode 100644

index 0000000000..5dfee5dc4e

--- /dev/null

+++ b/docs/help/SAMPLER_CONVERGENCE.md

@@ -0,0 +1,141 @@

+---

+title: SAMPLER CONVERGENCE

+---

+

+## *Sampler Convergence*

+

+As features keep increasing, making the right choices for your needs can become increasingly difficult. What sampler to use? And for how many steps? Do you change the CFG value? Do you use prompt weighting? Do you allow variations?

+

+Even once you have a result, do you blend it with other images? Pass it through `img2img`? With what strength? Do you use inpainting to correct small details? Outpainting to extend cropped sections?

+

+The purpose of this series of documents is to help you better understand these tools, so you can make the best out of them. Feel free to contribute with your own findings!

+

+In this document, we will talk about sampler convergence.

+

+Looking for a short version? Here's a TL;DR in 3 tables.

+

+| Remember |

+|:---|

+| Results converge as steps (`-s`) are increased (except for `K_DPM_2_A` and `K_EULER_A`). Often at ≥ `-s100`, but may require ≥ `-s700`). |

+| Producing a batch of candidate images at low (`-s8` to `-s30`) step counts can save you hours of computation. |

+| `K_HEUN` and `K_DPM_2` converge in less steps (but are slower). |

+| `K_DPM_2_A` and `K_EULER_A` incorporate a lot of creativity/variability. |

+

+| Sampler | (3 sample avg) it/s (M1 Max 64GB, 512x512) |

+|---|---|

+| `DDIM` | 1.89 |

+| `PLMS` | 1.86 |

+| `K_EULER` | 1.86 |

+| `K_LMS` | 1.91 |

+| `K_HEUN` | 0.95 (slower) |

+| `K_DPM_2` | 0.95 (slower) |

+| `K_DPM_2_A` | 0.95 (slower) |

+| `K_EULER_A` | 1.86 |

+

+| Suggestions |

+|:---|

+| For most use cases, `K_LMS`, `K_HEUN` and `K_DPM_2` are the best choices (the latter 2 run 0.5x as quick, but tend to converge 2x as quick as `K_LMS`). At very low steps (≤ `-s8`), `K_HEUN` and `K_DPM_2` are not recommended. Use `K_LMS` instead.|

+| For variability, use `K_EULER_A` (runs 2x as quick as `K_DPM_2_A`). |

+

+---

+

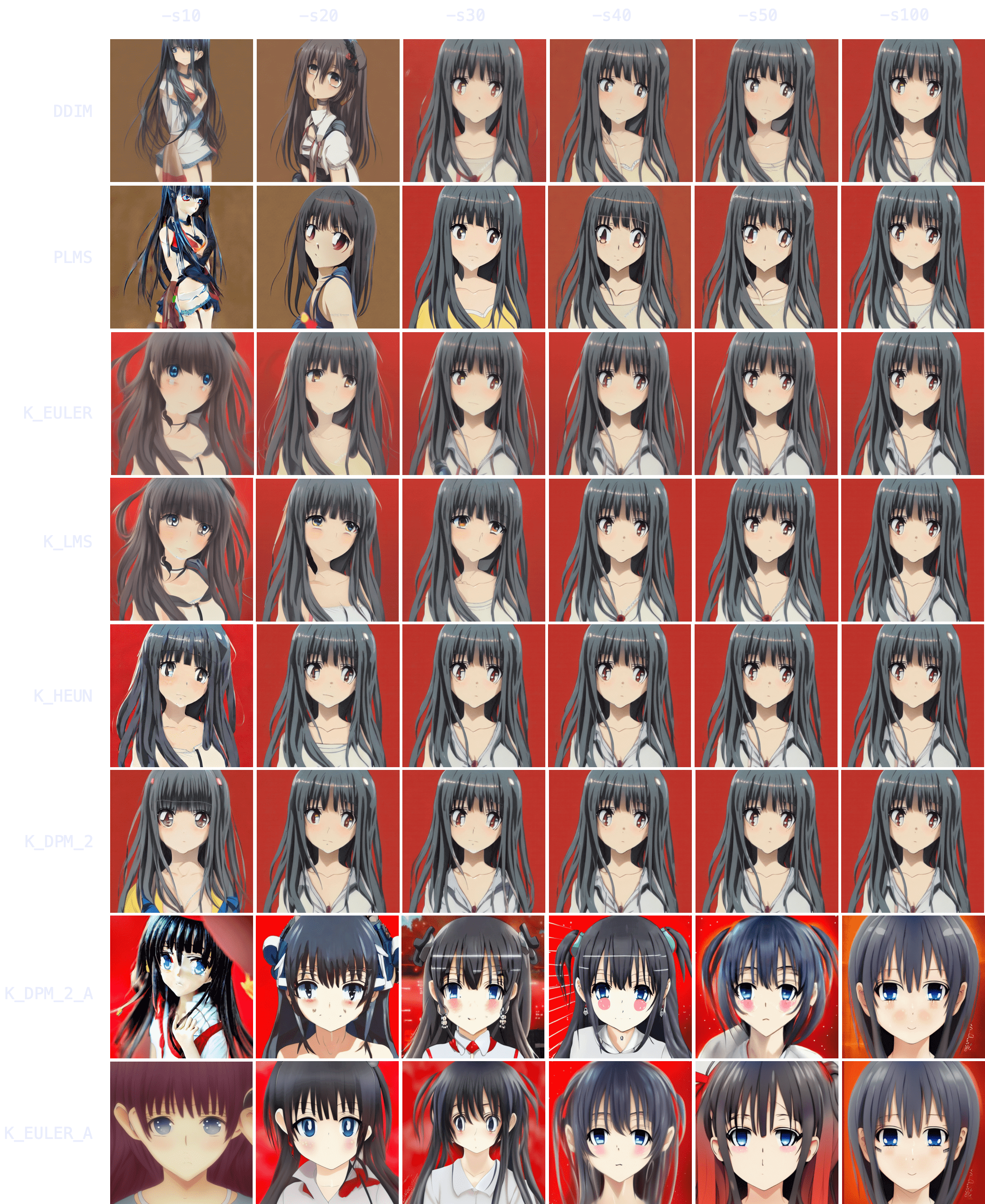

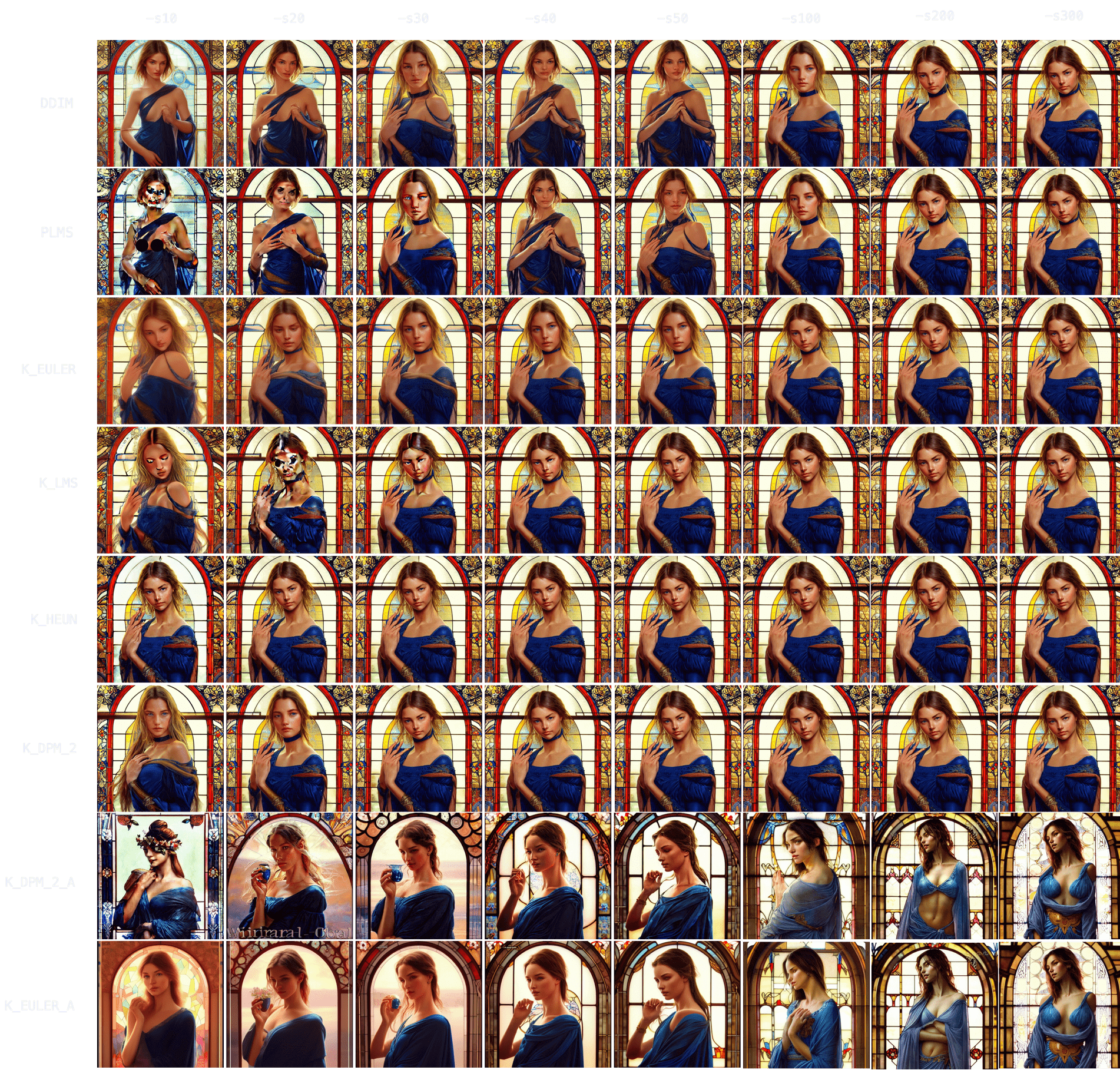

+### *Sampler results*

+

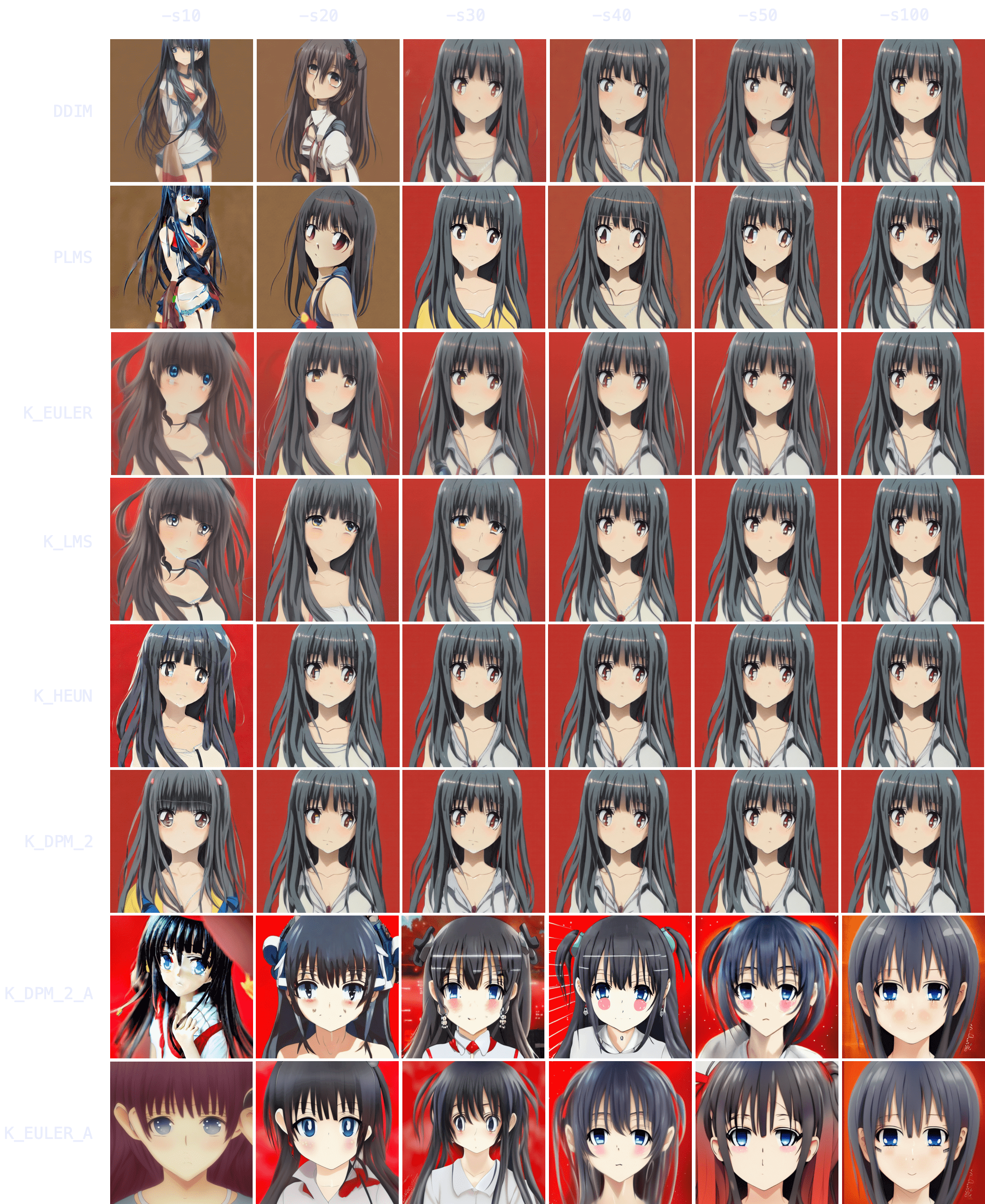

+Let's start by choosing a prompt and using it with each of our 8 samplers, running it for 10, 20, 30, 40, 50 and 100 steps.

+

+Anime. `"an anime girl" -W512 -H512 -C7.5 -S3031912972`

+

+

+

+### *Sampler convergence*

+

+Immediately, you can notice results tend to converge -that is, as `-s` (step) values increase, images look more and more similar until there comes a point where the image no longer changes.

+

+You can also notice how `DDIM` and `PLMS` eventually tend to converge to K-sampler results as steps are increased.

+Among K-samplers, `K_HEUN` and `K_DPM_2` seem to require the fewest steps to converge, and even at low step counts they are good indicators of the final result. And finally, `K_DPM_2_A` and `K_EULER_A` seem to do a bit of their own thing and don't keep much similarity with the rest of the samplers.

+

+### *Batch generation speedup*

+

+This realization is very useful because it means you don't need to create a batch of 100 images (`-n100`) at `-s100` to choose your favorite 2 or 3 images.

+You can produce the same 100 images at `-s10` to `-s30` using a K-sampler (since they converge faster), get a rough idea of the final result, choose your 2 or 3 favorite ones, and then run `-s100` on those images to polish some details.

+The latter technique is 3-8x as quick.

+

+Example:

+

+At 60s per 100 steps.

+

+(Option A) 60s * 100 images = 6000s (100 images at `-s100`, manually picking 3 favorites)

+

+(Option B) 6s * 100 images + 60s * 3 images = 780s (100 images at `-s10`, manually picking 3 favorites, and running those 3 at `-s100` to polish details)

+

+The result is 1 hour and 40 minutes (Option A) vs 13 minutes (Option B).

+

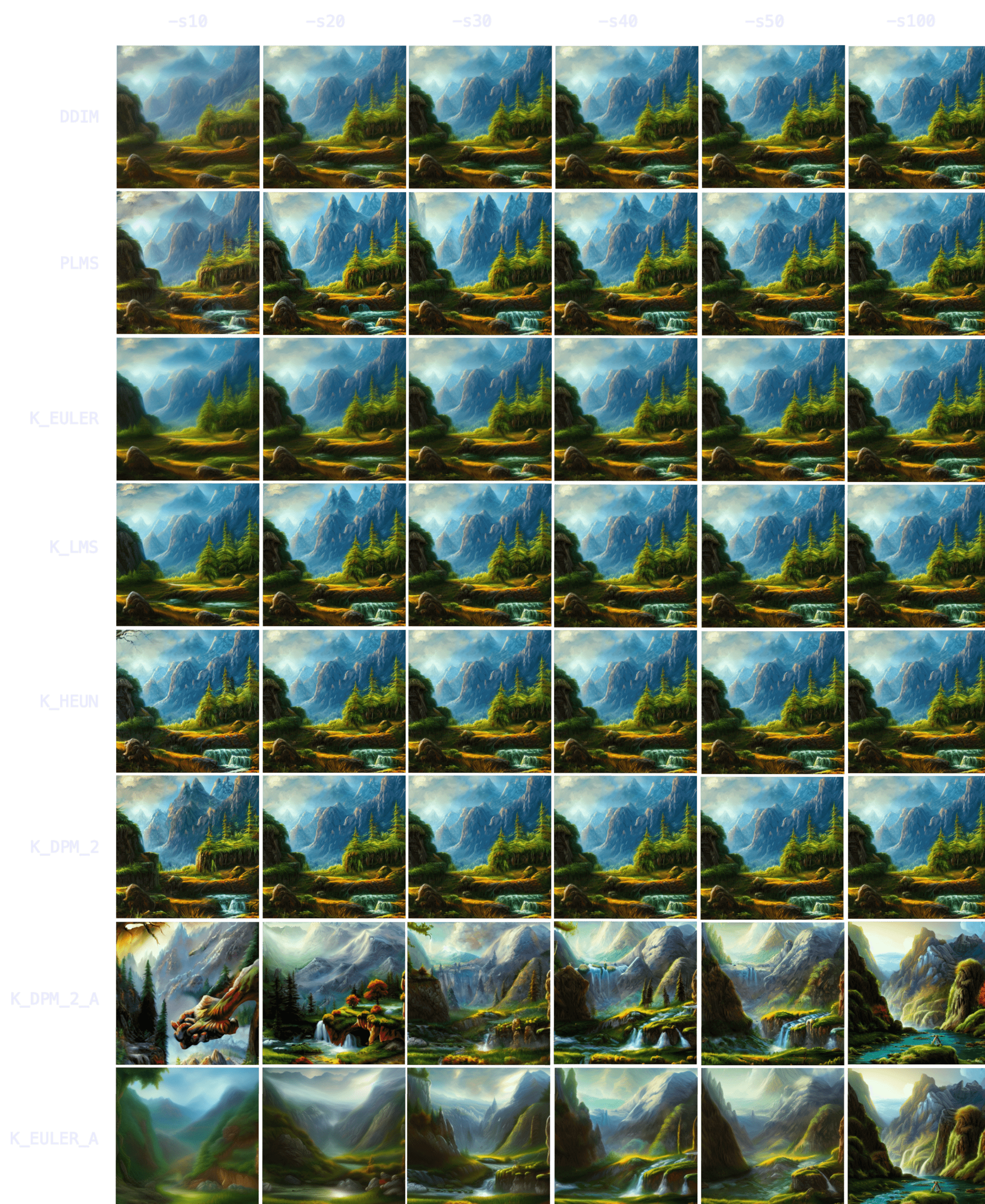

+### *Topic convergance*

+

+Now, these results seem interesting, but do they hold for other topics? How about nature? Food? People? Animals? Let's try!

+

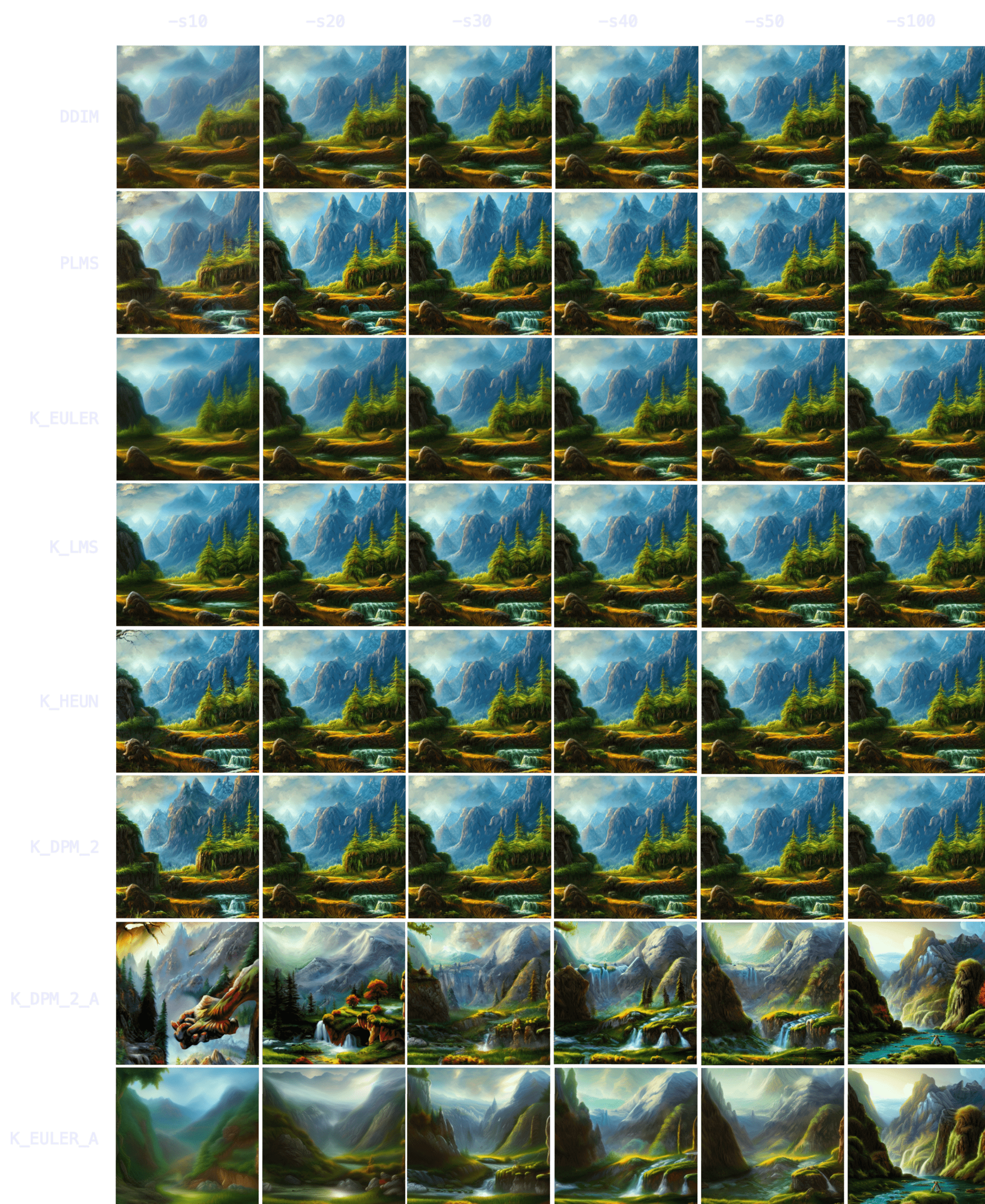

+Nature. `"valley landscape wallpaper, d&d art, fantasy, painted, 4k, high detail, sharp focus, washed colors, elaborate excellent painted illustration" -W512 -H512 -C7.5 -S1458228930`

+

+

+

+With nature, you can see how initial results are even more indicative of final result -more so than with characters/people. `K_HEUN` and `K_DPM_2` are again the quickest indicators, almost right from the start. Results also converge faster (e.g. `K_HEUN` converged at `-s21`).

+

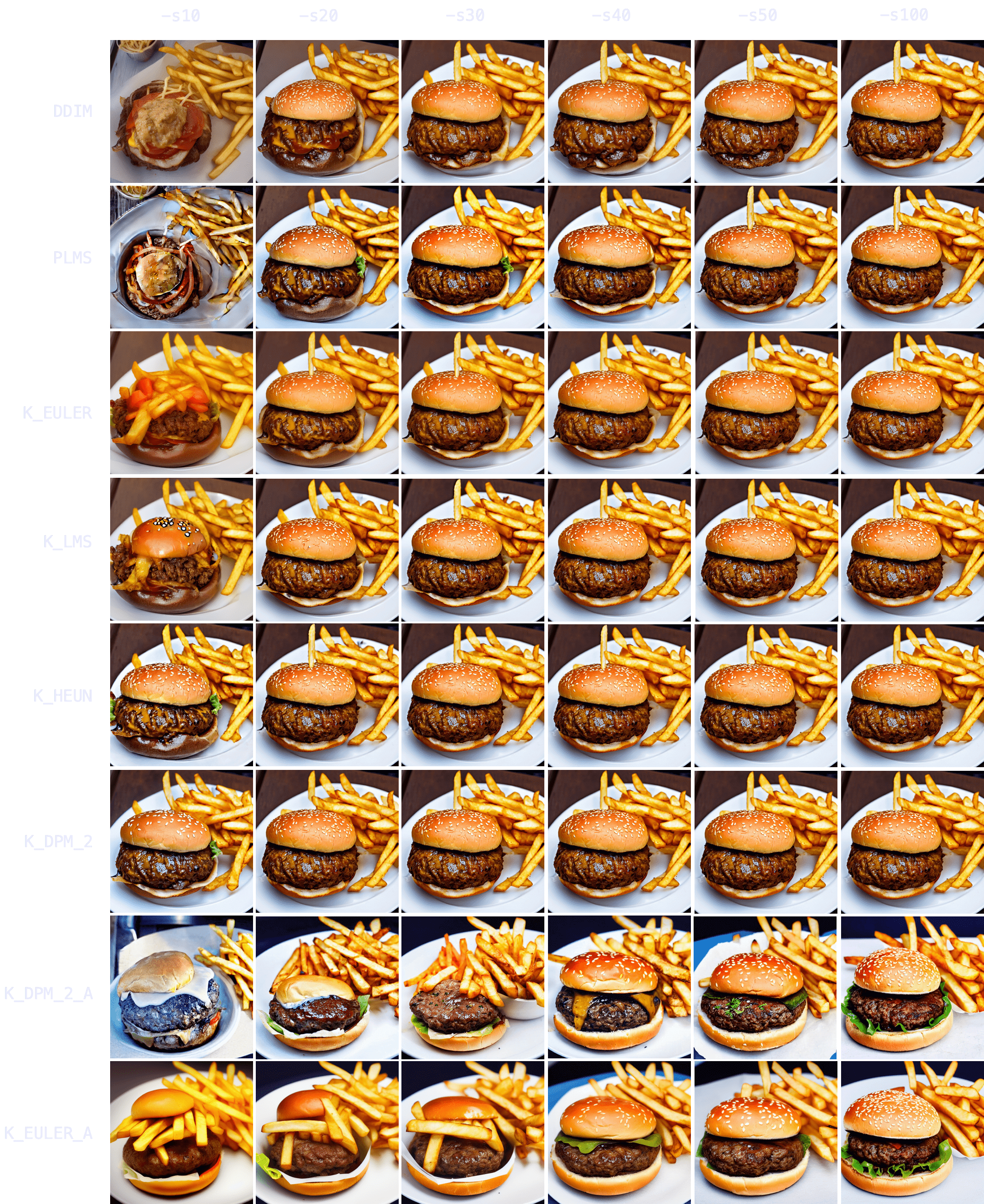

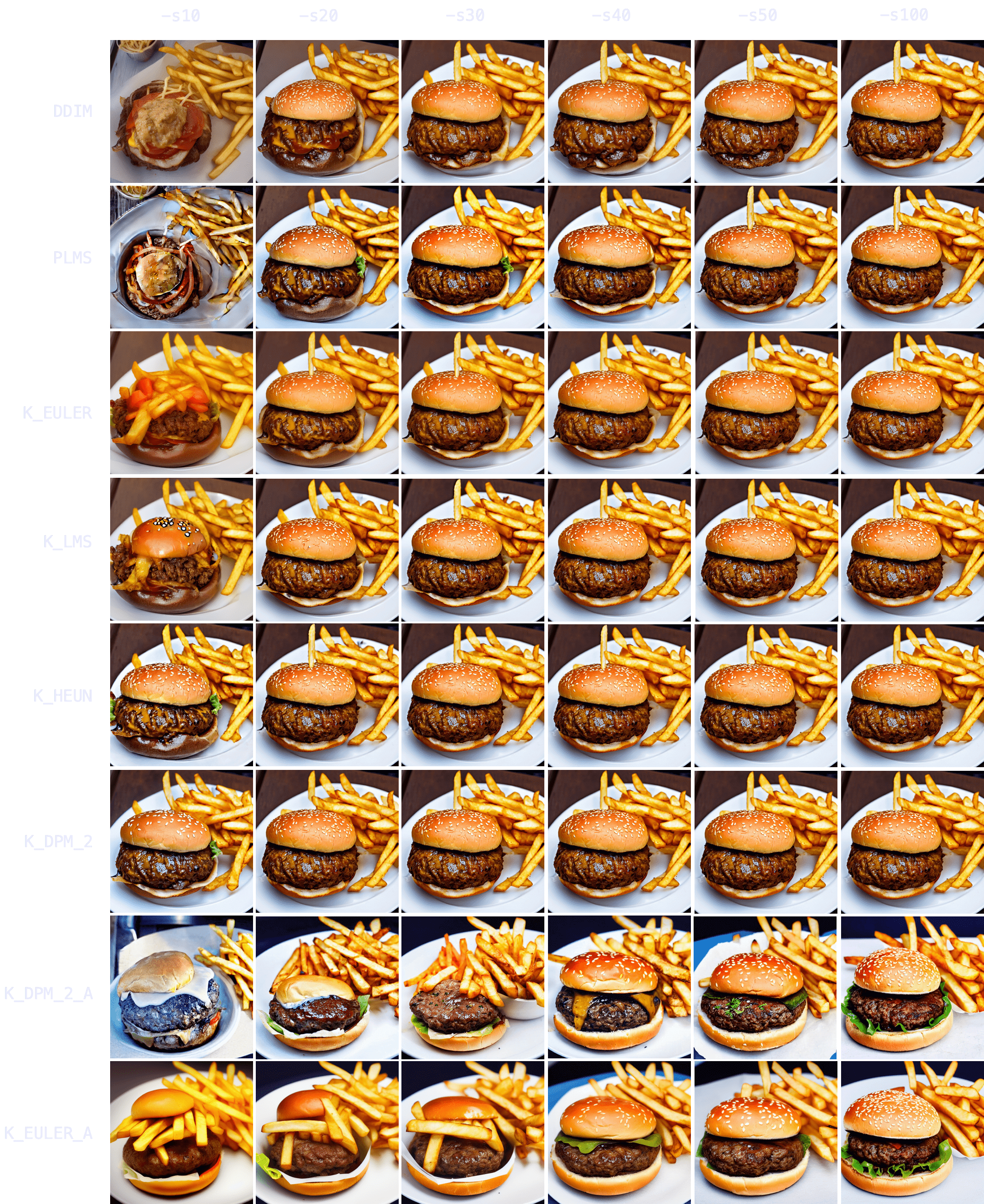

+Food. `"a hamburger with a bowl of french fries" -W512 -H512 -C7.5 -S4053222918`

+

+

+

+Again, `K_HEUN` and `K_DPM_2` take the fewest number of steps to be good indicators of the final result. `K_DPM_2_A` and `K_EULER_A` seem to incorporate a lot of creativity/variability, capable of producing rotten hamburgers, but also of adding lettuce to the mix. And they're the only samplers that produced an actual 'bowl of fries'!

+

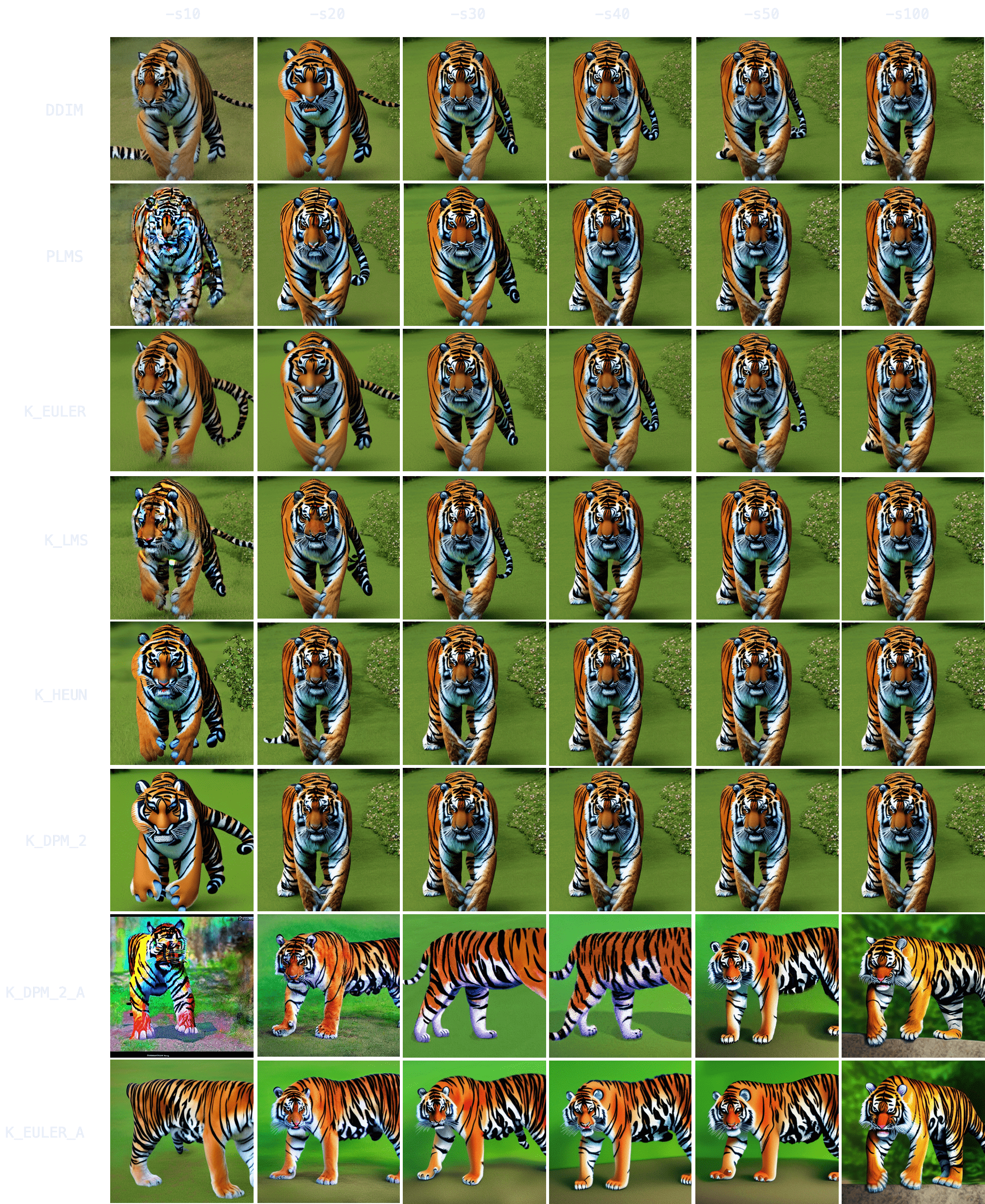

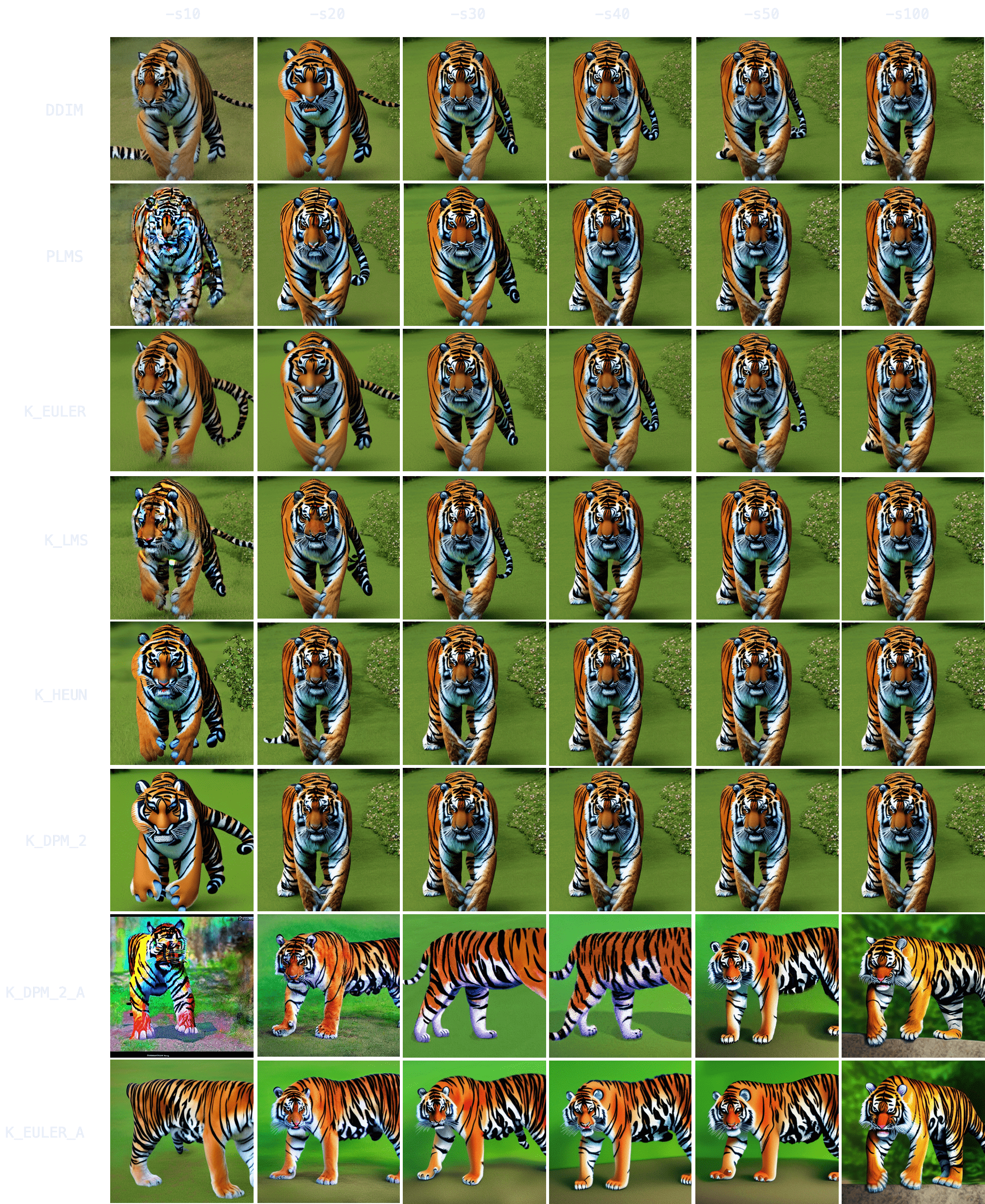

+Animals. `"grown tiger, full body" -W512 -H512 -C7.5 -S3721629802`

+

+

+

+`K_HEUN` and `K_DPM_2` once again require the least number of steps to be indicative of the final result (around `-s30`), while other samplers are still struggling with several tails or malformed back legs.

+

+It also takes longer to converge (for comparison, `K_HEUN` required around 150 steps to converge). This is normal, as producing human/animal faces/bodies is one of the things the model struggles the most with. For these topics, running for more steps will often increase coherence within the composition.

+

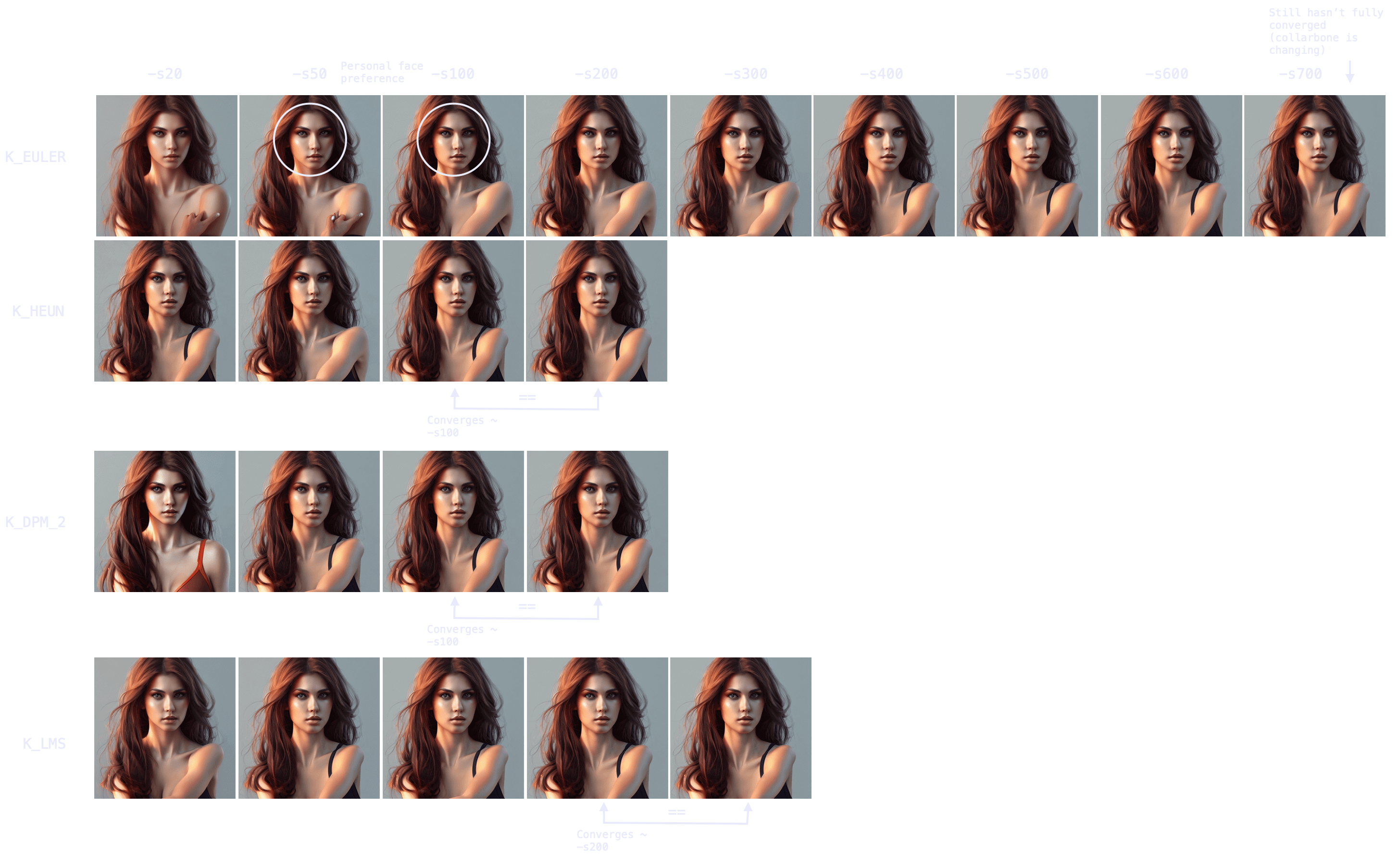

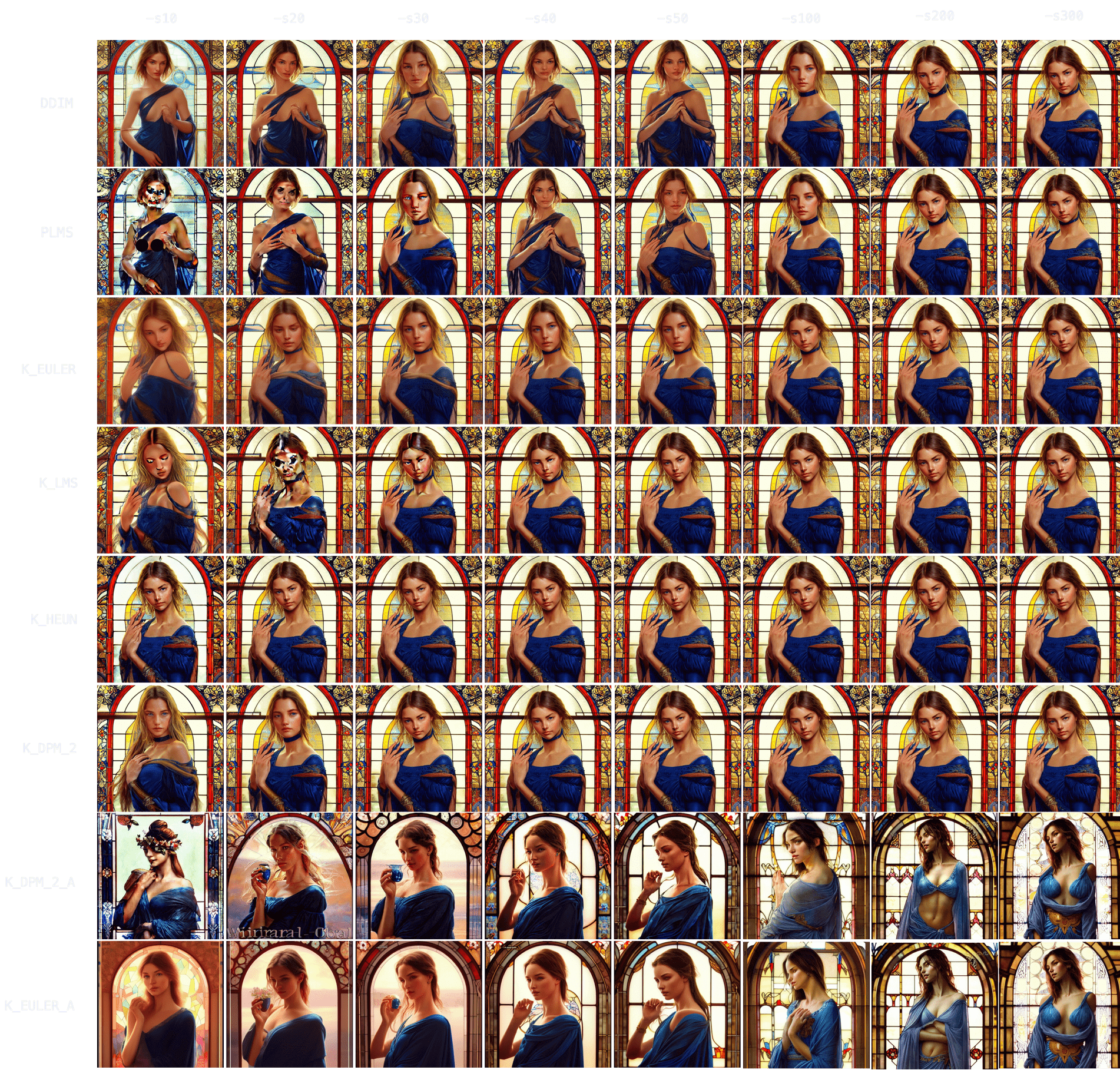

+People. `"Ultra realistic photo, (Miranda Bloom-Kerr), young, stunning model, blue eyes, blond hair, beautiful face, intricate, highly detailed, smooth, art by artgerm and greg rutkowski and alphonse mucha, stained glass" -W512 -H512 -C7.5 -S2131956332`. This time, we will go up to 300 steps.

+

+

+

+Observing the results, it again takes longer for all samplers to converge (`K_HEUN` took around 150 steps), but we can observe good indicative results much earlier (see: `K_HEUN`). Conversely, `DDIM` and `PLMS` are still undergoing moderate changes (see: lace around her neck), even at `-s300`.

+

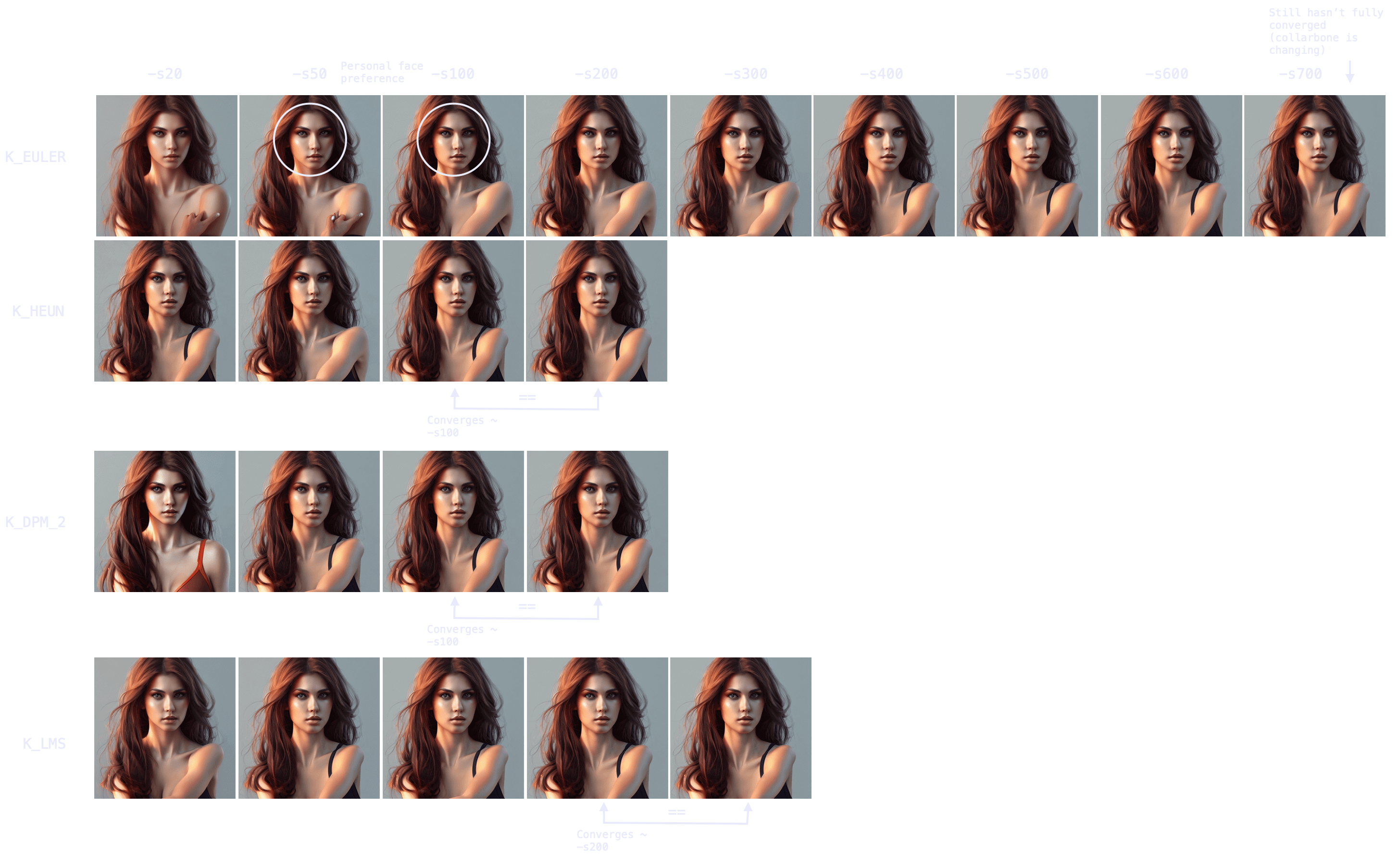

+In fact, as we can see in this other experiment, some samplers can take 700+ steps to converge when generating people.

+

+

+

+Note also the point of convergence may not be the most desirable state (e.g. I prefer an earlier version of the face, more rounded), but it will probably be the most coherent arms/hands/face attributes-wise. You can always merge different images with a photo editing tool and pass it through `img2img` to smoothen the composition.

+

+### *Sampler generation times*

+

+Once we understand the concept of sampler convergence, we must look into the performance of each sampler in terms of steps (iterations) per second, as not all samplers run at the same speed.

+

+On my M1 Max with 64GB of RAM, for a 512x512 image:

+| Sampler | (3 sample average) it/s |

+|---|---|

+| `DDIM` | 1.89 |

+| `PLMS` | 1.86 |

+| `K_EULER` | 1.86 |

+| `K_LMS` | 1.91 |

+| `K_HEUN` | 0.95 (slower) |

+| `K_DPM_2` | 0.95 (slower) |

+| `K_DPM_2_A` | 0.95 (slower) |

+| `K_EULER_A` | 1.86 |

+

+Combining our results with the steps per second of each sampler, three choices come out on top: `K_LMS`, `K_HEUN` and `K_DPM_2` (where the latter two run 0.5x as quick but tend to converge 2x as quick as `K_LMS`). For creativity and a lot of variation between iterations, `K_EULER_A` can be a good choice (which runs 2x as quick as `K_DPM_2_A`).

+

+Additionally, image generation at very low steps (≤ `-s8`) is not recommended for `K_HEUN` and `K_DPM_2`. Use `K_LMS` instead.

+

+

| Shortcut | Default | Description |

-| ----------------------------------------- | ---------- | ------- | ------------------------------------------------------------------------------------------------ |

-| `--init_mask ` | `-M` | `None` | Path to an image the same size as the initial_image, with areas for inpainting made transparent. |

+~~~

+> winpty python scripts\dream.py

+~~~

-## Command-line editing and completion

+On the Mac and Linux platforms, when you exit dream.py, the last 1000

+lines of your command-line history will be saved. When you restart

+dream.py, you can access the saved history using the up-arrow key.

-If you are on a Macintosh or Linux machine, the command-line offers convenient history tracking,

-editing, and command completion.

+In addition, limited command-line completion is installed. In various

+contexts, you can start typing your command and press tab. A list of

+potential completions will be presented to you. You can then type a

+little more, hit tab again, and eventually autocomplete what you want.

-- To scroll through previous commands and potentially edit/reuse them, use the ++up++ and ++down++

- cursor keys.

-- To edit the current command, use the ++left++ and ++right++ cursor keys to position the cursor,

- and then ++backspace++, ++delete++ or ++insert++ characters.

-- To move to the very beginning of the command, type ++ctrl+a++ (or ++command+a++ on the Mac)

-- To move to the end of the command, type ++ctrl+e++.

-- To cut a section of the command, position the cursor where you want to start cutting and type

- ++ctrl+k++.

-- To paste a cut section back in, position the cursor where you want to paste, and type ++ctrl+y++

+When specifying file paths using the one-letter shortcuts, the CLI

+will attempt to complete pathnames for you. This is most handy for the

+-I (init image) and -M (init mask) paths. To initiate completion, start

+the path with a slash ("/") or "./". For example:

-Windows users can get similar, but more limited, functionality if they launch `dream.py` with the

-"winpty" program:

-

-```batch

-winpty python scripts\dream.py

-```

-

-On the Mac and Linux platforms, when you exit `dream.py`, the last 1000 lines of your command-line

-history will be saved. When you restart `dream.py`, you can access the saved history using the

-++up++ key.

-

-In addition, limited command-line completion is installed. In various contexts, you can start typing

-your command and press tab. A list of potential completions will be presented to you. You can then

-type a little more, hit tab again, and eventually autocomplete what you want.

-

-When specifying file paths using the one-letter shortcuts, the CLI will attempt to complete

-pathnames for you. This is most handy for the `-I` (init image) and `-M` (init mask) paths. To

-initiate completion, start the path with a slash `/` or `./`, for example:

-

-```bash

-dream> "zebra with a mustache" -I./test-pictures

+~~~

+dream> zebra with a mustache -I./test-pictures

-I./test-pictures/Lincoln-and-Parrot.png -I./test-pictures/zebra.jpg -I./test-pictures/madonna.png

-I./test-pictures/bad-sketch.png -I./test-pictures/man_with_eagle/

```

diff --git a/docs/features/UPSCALE.md b/docs/features/UPSCALE.md

index f68bac987c..1d9e7e0335 100644

--- a/docs/features/UPSCALE.md

+++ b/docs/features/UPSCALE.md

@@ -4,37 +4,42 @@ title: Upscale

## Intro

-The script provides the ability to restore faces and upscale. You can apply these operations

-at the time you generate the images, or at any time to a previously-generated PNG file, using

-the [!fix](#fixing-previously-generated-images) command.

+The script provides the ability to restore faces and upscale. You can apply

+these operations at the time you generate the images, or at any time to a

+previously-generated PNG file, using the

+[!fix](#fixing-previously-generated-images) command.

## Face Fixing

-The default face restoration module is GFPGAN. The default upscale is Real-ESRGAN. For an alternative

-face restoration module, see [CodeFormer Support] below.

+The default face restoration module is GFPGAN. The default upscale is

+Real-ESRGAN. For an alternative face restoration module, see [CodeFormer

+Support] below.

-As of version 1.14, environment.yaml will install the Real-ESRGAN package into the standard install

-location for python packages, and will put GFPGAN into a subdirectory of "src" in the

-stable-diffusion directory. (The reason for this is that the standard GFPGAN distribution has a

-minor bug that adversely affects image color.) Upscaling with Real-ESRGAN should "just work" without

-further intervention. Simply pass the --upscale (-U) option on the dream> command line, or indicate

-the desired scale on the popup in the Web GUI.

+As of version 1.14, environment.yaml will install the Real-ESRGAN package into

+the standard install location for python packages, and will put GFPGAN into a

+subdirectory of "src" in the InvokeAI directory. (The reason for this is

+that the standard GFPGAN distribution has a minor bug that adversely affects

+image color.) Upscaling with Real-ESRGAN should "just work" without further

+intervention. Simply pass the --upscale (-U) option on the dream> command line,