Mirror of https://github.com/invoke-ai/InvokeAI (synced 2024-08-30 20:32:17 +00:00)

[WebUI] Model Conversion (#2616)

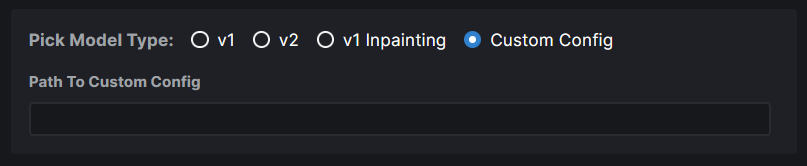

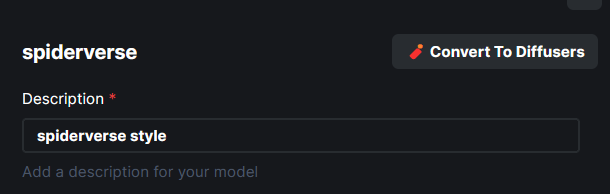

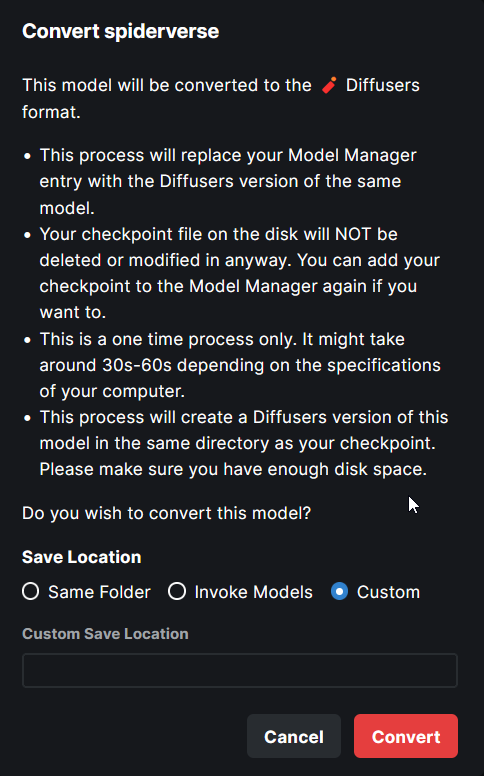

### WebUI Model Conversion

**Model Search Updates**

- Model Search now has a radio group that lets users pick the type of model they are importing. If their model has a custom config file, they can assign it right here. Based on their pick, the model config data is populated automatically, and the same information is used when converting the model to `diffusers`.
- Files named `model.safetensors` and `diffusion_pytorch_model.safetensors` are excluded from the search. These are naming conventions used by diffusers models, and because our conversion saves safetensors rather than bin files, they would otherwise show up in the list.

**Model Conversion UI**

- The **Convert To Diffusers** button can be found on the Edit page of any **Checkpoint Model**.
- The entire conversion process is handled automatically, using the config that was assigned when the checkpoint was added.
- Users can choose where to save the converted diffusers model: the same location as the ckpt, the InvokeAI models root folder, or a completely custom location.
- When the model is converted, the checkpoint entry is replaced with the diffusers model entry. Users can re-add the ckpt if they wish.

---

More or less done. Might make some minor UX improvements as I refine things.

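The search exclusion in the second Model Search bullet amounts to a filename filter. The sketch below is a minimal illustration under stated assumptions, not the model manager's actual `search_models` code (which this diff does not show): the helper name `filter_checkpoint_candidates` and the accepted extensions (`.ckpt`, `.safetensors`) are hypothetical.

```python
from pathlib import Path
from typing import Iterable, List

# Weight filenames used inside diffusers-format model folders (per the commit
# message); a checkpoint search should not list these.
DIFFUSERS_WEIGHT_NAMES = {"model.safetensors", "diffusion_pytorch_model.safetensors"}

# Assumed set of checkpoint extensions to look for (hypothetical).
CHECKPOINT_SUFFIXES = {".ckpt", ".safetensors"}


def filter_checkpoint_candidates(paths: Iterable[Path]) -> List[Path]:
    """Keep checkpoint-like files, skipping diffusers weight files."""
    found = []
    for path in paths:
        if path.suffix.lower() not in CHECKPOINT_SUFFIXES:
            continue  # not a model file at all
        if path.name in DIFFUSERS_WEIGHT_NAMES:
            continue  # belongs to a converted diffusers folder, not a checkpoint
        found.append(path)
    return found
```

Matching on the exact filename rather than the extension keeps ordinary `.safetensors` checkpoints in the results while dropping the files that a conversion writes.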
commit 7cb9d6b1a6

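The Model Search radio group populates the model config from the chosen model type. A hedged sketch of such a mapping follows: the keys echo the `v1`, `v2`, and `inpainting` locale strings added in this commit, but the `"custom"` key, the config paths, and the helper `config_for_model_type` are assumptions for illustration, not the code the UI actually runs.

```python
from typing import Optional

# Assumed config locations relative to the InvokeAI root (illustrative only).
MODEL_TYPE_CONFIGS = {
    "v1": "configs/stable-diffusion/v1-inference.yaml",
    "v2": "configs/stable-diffusion/v2-inference-v.yaml",
    "inpainting": "configs/stable-diffusion/v1-inpainting-inference.yaml",
}


def config_for_model_type(model_type: str, custom_config: Optional[str] = None) -> str:
    """Return the config path to store with a new checkpoint entry."""
    if model_type == "custom":  # hypothetical value for the "Custom Config" option
        if not custom_config:
            raise ValueError("a custom config path is required for model_type='custom'")
        return custom_config
    try:
        return MODEL_TYPE_CONFIGS[model_type]
    except KeyError:
        raise ValueError(f"unknown model type: {model_type!r}") from None
```

Because the config is stored with the checkpoint entry at import time, the later conversion step can reuse it without asking the user again.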
@@ -28,7 +28,7 @@ from ldm.invoke.args import Args, APP_ID, APP_VERSION, calculate_init_img_hash
from ldm.invoke.conditioning import get_tokens_for_prompt, get_prompt_structure
from ldm.invoke.generator.diffusers_pipeline import PipelineIntermediateState
from ldm.invoke.generator.inpaint import infill_methods
from ldm.invoke.globals import Globals
from ldm.invoke.globals import Globals, global_converted_ckpts_dir
from ldm.invoke.pngwriter import PngWriter, retrieve_metadata
from ldm.invoke.prompt_parser import split_weighted_subprompts, Blend

@@ -43,7 +43,8 @@ if not os.path.isabs(args.outdir):

# normalize the config directory relative to root
if not os.path.isabs(opt.conf):
opt.conf = os.path.normpath(os.path.join(Globals.root,opt.conf))
opt.conf = os.path.normpath(os.path.join(Globals.root, opt.conf))


class InvokeAIWebServer:
def __init__(self, generate: Generate, gfpgan, codeformer, esrgan) -> None:

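The `opt.conf` normalization above resolves a relative config path against `Globals.root` and leaves absolute paths alone. A small standalone sketch of the same rule, where `root` stands in for `Globals.root` and `normalize_against_root` is a hypothetical name:

```python
import os


def normalize_against_root(path: str, root: str) -> str:
    """Return `path` unchanged if absolute, else join it onto `root` and normalize."""
    if os.path.isabs(path):
        return path
    # normpath collapses "." and ".." segments produced by the join
    return os.path.normpath(os.path.join(root, path))
```

The conversion handler later in this diff applies the same idea with `pathlib`, prefixing `Globals.root` onto relative `weights` and `config` paths.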
@@ -189,7 +190,8 @@ class InvokeAIWebServer:
(width, height) = pil_image.size

thumbnail_path = save_thumbnail(
pil_image, os.path.basename(file_path), self.thumbnail_image_path
pil_image, os.path.basename(
file_path), self.thumbnail_image_path
)

response = {

@@ -264,14 +266,16 @@ class InvokeAIWebServer:
# location for "finished" images
self.result_path = args.outdir
# temporary path for intermediates
self.intermediate_path = os.path.join(self.result_path, "intermediates/")
self.intermediate_path = os.path.join(
self.result_path, "intermediates/")
# path for user-uploaded init images and masks
self.init_image_path = os.path.join(self.result_path, "init-images/")
self.mask_image_path = os.path.join(self.result_path, "mask-images/")
# path for temp images e.g. gallery generations which are not committed
self.temp_image_path = os.path.join(self.result_path, "temp-images/")
# path for thumbnail images
self.thumbnail_image_path = os.path.join(self.result_path, "thumbnails/")
self.thumbnail_image_path = os.path.join(
self.result_path, "thumbnails/")
# txt log
self.log_path = os.path.join(self.result_path, "invoke_log.txt")
# make all output paths

@@ -290,7 +294,7 @@ class InvokeAIWebServer:
def load_socketio_listeners(self, socketio):
@socketio.on("requestSystemConfig")
def handle_request_capabilities():
print(f">> System config requested")
print(">> System config requested")
config = self.get_system_config()
config["model_list"] = self.generate.model_manager.list_models()
config["infill_methods"] = infill_methods()

@@ -301,14 +305,16 @@ class InvokeAIWebServer:
try:
if not search_folder:
socketio.emit(
"foundModels",
{'search_folder': None, 'found_models': None},
)
"foundModels",
{'search_folder': None, 'found_models': None},
)
else:
search_folder, found_models = self.generate.model_manager.search_models(search_folder)
search_folder, found_models = self.generate.model_manager.search_models(
search_folder)
socketio.emit(
"foundModels",
{'search_folder': search_folder, 'found_models': found_models},
{'search_folder': search_folder,
'found_models': found_models},
)
except Exception as e:
self.socketio.emit("error", {"message": (str(e))})

@@ -393,6 +399,67 @@ class InvokeAIWebServer:
traceback.print_exc()
print("\n")

@socketio.on('convertToDiffusers')
def convert_to_diffusers(model_to_convert: dict):
try:
if (model_info := self.generate.model_manager.model_info(model_name=model_to_convert['model_name'])):
if 'weights' in model_info:
ckpt_path = Path(model_info['weights'])
original_config_file = Path(model_info['config'])
model_name = model_to_convert['model_name']
model_description = model_info['description']
else:
self.socketio.emit(
"error", {"message": "Model is not a valid checkpoint file"})
else:
self.socketio.emit(
"error", {"message": "Could not retrieve model info."})

if not ckpt_path.is_absolute():
ckpt_path = Path(Globals.root, ckpt_path)

if original_config_file and not original_config_file.is_absolute():
original_config_file = Path(
Globals.root, original_config_file)

diffusers_path = Path(
ckpt_path.parent.absolute(),
f'{model_name}_diffusers'
)

if model_to_convert['save_location'] == 'root':
diffusers_path = Path(global_converted_ckpts_dir(), f'{model_name}_diffusers')

if model_to_convert['save_location'] == 'custom' and model_to_convert['custom_location'] is not None:
diffusers_path = Path(model_to_convert['custom_location'], f'{model_name}_diffusers')

if diffusers_path.exists():
shutil.rmtree(diffusers_path)

self.generate.model_manager.convert_and_import(
ckpt_path,
diffusers_path,
model_name=model_name,
model_description=model_description,
vae=None,
original_config_file=original_config_file,
commit_to_conf=opt.conf,
)

new_model_list = self.generate.model_manager.list_models()
socketio.emit(
"modelConverted",
{"new_model_name": model_name,
"model_list": new_model_list, 'update': True},
)
print(f">> Model Converted: {model_name}")
except Exception as e:
self.socketio.emit("error", {"message": (str(e))})
print("\n")

traceback.print_exc()
print("\n")

@socketio.on("requestEmptyTempFolder")
def empty_temp_folder():
try:

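The path logic in `convert_to_diffusers` can be restated as a small pure function. This is a sketch under assumptions, not the handler itself: `resolve_conversion_paths` and its parameters are hypothetical, `converted_root` stands in for `global_converted_ckpts_dir()`, and any `save_location` other than `'root'` or `'custom'` falls back to the checkpoint's folder (the diff does not show the string the UI sends for "Same folder"). Unlike the excerpted handler, which emits an error for missing model info or a missing `weights` entry but then continues into code that uses `ckpt_path`, the sketch fails fast.

```python
from pathlib import Path
from typing import Optional, Tuple


def resolve_conversion_paths(
    model_info: Optional[dict],
    model_name: str,
    save_location: str,
    custom_location: Optional[str],
    root: Path,
    converted_root: Path,
) -> Tuple[Path, Path, Path]:
    """Return (ckpt_path, config_path, diffusers_path) for a conversion request."""
    # Stop early instead of continuing with undefined paths.
    if not model_info:
        raise ValueError("Could not retrieve model info.")
    if "weights" not in model_info:
        raise ValueError("Model is not a valid checkpoint file")

    # Relative paths in the model config are resolved against the InvokeAI root.
    ckpt_path = Path(model_info["weights"])
    if not ckpt_path.is_absolute():
        ckpt_path = root / ckpt_path
    config_path = Path(model_info["config"])
    if not config_path.is_absolute():
        config_path = root / config_path

    folder_name = f"{model_name}_diffusers"
    # Default: save next to the checkpoint.
    diffusers_path = ckpt_path.parent.absolute() / folder_name
    if save_location == "root":
        diffusers_path = converted_root / folder_name
    elif save_location == "custom" and custom_location is not None:
        diffusers_path = Path(custom_location) / folder_name
    return ckpt_path, config_path, diffusers_path
```

A `'custom'` request without a location quietly falls back to the checkpoint's folder, which mirrors the `is not None` guard in the handler.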
@@ -406,7 +473,8 @@ class InvokeAIWebServer:
)
os.remove(thumbnail_path)
except Exception as e:
socketio.emit("error", {"message": f"Unable to delete {f}: {str(e)}"})
socketio.emit(
"error", {"message": f"Unable to delete {f}: {str(e)}"})
pass

socketio.emit("tempFolderEmptied")

@@ -421,7 +489,8 @@ class InvokeAIWebServer:
def save_temp_image_to_gallery(url):
try:
image_path = self.get_image_path_from_url(url)
new_path = os.path.join(self.result_path, os.path.basename(image_path))
new_path = os.path.join(
self.result_path, os.path.basename(image_path))
shutil.copy2(image_path, new_path)

if os.path.splitext(new_path)[1] == ".png":

@@ -434,7 +503,8 @@ class InvokeAIWebServer:
(width, height) = pil_image.size

thumbnail_path = save_thumbnail(
pil_image, os.path.basename(new_path), self.thumbnail_image_path
pil_image, os.path.basename(
new_path), self.thumbnail_image_path
)

image_array = [

@@ -497,7 +567,8 @@ class InvokeAIWebServer:
(width, height) = pil_image.size

thumbnail_path = save_thumbnail(
pil_image, os.path.basename(path), self.thumbnail_image_path
pil_image, os.path.basename(
path), self.thumbnail_image_path
)

image_array.append(

@@ -515,7 +586,8 @@ class InvokeAIWebServer:
}
)
except Exception as e:
socketio.emit("error", {"message": f"Unable to load {path}: {str(e)}"})
socketio.emit(
"error", {"message": f"Unable to load {path}: {str(e)}"})
pass

socketio.emit(

@@ -569,7 +641,8 @@ class InvokeAIWebServer:
(width, height) = pil_image.size

thumbnail_path = save_thumbnail(
pil_image, os.path.basename(path), self.thumbnail_image_path
pil_image, os.path.basename(
path), self.thumbnail_image_path
)

image_array.append(

@@ -588,7 +661,8 @@ class InvokeAIWebServer:
)
except Exception as e:
print(f">> Unable to load {path}")
socketio.emit("error", {"message": f"Unable to load {path}: {str(e)}"})
socketio.emit(
"error", {"message": f"Unable to load {path}: {str(e)}"})
pass

socketio.emit(

@@ -626,7 +700,8 @@ class InvokeAIWebServer:
printable_parameters["init_mask"][:64] + "..."
)

print(f'\n>> Image Generation Parameters:\n\n{printable_parameters}\n')
print(
f'\n>> Image Generation Parameters:\n\n{printable_parameters}\n')
print(f'>> ESRGAN Parameters: {esrgan_parameters}')
print(f'>> Facetool Parameters: {facetool_parameters}')

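The parameter logging above cuts long base64 image fields to 64 characters plus `"..."` before printing. A sketch of that idea as a reusable helper; the name `printable_copy`, the default field list, and the choice to truncate only strings longer than the limit are assumptions, since the surrounding code is only partly shown.

```python
from typing import Dict, Iterable


def printable_copy(
    params: Dict[str, object],
    fields: Iterable[str] = ("init_img", "init_mask"),
    limit: int = 64,
) -> Dict[str, object]:
    """Return a shallow copy with long string fields cut to `limit` chars plus '...'."""
    out = dict(params)  # copy so the real generation parameters are untouched
    for key in fields:
        value = out.get(key)
        if isinstance(value, str) and len(value) > limit:
            out[key] = value[:limit] + "..."
    return out
```

Working on a copy matters here: the full data URLs are still needed for generation after the log line is printed.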
@@ -662,16 +737,18 @@ class InvokeAIWebServer:

try:
seed = original_image["metadata"]["image"]["seed"]
except (KeyError) as e:
except KeyError:
seed = "unknown_seed"
pass

if postprocessing_parameters["type"] == "esrgan":
progress.set_current_status("common:statusUpscalingESRGAN")
elif postprocessing_parameters["type"] == "gfpgan":
progress.set_current_status("common:statusRestoringFacesGFPGAN")
progress.set_current_status(
"common:statusRestoringFacesGFPGAN")
elif postprocessing_parameters["type"] == "codeformer":
progress.set_current_status("common:statusRestoringFacesCodeFormer")
progress.set_current_status(
"common:statusRestoringFacesCodeFormer")

socketio.emit("progressUpdate", progress.to_formatted_dict())
eventlet.sleep(0)

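The `if`/`elif` chain above picks a translation key for the progress status based on the postprocessing type. The same mapping can be written as a lookup table; in this sketch `status_key_for` is hypothetical and only the three keys visible in this hunk are included.

```python
from typing import Optional

# i18n status keys taken from the hunk above.
POSTPROCESSING_STATUS_KEYS = {
    "esrgan": "common:statusUpscalingESRGAN",
    "gfpgan": "common:statusRestoringFacesGFPGAN",
    "codeformer": "common:statusRestoringFacesCodeFormer",
}


def status_key_for(postprocessing_type: str) -> Optional[str]:
    """Return the i18n status key for a postprocessing type, or None if unknown."""
    return POSTPROCESSING_STATUS_KEYS.get(postprocessing_type)
```

Returning `None` for an unknown type matches the chain, which sets no status when no branch matches.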
@@ -760,7 +837,7 @@ class InvokeAIWebServer:

@socketio.on("cancel")
def handle_cancel():
print(f">> Cancel processing requested")
print(">> Cancel processing requested")
self.canceled.set()

# TODO: I think this needs a safety mechanism.

@@ -842,12 +919,10 @@ class InvokeAIWebServer:
So we need to convert each into a PIL Image.
"""

truncated_outpaint_image_b64 = generation_parameters["init_img"][:64]
truncated_outpaint_mask_b64 = generation_parameters["init_mask"][:64]

init_img_url = generation_parameters["init_img"]

original_bounding_box = generation_parameters["bounding_box"].copy()
original_bounding_box = generation_parameters["bounding_box"].copy(
)

initial_image = dataURL_to_image(
generation_parameters["init_img"]

@@ -924,7 +999,8 @@ class InvokeAIWebServer:
elif generation_parameters["generation_mode"] == "img2img":
init_img_url = generation_parameters["init_img"]
init_img_path = self.get_image_path_from_url(init_img_url)
generation_parameters["init_img"] = Image.open(init_img_path).convert('RGB')
generation_parameters["init_img"] = Image.open(
init_img_path).convert('RGB')

def image_progress(sample, step):
if self.canceled.is_set():

@@ -983,9 +1059,9 @@ class InvokeAIWebServer:
},
)


if generation_parameters["progress_latents"]:
image = self.generate.sample_to_lowres_estimated_image(sample)
image = self.generate.sample_to_lowres_estimated_image(
sample)
(width, height) = image.size
width *= 8
height *= 8

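In the progress callback, the low-resolution estimate is presumably at latent resolution, since its width and height are multiplied by 8 to report the output size; 8 is the spatial downsampling factor of the Stable Diffusion VAE. A tiny sketch of that conversion, with `latent_to_pixel_size` as a hypothetical name:

```python
from typing import Tuple

# Stable Diffusion's VAE downsamples each spatial dimension by 8.
LATENT_SCALE = 8


def latent_to_pixel_size(latent_size: Tuple[int, int], scale: int = LATENT_SCALE) -> Tuple[int, int]:
    """Convert a (width, height) in latent pixels to output image pixels."""
    width, height = latent_size
    return width * scale, height * scale
```

For example, a 64 x 64 latent corresponds to a 512 x 512 image.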
@@ -1004,7 +1080,8 @@ class InvokeAIWebServer:
},
)

self.socketio.emit("progressUpdate", progress.to_formatted_dict())
self.socketio.emit(
"progressUpdate", progress.to_formatted_dict())
eventlet.sleep(0)

def image_done(image, seed, first_seed, attention_maps_image=None):

@@ -1016,7 +1093,6 @@ class InvokeAIWebServer:
nonlocal facetool_parameters
nonlocal progress

step_index = 1
nonlocal prior_variations

"""

@@ -1032,7 +1108,8 @@ class InvokeAIWebServer:

progress.set_current_status("common:statusGenerationComplete")

self.socketio.emit("progressUpdate", progress.to_formatted_dict())
self.socketio.emit(
"progressUpdate", progress.to_formatted_dict())
eventlet.sleep(0)

all_parameters = generation_parameters

@@ -1043,7 +1120,8 @@ class InvokeAIWebServer:
and all_parameters["variation_amount"] > 0
):
first_seed = first_seed or seed
this_variation = [[seed, all_parameters["variation_amount"]]]
this_variation = [
[seed, all_parameters["variation_amount"]]]
all_parameters["with_variations"] = (
prior_variations + this_variation
)

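When `variation_amount` is positive, the hunk above records the current seed and amount as a new `[seed, amount]` pair after `prior_variations`, and falls back to `seed` when `first_seed` is unset. A standalone sketch of that bookkeeping; the function name and the returned tuple are assumptions, and the start of the enclosing `if` condition is elided in this hunk.

```python
from typing import List, Optional, Tuple


def record_variation(
    seed: int,
    first_seed: Optional[int],
    variation_amount: float,
    prior_variations: List[list],
) -> Tuple[int, List[list]]:
    """Return (first_seed, with_variations) for one generated image."""
    first_seed = first_seed or seed
    this_variation = [[seed, variation_amount]]
    # Concatenation builds a new list, so prior_variations is not mutated.
    return first_seed, prior_variations + this_variation
```

Because of `or`, a `first_seed` of `0` is treated the same as `None` and replaced by `seed`; the original expression behaves the same way.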
@@ -1059,7 +1137,8 @@ class InvokeAIWebServer:
if esrgan_parameters:
progress.set_current_status("common:statusUpscaling")
progress.set_current_status_has_steps(False)
self.socketio.emit("progressUpdate", progress.to_formatted_dict())
self.socketio.emit(
"progressUpdate", progress.to_formatted_dict())
eventlet.sleep(0)

image = self.esrgan.process(

@@ -1082,12 +1161,15 @@ class InvokeAIWebServer:

if facetool_parameters:
if facetool_parameters["type"] == "gfpgan":
progress.set_current_status("common:statusRestoringFacesGFPGAN")
progress.set_current_status(
"common:statusRestoringFacesGFPGAN")
elif facetool_parameters["type"] == "codeformer":
progress.set_current_status("common:statusRestoringFacesCodeFormer")
progress.set_current_status(
"common:statusRestoringFacesCodeFormer")

progress.set_current_status_has_steps(False)
self.socketio.emit("progressUpdate", progress.to_formatted_dict())
self.socketio.emit(
"progressUpdate", progress.to_formatted_dict())
eventlet.sleep(0)

if facetool_parameters["type"] == "gfpgan":

@@ -1117,7 +1199,8 @@ class InvokeAIWebServer:
all_parameters["facetool_type"] = facetool_parameters["type"]

progress.set_current_status("common:statusSavingImage")
self.socketio.emit("progressUpdate", progress.to_formatted_dict())
self.socketio.emit(
"progressUpdate", progress.to_formatted_dict())
eventlet.sleep(0)

# restore the stashed URLS and discard the paths, we are about to send the result to client

@@ -1128,12 +1211,14 @@ class InvokeAIWebServer:
)

if "init_mask" in all_parameters:
all_parameters["init_mask"] = "" # TODO: store the mask in metadata
# TODO: store the mask in metadata
all_parameters["init_mask"] = ""

if generation_parameters["generation_mode"] == "unifiedCanvas":
all_parameters["bounding_box"] = original_bounding_box

metadata = self.parameters_to_generated_image_metadata(all_parameters)
metadata = self.parameters_to_generated_image_metadata(
all_parameters)

command = parameters_to_command(all_parameters)

@@ -1163,15 +1248,18 @@ class InvokeAIWebServer:

if progress.total_iterations > progress.current_iteration:
progress.set_current_step(1)
progress.set_current_status("common:statusIterationComplete")
progress.set_current_status(
"common:statusIterationComplete")
progress.set_current_status_has_steps(False)
else:
progress.mark_complete()

self.socketio.emit("progressUpdate", progress.to_formatted_dict())
self.socketio.emit(
"progressUpdate", progress.to_formatted_dict())
eventlet.sleep(0)

parsed_prompt, _ = get_prompt_structure(generation_parameters["prompt"])
parsed_prompt, _ = get_prompt_structure(
generation_parameters["prompt"])
tokens = None if type(parsed_prompt) is Blend else \
get_tokens_for_prompt(self.generate.model, parsed_prompt)
attention_maps_image_base64_url = None if attention_maps_image is None \

@@ -1345,7 +1433,8 @@ class InvokeAIWebServer:
self, parameters, original_image_path
):
try:
current_metadata = retrieve_metadata(original_image_path)["sd-metadata"]
current_metadata = retrieve_metadata(
original_image_path)["sd-metadata"]
postprocessing_metadata = {}

"""

@@ -1385,7 +1474,8 @@ class InvokeAIWebServer:
postprocessing_metadata
)
else:
current_metadata["image"]["postprocessing"] = [postprocessing_metadata]
current_metadata["image"]["postprocessing"] = [
postprocessing_metadata]

return current_metadata

@@ -1424,7 +1514,7 @@ class InvokeAIWebServer:
if step_index:
filename += f".{step_index}"
if postprocessing:
filename += f".postprocessed"
filename += ".postprocessed"

filename += ".png"

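The filename suffixes above are appended in a fixed order: an optional step index, an optional `.postprocessed` marker, then `.png`. This hunk also drops the `f` prefix from `f".postprocessed"`, since that string has no placeholders. A sketch of the suffix logic only; `base` and `build_image_filename` are hypothetical, because the part of the method that builds the filename prefix is not shown.

```python
from typing import Optional


def build_image_filename(base: str, step_index: Optional[int] = None, postprocessing: bool = False) -> str:
    """Append the step index, postprocessing marker and extension to `base`."""
    filename = base
    if step_index:  # falsy values (None or 0) add no step suffix
        filename += f".{step_index}"
    if postprocessing:
        filename += ".postprocessed"  # plain string: nothing to interpolate
    filename += ".png"
    return filename
```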
@@ -1497,7 +1587,8 @@ class InvokeAIWebServer:
)
elif "thumbnails" in url:
return os.path.abspath(
os.path.join(self.thumbnail_image_path, os.path.basename(url))
os.path.join(self.thumbnail_image_path,
os.path.basename(url))
)
else:
return os.path.abspath(

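The `thumbnails` branch of `get_image_path_from_url` maps a URL to a file on disk by keeping only the URL's basename and joining it onto a known folder. A sketch of that branch plus a single fallback; the folder arguments, the fallback itself, and the name `thumbnail_path_from_url` are assumptions, since the other branches are elided in this hunk.

```python
import os


def thumbnail_path_from_url(url: str, thumbnail_dir: str, fallback_dir: str) -> str:
    """Resolve an image URL to an absolute path using only its basename."""
    # basename() discards every directory component of the URL, so
    # "../" segments cannot move the result outside the target folder.
    name = os.path.basename(url)
    if "thumbnails" in url:
        return os.path.abspath(os.path.join(thumbnail_dir, name))
    return os.path.abspath(os.path.join(fallback_dir, name))
```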
@@ -1666,10 +1757,12 @@ def dataURL_to_image(dataURL: str) -> ImageType:
)
return image


"""
Converts an image into a base64 image dataURL.
"""


def image_to_dataURL(image: ImageType) -> str:
buffered = io.BytesIO()
image.save(buffered, format="PNG")

@@ -1679,7 +1772,6 @@ def image_to_dataURL(image: ImageType) -> str:
return image_base64



"""
Converts a base64 image dataURL into bytes.
The dataURL is split on the first commma.

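`image_to_dataURL` and the dataURL-to-bytes helper round-trip PNG data through a base64 `data:` URL, and the docstring notes that the URL is split on the first comma to separate the header from the payload. A stdlib-only sketch working on raw bytes rather than PIL images; the function names are hypothetical, and the `data:image/png;base64,` prefix is the standard data-URL form, assumed to match what the server produces.

```python
import base64


def bytes_to_data_url(data: bytes, mime: str = "image/png") -> str:
    """Encode raw bytes as a base64 data URL."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def data_url_to_bytes(data_url: str) -> bytes:
    """Decode a base64 data URL, splitting on the first comma only."""
    header, payload = data_url.split(",", 1)
    if not header.endswith(";base64"):
        raise ValueError("expected a base64-encoded data URL")
    return base64.b64decode(payload)
```

Splitting with `maxsplit=1` guarantees exactly two parts even if a later character were a comma; base64 itself never contains one.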
638 invokeai/frontend/dist/assets/index-6adf40ae.js (vendored)

File diff suppressed because one or more lines are too long

638 invokeai/frontend/dist/assets/index-6b9f1e33.js (vendored, normal file)

File diff suppressed because one or more lines are too long

2 invokeai/frontend/dist/index.html (vendored)

@@ -5,7 +5,7 @@
<meta name="viewport" content="width=device-width, initial-scale=1.0" />
<title>InvokeAI - A Stable Diffusion Toolkit</title>
<link rel="shortcut icon" type="icon" href="./assets/favicon-0d253ced.ico" />
<script type="module" crossorigin src="./assets/index-6adf40ae.js"></script>
<script type="module" crossorigin src="./assets/index-6b9f1e33.js"></script>
<link rel="stylesheet" href="./assets/index-fecb6dd4.css">
</head>

@@ -58,5 +58,7 @@
"statusUpscaling": "Upscaling",
"statusUpscalingESRGAN": "Upscaling (ESRGAN)",
"statusLoadingModel": "Loading Model",
"statusModelChanged": "Model Changed"
"statusModelChanged": "Model Changed",
"statusConvertingModel": "Converting Model",
"statusModelConverted": "Model Converted"
}

@@ -63,5 +63,23 @@
"formMessageDiffusersModelLocation": "Diffusers Model Location",
"formMessageDiffusersModelLocationDesc": "Please enter at least one.",
"formMessageDiffusersVAELocation": "VAE Location",
"formMessageDiffusersVAELocationDesc": "If not provided, InvokeAI will look for the VAE file inside the model location given above."
"formMessageDiffusersVAELocationDesc": "If not provided, InvokeAI will look for the VAE file inside the model location given above.",
"convert": "Convert",
"convertToDiffusers": "Convert To Diffusers",
"convertToDiffusersHelpText1": "This model will be converted to the 🧨 Diffusers format.",
"convertToDiffusersHelpText2": "This process will replace your Model Manager entry with the Diffusers version of the same model.",
"convertToDiffusersHelpText3": "Your checkpoint file on the disk will NOT be deleted or modified in anyway. You can add your checkpoint to the Model Manager again if you want to.",
"convertToDiffusersHelpText4": "This is a one time process only. It might take around 30s-60s depending on the specifications of your computer.",
"convertToDiffusersHelpText5": "Please make sure you have enough disk space. Models generally vary between 4GB-7GB in size.",
"convertToDiffusersHelpText6": "Do you wish to convert this model?",
"v1": "v1",
"v2": "v2",
"inpainting": "v1 Inpainting",
"customConfig": "Custom Config",
"pathToCustomConfig": "Path To Custom Config",
"statusConverting": "Converting",
"sameFolder": "Same Folder",
"invokeRoot": "Invoke Models",
"custom": "Custom",
"customSaveLocation": "Custom Save Location"
}

@@ -63,5 +63,25 @@
"formMessageDiffusersModelLocation": "Diffusers Model Location",
"formMessageDiffusersModelLocationDesc": "Please enter at least one.",
"formMessageDiffusersVAELocation": "VAE Location",
"formMessageDiffusersVAELocationDesc": "If not provided, InvokeAI will look for the VAE file inside the model location given above."
"formMessageDiffusersVAELocationDesc": "If not provided, InvokeAI will look for the VAE file inside the model location given above.",
"convert": "Convert",
"convertToDiffusers": "Convert To Diffusers",
"convertToDiffusersHelpText1": "This model will be converted to the 🧨 Diffusers format.",
"convertToDiffusersHelpText2": "This process will replace your Model Manager entry with the Diffusers version of the same model.",
"convertToDiffusersHelpText3": "Your checkpoint file on the disk will NOT be deleted or modified in anyway. You can add your checkpoint to the Model Manager again if you want to.",
"convertToDiffusersHelpText4": "This is a one time process only. It might take around 30s-60s depending on the specifications of your computer.",
"convertToDiffusersHelpText5": "Please make sure you have enough disk space. Models generally vary between 4GB-7GB in size.",
"convertToDiffusersHelpText6": "Do you wish to convert this model?",
"convertToDiffusersSaveLocation": "Save Location",
"v1": "v1",
"v2": "v2",
"inpainting": "v1 Inpainting",
"customConfig": "Custom Config",
"pathToCustomConfig": "Path To Custom Config",
"statusConverting": "Converting",
"modelConverted": "Model Converted",
"sameFolder": "Same folder",
"invokeRoot": "InvokeAI folder",
"custom": "Custom",
"customSaveLocation": "Custom Save Location"
}

@@ -58,5 +58,7 @@
"statusUpscaling": "Upscaling",
"statusUpscalingESRGAN": "Upscaling (ESRGAN)",
"statusLoadingModel": "Loading Model",
"statusModelChanged": "Model Changed"
"statusModelChanged": "Model Changed",
"statusConvertingModel": "Converting Model",
"statusModelConverted": "Model Converted"
}

@@ -63,5 +63,23 @@
"formMessageDiffusersModelLocation": "Diffusers Model Location",
"formMessageDiffusersModelLocationDesc": "Please enter at least one.",
"formMessageDiffusersVAELocation": "VAE Location",
"formMessageDiffusersVAELocationDesc": "If not provided, InvokeAI will look for the VAE file inside the model location given above."
"formMessageDiffusersVAELocationDesc": "If not provided, InvokeAI will look for the VAE file inside the model location given above.",
"convert": "Convert",
"convertToDiffusers": "Convert To Diffusers",
"convertToDiffusersHelpText1": "This model will be converted to the 🧨 Diffusers format.",
"convertToDiffusersHelpText2": "This process will replace your Model Manager entry with the Diffusers version of the same model.",
"convertToDiffusersHelpText3": "Your checkpoint file on the disk will NOT be deleted or modified in anyway. You can add your checkpoint to the Model Manager again if you want to.",
"convertToDiffusersHelpText4": "This is a one time process only. It might take around 30s-60s depending on the specifications of your computer.",
"convertToDiffusersHelpText5": "Please make sure you have enough disk space. Models generally vary between 4GB-7GB in size.",
"convertToDiffusersHelpText6": "Do you wish to convert this model?",
"v1": "v1",
"v2": "v2",
"inpainting": "v1 Inpainting",
"customConfig": "Custom Config",
"pathToCustomConfig": "Path To Custom Config",
"statusConverting": "Converting",
"sameFolder": "Same Folder",
"invokeRoot": "Invoke Models",
"custom": "Custom",
"customSaveLocation": "Custom Save Location"
}

@@ -63,5 +63,25 @@
"formMessageDiffusersModelLocation": "Diffusers Model Location",
"formMessageDiffusersModelLocationDesc": "Please enter at least one.",
"formMessageDiffusersVAELocation": "VAE Location",
"formMessageDiffusersVAELocationDesc": "If not provided, InvokeAI will look for the VAE file inside the model location given above."
"formMessageDiffusersVAELocationDesc": "If not provided, InvokeAI will look for the VAE file inside the model location given above.",
"convert": "Convert",
"convertToDiffusers": "Convert To Diffusers",
"convertToDiffusersHelpText1": "This model will be converted to the 🧨 Diffusers format.",
"convertToDiffusersHelpText2": "This process will replace your Model Manager entry with the Diffusers version of the same model.",
"convertToDiffusersHelpText3": "Your checkpoint file on the disk will NOT be deleted or modified in anyway. You can add your checkpoint to the Model Manager again if you want to.",
"convertToDiffusersHelpText4": "This is a one time process only. It might take around 30s-60s depending on the specifications of your computer.",
"convertToDiffusersHelpText5": "Please make sure you have enough disk space. Models generally vary between 4GB-7GB in size.",
"convertToDiffusersHelpText6": "Do you wish to convert this model?",
"convertToDiffusersSaveLocation": "Save Location",
"v1": "v1",
"v2": "v2",
"inpainting": "v1 Inpainting",
"customConfig": "Custom Config",
"pathToCustomConfig": "Path To Custom Config",
"statusConverting": "Converting",
"modelConverted": "Model Converted",
"sameFolder": "Same folder",
"invokeRoot": "InvokeAI folder",
"custom": "Custom",
"customSaveLocation": "Custom Save Location"
}

11 invokeai/frontend/src/app/invokeai.d.ts (vendored)

@@ -219,6 +219,12 @@ export declare type InvokeDiffusersModelConfigProps = {
};
};

export declare type InvokeModelConversionProps = {
model_name: string;
save_location: string;
custom_location: string | null;
};

/**
* These types type data received from the server via socketio.
*/

@@ -228,6 +234,11 @@ export declare type ModelChangeResponse = {
model_list: ModelList;
};

export declare type ModelConvertedResponse = {
converted_model_name: string;
model_list: ModelList;
};

export declare type ModelAddedResponse = {
new_model_name: string;
model_list: ModelList;

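`ModelConvertedResponse` declares `converted_model_name`, while the backend handler in this diff emits `modelConverted` with a `new_model_name` key, so, as excerpted, `onModelConverted` would read `undefined` for the model name. A hedged sketch of a backend-side payload whose keys match the TypeScript type; `model_converted_payload` is hypothetical, and typing `model_list` as a plain dict is an assumption about what `list_models()` returns.

```python
from typing import Any, Dict, TypedDict


class ModelConvertedResponse(TypedDict):
    """Python mirror of the TypeScript ModelConvertedResponse type."""

    converted_model_name: str
    # Assumed shape: model name -> model info dict (as from list_models()).
    model_list: Dict[str, Any]


def model_converted_payload(model_name: str, model_list: Dict[str, Any]) -> ModelConvertedResponse:
    """Build a 'modelConverted' payload whose keys match the frontend type."""
    return {"converted_model_name": model_name, "model_list": model_list}
```

The handler could then emit `socketio.emit("modelConverted", model_converted_payload(model_name, new_model_list))`. The alternative fix is on the frontend side: destructure `new_model_name` instead, as the listener that sets the `modelAdded` status already does.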
@@ -38,6 +38,11 @@ export const addNewModel = createAction<

export const deleteModel = createAction<string>('socketio/deleteModel');

export const convertToDiffusers =
createAction<InvokeAI.InvokeModelConversionProps>(
'socketio/convertToDiffusers'
);

export const requestModelChange = createAction<string>(
'socketio/requestModelChange'
);

@@ -15,6 +15,7 @@ import {
addLogEntry,
generationRequested,
modelChangeRequested,
modelConvertRequested,
setIsProcessing,
} from 'features/system/store/systemSlice';
import { InvokeTabName } from 'features/ui/store/tabMap';

@@ -178,6 +179,12 @@ const makeSocketIOEmitters = (
emitDeleteModel: (modelName: string) => {
socketio.emit('deleteModel', modelName);
},
emitConvertToDiffusers: (
modelToConvert: InvokeAI.InvokeModelConversionProps
) => {
dispatch(modelConvertRequested());
socketio.emit('convertToDiffusers', modelToConvert);
},
emitRequestModelChange: (modelName: string) => {
dispatch(modelChangeRequested());
socketio.emit('requestModelChange', modelName);

```diff
@@ -365,6 +365,7 @@ const makeSocketIOListeners = (
       const { new_model_name, model_list, update } = data;
       dispatch(setModelList(model_list));
       dispatch(setIsProcessing(false));
+      dispatch(setCurrentStatus(i18n.t('modelmanager:modelAdded')));
       dispatch(
         addLogEntry({
           timestamp: dateFormat(new Date(), 'isoDateTime'),
@@ -407,6 +408,30 @@ const makeSocketIOListeners = (
         })
       );
     },
+    onModelConverted: (data: InvokeAI.ModelConvertedResponse) => {
+      const { converted_model_name, model_list } = data;
+      dispatch(setModelList(model_list));
+      dispatch(setCurrentStatus(i18n.t('common:statusModelConverted')));
+      dispatch(setIsProcessing(false));
+      dispatch(setIsCancelable(true));
+      dispatch(
+        addLogEntry({
+          timestamp: dateFormat(new Date(), 'isoDateTime'),
+          message: `Model converted: ${converted_model_name}`,
+          level: 'info',
+        })
+      );
+      dispatch(
+        addToast({
+          title: `${i18n.t(
+            'modelmanager:modelConverted'
+          )}: ${converted_model_name}`,
+          status: 'success',
+          duration: 2500,
+          isClosable: true,
+        })
+      );
+    },
     onModelChanged: (data: InvokeAI.ModelChangeResponse) => {
       const { model_name, model_list } = data;
       dispatch(setModelList(model_list));
```

```diff
@@ -48,6 +48,7 @@ export const socketioMiddleware = () => {
       onFoundModels,
       onNewModelAdded,
       onModelDeleted,
+      onModelConverted,
       onModelChangeFailed,
       onTempFolderEmptied,
     } = makeSocketIOListeners(store);
@@ -64,6 +65,7 @@ export const socketioMiddleware = () => {
       emitSearchForModels,
       emitAddNewModel,
       emitDeleteModel,
+      emitConvertToDiffusers,
       emitRequestModelChange,
       emitSaveStagingAreaImageToGallery,
       emitRequestEmptyTempFolder,
@@ -125,6 +127,10 @@ export const socketioMiddleware = () => {
         onModelDeleted(data);
       });
 
+      socketio.on('modelConverted', (data: InvokeAI.ModelConvertedResponse) => {
+        onModelConverted(data);
+      });
+
       socketio.on('modelChanged', (data: InvokeAI.ModelChangeResponse) => {
         onModelChanged(data);
       });
@@ -199,6 +205,11 @@ export const socketioMiddleware = () => {
         break;
       }
 
+      case 'socketio/convertToDiffusers': {
+        emitConvertToDiffusers(action.payload);
+        break;
+      }
+
       case 'socketio/requestModelChange': {
         emitRequestModelChange(action.payload);
         break;
```
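The new `case 'socketio/convertToDiffusers'` is where the Redux action from `actions.ts` is turned into a socket event. Below is a self-contained sketch of that routing step. It uses minimal hand-written stand-ins for the action and socket types (not the Redux Toolkit or socket.io typings) and assumes the middleware forwards every action to `next` afterwards, as Redux middleware normally does; `routeAction`, `AnyAction` and `EmittingSocket` are hypothetical names. In the commit, `emitConvertToDiffusers` additionally dispatches `modelConvertRequested()` before emitting, which this sketch leaves out.

```typescript
// Hand-written stand-ins for a Redux action and a socket.io client; hypothetical.
interface AnyAction {
  type: string;
  payload?: unknown;
}

interface EmittingSocket {
  emit(event: string, ...args: unknown[]): void;
}

// Sketch of the middleware's switch: 'socketio/*' actions become socket
// events, and every action is then handed on to the next middleware.
function routeAction(
  action: AnyAction,
  socket: EmittingSocket,
  next: (action: AnyAction) => void
): void {
  switch (action.type) {
    case 'socketio/convertToDiffusers': {
      socket.emit('convertToDiffusers', action.payload);
      break;
    }
    case 'socketio/requestModelChange': {
      socket.emit('requestModelChange', action.payload);
      break;
    }
    default:
      // Not a socket action: nothing to emit.
      break;
  }
  next(action);
}
```

Keeping the routing in a plain function like this makes it testable with a fake socket that simply records the emitted events.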

```diff
@@ -27,6 +27,7 @@ import type { InvokeModelConfigProps } from 'app/invokeai';
 import type { RootState } from 'app/store';
 import type { FieldInputProps, FormikProps } from 'formik';
 import { isEqual, pickBy } from 'lodash';
+import ModelConvert from './ModelConvert';
 
 const selector = createSelector(
   [systemSelector],
@@ -101,10 +102,11 @@ export default function CheckpointModelEdit() {
 
   return openModel ? (
     <Flex flexDirection="column" rowGap="1rem" width="100%">
-      <Flex alignItems="center">
+      <Flex alignItems="center" gap={4} justifyContent="space-between">
         <Text fontSize="lg" fontWeight="bold">
           {openModel}
         </Text>
+        <ModelConvert model={openModel} />
       </Flex>
       <Flex
         flexDirection="column"
```

```diff
@@ -0,0 +1,148 @@
+import {
+  Flex,
+  ListItem,
+  Radio,
+  RadioGroup,
+  Text,
+  UnorderedList,
+  Tooltip,
+} from '@chakra-ui/react';
+import { convertToDiffusers } from 'app/socketio/actions';
+import { RootState } from 'app/store';
+import { useAppDispatch, useAppSelector } from 'app/storeHooks';
+import IAIAlertDialog from 'common/components/IAIAlertDialog';
+import IAIButton from 'common/components/IAIButton';
+import IAIInput from 'common/components/IAIInput';
+import { useState, useEffect } from 'react';
+import { useTranslation } from 'react-i18next';
+
+interface ModelConvertProps {
+  model: string;
+}
+
+export default function ModelConvert(props: ModelConvertProps) {
+  const { model } = props;
+
+  const model_list = useAppSelector(
+    (state: RootState) => state.system.model_list
+  );
+
+  const retrievedModel = model_list[model];
+
+  const dispatch = useAppDispatch();
+  const { t } = useTranslation();
+
+  const isProcessing = useAppSelector(
+    (state: RootState) => state.system.isProcessing
+  );
+
+  const isConnected = useAppSelector(
+    (state: RootState) => state.system.isConnected
+  );
+
+  const [saveLocation, setSaveLocation] = useState<string>('same');
+  const [customSaveLocation, setCustomSaveLocation] = useState<string>('');
+
+  useEffect(() => {
+    setSaveLocation('same');
+  }, [model]);
+
+  const modelConvertCancelHandler = () => {
+    setSaveLocation('same');
+  };
+
+  const modelConvertHandler = () => {
+    const modelToConvert = {
+      model_name: model,
+      save_location: saveLocation,
+      custom_location:
+        saveLocation === 'custom' && customSaveLocation !== ''
+          ? customSaveLocation
+          : null,
+    };
+    dispatch(convertToDiffusers(modelToConvert));
+  };
+
+  return (
+    <IAIAlertDialog
+      title={`${t('modelmanager:convert')} ${model}`}
+      acceptCallback={modelConvertHandler}
+      cancelCallback={modelConvertCancelHandler}
+      acceptButtonText={`${t('modelmanager:convert')}`}
+      triggerComponent={
+        <IAIButton
+          size={'sm'}
+          aria-label={t('modelmanager:convertToDiffusers')}
+          isDisabled={
+            retrievedModel.status === 'active' || isProcessing || !isConnected
+          }
+          className=" modal-close-btn"
+          marginRight="2rem"
+        >
+          🧨 {t('modelmanager:convertToDiffusers')}
+        </IAIButton>
+      }
+      motionPreset="slideInBottom"
+    >
+      <Flex flexDirection="column" rowGap={4}>
+        <Text>{t('modelmanager:convertToDiffusersHelpText1')}</Text>
+        <UnorderedList>
+          <ListItem>{t('modelmanager:convertToDiffusersHelpText2')}</ListItem>
+          <ListItem>{t('modelmanager:convertToDiffusersHelpText3')}</ListItem>
+          <ListItem>{t('modelmanager:convertToDiffusersHelpText4')}</ListItem>
+          <ListItem>{t('modelmanager:convertToDiffusersHelpText5')}</ListItem>
+        </UnorderedList>
+        <Text>{t('modelmanager:convertToDiffusersHelpText6')}</Text>
+      </Flex>
+
+      <Flex flexDir="column" gap={4}>
+        <Flex marginTop="1rem" flexDir="column" gap={2}>
+          <Text fontWeight="bold">
+            {t('modelmanager:convertToDiffusersSaveLocation')}
+          </Text>
+          <RadioGroup value={saveLocation} onChange={(v) => setSaveLocation(v)}>
+            <Flex gap={4}>
+              <Radio value="same">
+                <Tooltip label="Save converted model in the same folder">
+                  {t('modelmanager:sameFolder')}
+                </Tooltip>
+              </Radio>
+
+              <Radio value="root">
+                <Tooltip label="Save converted model in the InvokeAI root folder">
+                  {t('modelmanager:invokeRoot')}
+                </Tooltip>
+              </Radio>
+
+              <Radio value="custom">
+                <Tooltip label="Save converted model in a custom folder">
+                  {t('modelmanager:custom')}
+                </Tooltip>
+              </Radio>
+            </Flex>
+          </RadioGroup>
+        </Flex>
+
+        {saveLocation === 'custom' && (
+          <Flex flexDirection="column" rowGap={2}>
+            <Text
+              fontWeight="bold"
+              fontSize="sm"
+              color="var(--text-color-secondary)"
+            >
+              {t('modelmanager:customSaveLocation')}
+            </Text>
+            <IAIInput
+              value={customSaveLocation}
+              onChange={(e) => {
+                if (e.target.value !== '')
+                  setCustomSaveLocation(e.target.value);
+              }}
+              width="25rem"
+            />
+          </Flex>
+        )}
+      </Flex>
+    </IAIAlertDialog>
+  );
+}
```
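The payload assembled in `modelConvertHandler` can be factored into a pure function, which makes the `custom_location` rule easy to test without rendering the dialog. A sketch only; `buildConversionPayload` is a hypothetical helper and `ModelConversionProps` is a local copy of the `InvokeModelConversionProps` shape, neither of which is part of the commit.

```typescript
// Hypothetical local copy of InvokeAI.InvokeModelConversionProps.
interface ModelConversionProps {
  model_name: string;
  save_location: string;
  custom_location: string | null;
}

// Same rule as modelConvertHandler: a custom path is sent only when the
// 'custom' option is selected and the text field is non-empty.
function buildConversionPayload(
  model: string,
  saveLocation: string,
  customSaveLocation: string
): ModelConversionProps {
  return {
    model_name: model,
    save_location: saveLocation,
    custom_location:
      saveLocation === 'custom' && customSaveLocation !== ''
        ? customSaveLocation
        : null,
  };
}
```

One consequence visible in the committed component: its `onChange` handler ignores empty strings, so once a custom path has been typed the field cannot be cleared back to `''`. With `'custom'` selected, the `null` branch is therefore only reached if the field was never filled.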

```diff
@@ -83,6 +83,7 @@ export default function ModelListItem(props: ModelListItemProps) {
         >
           {t('modelmanager:load')}
         </Button>
+
         <IAIIconButton
           icon={<EditIcon />}
           size={'sm'}
```

```diff
@@ -3,7 +3,16 @@ import IAICheckbox from 'common/components/IAICheckbox';
 import IAIIconButton from 'common/components/IAIIconButton';
 import React from 'react';
 
-import { Box, Flex, FormControl, HStack, Text, VStack } from '@chakra-ui/react';
+import {
+  Box,
+  Flex,
+  FormControl,
+  HStack,
+  Radio,
+  RadioGroup,
+  Text,
+  VStack,
+} from '@chakra-ui/react';
 import { createSelector } from '@reduxjs/toolkit';
 import { useAppDispatch, useAppSelector } from 'app/storeHooks';
 import { systemSelector } from 'features/system/store/systemSelectors';
@@ -135,6 +144,8 @@ export default function SearchModels() {
   );
 
   const [modelsToAdd, setModelsToAdd] = React.useState<string[]>([]);
+  const [modelType, setModelType] = React.useState<string>('v1');
+  const [pathToConfig, setPathToConfig] = React.useState<string>('');
 
   const resetSearchModelHandler = () => {
     dispatch(setSearchFolder(null));
@@ -167,11 +178,19 @@ export default function SearchModels() {
     const modelsToBeAdded = foundModels?.filter((foundModel) =>
       modelsToAdd.includes(foundModel.name)
     );
+
+    const configFiles = {
+      v1: 'configs/stable-diffusion/v1-inference.yaml',
+      v2: 'configs/stable-diffusion/v2-inference-v.yaml',
+      inpainting: 'configs/stable-diffusion/v1-inpainting-inference.yaml',
+      custom: pathToConfig,
+    };
+
     modelsToBeAdded?.forEach((model) => {
       const modelFormat = {
         name: model.name,
         description: '',
-        config: 'configs/stable-diffusion/v1-inference.yaml',
+        config: configFiles[modelType as keyof typeof configFiles],
         weights: model.location,
         vae: '',
         width: 512,
@@ -346,6 +365,55 @@ export default function SearchModels() {
               {t('modelmanager:addSelected')}
             </IAIButton>
           </Flex>
+
+          <Flex
+            gap={4}
+            backgroundColor="var(--background-color)"
+            padding="1rem 1rem"
+            borderRadius="0.2rem"
+            flexDirection="column"
+          >
+            <Flex gap={4}>
+              <Text fontWeight="bold" color="var(--text-color-secondary)">
+                Pick Model Type:
+              </Text>
+              <RadioGroup
+                value={modelType}
+                onChange={(v) => setModelType(v)}
+                defaultValue="v1"
+                name="model_type"
+              >
+                <Flex gap={4}>
+                  <Radio value="v1">{t('modelmanager:v1')}</Radio>
+                  <Radio value="v2">{t('modelmanager:v2')}</Radio>
+                  <Radio value="inpainting">
+                    {t('modelmanager:inpainting')}
+                  </Radio>
+                  <Radio value="custom">{t('modelmanager:customConfig')}</Radio>
+                </Flex>
+              </RadioGroup>
+            </Flex>
+
+            {modelType === 'custom' && (
+              <Flex flexDirection="column" rowGap={2}>
+                <Text
+                  fontWeight="bold"
+                  fontSize="sm"
+                  color="var(--text-color-secondary)"
+                >
+                  {t('modelmanager:pathToCustomConfig')}
+                </Text>
+                <IAIInput
+                  value={pathToConfig}
+                  onChange={(e) => {
+                    if (e.target.value !== '') setPathToConfig(e.target.value);
+                  }}
+                  width="42.5rem"
+                />
+              </Flex>
+            )}
+          </Flex>
+
           <Flex
             rowGap="1rem"
             flexDirection="column"
```
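In `SearchModels`, `modelType` is held as a plain `string`, so the lookup needs the `as keyof typeof configFiles` cast, and an unexpected radio value would silently yield `undefined` as the config. A sketch of the same lookup with a closed union type and a guard; `ModelType`, `configForModelType` and `isModelType` are hypothetical names, while the config paths are copied from the hunk.

```typescript
// Hypothetical closed set of the model types offered by the radio group.
type ModelType = 'v1' | 'v2' | 'inpainting' | 'custom';

// Config paths copied from the SearchModels hunk; 'custom' uses the
// user-supplied path. Record<ModelType, string> forces every key to exist.
function configForModelType(modelType: ModelType, pathToConfig: string): string {
  const configFiles: Record<ModelType, string> = {
    v1: 'configs/stable-diffusion/v1-inference.yaml',
    v2: 'configs/stable-diffusion/v2-inference-v.yaml',
    inpainting: 'configs/stable-diffusion/v1-inpainting-inference.yaml',
    custom: pathToConfig,
  };
  return configFiles[modelType];
}

// Narrows a RadioGroup value (always a string) to ModelType.
function isModelType(value: string): value is ModelType {
  return (
    value === 'v1' || value === 'v2' || value === 'inpainting' || value === 'custom'
  );
}
```

Because the map is typed as `Record<ModelType, string>`, adding a new radio option without a matching config path becomes a compile-time error rather than a runtime `undefined`.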

```diff
@@ -214,6 +214,12 @@ export const systemSlice = createSlice({
       state.isProcessing = true;
       state.currentStatusHasSteps = false;
     },
+    modelConvertRequested: (state) => {
+      state.currentStatus = i18n.t('common:statusConvertingModel');
+      state.isCancelable = false;
+      state.isProcessing = true;
+      state.currentStatusHasSteps = false;
+    },
     setSaveIntermediatesInterval: (state, action: PayloadAction<number>) => {
       state.saveIntermediatesInterval = action.payload;
     },
@@ -265,6 +271,7 @@ export const {
   setModelList,
   setIsCancelable,
   modelChangeRequested,
+  modelConvertRequested,
   setSaveIntermediatesInterval,
   setEnableImageDebugging,
   generationRequested,
```
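Taken together, `modelConvertRequested` and the `onModelConverted` listener move the system state through a simple busy/idle cycle. A sketch of that cycle as two plain immutable functions, under stated assumptions: `ConversionStatus`, `convertRequested` and `modelConverted` are hypothetical names, the real slice mutates an Immer draft rather than returning copies, and the status strings are passed in directly instead of going through `i18n.t`.

```typescript
// Hypothetical subset of the system slice state; field names follow the diff.
interface ConversionStatus {
  currentStatus: string;
  isProcessing: boolean;
  isCancelable: boolean;
  currentStatusHasSteps: boolean;
}

// Same flags as the modelConvertRequested reducer: busy, no step-based
// progress, and not cancellable while the conversion runs.
function convertRequested(state: ConversionStatus, status: string): ConversionStatus {
  return {
    ...state,
    currentStatus: status,
    isCancelable: false,
    isProcessing: true,
    currentStatusHasSteps: false,
  };
}

// Same flags the onModelConverted listener dispatches once the server replies.
function modelConverted(state: ConversionStatus, status: string): ConversionStatus {
  return {
    ...state,
    currentStatus: status,
    isProcessing: false,
    isCancelable: true,
  };
}
```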

File diff suppressed because one or more lines are too long

```diff
@@ -33,7 +33,7 @@ Globals.models_file = 'models.yaml'
 Globals.models_dir = 'models'
 Globals.config_dir = 'configs'
 Globals.autoscan_dir = 'weights'
-Globals.converted_ckpts_dir = 'converted-ckpts'
+Globals.converted_ckpts_dir = 'converted_ckpts'
 
 # Try loading patchmatch
 Globals.try_patchmatch = True
@@ -66,6 +66,9 @@ def global_models_dir()->Path:
 def global_autoscan_dir()->Path:
     return Path(Globals.root, Globals.autoscan_dir)
 
+def global_converted_ckpts_dir()->Path:
+    return Path(global_models_dir(), Globals.converted_ckpts_dir)
+
 def global_set_root(root_dir:Union[str,Path]):
     Globals.root = root_dir
```

```diff
@@ -759,7 +759,7 @@ class ModelManager(object):
             return
 
         model_name = model_name or diffusers_path.name
-        model_description = model_description or "Optimized version of {model_name}"
+        model_description = model_description or f"Optimized version of {model_name}"
         print(f">> Optimizing {model_name} (30-60s)")
         try:
             # By passing the specified VAE too the conversion function, the autoencoder
@@ -799,15 +799,17 @@ class ModelManager(object):
         models_folder_safetensors = Path(search_folder).glob("**/*.safetensors")
 
         ckpt_files = [x for x in models_folder_ckpt if x.is_file()]
-        safetensor_files = [x for x in models_folder_safetensors if x.is_file]
+        safetensor_files = [x for x in models_folder_safetensors if x.is_file()]
 
         files = ckpt_files + safetensor_files
 
         found_models = []
         for file in files:
-            found_models.append(
-                {"name": file.stem, "location": str(file.resolve()).replace("\\", "/")}
-            )
+            location = str(file.resolve()).replace("\\", "/")
+            if 'model.safetensors' not in location and 'diffusion_pytorch_model.safetensors' not in location:
+                found_models.append(
+                    {"name": file.stem, "location": location}
+                )
 
         return search_folder, found_models
```
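In the `search_models` hunk, the old `x.is_file` (without parentheses) is a bound method and therefore always truthy, so that filter let every match through; the fix calls it. The new exclusion is a substring test on the whole path, which also matches checkpoints whose file name merely ends in `model.safetensors`, such as `my_model.safetensors`, and makes the second condition redundant, since `diffusion_pytorch_model.safetensors` itself contains `model.safetensors`. The two rules are compared below in a small TypeScript sketch operating on forward-slash paths like the ones the hunk builds; `excludedBySubstring` and `excludedByFileName` are hypothetical helpers, and the exact-name variant assumes the intent is to skip only those two diffusers file names.

```typescript
// Diffusers weight file names, as listed in the search_models hunk.
const DIFFUSERS_WEIGHT_NAMES: readonly string[] = [
  'model.safetensors',
  'diffusion_pytorch_model.safetensors',
];

// Committed rule: a path is skipped if it contains either name anywhere.
function excludedBySubstring(location: string): boolean {
  return DIFFUSERS_WEIGHT_NAMES.some((name) => location.includes(name));
}

// Alternative rule: compare only the final path component, exactly.
function excludedByFileName(location: string): boolean {
  const fileName = location.slice(location.lastIndexOf('/') + 1);
  return DIFFUSERS_WEIGHT_NAMES.includes(fileName);
}
```

Both rules skip the weights inside a diffusers folder; they differ only for user checkpoints whose names end in `model.safetensors`.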
