Squashed commit of the following:

commit 1c649e4663f37b51b42a561548c7e03d7efb209e

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 13:29:16 2022 -0400

fix torchvision dependency version #511

commit 4d197f699e1e8c3b0e7c1b71c30261a49370ee8d

Merge: a3e07fb 190ba78

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 07:29:19 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit a3e07fb84ad51eab2aa586edaa011bbd4e01b395

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 07:28:58 2022 -0400

fix grid crash

commit 9fa1f31bf2f80785492927959c58e4b0825fb2e4

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 07:07:05 2022 -0400

fix opencv and realesrgan dependencies in mac install

commit 190ba78960c0c45bd1c51626e303b8c78a17b0c1

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 01:50:58 2022 -0400

Update requirements-mac.txt

Fixed dangling dash on last line.

commit 25d9ccc5091cc6452d8597453dcfe6c79327aa3a

Author: Any-Winter-4079 <50542132+Any-Winter-4079@users.noreply.github.com>

Date: Mon Sep 12 03:17:29 2022 +0200

Update model.py

commit 9cdf3aca7d2a7a6e85ec0a2732eb8e5a2dd60329

Author: Any-Winter-4079 <50542132+Any-Winter-4079@users.noreply.github.com>

Date: Mon Sep 12 02:52:36 2022 +0200

Update attention.py

Performance improvements to generate larger images in M1 #431

Update attention.py

Added dtype=r1.dtype to softmax

commit 49a96b90d846bcff17582273cacad596eff30658

Author: Mihai <299015+mh-dm@users.noreply.github.com>

Date: Sat Sep 10 16:58:07 2022 +0300

~7% speedup (1.57 to 1.69it/s) from switch to += in ldm.modules.attention. (#482)

Tested on 8GB eGPU nvidia setup so YMMV.

512x512 output, max VRAM stays same.

commit aba94b85e88cde654dd03bdec493a6d3b232f931

Author: Niek van der Maas <mail@niekvandermaas.nl>

Date: Fri Sep 9 15:01:37 2022 +0200

Fix macOS `pyenv` instructions, add code block highlight (#441)

Fix: `anaconda3-latest` does not work, specify the correct virtualenv, add missing init.

commit aac5102cf3850781a635cacc3150dd6bb4f486a8

Author: Henry van Megen <h.vanmegen@gmail.com>

Date: Thu Sep 8 05:16:35 2022 +0200

Disabled debug output (#436)

Co-authored-by: Henry van Megen <hvanmegen@gmail.com>

commit 0ab5a3646424467b459ea878d49cfc23f4a5ea35

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 17:19:46 2022 -0400

fix missing lines in outputs

commit 5e433728b550de9f56a2f124c8b325b3a5f2bd2f

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 16:20:14 2022 -0400

upped max_steps in v1-finetune.yaml and fixed TI docs to address #493

commit 7708f4fb98510dff504041231261c039a2c718de

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 16:03:37 2022 -0400

slight efficiency gain by using += in attention.py

commit b86a1deb00892f2b5f260659377d27790ef14016

Author: blessedcoolant <54517381+blessedcoolant@users.noreply.github.com>

Date: Mon Sep 12 07:47:12 2022 +1200

Remove print statement styling (#504)

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 4951e66103878e5d5c8943a710ebce9320888252

Author: chromaticist <mhostick@gmail.com>

Date: Sun Sep 11 12:44:26 2022 -0700

Adding support for .bin files from huggingface concepts (#498)

* Adding support for .bin files from huggingface concepts

* Updating documentation to include huggingface .bin info

commit 79b445b0ca43b3592a829909dc4507cb1ecbe9e0

Merge: a323070 f7662c1

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 15:39:38 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit a323070a4dbb1ce62db94342a2ab8e4adef833d6

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 15:28:57 2022 -0400

update requirements for new location of gfpgan

commit f7662c1808acc1704316d3b84d4baeacf1b24018

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 15:00:24 2022 -0400

update requirements for changed location of gfpgan

commit 93c242c9fbef91d87a6bbf42db2267dbd51e5739

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:47:58 2022 -0400

make gfpgan_model_exists flag available to web interface

commit c7c6cd7735b5c32e58349ca998a925cbaed7b376

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:43:07 2022 -0400

Update UPSCALE.md

New instructions needed to accommodate fact that the ESRGAN and GFPGAN packages are now installed by environment.yaml.

commit 77ca83e1031639f1e15cb7451e53dd8e37d1e971

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:31:56 2022 -0400

Update CLI.md

Final documentation tweak.

commit 0ea145d1884ce2316452124fd51a879506e2988d

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:29:26 2022 -0400

Update CLI.md

More doc fixes.

commit 162285ae86a2ab0bb26749387186c82b6bbf851d

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:28:45 2022 -0400

Update CLI.md

Minor documentation fix

commit 37c921dfe2aa25342934a101bf83eea4c0f5cfb7

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:26:41 2022 -0400

documentation enhancements

commit 4f72cb44ad0429874c9ba507d325267e295a040c

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 13:05:38 2022 -0400

moved the notebook files into their own directory

commit 878ef2e9e095ab08d00532f8a19556b8949b2dbb

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 12:58:06 2022 -0400

documentation tweaks

commit 4923118610ecaced2a670d108aef81c220d3507a

Merge: 16f6a67 defafc0

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 12:51:25 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit defafc0e8e0e69b39fd13db12036e1d01e7a19f1

Author: Dominic Letz <dominic@diode.io>

Date: Sun Sep 11 18:51:01 2022 +0200

Enable upscaling on m1 (#474)

commit 16f6a6731d80fcc04dcdb693d74fc5c21e753c10

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 12:47:26 2022 -0400

install GFPGAN inside SD repository in order to fix 'dark cast' issue #169

commit 0881d429f2ddcd288aa673b2b5e9435a8a44371a

Author: blessedcoolant <54517381+blessedcoolant@users.noreply.github.com>

Date: Mon Sep 12 03:52:43 2022 +1200

Docs Update (#466)

Authored-by: @blessedcoolant

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 9a29d442b437d650bd42516bbb24ebbcd0d6cd74

Author: Gérald LONLAS <gerald@lonlas.com>

Date: Sun Sep 11 23:23:18 2022 +0800

Revert "Add 3x Upscale option on the Web UI (#442)" (#488)

This reverts commit f8a540881c79ae657dc05b47bc71f8648e9f9782.

commit d301836fbdfce0a3f12b19ae6415e7ae14f53ed2

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 10:52:19 2022 -0400

can select prior output for init_img using -1, -2, etc

commit 70aa674e9e10d03eb462249764695ef1d4e1e28c

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 10:34:06 2022 -0400

merge PR #495 - keep using float16 in ldm.modules.attention

commit 8748370f44e28b104fbaa23b4e2e54e64102d799

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 10:22:32 2022 -0400

negative -S indexing recovers correct previous seed; closes issue #476
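The negative indexing mentioned in this commit and in d301836 (`-1`, `-2`, etc. for the seed and for init_img) can be pictured as a lookup into the session's list of previous outputs. The following is a minimal sketch under assumptions: the `history` list and the helper names `resolve_seed` and `resolve_init_img` are hypothetical and are not taken from the repository.

```python
from typing import List, Optional, Tuple

# Hypothetical session history: one (output_path, seed) pair per generated
# image, oldest first. The real script's bookkeeping may differ.
History = List[Tuple[str, int]]


def resolve_seed(requested: Optional[int], history: History) -> Optional[int]:
    """Map a negative seed argument to the seed of a previous output.

    -1 selects the most recent image, -2 the one before it, and so on.
    Non-negative values (and None) are passed through unchanged.
    """
    if requested is None or requested >= 0:
        return requested
    if -requested > len(history):
        raise IndexError(f"only {len(history)} previous outputs available")
    return history[requested][1]


def resolve_init_img(requested: str, history: History) -> str:
    """Same idea for an init image: '-1', '-2', ... select a prior output path."""
    try:
        index = int(requested)
    except ValueError:
        return requested  # an ordinary file path
    if index >= 0:
        return requested
    if -index > len(history):
        raise IndexError(f"only {len(history)} previous outputs available")
    return history[index][0]
```

Python's own negative list indexing does the work here; the explicit length check only turns an out-of-range request into a clearer error.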

commit 839e30e4b8ca6554017fbab671bdf85fadf9a6ea

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 10:02:44 2022 -0400

improve CUDA VRAM monitoring

extra check that device==cuda before getting VRAM stats

commit bfb278127923fbd461c4549a4b7f2f2c1dd34b8c

Author: tildebyte <337875+tildebyte@users.noreply.github.com>

Date: Sat Sep 10 10:15:56 2022 -0400

fix(readme): add note about updating env via conda (#475)

commit 5c439888626145f94db1fdb00f5787ad27b64602

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 10 10:02:43 2022 -0400

reduce VRAM memory usage by half during model loading

* This moves the call to half() before model.to(device) to avoid GPU

copy of full model. Improves speed and reduces memory usage dramatically

* This fix contributed by @mh-dm (Mihai)
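A rough back-of-the-envelope sketch of why the ordering matters: converting to half precision before the copy means only 2 bytes per weight reach the GPU instead of 4. The parameter count below is a made-up illustration, and the calculation counts weight storage only (no buffers, activations, or allocator overhead).

```python
BYTES_FP32 = 4  # bytes per float32 weight
BYTES_FP16 = 2  # bytes per float16 weight


def bytes_copied_to_device(n_params: int, half_before_copy: bool) -> int:
    """Bytes of weights sent to the GPU for a model with n_params parameters."""
    per_param = BYTES_FP16 if half_before_copy else BYTES_FP32
    return n_params * per_param


# Hypothetical parameter count, for illustration only.
n_params = 1_000_000_000

# In PyTorch terms: model.to(device).half() copies float32 weights first,
# while model.half().to(device) copies weights that are already float16.
copy_then_half = bytes_copied_to_device(n_params, half_before_copy=False)
half_then_copy = bytes_copied_to_device(n_params, half_before_copy=True)

print(f"copy, then half(): {copy_then_half / 2**30:.2f} GiB copied")
print(f"half(), then copy: {half_then_copy / 2**30:.2f} GiB copied")
```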

commit 99122708ca3342e00063c687f149c950cfd87200

Merge: 817c4a2 ecc6b75

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 10 09:54:34 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit 817c4a26de0d01b109550e6db9d4c3ece9f37c1b

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 10 09:53:27 2022 -0400

remove -F option from normalized prompt; closes #483

commit ecc6b75a3ede6d1d2850d69e998c92c342efdf2d

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 10 09:53:27 2022 -0400

remove -F option from normalized prompt

commit 723d07444205a9c3da96926630c1dc705db3f130

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Fri Sep 9 18:49:51 2022 -0400

Allow ctrl c when using --from_file (#472)

* added ansi escapes to highlight key parts of CLI session

* adjust exception handling so that ^C will abort when reading prompts from a file
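One common way to get this behaviour, shown here as a sketch and not as the actual change, is to catch `Exception` instead of using a bare `except:` in the per-prompt loop. `KeyboardInterrupt` does not derive from `Exception`, so ^C is no longer swallowed. The function name `run_prompts` and the `generate` callback are hypothetical.

```python
from typing import Callable, Iterable, List


def run_prompts(prompts: Iterable[str], generate: Callable[[str], None]) -> List[str]:
    """Run generate() on each prompt and return the prompts that failed.

    Ordinary errors are reported and the loop moves on to the next prompt.
    KeyboardInterrupt is not a subclass of Exception, so it is not caught
    here and ends the whole run.
    """
    failed: List[str] = []
    for prompt in prompts:
        try:
            generate(prompt)
        except Exception as err:  # deliberately not a bare `except:`
            print(f"skipping {prompt!r}: {err}")
            failed.append(prompt)
    return failed
```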

commit 75f633cda887d7bfcca3ef529d25c52461e11d99

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Fri Sep 9 12:03:45 2022 -0400

re-add new logo

commit 10db192cc4be66b3cebbdaa48a1806807578b56f

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Fri Sep 9 09:26:10 2022 -0400

changes to dogettx optimizations to run on m1

* Author @any-winter-4079

* Author @dogettx

Thanks to many individuals who contributed time and hardware to

benchmarking and debugging these changes.

commit c85ae00b33d619ab5448246ecda6c8e40d66fa3e

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 23:57:45 2022 -0400

fix bug which caused seed to get "stuck" on previous image even when UI specified -1

commit 1b5aae3ef3218b3f07b9ec48ce72589c0ad33746

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:36:47 2022 -0400

add icon to dream web server

commit 6abf739315ef83202ff5ad2144888f79f480d88d

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:25:09 2022 -0400

add favicon to web server

commit db825b813805b7428465e42377d756009e09e836

Merge: 33874ba afee7f9

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:17:37 2022 -0400

Merge branch 'deNULL-development' into development

commit 33874bae8db71dcdb5525826a1ec93b105e841ad

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:16:29 2022 -0400

Squashed commit of the following:

commit afee7f9cea2a73a3d62ced667e88aa0fe15020e4

Merge: 6531446 171f8db

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:14:32 2022 -0400

Merge branch 'development' of github.com:deNULL/stable-diffusion into deNULL-development

commit 171f8db742f18532b6fa03cdfbf4be2bbf6cf3ad

Author: Denis Olshin <me@denull.ru>

Date: Thu Sep 8 03:15:20 2022 +0300

saving full prompt to metadata when using web ui

commit d7e67b62f0ea9b7c8394b7c48786f5cf9c6f9e94

Author: Denis Olshin <me@denull.ru>

Date: Thu Sep 8 01:51:47 2022 +0300

better logic for clicking to make variations

commit afee7f9cea2a73a3d62ced667e88aa0fe15020e4

Merge: 6531446 171f8db

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:14:32 2022 -0400

Merge branch 'development' of github.com:deNULL/stable-diffusion into deNULL-development

commit 653144694fbb928d387c615c013ab0f2f1d5ca7f

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 20:41:37 2022 -0400

work around unexplained crash when timesteps=1000 (#440)

* work around unexplained crash when timesteps=1000

* this fix seems to work

commit c33a84cdfdb861a77916cd499e561d4c68ee192a

Author: blessedcoolant <54517381+blessedcoolant@users.noreply.github.com>

Date: Fri Sep 9 12:39:51 2022 +1200

Add New Logo (#454)

* Add instructions on how to install alongside pyenv (#393)

Like probably many others, I have a lot of different virtualenvs, one for each project. Most of them are handled by `pyenv`.

After installing according to these instructions I had issues with `pyenv` and `miniconda` fighting over the $PATH of my system.

But then I stumbled upon this nice solution on SO: https://stackoverflow.com/a/73139031 , upon which I have based my suggested changes.

It runs perfectly on my M1 setup, with the anaconda setup as a virtual environment handled by pyenv.

Feel free to incorporate these instructions as you see fit.

Thanks a million for all your hard work.

* Disabled debug output (#436)

Co-authored-by: Henry van Megen <hvanmegen@gmail.com>

* Add New Logo

Co-authored-by: Håvard Gulldahl <havard@lurtgjort.no>

Co-authored-by: Henry van Megen <h.vanmegen@gmail.com>

Co-authored-by: Henry van Megen <hvanmegen@gmail.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit f8a540881c79ae657dc05b47bc71f8648e9f9782

Author: Gérald LONLAS <gerald@lonlas.com>

Date: Fri Sep 9 01:45:54 2022 +0800

Add 3x Upscale option on the Web UI (#442)

commit 244239e5f656e1f34830b8e8ce99a40decbea324

Author: James Reynolds <magnusviri@users.noreply.github.com>

Date: Thu Sep 8 05:36:33 2022 -0600

macOS CI workflow, dream.py exits with an error, but the workflow com… (#396)

* macOS CI workflow, dream.py exits with an error, but the workflow completes.

* Files for testing

Co-authored-by: James Reynolds <magnsuviri@me.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 711d49ed30a0741558ed06d6be38680e00272774

Author: James Reynolds <magnusviri@users.noreply.github.com>

Date: Thu Sep 8 05:35:08 2022 -0600

Cache model workflow (#394)

* Add workflow that caches the model, step 1 for CI

* Change name of workflow job

Co-authored-by: James Reynolds <magnsuviri@me.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 7996a30e3aea1ae9611bbce6e6efaac60aeb95d4

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 07:34:03 2022 -0400

add auto-creation of mask for inpainting (#438)

* now use a single init image for both image and mask

* turn on debugging for now to write out mask and image

* add back -M option as a fallback

commit a69ca31f349ddcf4c94fd009dc896f4e653f7fa4

Author: elliotsayes <elliotsayes@gmail.com>

Date: Thu Sep 8 15:30:06 2022 +1200

.gitignore WebUI temp files (#430)

* Add instructions on how to install alongside pyenv (#393)

Like probably many others, I have a lot of different virtualenvs, one for each project. Most of them are handled by `pyenv`.

After installing according to these instructions I had issues with `pyenv` and `miniconda` fighting over the $PATH of my system.

But then I stumbled upon this nice solution on SO: https://stackoverflow.com/a/73139031 , upon which I have based my suggested changes.

It runs perfectly on my M1 setup, with the anaconda setup as a virtual environment handled by pyenv.

Feel free to incorporate these instructions as you see fit.

Thanks a million for all your hard work.

* .gitignore WebUI temp files

Co-authored-by: Håvard Gulldahl <havard@lurtgjort.no>

commit 5c6b612a722ff9cde1a5ddf9b29874842f1d5a26

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 22:50:55 2022 -0400

fix bug that caused same seed to be redisplayed repeatedly

commit 56f155c5907224b4276adb6ba01bd5c1a3401ee3

Author: Johan Roxendal <johan@roxendal.com>

Date: Thu Sep 8 04:50:06 2022 +0200

added support for parsing run log and displaying images in the frontend init state (#410)

Co-authored-by: Johan Roxendal <johan.roxendal@litteraturbanken.se>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 41687746be5290a4c3d3437957307666d956ae9d

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 20:24:35 2022 -0400

added missing initialization of latent_noise to None

commit 171f8db742f18532b6fa03cdfbf4be2bbf6cf3ad

Author: Denis Olshin <me@denull.ru>

Date: Thu Sep 8 03:15:20 2022 +0300

saving full prompt to metadata when using web ui

commit d7e67b62f0ea9b7c8394b7c48786f5cf9c6f9e94

Author: Denis Olshin <me@denull.ru>

Date: Thu Sep 8 01:51:47 2022 +0300

better logic for clicking to make variations

commit d1d044aa87cf8ba95a7e2e553c7fd993ec81a6d7

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 17:56:59 2022 -0400

actual image seed now written into web log rather than -1 (#428)

commit edada042b318028c77ab50dfbaa0b2671cc69e61

Author: Arturo Mendivil <60411196+artmen1516@users.noreply.github.com>

Date: Wed Sep 7 10:42:26 2022 -0700

Improve notebook and add requirements file (#422)

commit 29ab3c20280bfa73b9a89c8bd9dc99dc0ad7b651

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 13:28:11 2022 -0400

disable neonpixel optimizations on M1 hardware (#414)

* disable neonpixel optimizations on M1 hardware

* fix typo that was causing random noise images on m1

commit 7670ecc63f3e30e320e2c4197eb7140c6196c168

Author: cody <cnmizell@gmail.com>

Date: Wed Sep 7 12:24:41 2022 -0500

add more keyboard support on the web server (#391)

add ability to submit prompts with the "enter" key

add ability to cancel generations with the "escape" key

commit dd2aedacaf27d8fe750a342c310bc88de5311931

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 13:23:53 2022 -0400

report VRAM usage stats during initial model loading (#419)

commit f6284777e6d79bd3d1e85b83aa72d774299a7403

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Tue Sep 6 17:12:39 2022 -0400

Squashed commit of the following:

commit 7d1344282d942a33dcecda4d5144fc154ec82915

Merge: caf4ea3 ebeb556

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 5 10:07:27 2022 -0400

Merge branch 'development' of github.com:WebDev9000/stable-diffusion into WebDev9000-development

commit ebeb556af9c99b491a83c72f83512683a02a82ad

Author: Web Dev 9000 <rirath@gmail.com>

Date: Sun Sep 4 18:05:15 2022 -0700

Fixed unintentionally removed lines

commit ff2c4b9a1b773b95686d5f3e546e1194de054694

Author: Web Dev 9000 <rirath@gmail.com>

Date: Sun Sep 4 17:50:13 2022 -0700

Add ability to recreate variations via image click

commit c012929cdae7c37aa3b3b4fa2e7de465458f732a

Author: Web Dev 9000 <rirath@gmail.com>

Date: Sun Sep 4 14:35:33 2022 -0700

Add files via upload

commit 02a601899214adfe4536ce0ba67694a46319fd51

Author: Web Dev 9000 <rirath@gmail.com>

Date: Sun Sep 4 14:35:07 2022 -0700

Add files via upload

commit eef788981cbed7c68ffd58b4eb22a2df2e59ae0b

Author: Olivier Louvignes <olivier@mg-crea.com>

Date: Tue Sep 6 12:41:08 2022 +0200

feat(txt2img): allow from_file to work with len(lines) < batch_size (#349)
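A sketch of one way to handle this case, assuming prompts are grouped into batches by slicing: the last (or only) batch is simply allowed to be shorter than `batch_size`. The helper name `batched` is hypothetical and the actual change in #349 may work differently.

```python
from typing import Iterator, List, Sequence


def batched(lines: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive groups of at most batch_size prompts.

    When len(lines) < batch_size, a single short batch is produced
    instead of failing or dropping the prompts.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(lines), batch_size):
        yield list(lines[start:start + batch_size])
```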

commit 720e5cd6513cd27e6d53feb6475dde20bd39841a

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 5 20:40:10 2022 -0400

Refactoring simplet2i (#387)

* start refactoring -not yet functional

* first phase of refactor done - not sure weighted prompts working

* Second phase of refactoring. Everything mostly working.

* The refactoring has moved all the hard-core inference work into

ldm.dream.generator.*, where there are submodules for txt2img and

img2img. inpaint will go in there as well.

* Some additional refactoring will be done soon, but relatively

minor work.

* fix -save_orig flag to actually work

* add @neonsecret attention.py memory optimization

* remove unneeded imports

* move token logging into conditioning.py

* add placeholder version of inpaint; porting in progress

* fix crash in img2img

* inpainting working; not tested on variations

* fix crashes in img2img

* ported attention.py memory optimization #117 from basujindal branch

* added @torch_no_grad() decorators to img2img, txt2img, inpaint closures

* Final commit prior to PR against development

* fixup crash when generating intermediate images in web UI

* rename ldm.simplet2i to ldm.generate

* add backward-compatibility simplet2i shell with deprecation warning

* add back in mps exception, addresses @vargol comment in #354

* replaced Conditioning class with exported functions

* fix wrong type of with_variations attribute during initialization

* changed "image_iterator()" to "get_make_image()"

* raise NotImplementedError for calling get_make_image() in parent class

* Update ldm/generate.py

better error message

Co-authored-by: Kevin Gibbons <bakkot@gmail.com>

* minor stylistic fixes and assertion checks from code review

* moved get_noise() method into img2img class

* break get_noise() into two methods, one for txt2img and the other for img2img

* inpainting works on non-square images now

* make get_noise() an abstract method in base class

* much improved inpainting

Co-authored-by: Kevin Gibbons <bakkot@gmail.com>
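The base-class arrangement described in the list above (a parent whose `get_make_image()` raises `NotImplementedError`, and an abstract `get_noise()`) might look roughly like the sketch below. Only the two method names come from the commit message; the class names, signatures, and return values are hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class Generator(ABC):
    """Hypothetical parent class for txt2img / img2img / inpaint generators."""

    def get_make_image(self, prompt: str) -> Callable[[], Dict]:
        # Subclasses are expected to return a closure that produces an image.
        raise NotImplementedError(
            "get_make_image() must be implemented in a subclass"
        )

    @abstractmethod
    def get_noise(self, width: int, height: int) -> List[List[float]]:
        """Return the starting noise; each subclass decides how."""


class Txt2Img(Generator):
    def get_make_image(self, prompt: str) -> Callable[[], Dict]:
        def make_image() -> Dict:
            # Placeholder result; a real generator would run the sampler here.
            return {"prompt": prompt, "noise": self.get_noise(2, 2)}

        return make_image

    def get_noise(self, width: int, height: int) -> List[List[float]]:
        # Placeholder: zeros instead of random latents.
        return [[0.0] * width for _ in range(height)]
```

Because `get_noise()` is abstract, `Generator()` itself cannot be instantiated, while a subclass that forgets `get_make_image()` fails only when that method is called.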

commit 1ad2a8e567b054cfe9df1715aa805218ee185754

Author: thealanle <35761977+thealanle@users.noreply.github.com>

Date: Mon Sep 5 17:35:04 2022 -0700

Fix --outdir function for web (#373)

* Fix --outdir function for web

* Removed unnecessary hardcoded path

commit 52d8bb2836cf05994ee5e2c5cf9c8d190dac0524

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 5 10:31:59 2022 -0400

Squashed commit of the following:

commit 0cd48e932f1326e000c46f4140f98697eb9bdc79

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 5 10:27:43 2022 -0400

resolve conflicts with development

commit d7bc8c12e05535a363ac7c745a3f3abc2773bfcf

Author: Scott McMillin <scott@scottmcmillin.com>

Date: Sun Sep 4 18:52:09 2022 -0500

Add title attribute back to img tag

commit 5397c89184ebfb8260bc2d8c3f23e73e103d24e6

Author: Scott McMillin <scott@scottmcmillin.com>

Date: Sun Sep 4 13:49:46 2022 -0500

Remove temp code

commit 1da080b50972696db2930681a09cb1c14e524758

Author: Scott McMillin <scott@scottmcmillin.com>

Date: Sun Sep 4 13:33:56 2022 -0500

Cleaned up HTML; small style changes; image click opens image; add seed to figcaption beneath image

commit caf4ea3d8982416dcf5a80fe4601ac4fbc126cc0

Author: Adam Rice <adam@askadam.io>

Date: Mon Sep 5 10:05:39 2022 -0400

Add a 'Remove Image' button to clear the file upload field (#382)

* added "remove image" button

* styled a new "remove image" button

* Update index.js

commit 95c088b30342c75ec2ab8c7d7a423ffd11c50099

Author: Kevin Gibbons <bakkot@gmail.com>

Date: Sun Sep 4 19:04:14 2022 -0700

Revert "Add CORS headers to dream server to ease integration with third-party web interfaces" (#371)

This reverts commit 91e826e5f425333674d1e3bec1fa1ac63cfb382d.

commit a20113d5a3985a23b7e19301acb57688e31e975c

Author: Kevin Gibbons <bakkot@gmail.com>

Date: Sun Sep 4 18:59:12 2022 -0700

put no_grad decorator on make_image closures (#375)

commit 0f93dadd6ac5aa0fbeee5d72150def775752a153

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 4 21:39:15 2022 -0400

fix several dangling references to --gfpgan option, which no longer exists

commit f4004f660e5daba721426cfcd3fe95318fd10bc3

Author: tildebyte <337875+tildebyte@users.noreply.github.com>

Date: Sun Sep 4 19:43:04 2022 -0400

TOIL(requirements): Split requirements to per-platform (#355)

* toil(reqs): split requirements to per-platform

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* toil(reqs): fix for Win and Lin...

...allow pip to resolve latest torch, numpy

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* toil(install): update reqs in Win install notebook

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

commit 4406fd138dec0e25409aeaa2b716f88dd95b76d1

Merge: 5116c81 fd7a72e

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 4 08:23:53 2022 -0400

Merge branch 'SebastianAigner-main' into development

Add support for full CORS headers for dream server.

commit fd7a72e147393f32fc40d8f5918ea9bf1401e723

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 4 08:23:11 2022 -0400

remove debugging message

commit 3a2be621f36e66b16e60b7f4f9210babfe84c582

Merge: 91e826e 5116c81

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 4 08:15:51 2022 -0400

Merge branch 'development' into main

commit 5116c8178c67f550e57f5d16fe931ee1a7cdb0ba

Author: Justin Wong <1584142+wongjustin99@users.noreply.github.com>

Date: Sun Sep 4 07:17:58 2022 -0400

fix save_original flag saving to the same filename (#360)

* Update README.md with new Anaconda install steps (#347)

pip3 version did not work for me and this is the recommended way to install Anaconda now it seems

* fix save_original flag saving to the same filename

Before this, the `--save_orig` flag was not working. The upscaled/GFPGAN would overwrite the original output image.

Co-authored-by: greentext2 <112735219+greentext2@users.noreply.github.com>
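The overwrite described above goes away once the original and the post-processed image are written to different filenames. A minimal sketch, assuming a hypothetical `.orig` marker inserted before the extension; the naming scheme the script really uses is not stated in this commit.

```python
from pathlib import Path


def original_copy_path(output_path: str, marker: str = ".orig") -> Path:
    """Return a distinct path for the untouched original image.

    The marker (a hypothetical choice) is inserted before the file
    extension, so the upscaled/GFPGAN result can keep output_path.
    """
    path = Path(output_path)
    return path.with_name(f"{path.stem}{marker}{path.suffix}")
```

For example, `original_copy_path("outputs/000001.123.png")` gives `outputs/000001.123.orig.png`, leaving `outputs/000001.123.png` free for the processed image.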

commit 91e826e5f425333674d1e3bec1fa1ac63cfb382d

Author: Sebastian Aigner <SebastianAigner@users.noreply.github.com>

Date: Sun Sep 4 10:22:54 2022 +0200

Add CORS headers to dream server to ease integration with third-party web interfaces

commit 6266d9e8d6421ee732338560f825771e461cefb0

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 15:45:20 2022 -0400

remove stray debugging message

commit 138956e5162679f6894ce75462907c9eeed83cbb

Author: greentext2 <112735219+greentext2@users.noreply.github.com>

Date: Sat Sep 3 13:38:57 2022 -0500

Update README.md with new Anaconda install steps (#347)

pip3 version did not work for me and this is the recommended way to install Anaconda now it seems

commit 60be735e802a1c3cd2812c5d8e63f9ed467ea9d9

Author: Cora Johnson-Roberson <cora.johnson.roberson@gmail.com>

Date: Sat Sep 3 14:28:34 2022 -0400

Switch to regular pytorch channel and restore Python 3.10 for Macs. (#301)

* Switch to regular pytorch channel and restore Python 3.10 for Macs.

Although pytorch-nightly should in theory be faster, it is currently

causing increased memory usage and slower iterations:

https://github.com/lstein/stable-diffusion/pull/283#issuecomment-1234784885

This changes the environment-mac.yaml file back to the regular pytorch

channel and moves the `transformers` dep into pip for now (since it

cannot be satisfied until tokenizers>=0.11 is built for Python 3.10).

* Specify versions for Pip packages as well.

commit d0d95d3a2a4b7a91c5c4f570d88af43a2c3afe75

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 14:10:31 2022 -0400

make initimg appear in web log

commit b90a21500037f07bb1b5d143045253ee6bc67391

Merge: 1eee811 6270e31

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 13:47:15 2022 -0400

Merge branch 'prixt-seamless' into development

commit 6270e313b8d87b33cb914f12558e34bc2f0ae357

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 13:46:29 2022 -0400

add credit to prixt for seamless circular tiling

commit a01b7bdc40af5376177de30b76dc075b523b3450

Merge: 1eee811 9d88abe

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 13:43:04 2022 -0400

add web interface for seamless option

commit 1eee8111b95241f54b49f58605ab343a52325b89

Merge: 64eca42 fb857f0

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 12:33:39 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit 64eca42610b92cb73a30c405ab9dad28990c15e1

Merge: 9130ad7 21a1f68

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 12:33:05 2022 -0400

Merge branch 'main' into development

* brings in small documentation fixes that were

added directly to main during release tweaking.

commit fb857f05ba0eda5cf9bbe0f60b73a73d75562d85

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 12:07:07 2022 -0400

fix typo in docs

commit 9d88abe2ea1fed6231ffd822956614589a1075b7

Author: prixt <paraxite@naver.com>

Date: Sat Sep 3 22:42:16 2022 +0900

fixed typo

commit a61e49bc974af0fc01c8424d7df9262f63ecf289

Author: prixt <paraxite@naver.com>

Date: Sat Sep 3 22:39:35 2022 +0900

* Removed unnecessary code

* Added description about --seamless

commit 02bee4fdb1534b71c5e609204506efb66699b2bc

Author: prixt <paraxite@naver.com>

Date: Sat Sep 3 16:08:03 2022 +0900

added --seamless tag logging to normalize_prompt

commit d922b53c26f3e9a11ecb920536b9632ec69df5f6

Author: prixt <paraxite@naver.com>

Date: Sat Sep 3 15:13:31 2022 +0900

added seamless tiling mode and commands
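For readers unfamiliar with the idea behind "seamless circular tiling": if every border lookup wraps around to the opposite edge, the left and right (and top and bottom) edges of the result line up when the image is repeated. The sketch below shows wrap-around padding on a plain 2D list. It is an illustration of the concept only; how the commits above apply it inside the model is not shown in this log, and the function name `pad_circular` is hypothetical.

```python
from typing import List


def pad_circular(grid: List[List[float]], pad: int) -> List[List[float]]:
    """Pad a 2D grid on all sides, wrapping around to the opposite edge."""
    height, width = len(grid), len(grid[0])
    return [
        [grid[(r - pad) % height][(c - pad) % width] for c in range(width + 2 * pad)]
        for r in range(height + 2 * pad)
    ]


tile = [[1, 2],
        [3, 4]]
for row in pad_circular(tile, 1):
    print(row)
```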

.github/workflows/cache-model.yml (new file, 64 lines added)

@@ -0,0 +1,64 @@
name: Cache Model
on:
  workflow_dispatch
jobs:
  build:
    strategy:
      matrix:
        os: [ macos-12 ]
    name: Create Caches using ${{ matrix.os }}
    runs-on: ${{ matrix.os }}
    steps:
      - name: Checkout sources
        uses: actions/checkout@v3
      - name: Cache model
        id: cache-sd-v1-4
        uses: actions/cache@v3
        env:
          cache-name: cache-sd-v1-4
        with:
          path: models/ldm/stable-diffusion-v1/model.ckpt
          key: ${{ env.cache-name }}
          restore-keys: |
            ${{ env.cache-name }}
      - name: Download Stable Diffusion v1.4 model
        if: ${{ steps.cache-sd-v1-4.outputs.cache-hit != 'true' }}
        continue-on-error: true
        run: |
          if [ ! -e models/ldm/stable-diffusion-v1 ]; then
            mkdir -p models/ldm/stable-diffusion-v1
          fi
          if [ ! -e models/ldm/stable-diffusion-v1/model.ckpt ]; then
            curl -o models/ldm/stable-diffusion-v1/model.ckpt ${{ secrets.SD_V1_4_URL }}
          fi
      # Uncomment this when we no longer make changes to environment-mac.yaml
      # - name: Cache environment
      #   id: cache-conda-env-ldm
      #   uses: actions/cache@v3
      #   env:
      #     cache-name: cache-conda-env-ldm
      #   with:
      #     path: ~/.conda/envs/ldm
      #     key: ${{ env.cache-name }}
      #     restore-keys: |
      #       ${{ env.cache-name }}
      - name: Install dependencies
        # if: ${{ steps.cache-conda-env-ldm.outputs.cache-hit != 'true' }}
        run: |
          conda env create -f environment-mac.yaml
      - name: Cache hugginface and torch models
        id: cache-hugginface-torch
        uses: actions/cache@v3
        env:
          cache-name: cache-hugginface-torch
        with:
          path: ~/.cache
          key: ${{ env.cache-name }}
          restore-keys: |
            ${{ env.cache-name }}
      - name: Download Huggingface and Torch models
        if: ${{ steps.cache-hugginface-torch.outputs.cache-hit != 'true' }}
        continue-on-error: true
        run: |
          export PYTHON_BIN=/usr/local/miniconda/envs/ldm/bin/python
          $PYTHON_BIN scripts/preload_models.py

.github/workflows/macos12-miniconda.yml (new file, 80 lines added)

@@ -0,0 +1,80 @@
name: Build
on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]
jobs:
  build:
    strategy:
      matrix:
        os: [ macos-12 ]
    name: Build on ${{ matrix.os }} miniconda
    runs-on: ${{ matrix.os }}
    steps:
      - name: Checkout sources
        uses: actions/checkout@v3
      - name: Cache model
        id: cache-sd-v1-4
        uses: actions/cache@v3
        env:
          cache-name: cache-sd-v1-4
        with:
          path: models/ldm/stable-diffusion-v1/model.ckpt
          key: ${{ env.cache-name }}
          restore-keys: |
            ${{ env.cache-name }}
      - name: Download Stable Diffusion v1.4 model
        if: ${{ steps.cache-sd-v1-4.outputs.cache-hit != 'true' }}
        continue-on-error: true
        run: |
          if [ ! -e models/ldm/stable-diffusion-v1 ]; then
            mkdir -p models/ldm/stable-diffusion-v1
          fi
          if [ ! -e models/ldm/stable-diffusion-v1/model.ckpt ]; then
            curl -o models/ldm/stable-diffusion-v1/model.ckpt ${{ secrets.SD_V1_4_URL }}
          fi
      # Uncomment this when we no longer make changes to environment-mac.yaml
      # - name: Cache environment
      #   id: cache-conda-env-ldm
      #   uses: actions/cache@v3
      #   env:
      #     cache-name: cache-conda-env-ldm
      #   with:
      #     path: ~/.conda/envs/ldm
      #     key: ${{ env.cache-name }}
      #     restore-keys: |
      #       ${{ env.cache-name }}
      - name: Install dependencies
        # if: ${{ steps.cache-conda-env-ldm.outputs.cache-hit != 'true' }}
        run: |
          conda env create -f environment-mac.yaml
      - name: Cache hugginface and torch models
        id: cache-hugginface-torch
        uses: actions/cache@v3
        env:
          cache-name: cache-hugginface-torch
        with:
          path: ~/.cache
          key: ${{ env.cache-name }}
          restore-keys: |
            ${{ env.cache-name }}
      - name: Download Huggingface and Torch models
        if: ${{ steps.cache-hugginface-torch.outputs.cache-hit != 'true' }}
        continue-on-error: true
        run: |
          export PYTHON_BIN=/usr/local/miniconda/envs/ldm/bin/python
          $PYTHON_BIN scripts/preload_models.py
      - name: Run the tests
        run: |
          # Note, can't "activate" via automation, and activation is just env vars and path
          export PYTHON_BIN=/usr/local/miniconda/envs/ldm/bin/python
          export PYTORCH_ENABLE_MPS_FALLBACK=1
          $PYTHON_BIN scripts/preload_models.py
          mkdir -p outputs/img-samples
          time $PYTHON_BIN scripts/dream.py --from_file tests/prompts.txt </dev/null 2> outputs/img-samples/err.log > outputs/img-samples/out.log
      - name: Archive results
        uses: actions/upload-artifact@v3
        with:
          name: results
          path: outputs/img-samples

3

.gitignore

vendored

@ -77,6 +77,9 @@ db.sqlite3-journal

instance/
.webassets-cache

# WebUI temp files:
img2img-tmp.png

# Scrapy stuff:
.scrapy


789

README.md

@ -1,7 +1,7 @@

|

||||

<h1 align='center'><b>Stable Diffusion Dream Script</b></h1>

|

||||

|

||||

<p align='center'>

|

||||

<img src="static/logo_temp.png"/>

|

||||

<img src="docs/assets/logo.png"/>

|

||||

</p>

|

||||

|

||||

<p align="center">

|

||||

@ -12,397 +12,118 @@

|

||||

<img src="https://img.shields.io/github/issues-pr/lstein/stable-diffusion?logo=GitHub&style=for-the-badge" alt="pull-requests"/>

|

||||

</p>

|

||||

|

||||

This is a fork of CompVis/stable-diffusion, the wonderful open source

|

||||

text-to-image generator. This fork supports:

|

||||

# **Stable Diffusion Dream Script**

|

||||

|

||||

1. An interactive command-line interface that accepts the same prompt

|

||||

and switches as the Discord bot.

|

||||

This is a fork of

|

||||

[CompVis/stable-diffusion](https://github.com/CompVis/stable-diffusion),

|

||||

the open source text-to-image generator. It provides a streamlined

|

||||

process with various new features and options to aid the image

|

||||

generation process. It runs on Windows, Mac and Linux machines,

|

||||

and runs on GPU cards with as little as 4 GB of RAM.

|

||||

|

||||

2. A basic Web interface that allows you to run a local web server for

|

||||

generating images in your browser.

|

||||

_Note: This fork is rapidly evolving. Please use the

|

||||

[Issues](https://github.com/lstein/stable-diffusion/issues) tab to

|

||||

report bugs and make feature requests. Be sure to use the provided

|

||||

templates. They will help diagnose issues faster._

|

||||

|

||||

3. Support for img2img in which you provide a seed image to guide the

|

||||

image creation. (inpainting & masking coming soon)

|

||||

# **Table of Contents**

|

||||

|

||||

4. A notebook for running the code on Google Colab.

|

||||

|

||||

5. Upscaling and face fixing using the optional ESRGAN and GFPGAN

|

||||

packages.

|

||||

|

||||

6. Weighted subprompts for prompt tuning.

|

||||

|

||||

7. [Image variations](VARIATIONS.md) which allow you to systematically

|

||||

generate variations of an image you like and combine two or more

|

||||

images together to combine the best features of both.

|

||||

|

||||

8. Textual inversion for customization of the prompt language and images.

|

||||

|

||||

8. ...and more!

|

||||

|

||||

This fork is rapidly evolving, so use the Issues panel to report bugs

|

||||

and make feature requests, and check back periodically for

|

||||

improvements and bug fixes.

|

||||

|

||||

# Table of Contents

|

||||

|

||||

1. [Major Features](#features)

|

||||

2. [Changelog](#latest-changes)

|

||||

3. [Installation](#installation)

|

||||

1. [Linux](#linux)

|

||||

1. [Windows](#windows)

|

||||

1. [MacOS](README-Mac-MPS.md)

|

||||

1. [Installation](#installation)

|

||||

2. [Major Features](#features)

|

||||

3. [Changelog](#latest-changes)

|

||||

4. [Troubleshooting](#troubleshooting)

|

||||

5. [Contributing](#contributing)

|

||||

6. [Support](#support)

|

||||

|

||||

# Features

|

||||

# Installation

|

||||

|

||||

## Interactive command-line interface similar to the Discord bot

|

||||

This fork is supported across multiple platforms. You can find individual installation instructions below.

|

||||

|

||||

The _dream.py_ script, located in scripts/dream.py,

|

||||

provides an interactive interface to image generation similar to

|

||||

the "dream mothership" bot that Stability AI provided on its Discord

|

||||

server. Unlike the txt2img.py and img2img.py scripts provided in the

|

||||

original CompVis/stable-diffusion source code repository, the

|

||||

time-consuming initialization of the AI model

|

||||

only happens once. After that, image generation

|

||||

from the command-line interface is very fast.

|

||||

- ## [Linux](docs/installation/INSTALL_LINUX.md)

|

||||

- ## [Windows](docs/installation/INSTALL_WINDOWS.md)

|

||||

- ## [Macintosh](docs/installation/INSTALL_MAC.md)

|

||||

|

||||

The script uses the readline library to allow for in-line editing,

|

||||

command history (up and down arrows), autocompletion, and more. To help

|

||||

keep track of which prompts generated which images, the script writes a

|

||||

log file of image names and prompts to the selected output directory.

|

||||

In addition, as of version 1.02, it also writes the prompt into the PNG

|

||||

file's metadata where it can be retrieved using scripts/images2prompt.py

|

||||

## **Hardware Requirements**

|

||||

|

||||

The script is confirmed to work on Linux and Windows systems. It should

|

||||

work on MacOSX as well, but this is not confirmed. Note that this script

|

||||

runs from the command-line (CMD or Terminal window), and does not have a GUI.

|

||||

**System**

|

||||

|

||||

```

|

||||

(ldm) ~/stable-diffusion$ python3 ./scripts/dream.py

|

||||

* Initializing, be patient...

|

||||

Loading model from models/ldm/text2img-large/model.ckpt

|

||||

(...more initialization messages...)

|

||||

You will need one of the following:

|

||||

|

||||

* Initialization done! Awaiting your command...

|

||||

dream> ashley judd riding a camel -n2 -s150

|

||||

Outputs:

|

||||

outputs/img-samples/00009.png: "ashley judd riding a camel" -n2 -s150 -S 416354203

|

||||

outputs/img-samples/00010.png: "ashley judd riding a camel" -n2 -s150 -S 1362479620

|

||||

- An NVIDIA-based graphics card with 4 GB or more of VRAM.

|

||||

- An Apple computer with an M1 chip.

|

||||

|

||||

dream> "there's a fly in my soup" -n6 -g

|

||||

outputs/img-samples/00011.png: "there's a fly in my soup" -n6 -g -S 2685670268

|

||||

seeds for individual rows: [2685670268, 1216708065, 2335773498, 822223658, 714542046, 3395302430]

|

||||

dream> q

|

||||

**Memory**

|

||||

|

||||

# this shows how to retrieve the prompt stored in the saved image's metadata

|

||||

(ldm) ~/stable-diffusion$ python3 ./scripts/images2prompt.py outputs/img-samples/*.png

|

||||

00009.png: "ashley judd riding a camel" -s150 -S 416354203

|

||||

00010.png: "ashley judd riding a camel" -s150 -S 1362479620

|

||||

00011.png: "there's a fly in my soup" -n6 -g -S 2685670268

|

||||

```

|

||||

- At least 12 GB of main memory (RAM).

|

||||

|

||||

<p align='center'>

|

||||

<img src="static/dream-py-demo.png"/>

|

||||

</p>

|

||||

**Disk**

|

||||

|

||||

The dream> prompt's arguments are pretty much identical to those used

|

||||

in the Discord bot, except you don't need to type "!dream" (it doesn't

|

||||

hurt if you do). A significant change is that creation of individual

|

||||

images is now the default unless --grid (-g) is given. For backward

|

||||

compatibility, the -i switch is recognized. For command-line help

|

||||

type -h (or --help) at the dream> prompt.

|

||||

|

||||

The script itself also recognizes a series of command-line switches

|

||||

that will change important global defaults, such as the directory for

|

||||

image outputs and the location of the model weight files.

|

||||

|

||||

## Image-to-Image

|

||||

|

||||

This script also provides an img2img feature that lets you seed your

|

||||

creations with a drawing or photo. This is a really cool feature that tells

|

||||

stable diffusion to build the prompt on top of the image you provide, preserving

|

||||

the original's basic shape and layout. To use it, provide the --init_img

|

||||

option as shown here:

|

||||

|

||||

```

|

||||

dream> "waterfall and rainbow" --init_img=./init-images/crude_drawing.png --strength=0.5 -s100 -n4

|

||||

```

|

||||

|

||||

The --init_img (-I) option gives the path to the seed picture. --strength (-f) controls how much

|

||||

the original will be modified, ranging from 0.0 (keep the original intact), to 1.0 (ignore the original

|

||||

completely). The default is 0.75, and values in the range 0.25-0.75 give interesting results.
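
The effect of `--strength` can be pictured as choosing how many of the requested sampler steps are spent re-noising and regenerating the seed picture. The following is a minimal sketch under that assumption, not the script's actual code; the function name `denoising_steps` is hypothetical and the rounding rule is an illustrative choice.

```python
def denoising_steps(strength: float, steps: int) -> int:
    """Return how many of `steps` sampler steps would be applied to the init image.

    Assumption for illustration: the step count scales linearly with strength
    and is truncated to an integer.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return int(strength * steps)


if __name__ == "__main__":
    # Compare a few strengths for a 100-step run (-s100).
    for strength in (0.25, 0.5, 0.75):
        print(strength, denoising_steps(strength, 100))
```

Under this reading, a strength of 0.0 applies no steps and leaves the original intact, while 1.0 applies all of them and effectively ignores it.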

|

||||

|

||||

You may also pass a -v<count> option to generate count variants on the original image. This is done by

|

||||

passing the first generated image back into img2img the requested number of times. It generates interesting

|

||||

variants.

|

||||

|

||||

## GFPGAN and Real-ESRGAN Support

|

||||

|

||||

The script also provides the ability to do face restoration and

|

||||

upscaling with the help of GFPGAN and Real-ESRGAN respectively.

|

||||

|

||||

To use the ability, clone the **[GFPGAN

|

||||

repository](https://github.com/TencentARC/GFPGAN)** and follow their

|

||||

installation instructions. By default, we expect GFPGAN to be

|

||||

installed in a 'GFPGAN' sibling directory. Be sure that the `"ldm"`

|

||||

conda environment is active as you install GFPGAN.

|

||||

|

||||

You can use the `--gfpgan_dir` argument with `dream.py` to set a

|

||||

custom path to your GFPGAN directory. _There are other GFPGAN related

|

||||

boot arguments if you wish to customize further._

|

||||

|

||||

You can install **Real-ESRGAN** by typing the following command.

|

||||

|

||||

```

|

||||

pip install realesrgan

|

||||

```

|

||||

|

||||

**Note: Internet connection needed:**

|

||||

Users whose GPU machines are isolated from the Internet (e.g. on a

|

||||

University cluster) should be aware that the first time you run

|

||||

dream.py with GFPGAN and Real-ESRGAN turned on, it will try to

|

||||

download model files from the Internet. To rectify this, you may run

|

||||

`python3 scripts/preload_models.py` after you have installed GFPGAN

|

||||

and all its dependencies.

|

||||

|

||||

**Usage**

|

||||

|

||||

You will now have access to two new prompt arguments.

|

||||

|

||||

**Upscaling**

|

||||

|

||||

`-U : <upscaling_factor> <upscaling_strength>`

|

||||

|

||||

The upscaling prompt argument takes two values. The first value is a

|

||||

scaling factor and should be set to either `2` or `4` only. This will

|

||||

either scale the image 2x or 4x respectively using different models.

|

||||

|

||||

You can set the scaling strength between `0` and `1.0` to control

|

||||

the intensity of the scaling. This is handy because AI upscalers

|

||||

generally tend to smooth out texture details. If you wish to retain

|

||||

some of those for natural looking results, we recommend using values

|

||||

between `0.5` and `0.8`.

|

||||

|

||||

If you do not explicitly specify an upscaling_strength, it will

|

||||

default to 0.75.

|

||||

|

||||

**Face Restoration**

|

||||

|

||||

`-G : <gfpgan_strength>`

|

||||

|

||||

This prompt argument controls the strength of the face restoration

|

||||

that is being applied. Similar to upscaling, values between `0.5` and `0.8` are recommended.

|

||||

|

||||

You can use either one or both without any conflicts. In cases where

|

||||

you use both, the image will be first upscaled and then the face

|

||||

restoration process will be executed to ensure you get the highest

|

||||

quality facial features.

|

||||

|

||||

`--save_orig`

|

||||

|

||||

When you use either `-U` or `-G`, the final result you get is upscaled

|

||||

or face modified. If you want to save the original Stable Diffusion

|

||||

generation, you can use the `--save_orig` prompt argument to save the

|

||||

original unaffected version too.

|

||||

|

||||

**Example Usage**

|

||||

|

||||

```

|

||||

dream> superman dancing with a panda bear -U 2 0.6 -G 0.4

|

||||

```

|

||||

|

||||

This also works with img2img:

|

||||

|

||||

```

|

||||

dream> a man wearing a pineapple hat -I path/to/your/file.png -U 2 0.5 -G 0.6

|

||||

```

|

||||

- At least 6 GB of free disk space for the machine learning model, Python, and all its dependencies.

|

||||

|

||||

**Note**

|

||||

|

||||

GFPGAN and Real-ESRGAN are both memory intensive. In order to avoid

|

||||

crashes and memory overloads during the Stable Diffusion process,

|

||||

these effects are applied after Stable Diffusion has completed its

|

||||

work.

|

||||

If you have an Nvidia 10xx series card (e.g. the 1080ti), please

|

||||

run the dream script in full-precision mode as shown below.

|

||||

|

||||

In single image generations, you will see the output right away but

|

||||

when you are using multiple iterations, the images will first be

|

||||

generated and then upscaled and face restored after that process is

|

||||

complete. While the image generation is taking place, you will still

|

||||

be able to preview the base images.

|

||||

Similarly, specify full-precision mode on Apple M1 hardware.

|

||||

|

||||

If you wish to stop during the image generation but want to upscale or

|

||||

face restore a particular generated image, pass it again with the same

|

||||

prompt and generated seed along with the `-U` and `-G` prompt

|

||||

arguments to perform those actions.

|

||||

|

||||

## Google Colab

|

||||

|

||||

Stable Diffusion AI Notebook: <a href="https://colab.research.google.com/github/lstein/stable-diffusion/blob/main/Stable_Diffusion_AI_Notebook.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a> <br>

|

||||

Open and follow instructions to use an isolated environment running Dream.<br>

|

||||

|

||||

Output example:

|

||||

|

||||

|

||||

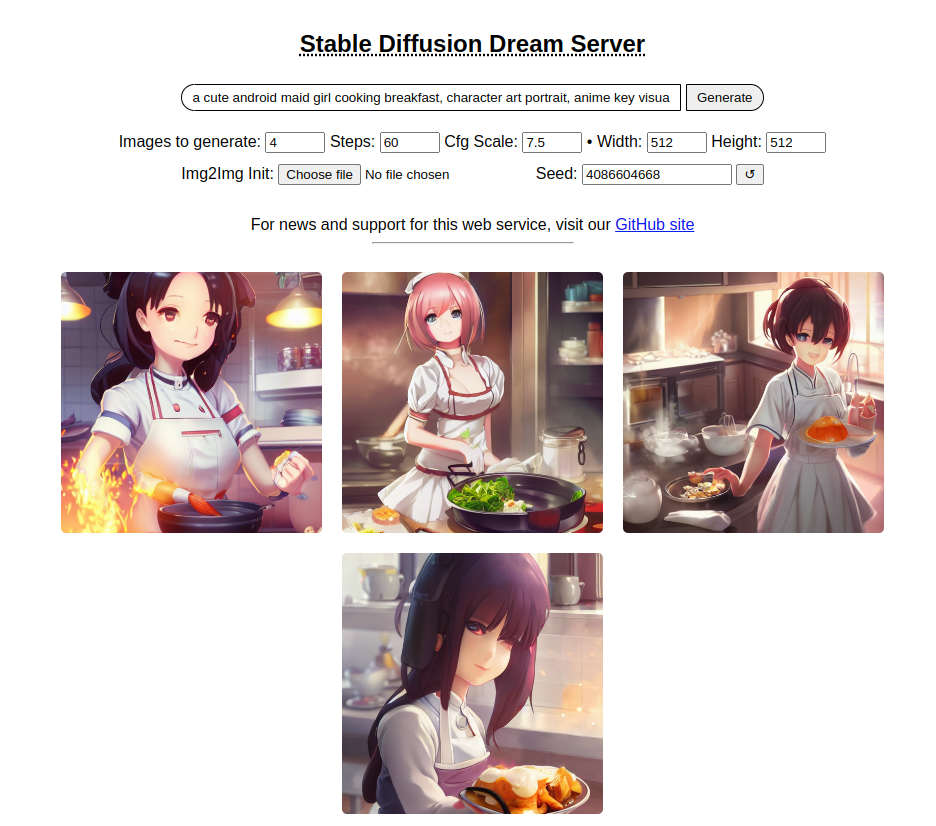

## Barebones Web Server

|

||||

|

||||

As of version 1.10, this distribution comes with a bare bones web

|

||||

server (see screenshot). To use it, run the _dream.py_ script by

|

||||

adding the **--web** option.

|

||||

To run in full-precision mode, start `dream.py` with the

|

||||

`--full_precision` flag:

|

||||

|

||||

```

|

||||

(ldm) ~/stable-diffusion$ python3 scripts/dream.py --web

|

||||

(ldm) ~/stable-diffusion$ python scripts/dream.py --full_precision

|

||||

```

|

||||

|

||||

You can then connect to the server by pointing your web browser at

|

||||

http://localhost:9090, or to the network name or IP address of the server.

|

||||

# Features

|

||||

|

||||

Kudos to [Tesseract Cat](https://github.com/TesseractCat) for

|

||||

contributing this code, and to [dagf2101](https://github.com/dagf2101)

|

||||

for refining it.

|

||||

## **Major Features**

|

||||

|

||||

|

||||

- ## [Interactive Command Line Interface](docs/features/CLI.md)

|

||||

|

||||

## Reading Prompts from a File

|

||||

- ## [Image To Image](docs/features/IMG2IMG.md)

|

||||

|

||||

You can automate dream.py by providing a text file with the prompts

|

||||

you want to run, one line per prompt. The text file must be composed

|

||||

with a text editor (e.g. Notepad) and not a word processor. Each line

|

||||

should look like what you would type at the dream> prompt:

|

||||

- ## [Inpainting Support](docs/features/INPAINTING.md)

|

||||

|

||||

```

|

||||

a beautiful sunny day in the park, children playing -n4 -C10

|

||||

stormy weather on a mountain top, goats grazing -s100

|

||||

innovative packaging for a squid's dinner -S137038382

|

||||

```

|

||||

- ## [GFPGAN and Real-ESRGAN Support](docs/features/UPSCALE.md)

|

||||

|

||||

Then pass this file's name to dream.py when you invoke it:

|

||||

- ## [Seamless Tiling](docs/features/OTHER.md#seamless-tiling)

|

||||

|

||||

```

|

||||

(ldm) ~/stable-diffusion$ python3 scripts/dream.py --from_file "path/to/prompts.txt"

|

||||

```

|

||||

- ## [Google Colab](docs/features/OTHER.md#google-colab)

|

||||

|

||||

You may read a series of prompts from standard input by providing a filename of "-":

|

||||

- ## [Web Server](docs/features/WEB.md)

|

||||

|

||||

```

|

||||

(ldm) ~/stable-diffusion$ echo "a beautiful day" | python3 scripts/dream.py --from_file -

|

||||

```
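
The prompt-file convention described above (one prompt per line, with "-" meaning standard input) can be sketched in a few lines of Python. This is an illustration only, not the code in `dream.py`; the helper names `open_prompt_source` and `read_prompts` are hypothetical, and skipping blank lines is an assumption.

```python
import sys
from typing import Iterator, TextIO


def open_prompt_source(name: str) -> TextIO:
    """Return standard input for "-", otherwise open the named text file."""
    if name == "-":
        return sys.stdin
    return open(name, "r", encoding="utf-8")


def read_prompts(stream: TextIO) -> Iterator[str]:
    """Yield one prompt per non-empty line, with surrounding whitespace removed."""
    for line in stream:
        prompt = line.strip()
        if prompt:  # assumption: blank lines are ignored
            yield prompt


if __name__ == "__main__":
    # Usage: python read_prompts.py path/to/prompts.txt   (or "-" for stdin)
    source = open_prompt_source(sys.argv[1] if len(sys.argv) > 1 else "-")
    for prompt in read_prompts(source):
        print(prompt)
```

Each yielded string is what you would otherwise type at the `dream>` prompt, switches included.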

|

||||

- ## [Reading Prompts From File](docs/features/OTHER.md#reading-prompts-from-a-file)

|

||||

|

||||

## Shortcut for reusing seeds from the previous command

|

||||

- ## [Shortcut: Reusing Seeds](docs/features/OTHER.md#shortcuts-reusing-seeds)

|

||||

|

||||

Since it is so common to reuse seeds while refining a prompt, there is

|

||||

now a shortcut as of version 1.11. Provide a **-S** (or **--seed**)

|

||||

switch of -1 to use the seed of the most recent image generated. If

|

||||

you produced multiple images with the **-n** switch, then you can go

|

||||

back further using -2, -3, etc. up to the first image generated by the

|

||||

previous command. Sorry, but you can't go back further than one

|

||||

command.

|

||||

- ## [Weighted Prompts](docs/features/OTHER.md#weighted-prompts)

|

||||

|

||||

Here's an example of using this to do a quick refinement. It also

|

||||

illustrates using the new **-G** switch to turn on upscaling and

|

||||

face enhancement (see previous section):

|

||||

- ## [Variations](docs/features/VARIATIONS.md)

|

||||

|

||||

```

|

||||

dream> a cute child playing hopscotch -G0.5

|

||||

[...]

|

||||

outputs/img-samples/000039.3498014304.png: "a cute child playing hopscotch" -s50 -W512 -H512 -C7.5 -mk_lms -S3498014304

|

||||

- ## [Personalizing Text-to-Image Generation](docs/features/TEXTUAL_INVERSION.md)

|

||||

|

||||

# I wonder what it will look like if I bump up the steps and set facial enhancement to full strength?

|

||||

dream> a cute child playing hopscotch -G1.0 -s100 -S -1

|

||||

reusing previous seed 3498014304

|

||||

[...]

|

||||

outputs/img-samples/000040.3498014304.png: "a cute child playing hopscotch" -G1.0 -s100 -W512 -H512 -C7.5 -mk_lms -S3498014304

|

||||

```
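
The negative-seed shortcut can be modelled as indexing backwards into the list of seeds produced by the previous command. The sketch below assumes exactly that and nothing more; `resolve_seed` and `previous_seeds` are hypothetical names, not identifiers taken from the script.

```python
from typing import List


def resolve_seed(requested: int, previous_seeds: List[int]) -> int:
    """Map a -S value to a concrete seed.

    Non-negative values are used as given. -1 selects the most recent seed
    of the previous command, -2 the one before it, and so on.
    """
    if requested >= 0:
        return requested
    steps_back = -requested
    if steps_back > len(previous_seeds):
        raise IndexError(
            f"cannot go back {steps_back} images; "
            f"the previous command produced only {len(previous_seeds)}"
        )
    return previous_seeds[-steps_back]


if __name__ == "__main__":
    seeds_from_last_command = [3498014304]
    print("reusing previous seed", resolve_seed(-1, seeds_from_last_command))
```

Raising an error when the request reaches past the first image mirrors the stated limit that you cannot go back further than one command.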

|

||||

- ## [Simplified API for text to image generation](docs/features/OTHER.md#simplified-api)

|

||||

|

||||

## Weighted Prompts

|

||||

## **Other Features**

|

||||

|

||||

You may weight different sections of the prompt to tell the sampler to attach different levels of

|

||||

priority to them, by adding :(number) to the end of the section you wish to up- or downweight.

|

||||

For example consider this prompt:

|

||||

- ### [Creating Transparent Regions for Inpainting](docs/features/INPAINTING.md#creating-transparent-regions-for-inpainting)

|

||||

|

||||

```

|

||||

tabby cat:0.25 white duck:0.75 hybrid

|

||||

```

|

||||

|

||||

This will tell the sampler to invest 25% of its effort on the tabby

|

||||

cat aspect of the image and 75% on the white duck aspect

|

||||

(surprisingly, this example actually works). The prompt weights can

|

||||

use any combination of integers and floating point numbers, and they

|

||||

do not need to add up to 1.
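
To make the weighting rule concrete, here is a minimal sketch of how a prompt such as the one above could be split into weighted sections and the weights rescaled to sum to 1. It is an illustration rather than the fork's parser: the function names are hypothetical, and giving unweighted trailing text a weight of 1.0 is an assumption.

```python
import re
from typing import List, Tuple

# A weight is a colon followed by an integer or floating point number.
_WEIGHT = re.compile(r":\s*(\d+(?:\.\d+)?)")


def parse_weighted_prompt(prompt: str) -> List[Tuple[str, float]]:
    """Split 'text:weight text:weight ...' into (text, weight) pairs."""
    # re.split with one capture group alternates text, weight, text, weight, ...
    parts = _WEIGHT.split(prompt)
    texts, weights = parts[0::2], parts[1::2]
    sections = []
    for i, text in enumerate(texts):
        text = text.strip()
        if not text:
            continue
        # Assumption: text with no explicit weight gets 1.0.
        weight = float(weights[i]) if i < len(weights) else 1.0
        sections.append((text, weight))
    return sections


def normalize(sections: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Rescale weights so they sum to 1, since they need not do so on input."""
    total = sum(weight for _, weight in sections)
    if total == 0:
        raise ValueError("at least one weight must be non-zero")
    return [(text, weight / total) for text, weight in sections]


if __name__ == "__main__":
    for text, weight in normalize(parse_weighted_prompt("tabby cat:0.25 white duck:0.75 hybrid")):
        print(f"{weight:.3f}  {text}")
```

Normalizing after parsing is what lets users write any mix of integers and fractions without worrying about the total.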

|

||||

|

||||

## Personalizing Text-to-Image Generation

|

||||

|

||||

You may personalize the generated images to provide your own styles or objects by training a new LDM checkpoint

|

||||

and introducing a new vocabulary to the fixed model.

|

||||

|

||||

To train, prepare a folder that contains images sized at 512x512 and execute the following:

|

||||

|

||||

|

||||

WINDOWS: As the default backend is not available on Windows, if you're using that platform, set the environment variable `PL_TORCH_DISTRIBUTED_BACKEND=gloo`

|

||||

|

||||

```

|

||||

(ldm) ~/stable-diffusion$ python3 ./main.py --base ./configs/stable-diffusion/v1-finetune.yaml \

|

||||

-t \

|

||||

--actual_resume ./models/ldm/stable-diffusion-v1/model.ckpt \

|

||||

-n my_cat \

|

||||

--gpus 0, \

|

||||

--data_root D:/textual-inversion/my_cat \

|

||||

--init_word 'cat'

|

||||

```

|

||||

|

||||

During the training process, files will be created in /logs/[project][time][project]/

|

||||

where you can see the process.

|

||||

|

||||

conditioning\* contains the training prompts

|

||||

inputs, reconstruction the input images for the training epoch

|

||||

samples, samples scaled for a sample of the prompt and one with the init word provided

|

||||

|

||||

On a RTX3090, the process for SD will take ~1h @1.6 iterations/sec.

|

||||

|

||||

Note: According to the associated paper, the optimal number of images

|

||||

is 3-5. Your model may not converge if you use more images than that.

|

||||

|

||||

Training will run indefinitely, but you may wish to stop it before the

|

||||

heat death of the universe, when you find a low loss epoch or around

|

||||

~5000 iterations.

|

||||

|

||||

Once the model is trained, specify the trained .pt file when starting

|

||||

dream using

|

||||

|

||||

```

|

||||

(ldm) ~/stable-diffusion$ python3 ./scripts/dream.py --embedding_path /path/to/embedding.pt --full_precision

|

||||

```

|

||||

|

||||

Then, to utilize your subject at the dream prompt

|

||||

|

||||

```

|

||||

dream> "a photo of *"

|

||||

```

|

||||

|

||||

This also works with img2img:

|

||||

|

||||

```

|

||||

dream> "waterfall and rainbow in the style of *" --init_img=./init-images/crude_drawing.png --strength=0.5 -s100 -n4

|

||||

```

|

||||

|

||||

It's also possible to train multiple tokens (modify the placeholder string in configs/stable-diffusion/v1-finetune.yaml) and combine LDM checkpoints using:

|

||||

|

||||

```

|

||||

(ldm) ~/stable-diffusion$ python3 ./scripts/merge_embeddings.py \

|

||||

--manager_ckpts /path/to/first/embedding.pt /path/to/second/embedding.pt [...] \

|

||||

--output_path /path/to/output/embedding.pt

|

||||

```

|

||||

|

||||

Credit goes to @rinongal and the repository located at

|

||||

https://github.com/rinongal/textual_inversion Please see the

|

||||

repository and associated paper for details and limitations.

|

||||

- ### [Preload Models](docs/features/OTHER.md#preload-models)

|

||||

|

||||

# Latest Changes

|

||||

|

||||

- v1.13 (3 September 2022)

|

||||

- v1.14 (11 September 2022)

|

||||

|

||||

- Support image variations (see [VARIATIONS](VARIATIONS.md)) ([Kevin Gibbons](https://github.com/bakkot) and many contributors and reviewers)

|

||||

- Memory optimizations for small-RAM cards. 512x512 now possible on 4 GB GPUs.

|

||||

- Full support for Apple hardware with M1 or M2 chips.

|

||||

- Add "seamless mode" for circular tiling of image. Generates beautiful effects. ([prixt](https://github.com/prixt)).

|

||||

- Inpainting support.

|

||||

- Improved web server GUI.

|

||||

- Lots of code and documentation cleanups.

|

||||

|

||||

- v1.13 (3 September 2022)

|

||||

|

||||

- Support image variations (see [VARIATIONS](docs/features/VARIATIONS.md)) ([Kevin Gibbons](https://github.com/bakkot) and many contributors and reviewers)

|

||||

- Supports a Google Colab notebook for a standalone server running on Google hardware [Arturo Mendivil](https://github.com/artmen1516)

|

||||

- WebUI supports GFPGAN/ESRGAN facial reconstruction and upscaling [Kevin Gibbons](https://github.com/bakkot)

|

||||

- WebUI supports incremental display of in-progress images during generation [Kevin Gibbons](https://github.com/bakkot)

|

||||

@ -413,369 +134,22 @@ repository and associated paper for details and limitations.

|

||||

- Works on M1 Apple hardware.

|

||||

- Multiple bug fixes.

|

||||

|

||||

For older changelogs, please visit **[CHANGELOGS](CHANGELOG.md)**.

|

||||

|

||||

# Installation

There are separate installation walkthroughs for [Linux](#linux), [Windows](#windows) and [Macintosh](#Macintosh)

## Linux

1. You will need to install the following prerequisites if they are not already available. Use your
operating system's preferred installer.

- Python (version 3.8.5 recommended; higher may work)
- git

2. Install the Python Anaconda environment manager.

```
~$ wget https://repo.anaconda.com/archive/Anaconda3-2022.05-Linux-x86_64.sh
~$ chmod +x Anaconda3-2022.05-Linux-x86_64.sh
~$ ./Anaconda3-2022.05-Linux-x86_64.sh
```

After installing Anaconda, you should log out of your system and log back in. If the installation
worked, your command prompt will be prefixed by the name of the current Anaconda environment, "(base)".

3. Copy the stable-diffusion source code from GitHub:

```
(base) ~$ git clone https://github.com/lstein/stable-diffusion.git
```

This will create a stable-diffusion folder where you will follow the rest of the steps.

4. Enter the newly-created stable-diffusion folder. From this step forward make sure that you are working in the stable-diffusion directory!

```
(base) ~$ cd stable-diffusion
(base) ~/stable-diffusion$
```

5. Use Anaconda to copy the necessary Python packages, create a new Python environment named "ldm",
and activate the environment.

```
(base) ~/stable-diffusion$ conda env create -f environment.yaml
(base) ~/stable-diffusion$ conda activate ldm
(ldm) ~/stable-diffusion$
```

After these steps, your command prompt will be prefixed by "(ldm)" as shown above.

6. Load a couple of small machine-learning models required by stable diffusion:

```
(ldm) ~/stable-diffusion$ python3 scripts/preload_models.py
```

Note that this step is necessary because I modified the original
just-in-time model loading scheme to allow the script to work on GPU
machines that are not internet connected. See [Workaround for machines with limited internet connectivity](#workaround-for-machines-with-limited-internet-connectivity).

7. Now you need to install the weights for the stable diffusion model.

For running with the released weights, you will first need to set up an account with Hugging Face (https://huggingface.co).
Use your credentials to log in, and then point your browser at https://huggingface.co/CompVis/stable-diffusion-v-1-4-original.
You may be asked to sign a license agreement at this point.

Click on "Files and versions" near the top of the page, and then click on the file named "sd-v1-4.ckpt". You'll be taken
to a page that prompts you to click the "download" link. Save the file somewhere safe on your local machine.

Now run the following commands from within the stable-diffusion directory. This will create a symbolic
link from the stable-diffusion model.ckpt file to the true location of the sd-v1-4.ckpt file.

```
(ldm) ~/stable-diffusion$ mkdir -p models/ldm/stable-diffusion-v1
(ldm) ~/stable-diffusion$ ln -sf /path/to/sd-v1-4.ckpt models/ldm/stable-diffusion-v1/model.ckpt
```

8. Start generating images!

```
# for the pre-release weights use the -l or --laion400m switch
(ldm) ~/stable-diffusion$ python3 scripts/dream.py -l

# for the post-release weights do not use the switch
(ldm) ~/stable-diffusion$ python3 scripts/dream.py

# for additional configuration switches and arguments, use -h or --help
(ldm) ~/stable-diffusion$ python3 scripts/dream.py -h
```

9. Subsequently, to relaunch the script, be sure to run "conda activate ldm" (step 5, second command), enter the "stable-diffusion"
directory, and then launch the dream script (step 8). If you forget to activate the ldm environment, the script will fail with multiple ModuleNotFound errors.
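
The `mkdir -p` and `ln -sf` commands from step 7 can also be expressed in Python, which may be convenient when scripting the setup. This is a sketch under stated assumptions rather than part of the distribution: `link_model` is a hypothetical helper, and creating symbolic links may require extra privileges on some platforms.

```python
from pathlib import Path


def link_model(weights_file: str, repo_root: str = ".") -> Path:
    """Point models/ldm/stable-diffusion-v1/model.ckpt at the downloaded weights."""
    target_dir = Path(repo_root) / "models" / "ldm" / "stable-diffusion-v1"
    target_dir.mkdir(parents=True, exist_ok=True)  # equivalent of mkdir -p
    link = target_dir / "model.ckpt"
    if link.is_symlink() or link.exists():
        link.unlink()  # equivalent of the -f in ln -sf
    link.symlink_to(Path(weights_file).resolve())
    return link


if __name__ == "__main__":
    # /path/to/sd-v1-4.ckpt is the placeholder used in the instructions above.
    print(link_model("/path/to/sd-v1-4.ckpt"))
```

Resolving the weights path first keeps the link valid even if the helper is called with a relative path.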


### Updating to newer versions of the script

This distribution is changing rapidly. If you used the "git clone" method (step 3) to download the stable-diffusion directory, then to update to the latest and greatest version, launch the Anaconda window, enter "stable-diffusion", and type:

```
(ldm) ~/stable-diffusion$ git pull
```

This will bring your local copy into sync with the remote one.

## Windows

### Notebook install (semi-automated)

We have a
[Jupyter notebook](https://github.com/lstein/stable-diffusion/blob/main/Stable-Diffusion-local-Windows.ipynb)
with cell-by-cell installation steps. It will download the code in this repo as
one of the steps, so instead of cloning this repo, simply download the notebook
from the link above and load it up in VSCode (with the
appropriate extensions installed)/Jupyter/JupyterLab and start running the cells one-by-one.

Note that you will need NVIDIA drivers, Python 3.10, and Git installed
beforehand - simplified
[step-by-step instructions](https://github.com/lstein/stable-diffusion/wiki/Easy-peasy-Windows-install)
are available in the wiki (you'll only need steps 1, 2, & 3).

### Manual installs

#### pip

See
[Easy-peasy Windows install](https://github.com/lstein/stable-diffusion/wiki/Easy-peasy-Windows-install)
in the wiki.

#### Conda

1. Install Anaconda3 (miniconda3 version) from here: https://docs.anaconda.com/anaconda/install/windows/

2. Install Git from here: https://git-scm.com/download/win

3. Launch Anaconda from the Windows Start menu. This will bring up a command window. Type all the remaining commands in this window.

4. Run the command:

```
git clone https://github.com/lstein/stable-diffusion.git
```

This will create a stable-diffusion folder where you will follow the rest of the steps.

5. Enter the newly-created stable-diffusion folder. From this step forward make sure that you are working in the stable-diffusion directory!

```
cd stable-diffusion
```

6. Run the following two commands: