Mirror of https://github.com/invoke-ai/InvokeAI (synced 2024-08-30 20:32:17 +00:00)

Clean up communityNodes.md (#4870)

* Clean up communityNodes.md
* Update communityNodes.md

committed by psychedelicious

parent 17be3e1234

commit a76e58017c

@ -8,28 +8,42 @@ To download a node, simply download the `.py` node file from the link and add it

To use a community workflow, download the the `.json` node graph file and load it into Invoke AI via the **Load Workflow** button in the Workflow Editor.

--------------------------------

- Community Nodes
    + [Depth Map from Wavefront OBJ](#depth-map-from-wavefront-obj)
    + [Film Grain](#film-grain)
    + [Generative Grammar-Based Prompt Nodes](#generative-grammar-based-prompt-nodes)
    + [GPT2RandomPromptMaker](#gpt2randompromptmaker)
    + [Grid to Gif](#grid-to-gif)
    + [Halftone](#halftone)
    + [Ideal Size](#ideal-size)
    + [Image and Mask Composition Pack](#image-and-mask-composition-pack)
    + [Image to Character Art Image Nodes](#image-to-character-art-image-nodes)
    + [Image Picker](#image-picker)
    + [Load Video Frame](#load-video-frame)
    + [Make 3D](#make-3d)
    + [Oobabooga](#oobabooga)
    + [Prompt Tools](#prompt-tools)
    + [Retroize](#retroize)
    + [Size Stepper Nodes](#size-stepper-nodes)
    + [Text font to Image](#text-font-to-image)
    + [Thresholding](#thresholding)
    + [XY Image to Grid and Images to Grids nodes](#xy-image-to-grid-and-images-to-grids-nodes)
- [Example Node Template](#example-node-template)
- [Disclaimer](#disclaimer)
- [Help](#help)

--------------------------------

### Make 3D

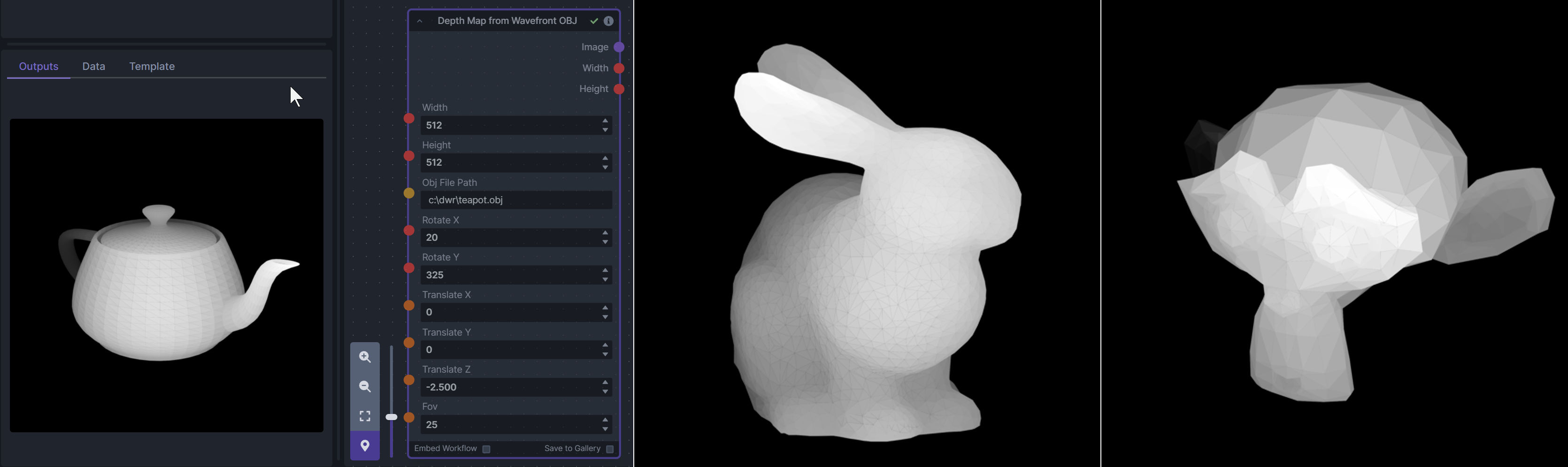

### Depth Map from Wavefront OBJ

**Description:** Create compelling 3D stereo images from 2D originals.

**Description:** Render depth maps from Wavefront .obj files (triangulated) using this simple 3D renderer utilizing numpy and matplotlib to compute and color the scene. There are simple parameters to change the FOV, camera position, and model orientation.

**Node Link:** [https://gitlab.com/srcrr/shift3d/-/raw/main/make3d.py](https://gitlab.com/srcrr/shift3d)

To be imported, an .obj must use triangulated meshes, so make sure to enable that option if exporting from a 3D modeling program. This renderer makes each triangle a solid color based on its average depth, so it will cause anomalies if your .obj has large triangles. In Blender, the Remesh modifier can be helpful to subdivide a mesh into small pieces that work well given these limitations.
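The flat shading described for Depth Map from Wavefront OBJ can be sketched roughly as follows: each triangle receives one gray level derived from the average depth of its three vertices. This is an illustration under stated assumptions, not the node's code. The names `parse_obj` and `triangle_shades` are hypothetical, depth is taken to be the raw z coordinate with larger z drawn brighter, camera and FOV handling are left out, and plain Python stands in for the numpy and matplotlib the node uses.

```python
def parse_obj(text):
    """Read vertices and triangular faces from Wavefront OBJ text.

    Only `v` and `f` records are handled; relative (negative) indices are not.
    """
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(x) for x in parts[1:4]))
        elif parts[0] == "f":
            # Face entries look like "7", "7/1" or "7/1/3"; keep the vertex index.
            indices = [int(p.split("/")[0]) - 1 for p in parts[1:]]
            if len(indices) != 3:
                raise ValueError("mesh must be triangulated")
            faces.append(tuple(indices))
    return vertices, faces


def triangle_shades(vertices, faces):
    """Return one 0-255 gray level per triangle from its average z value."""
    depths = [sum(vertices[i][2] for i in face) / 3.0 for face in faces]
    lowest, highest = min(depths), max(depths)
    span = (highest - lowest) or 1.0  # avoid dividing by zero on flat scenes
    return [round(255 * (d - lowest) / span) for d in depths]
```

Because every triangle collapses to a single value, a large triangle that spans a wide depth range loses its gradient, which is why subdividing the mesh helps.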

**Example Node Graph:** https://gitlab.com/srcrr/shift3d/-/raw/main/example-workflow.json?ref_type=heads&inline=false

**Node Link:** https://github.com/dwringer/depth-from-obj-node

**Output Examples**

{: style="height:512px;width:512px"}

{: style="height:512px;width:512px"}

--------------------------------

### Ideal Size

**Description:** This node calculates an ideal image size for a first pass of a multi-pass upscaling. The aim is to avoid duplication that results from choosing a size larger than the model is capable of.

**Node Link:** https://github.com/JPPhoto/ideal-size-node

**Example Usage:**

</br><img src="https://raw.githubusercontent.com/dwringer/depth-from-obj-node/main/depth_from_obj_usage.jpg" width="500" />

--------------------------------

### Film Grain

@ -39,68 +53,19 @@ To use a community workflow, download the the `.json` node graph file and load i

**Node Link:** https://github.com/JPPhoto/film-grain-node

--------------------------------

### Image Picker

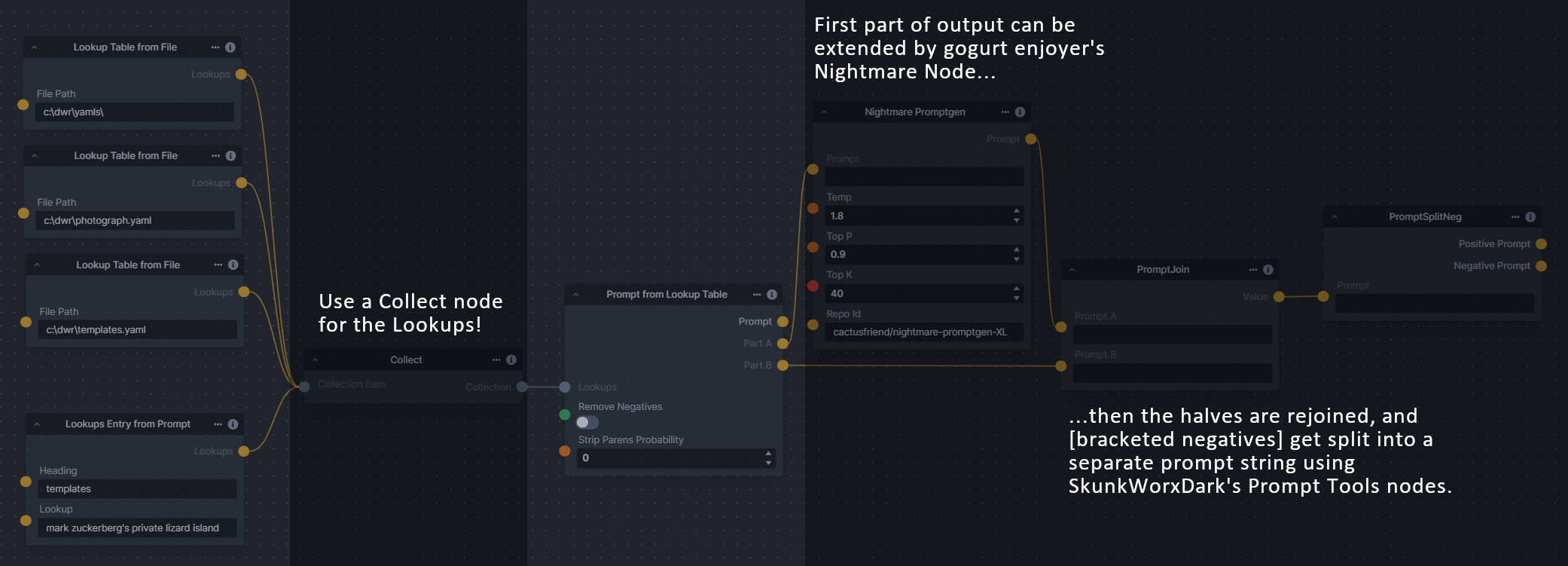

### Generative Grammar-Based Prompt Nodes

**Description:** This InvokeAI node takes in a collection of images and randomly chooses one. This can be useful when you have a number of poses to choose from for a ControlNet node, or a number of input images for another purpose.

**Description:** This set of 3 nodes generates prompts from simple user-defined grammar rules (loaded from custom files - examples provided below). The prompts are made by recursively expanding a special template string, replacing nonterminal "parts-of-speech" until no nonterminal terms remain in the string.

**Node Link:** https://github.com/JPPhoto/image-picker-node

This includes 3 Nodes:

- *Lookup Table from File* - loads a YAML file "prompt" section (or of a whole folder of YAML's) into a JSON-ified dictionary (Lookups output)

- *Lookups Entry from Prompt* - places a single entry in a new Lookups output under the specified heading

- *Prompt from Lookup Table* - uses a Collection of Lookups as grammar rules from which to randomly generate prompts.
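The expansion that the Generative Grammar-Based Prompt Nodes description refers to can be sketched as below: nonterminals in a template are replaced by randomly chosen rules until none remain. This is only a sketch under assumptions. The `{name}` placeholder syntax, the function name `expand`, and the dictionary layout of the lookups are hypothetical and may differ from what the nodes actually use.

```python
import random
import re

# Hypothetical nonterminal syntax: a word wrapped in curly braces.
NONTERMINAL = re.compile(r"\{(\w+)\}")


def expand(template, lookups, rng=None, max_expansions=100):
    """Replace nonterminals one at a time until the string has none left."""
    rng = rng or random.Random()
    for _ in range(max_expansions):
        match = NONTERMINAL.search(template)
        if match is None:
            return template  # only terminal text remains
        options = lookups[match.group(1)]  # KeyError for an undefined rule
        replacement = rng.choice(options)
        template = template[:match.start()] + replacement + template[match.end():]
    raise RecursionError("grammar did not terminate; check for cyclic rules")
```

A call such as `expand("photo of {subject}", lookups)` keeps substituting until the result contains no braces; the expansion cap guards against rules that refer to themselves.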

--------------------------------

### Thresholding

**Node Link:** https://github.com/dwringer/generative-grammar-prompt-nodes

**Description:** This node generates masks for highlights, midtones, and shadows given an input image. You can optionally specify a blur for the lookup table used in making those masks from the source image.

**Node Link:** https://github.com/JPPhoto/thresholding-node

**Examples**

Input:

{: style="height:512px;width:512px"}

Highlights/Midtones/Shadows:

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/727021c1-36ff-4ec8-90c8-105e00de986d" style="width: 30%" />

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0b721bfc-f051-404e-b905-2f16b824ddfe" style="width: 30%" />

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/04c1297f-1c88-42b6-a7df-dd090b976286" style="width: 30%" />

Highlights/Midtones/Shadows (with LUT blur enabled):

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/19aa718a-70c1-4668-8169-d68f4bd13771" style="width: 30%" />

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0a440e43-697f-4d17-82ee-f287467df0a5" style="width: 30%" />

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0701fd0f-2ca7-4fe2-8613-2b52547bafce" style="width: 30%" />

--------------------------------

### Halftone

**Description**: Halftone converts the source image to grayscale and then performs halftoning. CMYK Halftone converts the image to CMYK and applies a per-channel halftoning to make the source image look like a magazine or newspaper. For both nodes, you can specify angles and halftone dot spacing.

**Node Link:** https://github.com/JPPhoto/halftone-node

**Example**

Input:

{: style="height:512px;width:512px"}

Halftone Output:

{: style="height:512px;width:512px"}

CMYK Halftone Output:

{: style="height:512px;width:512px"}

--------------------------------

### Retroize

**Description:** Retroize is a collection of nodes for InvokeAI to "Retroize" images. Any image can be given a fresh coat of retro paint with these nodes, either from your gallery or from within the graph itself. It includes nodes to pixelize, quantize, palettize, and ditherize images; as well as to retrieve palettes from existing images.

**Node Link:** https://github.com/Ar7ific1al/invokeai-retroizeinode/

**Retroize Output Examples**

**Example Usage:**

</br><img src="https://raw.githubusercontent.com/dwringer/generative-grammar-prompt-nodes/main/lookuptables_usage.jpg" width="500" />

--------------------------------

### GPT2RandomPromptMaker

@ -113,76 +78,49 @@ CMYK Halftone Output:

Generated Prompt: An enchanted weapon will be usable by any character regardless of their alignment.

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/8496ba09-bcdd-4ff7-8076-ff213b6a1e4c" width="200" />

--------------------------------

### Load Video Frame

### Grid to Gif

**Description:** This is a video frame image provider + indexer/video creation nodes for hooking up to iterators and ranges and ControlNets and such for invokeAI node experimentation. Think animation + ControlNet outputs.

**Description:** One node that turns a grid image into an image collection, one node that turns an image collection into a gif.
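For the Grid to Gif description, splitting a grid image into an image collection amounts to computing one crop rectangle per cell. The sketch below shows that arithmetic only. The function name `grid_boxes` is hypothetical, and it assumes equally sized cells with no padding or borders, which the actual node may handle differently.

```python
def grid_boxes(width, height, rows, cols):
    """Return crop boxes, row by row, for an evenly divided grid image.

    Each box is (left, upper, right, lower), the order that Pillow's
    Image.crop expects.
    """
    if rows < 1 or cols < 1:
        raise ValueError("rows and cols must be at least 1")
    cell_w, cell_h = width // cols, height // rows
    return [
        (col * cell_w, row * cell_h, (col + 1) * cell_w, (row + 1) * cell_h)
        for row in range(rows)
        for col in range(cols)
    ]
```

Cropping the grid with each box in turn yields the frames in reading order, which is the order a gif would normally play them in.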

**Node Link:** https://github.com/helix4u/load_video_frame

**Node Link:** https://github.com/mildmisery/invokeai-GridToGifNode/blob/main/GridToGif.py

**Example Node Graph:** https://github.com/helix4u/load_video_frame/blob/main/Example_Workflow.json

**Example Node Graph:** https://github.com/mildmisery/invokeai-GridToGifNode/blob/main/Grid%20to%20Gif%20Example%20Workflow.json

**Output Example:**

**Output Examples**

[Full mp4 of Example Output test.mp4](https://github.com/helix4u/load_video_frame/blob/main/test.mp4)

<img src="https://raw.githubusercontent.com/mildmisery/invokeai-GridToGifNode/main/input.png" width="300" />

<img src="https://raw.githubusercontent.com/mildmisery/invokeai-GridToGifNode/main/output.gif" width="300" />

--------------------------------

### Halftone

### Oobabooga

**Description**: Halftone converts the source image to grayscale and then performs halftoning. CMYK Halftone converts the image to CMYK and applies a per-channel halftoning to make the source image look like a magazine or newspaper. For both nodes, you can specify angles and halftone dot spacing.

**Description:** asks a local LLM running in Oobabooga's Text-Generation-Webui to write a prompt based on the user input.

**Node Link:** https://github.com/JPPhoto/halftone-node

**Link:** https://github.com/sammyf/oobabooga-node

**Example**

Input:

**Example:**

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/fd5efb9f-4355-4409-a1c2-c1ca99e0cab4" width="300" />

"describe a new mystical creature in its natural environment"

Halftone Output:

*can return*

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/7e606f29-e68f-4d46-b3d5-97f799a4ec2f" width="300" />

"The mystical creature I am describing to you is called the "Glimmerwing". It is a majestic, iridescent being that inhabits the depths of the most enchanted forests and glimmering lakes. Its body is covered in shimmering scales that reflect every color of the rainbow, and it has delicate, translucent wings that sparkle like diamonds in the sunlight. The Glimmerwing's home is a crystal-clear lake, surrounded by towering trees with leaves that shimmer like jewels. In this serene environment, the Glimmerwing spends its days swimming gracefully through the water, chasing schools of glittering fish and playing with the gentle ripples of the lake's surface.

As the sun sets, the Glimmerwing perches on a branch of one of the trees, spreading its wings to catch the last rays of light. The creature's scales glow softly, casting a rainbow of colors across the forest floor. The Glimmerwing sings a haunting melody, its voice echoing through the stillness of the night air. Its song is said to have the power to heal the sick and bring peace to troubled souls. Those who are lucky enough to hear the Glimmerwing's song are forever changed by its beauty and grace."

CMYK Halftone Output:

**Requirement**

a Text-Generation-Webui instance (might work remotely too, but I never tried it) and obviously InvokeAI 3.x

**Note**

This node works best with SDXL models, especially as the style can be described independantly of the LLM's output.

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/c59c578f-db8e-4d66-8c66-2851752d75ea" width="300" />

--------------------------------

### Depth Map from Wavefront OBJ

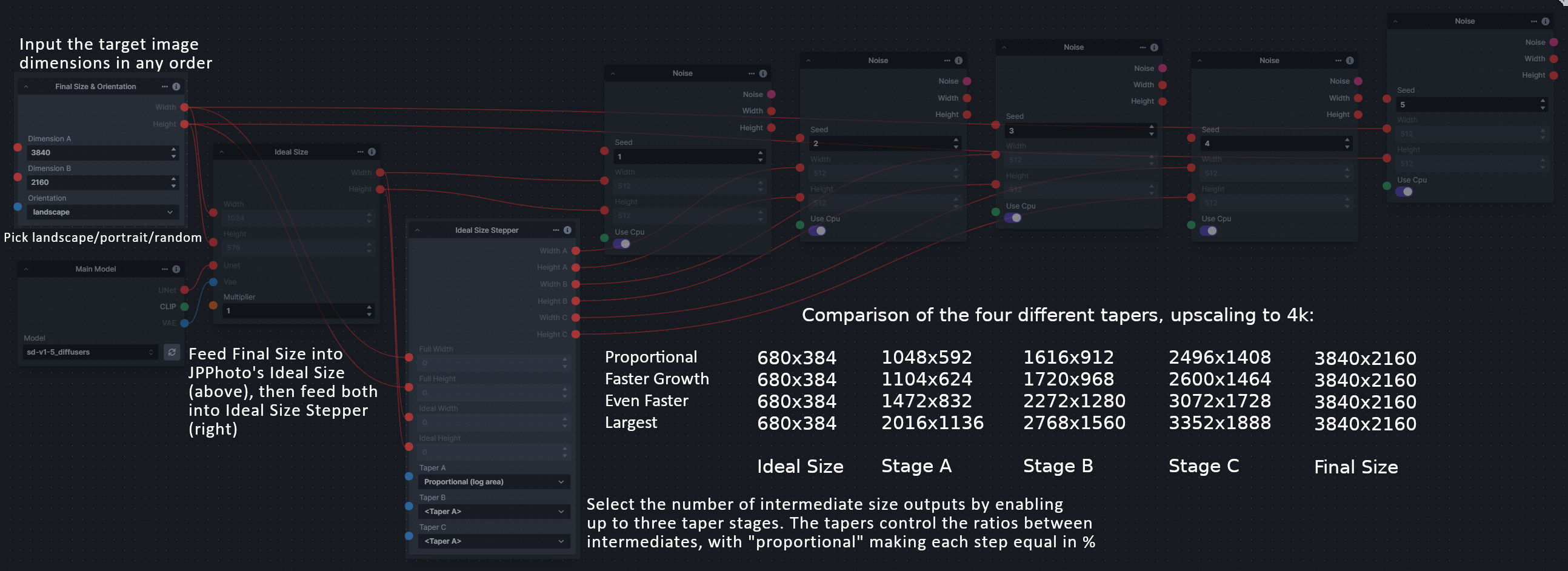

### Ideal Size

**Description:** Render depth maps from Wavefront .obj files (triangulated) using this simple 3D renderer utilizing numpy and matplotlib to compute and color the scene. There are simple parameters to change the FOV, camera position, and model orientation.

**Description:** This node calculates an ideal image size for a first pass of a multi-pass upscaling. The aim is to avoid duplication that results from choosing a size larger than the model is capable of.
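One plausible reading of the Ideal Size description is sketched below: keep the requested aspect ratio but shrink the first-pass image so its pixel area matches what the model was trained on. This is an assumption about the approach, not the node's code. The function name `ideal_size`, the default trained dimension of 512, and the rounding down to a multiple of 8 are all hypothetical choices.

```python
import math


def ideal_size(width, height, model_dimension=512, multiple=8):
    """Suggest a first-pass size with the target aspect ratio.

    The result has roughly the same pixel area as a square image of
    `model_dimension` pixels per side, rounded down to `multiple`.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    aspect = width / height
    target_area = model_dimension * model_dimension
    ideal_width = math.sqrt(target_area * aspect)
    ideal_height = ideal_width / aspect

    def snap(value):
        # Round down so the area never exceeds the target.
        return max(multiple, int(value) // multiple * multiple)

    return snap(ideal_width), snap(ideal_height)
```

For a 2048x1024 target with the defaults, this suggests generating at 720x360 first and upscaling from there in later passes.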

To be imported, an .obj must use triangulated meshes, so make sure to enable that option if exporting from a 3D modeling program. This renderer makes each triangle a solid color based on its average depth, so it will cause anomalies if your .obj has large triangles. In Blender, the Remesh modifier can be helpful to subdivide a mesh into small pieces that work well given these limitations.

**Node Link:** https://github.com/dwringer/depth-from-obj-node

**Example Usage:**

--------------------------------

### Generative Grammar-Based Prompt Nodes

**Description:** This set of 3 nodes generates prompts from simple user-defined grammar rules (loaded from custom files - examples provided below). The prompts are made by recursively expanding a special template string, replacing nonterminal "parts-of-speech" until no more nonterminal terms remain in the string.

This includes 3 Nodes:

- *Lookup Table from File* - loads a YAML file "prompt" section (or of a whole folder of YAML's) into a JSON-ified dictionary (Lookups output)

- *Lookups Entry from Prompt* - places a single entry in a new Lookups output under the specified heading

- *Prompt from Lookup Table* - uses a Collection of Lookups as grammar rules from which to randomly generate prompts.

**Node Link:** https://github.com/dwringer/generative-grammar-prompt-nodes

**Example Usage:**

**Node Link:** https://github.com/JPPhoto/ideal-size-node

--------------------------------

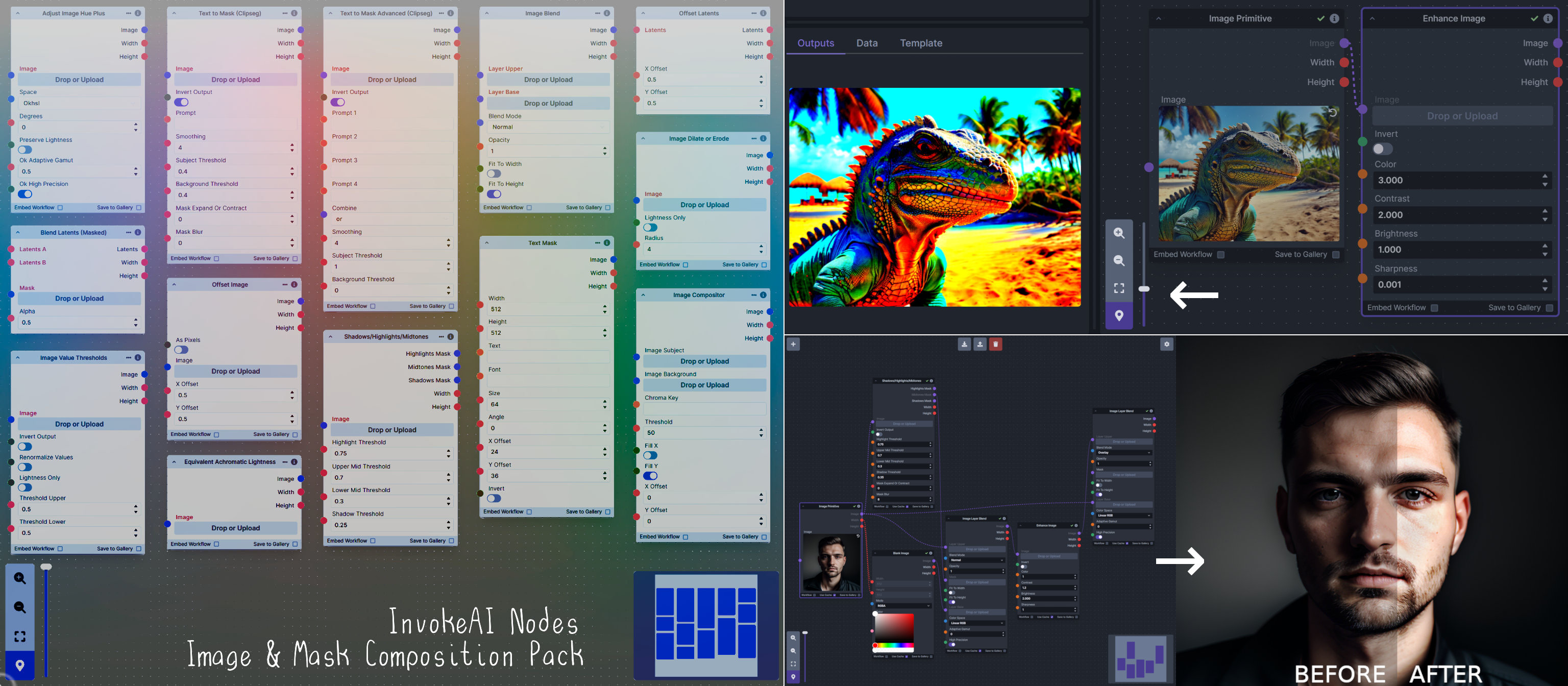

### Image and Mask Composition Pack

@ -209,44 +147,87 @@ This includes 15 Nodes:

**Node Link:** https://github.com/dwringer/composition-nodes

**Nodes and Output Examples:**

</br><img src="https://raw.githubusercontent.com/dwringer/composition-nodes/main/composition_pack_overview.jpg" width="500" />

--------------------------------

### Size Stepper Nodes

### Image to Character Art Image Nodes

**Description:** This is a set of nodes for calculating the necessary size increments for doing upscaling workflows. Use the *Final Size & Orientation* node to enter your full size dimensions and orientation (portrait/landscape/random), then plug that and your initial generation dimensions into the *Ideal Size Stepper* and get 1, 2, or 3 intermediate pairs of dimensions for upscaling. Note this does not output the initial size or full size dimensions: the 1, 2, or 3 outputs of this node are only the intermediate sizes.

**Description:** Group of nodes to convert an input image into ascii/unicode art Image

A third node is included, *Random Switch (Integers)*, which is just a generic version of Final Size with no orientation selection.

**Node Link:** https://github.com/dwringer/size-stepper-nodes

**Example Usage:**

--------------------------------

### Text font to Image

**Description:** text font to text image node for InvokeAI, download a font to use (or if in font cache uses it from there), the text is always resized to the image size, but can control that with padding, optional 2nd line

**Node Link:** https://github.com/mickr777/textfontimage

**Node Link:** https://github.com/mickr777/imagetoasciiimage

**Output Examples**

Results after using the depth controlnet

<img src="https://user-images.githubusercontent.com/115216705/271817646-8e061fcc-9a2c-4fa9-bcc7-c0f7b01e9056.png" width="300" /><img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/3c4990eb-2f42-46b9-90f9-0088b939dc6a" width="300" /></br>

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/fee7f800-a4a8-41e2-a66b-c66e4343307e" width="300" />

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/1d9c1003-a45f-45c2-aac7-46470bb89330" width="300" />

--------------------------------

### Image Picker

**Description:** This InvokeAI node takes in a collection of images and randomly chooses one. This can be useful when you have a number of poses to choose from for a ControlNet node, or a number of input images for another purpose.

**Node Link:** https://github.com/JPPhoto/image-picker-node

--------------------------------

### Load Video Frame

**Description:** This is a video frame image provider + indexer/video creation nodes for hooking up to iterators and ranges and ControlNets and such for invokeAI node experimentation. Think animation + ControlNet outputs.

**Node Link:** https://github.com/helix4u/load_video_frame

**Example Node Graph:** https://github.com/helix4u/load_video_frame/blob/main/Example_Workflow.json

**Output Example:**

<img src="https://github.com/helix4u/load_video_frame/blob/main/testmp4_embed_converted.gif" width="500" />

[Full mp4 of Example Output test.mp4](https://github.com/helix4u/load_video_frame/blob/main/test.mp4)

--------------------------------

### Make 3D

**Description:** Create compelling 3D stereo images from 2D originals.

**Node Link:** [https://gitlab.com/srcrr/shift3d/-/raw/main/make3d.py](https://gitlab.com/srcrr/shift3d)

**Example Node Graph:** https://gitlab.com/srcrr/shift3d/-/raw/main/example-workflow.json?ref_type=heads&inline=false

**Output Examples**

<img src="https://gitlab.com/srcrr/shift3d/-/raw/main/example-1.png" width="300" />

<img src="https://gitlab.com/srcrr/shift3d/-/raw/main/example-2.png" width="300" />

--------------------------------

### Oobabooga

**Description:** asks a local LLM running in Oobabooga's Text-Generation-Webui to write a prompt based on the user input.

**Link:** https://github.com/sammyf/oobabooga-node

**Example:**

"describe a new mystical creature in its natural environment"

*can return*

"The mystical creature I am describing to you is called the "Glimmerwing". It is a majestic, iridescent being that inhabits the depths of the most enchanted forests and glimmering lakes. Its body is covered in shimmering scales that reflect every color of the rainbow, and it has delicate, translucent wings that sparkle like diamonds in the sunlight. The Glimmerwing's home is a crystal-clear lake, surrounded by towering trees with leaves that shimmer like jewels. In this serene environment, the Glimmerwing spends its days swimming gracefully through the water, chasing schools of glittering fish and playing with the gentle ripples of the lake's surface.

As the sun sets, the Glimmerwing perches on a branch of one of the trees, spreading its wings to catch the last rays of light. The creature's scales glow softly, casting a rainbow of colors across the forest floor. The Glimmerwing sings a haunting melody, its voice echoing through the stillness of the night air. Its song is said to have the power to heal the sick and bring peace to troubled souls. Those who are lucky enough to hear the Glimmerwing's song are forever changed by its beauty and grace."

<img src="https://github.com/sammyf/oobabooga-node/assets/42468608/cecdd820-93dd-4c35-abbf-607e001fb2ed" width="300" />

**Requirement**

a Text-Generation-Webui instance (might work remotely too, but I never tried it) and obviously InvokeAI 3.x

**Note**

This node works best with SDXL models, especially as the style can be described independently of the LLM's output.

--------------------------------

### Prompt Tools

**Description:** A set of InvokeAI nodes that add general prompt manipulation tools. These where written to accompany the PromptsFromFile node and other prompt generation nodes.

**Description:** A set of InvokeAI nodes that add general prompt manipulation tools. These were written to accompany the PromptsFromFile node and other prompt generation nodes.

1. PromptJoin - Joins to prompts into one.

2. PromptReplace - performs a search and replace on a prompt. With the option of using regex.

@ -263,51 +244,83 @@ See full docs here: https://github.com/skunkworxdark/Prompt-tools-nodes/edit/mai

**Node Link:** https://github.com/skunkworxdark/Prompt-tools-nodes

--------------------------------

### Retroize

**Description:** Retroize is a collection of nodes for InvokeAI to "Retroize" images. Any image can be given a fresh coat of retro paint with these nodes, either from your gallery or from within the graph itself. It includes nodes to pixelize, quantize, palettize, and ditherize images; as well as to retrieve palettes from existing images.

**Node Link:** https://github.com/Ar7ific1al/invokeai-retroizeinode/

**Retroize Output Examples**

<img src="https://github.com/Ar7ific1al/InvokeAI_nodes_retroize/assets/2306586/de8b4fa6-324c-4c2d-b36c-297600c73974" width="500" />

--------------------------------

### Size Stepper Nodes

**Description:** This is a set of nodes for calculating the necessary size increments for doing upscaling workflows. Use the *Final Size & Orientation* node to enter your full size dimensions and orientation (portrait/landscape/random), then plug that and your initial generation dimensions into the *Ideal Size Stepper* and get 1, 2, or 3 intermediate pairs of dimensions for upscaling. Note this does not output the initial size or full size dimensions: the 1, 2, or 3 outputs of this node are only the intermediate sizes.

A third node is included, *Random Switch (Integers)*, which is just a generic version of Final Size with no orientation selection.
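The intermediate sizes that the Size Stepper Nodes description mentions could be computed along these lines. This is a sketch under assumptions: evenly spaced linear steps between the initial and final dimensions, snapped to the nearest multiple of 8. The function name `intermediate_sizes` and both of those choices are hypothetical, and the real nodes may space the steps differently.

```python
def intermediate_sizes(initial, final, steps, multiple=8):
    """Return 1, 2, or 3 (width, height) pairs strictly between two sizes.

    The initial and final sizes themselves are not included in the result.
    """
    if steps not in (1, 2, 3):
        raise ValueError("steps must be 1, 2, or 3")
    (w0, h0), (w1, h1) = initial, final

    def snap(value):
        # Nearest multiple, never smaller than one multiple.
        return max(multiple, round(value / multiple) * multiple)

    sizes = []
    for i in range(1, steps + 1):
        fraction_w = w0 + (w1 - w0) * i / (steps + 1)
        fraction_h = h0 + (h1 - h0) * i / (steps + 1)
        sizes.append((snap(fraction_w), snap(fraction_h)))
    return sizes
```

With an initial size of 512x512, a final size of 2048x2048, and two steps, this yields 1024x1024 and 1536x1536, matching the description's point that only the in-between sizes are output.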

**Node Link:** https://github.com/dwringer/size-stepper-nodes

**Example Usage:**

</br><img src="https://raw.githubusercontent.com/dwringer/size-stepper-nodes/main/size_nodes_usage.jpg" width="500" />

--------------------------------

### Text font to Image

**Description:** text font to text image node for InvokeAI, download a font to use (or if in font cache uses it from there), the text is always resized to the image size, but can control that with padding, optional 2nd line

**Node Link:** https://github.com/mickr777/textfontimage

**Output Examples**

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/c21b0af3-d9c6-4c16-9152-846a23effd36" width="300" />

Results after using the depth controlnet

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/915f1a53-968e-43eb-aa61-07cd8f1a733a" width="300" />

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/821ef89e-8a60-44f5-b94e-471a9d8690cc" width="300" />

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/2befcb6d-49f4-4bfd-b5fc-1fee19274f89" width="300" />

--------------------------------

### Thresholding

**Description:** This node generates masks for highlights, midtones, and shadows given an input image. You can optionally specify a blur for the lookup table used in making those masks from the source image.
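The lookup-table approach in the Thresholding description can be illustrated as follows: one 256-entry table per tonal band maps each luminance value to a mask value, and blurring the table softens the band edges. This is a sketch under assumptions rather than the node's code. The band boundaries of 85 and 170, the box blur, and the names `build_luts`, `box_blur`, and `apply_lut` are all hypothetical.

```python
def box_blur(lut, radius):
    """Average each table entry with its neighbours within `radius`."""
    if radius <= 0:
        return list(lut)
    blurred = []
    for i in range(len(lut)):
        window = lut[max(0, i - radius): i + radius + 1]
        blurred.append(round(sum(window) / len(window)))
    return blurred


def build_luts(low=85, high=170, blur_radius=0):
    """Return (shadows, midtones, highlights) lookup tables of 256 entries."""
    shadows = [255 if v < low else 0 for v in range(256)]
    midtones = [255 if low <= v < high else 0 for v in range(256)]
    highlights = [255 if v >= high else 0 for v in range(256)]
    return tuple(box_blur(lut, blur_radius) for lut in (shadows, midtones, highlights))


def apply_lut(luminance_values, lut):
    """Map grayscale pixel values (0-255) to mask values through a table."""
    return [lut[value] for value in luminance_values]
```

Without blur each mask is hard-edged; with a blur radius the tables ramp between 0 and 255 near the boundaries, so neighbouring masks fade into one another instead of switching abruptly.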

**Node Link:** https://github.com/JPPhoto/thresholding-node

**Examples**

Input:

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/c88ada13-fb3d-484c-a4fe-947b44712632" width="300" />

Highlights/Midtones/Shadows:

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/727021c1-36ff-4ec8-90c8-105e00de986d" width="300" />

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0b721bfc-f051-404e-b905-2f16b824ddfe" width="300" />

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/04c1297f-1c88-42b6-a7df-dd090b976286" width="300" />

Highlights/Midtones/Shadows (with LUT blur enabled):

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/19aa718a-70c1-4668-8169-d68f4bd13771" width="300" />

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0a440e43-697f-4d17-82ee-f287467df0a5" width="300" />

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0701fd0f-2ca7-4fe2-8613-2b52547bafce" width="300" />

--------------------------------

### XY Image to Grid and Images to Grids nodes

**Description:** Image to grid nodes and supporting tools.

1. "Images To Grids" node - Takes a collection of images and creates a grid(s) of images. If there are more images than the size of a single grid then mutilple grids will be created until it runs out of images.

2. "XYImage To Grid" node - Converts a collection of XYImages into a labeled Grid of images. The XYImages collection has to be built using the supporoting nodes. See example node setups for more details.

1. "Images To Grids" node - Takes a collection of images and creates a grid(s) of images. If there are more images than the size of a single grid then multiple grids will be created until it runs out of images.

2. "XYImage To Grid" node - Converts a collection of XYImages into a labeled Grid of images. The XYImages collection has to be built using the supporting nodes. See example node setups for more details.
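The overflow behaviour described for the "Images To Grids" node, where extra images spill into further grids, is essentially list chunking. The sketch below shows that step only. The function name `split_into_grids` is hypothetical, and the real node also composes each chunk into an image, which is not shown here.

```python
def split_into_grids(images, rows, cols):
    """Group a collection into chunks of at most rows * cols items.

    Every chunk fills one grid; the last chunk may be only partly full.
    """
    if rows < 1 or cols < 1:
        raise ValueError("rows and cols must be at least 1")
    per_grid = rows * cols
    return [images[i:i + per_grid] for i in range(0, len(images), per_grid)]
```

Seven images on a 2x2 grid therefore produce two grids: one full grid of four and one holding the remaining three.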

See full docs here: https://github.com/skunkworxdark/XYGrid_nodes/edit/main/README.md

**Node Link:** https://github.com/skunkworxdark/XYGrid_nodes

--------------------------------

### Image to Character Art Image Node's

**Description:** Group of nodes to convert an input image into ascii/unicode art Image

**Node Link:** https://github.com/mickr777/imagetoasciiimage

**Output Examples**

<img src="https://github.com/invoke-ai/InvokeAI/assets/115216705/8e061fcc-9a2c-4fa9-bcc7-c0f7b01e9056" width="300" />

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/3c4990eb-2f42-46b9-90f9-0088b939dc6a" width="300" /></br>

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/fee7f800-a4a8-41e2-a66b-c66e4343307e" width="300" />

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/1d9c1003-a45f-45c2-aac7-46470bb89330" width="300" />

--------------------------------

### Grid to Gif

**Description:** One node that turns a grid image into an image colletion, one node that turns an image collection into a gif

**Node Link:** https://github.com/mildmisery/invokeai-GridToGifNode/blob/main/GridToGif.py

**Example Node Graph:** https://github.com/mildmisery/invokeai-GridToGifNode/blob/main/Grid%20to%20Gif%20Example%20Workflow.json

**Output Examples**

<img src="https://raw.githubusercontent.com/mildmisery/invokeai-GridToGifNode/main/input.png" width="300" />

<img src="https://raw.githubusercontent.com/mildmisery/invokeai-GridToGifNode/main/output.gif" width="300" />

--------------------------------

### Example Node Template

**Description:** This node allows you to do super cool things with InvokeAI.

@ -318,7 +331,7 @@ See full docs here: https://github.com/skunkworxdark/XYGrid_nodes/edit/main/READ

**Output Examples**

{: style="height:115px;width:240px"}

</br><img src="https://invoke-ai.github.io/InvokeAI/assets/invoke_ai_banner.png" width="500" />

## Disclaimer
