Mirror of https://github.com/invoke-ai/InvokeAI (synced 2024-08-30 20:32:17 +00:00)

Merge branch 'main' into feat/ip-adapter

Commit f44496a579

@@ -109,6 +109,73 @@ a Text-Generation-Webui instance (might work remotely too, but I never tried it)

|

|||||||

|

|

||||||

This node works best with SDXL models, especially as the style can be described independently of the LLM's output.

|

This node works best with SDXL models, especially as the style can be described independently of the LLM's output.

|

||||||

|

|

||||||

|

--------------------------------

|

||||||

|

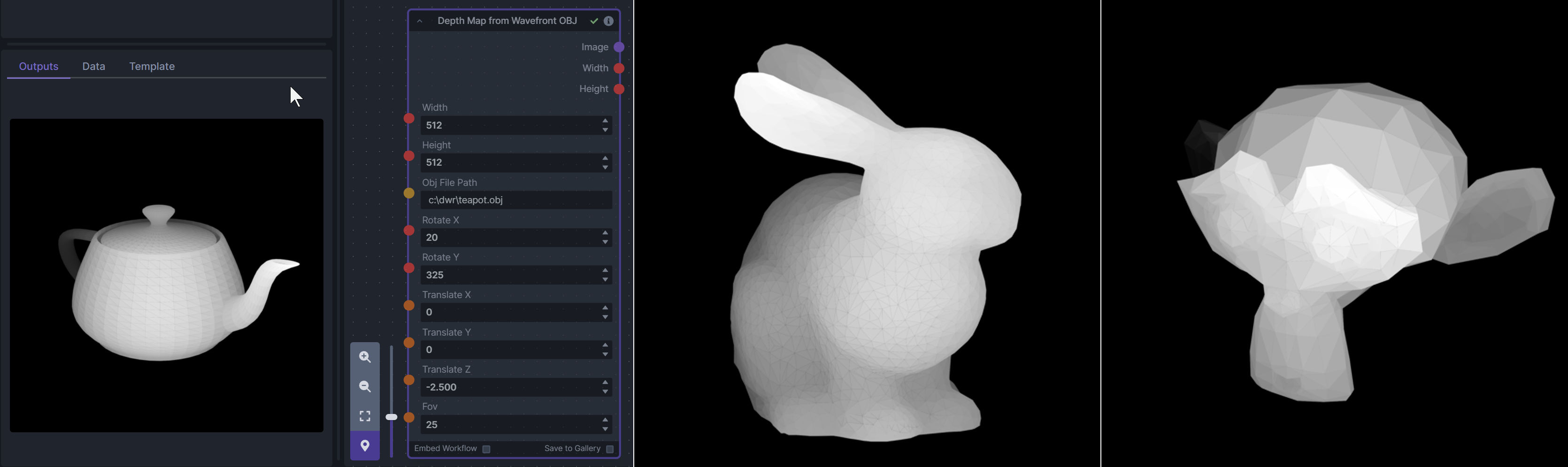

### Depth Map from Wavefront OBJ

|

||||||

|

|

||||||

|

**Description:** Render depth maps from triangulated Wavefront .obj files with this simple 3D renderer, which uses numpy and matplotlib to compute and color the scene. Simple parameters control the FOV, camera position, and model orientation.

|

||||||

|

|

||||||

|

To be imported, an .obj must use triangulated meshes, so enable that option when exporting from a 3D modeling program. The renderer fills each triangle with a single color based on its average depth, so large triangles in your .obj will produce visible anomalies. In Blender, the Remesh modifier can help subdivide a mesh into small pieces that work well within these limitations.
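The flat shading described here can be illustrated with a minimal pure-Python sketch. It assumes a camera looking down the z axis, so a vertex's depth is simply its z coordinate and smaller z means nearer. The helper names `triangle_depths` and `normalize` are hypothetical and are not taken from the node's source, which uses numpy and matplotlib.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]  # indices into the vertex list


def triangle_depths(vertices: Sequence[Vertex], faces: Sequence[Triangle]) -> List[float]:
    """Return one depth per triangle: the mean z of its three vertices."""
    return [sum(vertices[i][2] for i in face) / 3.0 for face in faces]


def normalize(depths: Sequence[float]) -> List[float]:
    """Map depths to 0..1 gray levels (nearest = 1.0, farthest = 0.0)."""
    near, far = min(depths), max(depths)
    if far == near:
        return [1.0 for _ in depths]
    return [(far - d) / (far - near) for d in depths]


# Two triangles sharing an edge; the second one reaches far back (z = 4).
vertices = [(0, 0, 1.0), (1, 0, 1.0), (0, 1, 1.0), (1, 1, 4.0)]
faces = [(0, 1, 2), (1, 2, 3)]
depths = triangle_depths(vertices, faces)
shades = normalize(depths)
```

The second triangle spans z values from 1 to 4 yet receives a single shade, which is the kind of anomaly that subdividing the mesh is meant to reduce.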

|

||||||

|

|

||||||

|

**Node Link:** https://github.com/dwringer/depth-from-obj-node

|

||||||

|

|

||||||

|

**Example Usage:**

|

||||||

|

|

||||||

|

|

||||||

|

--------------------------------

|

||||||

|

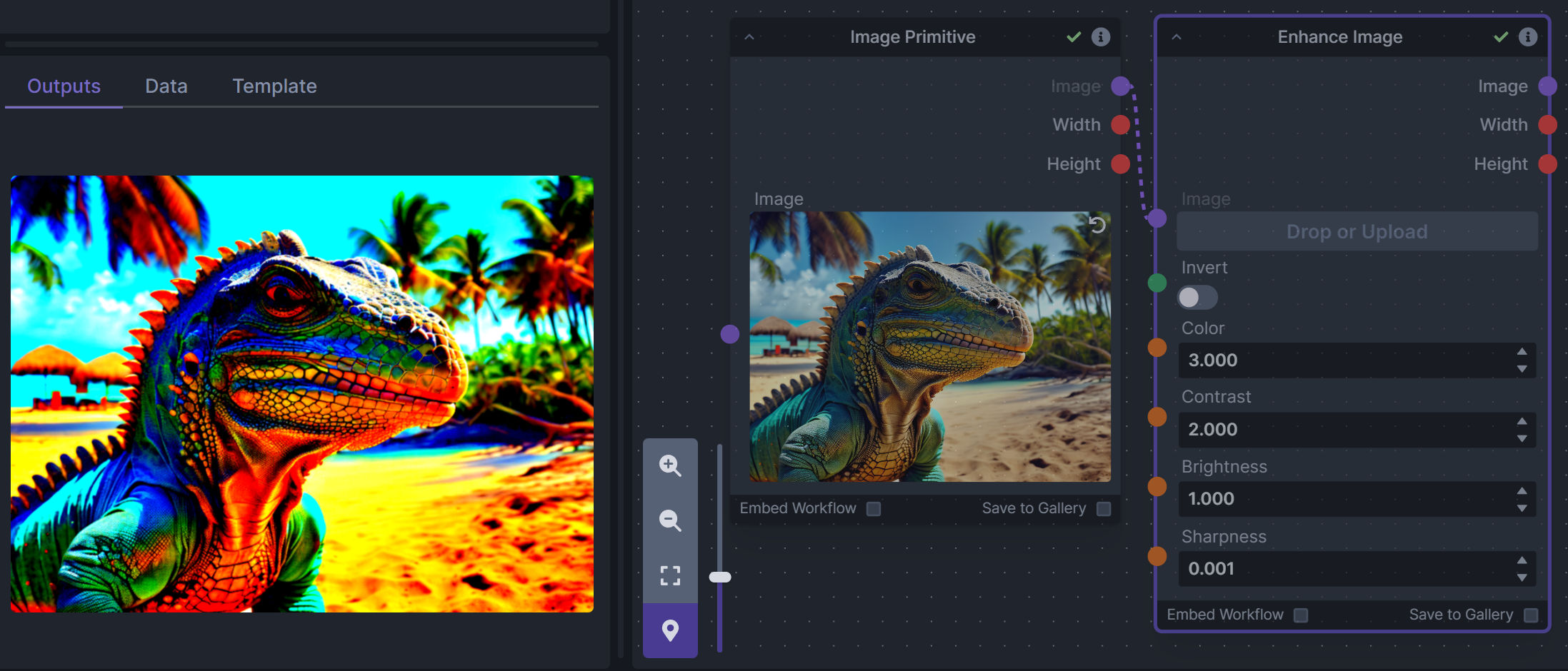

### Enhance Image (simple adjustments)

|

||||||

|

|

||||||

|

**Description:** Boost or reduce the color saturation, contrast, brightness, or sharpness of any image, or invert its colors, at any stage with this simple wrapper for Pillow's (PIL) ImageEnhance module.

|

||||||

|

|

||||||

|

Color inversion is toggled with a simple switch, while each of the four enhancer modes is activated by entering a value other than 1 in its corresponding input field. Values less than 1 reduce the corresponding property, while values greater than 1 enhance it.
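To make the meaning of the factor concrete, here is a minimal pure-Python sketch of the underlying idea: blending between a "degenerate" image and the original, so that a factor of 1 leaves the pixels unchanged. It operates on a flat list of 8-bit gray values and is an illustration only, not Pillow's code or the node's implementation; the function names are hypothetical.

```python
from typing import List


def enhance(values: List[int], degenerate: List[float], factor: float) -> List[int]:
    """Blend between a degenerate image and the original.

    factor == 1.0 returns the original values, factor < 1.0 moves toward the
    degenerate image, and factor > 1.0 extrapolates away from it.
    """
    out = []
    for v, d in zip(values, degenerate):
        blended = d + factor * (v - d)
        out.append(max(0, min(255, int(round(blended)))))
    return out


def adjust_brightness(values: List[int], factor: float) -> List[int]:
    # For brightness, the degenerate image is plain black.
    return enhance(values, [0.0] * len(values), factor)


def adjust_contrast(values: List[int], factor: float) -> List[int]:
    # For contrast, the degenerate image is a flat gray at the mean level.
    mean = sum(values) / len(values)
    return enhance(values, [mean] * len(values), factor)


def invert(values: List[int]) -> List[int]:
    # Color inversion is independent of the enhancement factors.
    return [255 - v for v in values]


pixels = [0, 64, 128, 192]
darker = adjust_brightness(pixels, 0.5)
flat = adjust_contrast(pixels, 0.0)
```

In the node itself the equivalent work is delegated to Pillow's `ImageEnhance` classes, so the exact rounding may differ from this sketch.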

|

||||||

|

|

||||||

|

**Node Link:** https://github.com/dwringer/image-enhance-node

|

||||||

|

|

||||||

|

**Example Usage:**

|

||||||

|

|

||||||

|

|

||||||

|

--------------------------------

|

||||||

|

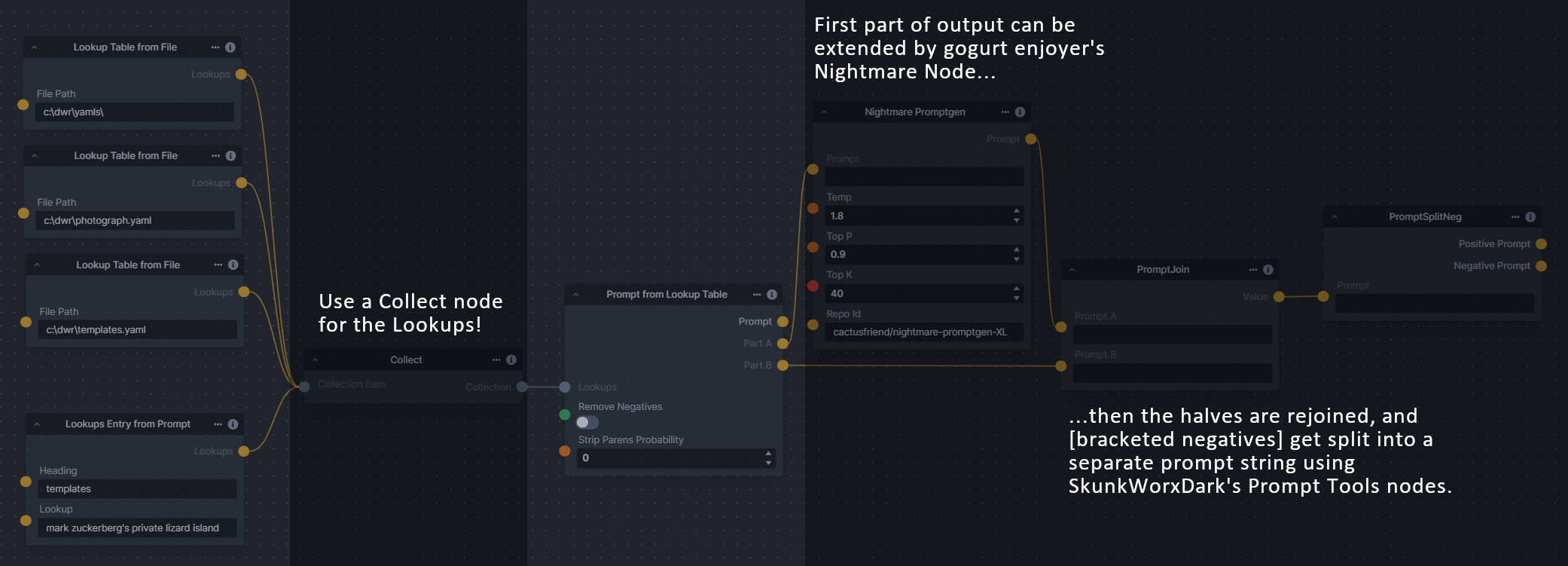

### Generative Grammar-Based Prompt Nodes

|

||||||

|

|

||||||

|

**Description:** This set of 3 nodes generates prompts from simple user-defined grammar rules (loaded from custom files - examples provided below). The prompts are made by recursively expanding a special template string, replacing nonterminal "parts-of-speech" until no more nonterminal terms remain in the string.

|

||||||

|

|

||||||

|

This includes 3 Nodes:

|

||||||

|

- *Lookup Table from File* - loads the "prompt" section of a YAML file (or of a whole folder of YAML files) into a JSON-ified dictionary (Lookups output)

|

||||||

|

- *Lookups Entry from Prompt* - places a single entry in a new Lookups output under the specified heading

|

||||||

|

- *Prompt from Lookup Table* - uses a Collection of Lookups as grammar rules from which to randomly generate prompts.
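The recursive expansion described above can be sketched as follows. This is a minimal illustration under stated assumptions: the `{name}` syntax for nonterminals, the `rules` dictionary layout, and the `expand` function are all hypothetical and do not reflect the actual file format or code of these nodes.

```python
import random
import re
from typing import Dict, List

# Assumed convention for this sketch: nonterminals are written as {name}.
NONTERMINAL = re.compile(r"\{(\w+)\}")


def expand(template: str, rules: Dict[str, List[str]], rng: random.Random,
           max_depth: int = 20) -> str:
    """Recursively replace each {nonterminal} with a randomly chosen production."""
    if max_depth == 0:
        raise RecursionError("grammar did not terminate")

    def substitute(match) -> str:
        choice = rng.choice(rules[match.group(1)])
        # The chosen production may itself contain nonterminals.
        return expand(choice, rules, rng, max_depth - 1)

    return NONTERMINAL.sub(substitute, template)


rules = {
    "subject": ["a {adjective} castle", "a {adjective} forest"],
    "adjective": ["misty", "sunlit"],
    "style": ["oil painting", "watercolor"],
}
prompt = expand("{subject}, {style}", rules, random.Random(0))
```

Expansion stops once no nonterminal remains in the string; the `max_depth` guard is only there to catch grammars that refer to themselves without a terminating production.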

|

||||||

|

|

||||||

|

**Node Link:** https://github.com/dwringer/generative-grammar-prompt-nodes

|

||||||

|

|

||||||

|

**Example Usage:**

|

||||||

|

|

||||||

|

|

||||||

|

--------------------------------

|

||||||

|

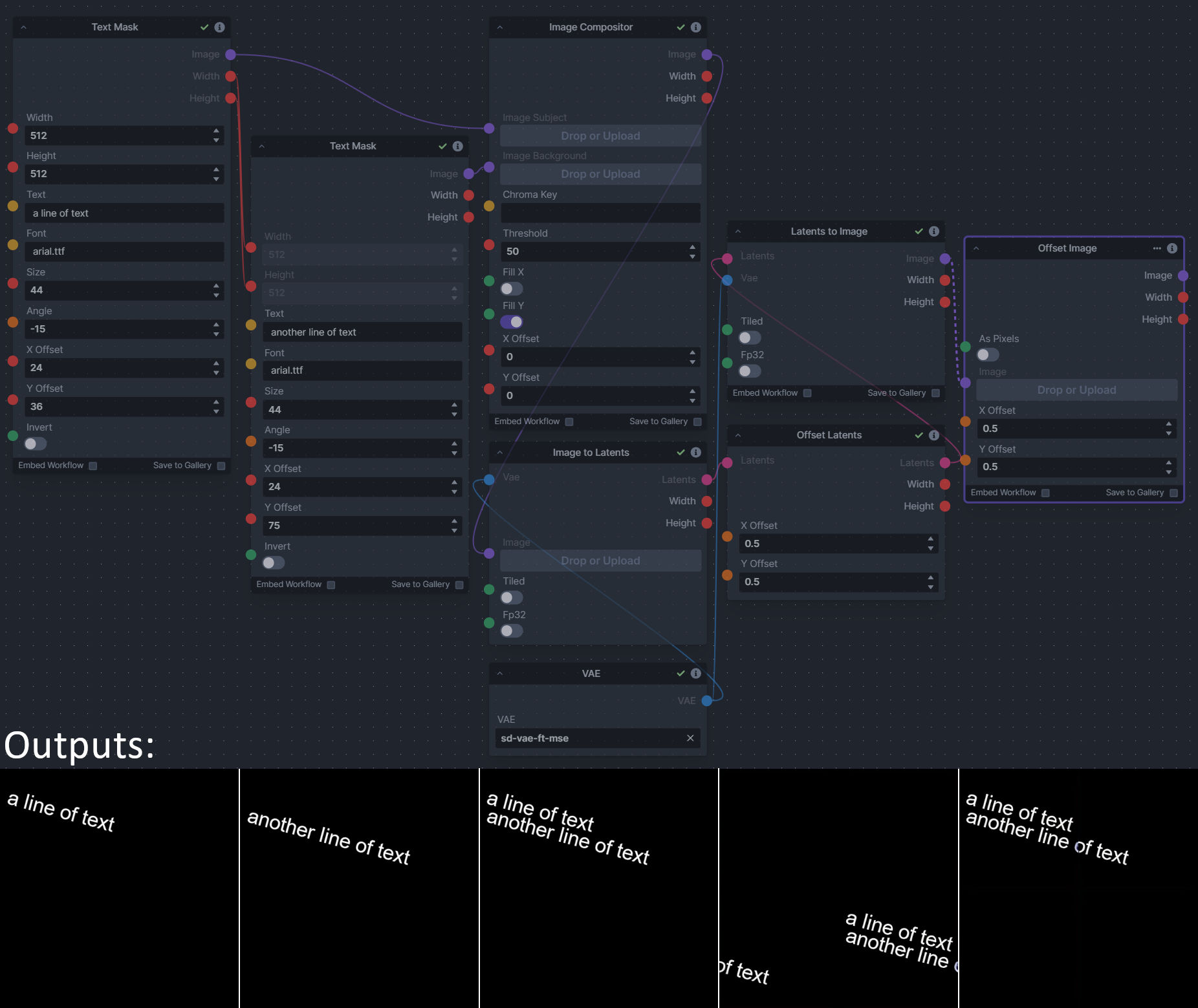

### Image and Mask Composition Pack

|

||||||

|

|

||||||

|

**Description:** This is a pack of nodes for composing masks and images, including a simple text mask creator and both image and latent offset nodes. The offsets wrap around, so these can be used in conjunction with the Seamless node to progressively generate images centered on different parts of the seamless tiling.

|

||||||

|

|

||||||

|

This includes 4 Nodes:

|

||||||

|

- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.

|

||||||

|

- *Image Compositor* - Take a subject from an image with a flat backdrop and layer it on another image using a chroma key or flood select background removal.

|

||||||

|

- *Offset Latents* - Offset a latents tensor in the vertical and/or horizontal dimensions, wrapping it around.

|

||||||

|

- *Offset Image* - Offset an image in the vertical and/or horizontal dimensions, wrapping it around.
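The wrap-around behavior of the two offset nodes can be sketched in pure Python on a small 2D grid. The function name `offset_wrapped` is hypothetical and this is not the nodes' implementation.

```python
from typing import List, TypeVar

T = TypeVar("T")


def offset_wrapped(grid: List[List[T]], dx: int, dy: int) -> List[List[T]]:
    """Shift a 2D grid right by dx and down by dy, wrapping at the edges."""
    height = len(grid)
    width = len(grid[0])
    return [
        [grid[(y - dy) % height][(x - dx) % width] for x in range(width)]
        for y in range(height)
    ]


image = [
    [1, 2, 3],
    [4, 5, 6],
]
shifted = offset_wrapped(image, dx=1, dy=0)
```

With numpy arrays, `numpy.roll` performs the same kind of wrap-around shift. Because nothing is lost at the edges, offsetting by the full width and height returns the original grid.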

|

||||||

|

|

||||||

|

**Node Link:** https://github.com/dwringer/composition-nodes

|

||||||

|

|

||||||

|

**Example Usage:**

|

||||||

|

|

||||||

|

|

||||||

|

--------------------------------

|

||||||

|

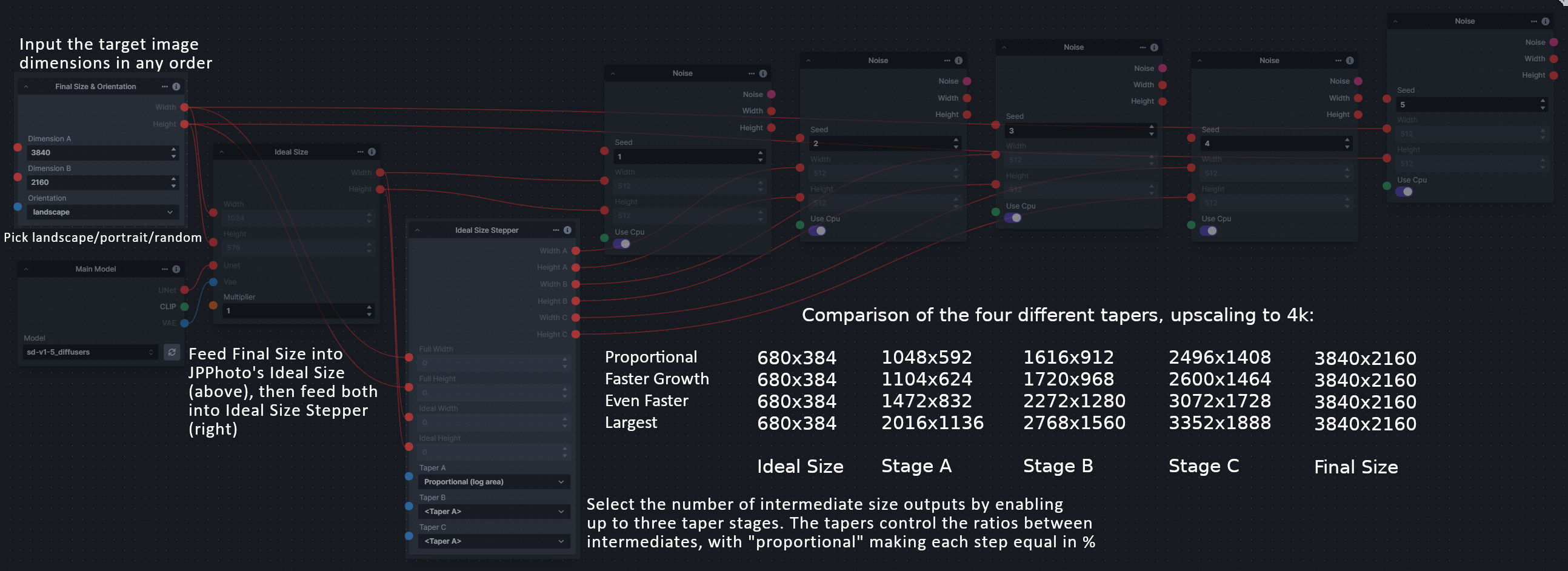

### Size Stepper Nodes

|

||||||

|

|

||||||

|

**Description:** This is a set of nodes for calculating the size increments needed in upscaling workflows. Use the *Final Size & Orientation* node to enter your full-size dimensions and orientation (portrait/landscape/random), then plug that and your initial generation dimensions into the *Ideal Size Stepper* to get 1, 2, or 3 intermediate pairs of dimensions for upscaling. Note that this node does not output the initial or full-size dimensions: its 1, 2, or 3 outputs are only the intermediate sizes.
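As a rough illustration of what "intermediate pairs of dimensions" could look like, the sketch below spaces the sizes evenly between the initial and final dimensions and rounds them to a multiple of 8. Both the even spacing and the rounding are assumptions made for this example; the description does not say how the node actually chooses its steps, and `intermediate_sizes` is a hypothetical name.

```python
from typing import List, Tuple

Size = Tuple[int, int]


def intermediate_sizes(initial: Size, final: Size, steps: int, multiple: int = 8) -> List[Size]:
    """Return `steps` sizes strictly between `initial` and `final`.

    Sizes are spaced evenly and rounded to the nearest `multiple`; this is an
    assumed scheme for illustration, not the node's actual algorithm.
    """
    if steps not in (1, 2, 3):
        raise ValueError("steps must be 1, 2, or 3")

    def snap(value: float) -> int:
        return int(round(value / multiple)) * multiple

    sizes = []
    for i in range(1, steps + 1):
        t = i / (steps + 1)  # fraction of the way from initial to final
        width = initial[0] + t * (final[0] - initial[0])
        height = initial[1] + t * (final[1] - initial[1])
        sizes.append((snap(width), snap(height)))
    return sizes


plan = intermediate_sizes((512, 768), (2048, 3072), steps=3)
```

As in the node description, neither the initial nor the final size appears in the result.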

|

||||||

|

|

||||||

|

A third node is included, *Random Switch (Integers)*, which is just a generic version of Final Size with no orientation selection.

|

||||||

|

|

||||||

|

**Node Link:** https://github.com/dwringer/size-stepper-nodes

|

||||||

|

|

||||||

|

**Example Usage:**

|

||||||

|

|

||||||

|

|

||||||

--------------------------------

|

--------------------------------

|

||||||

|

|

||||||

### Example Node Template

|

### Example Node Template

|

||||||

|

|||||||

@@ -35,13 +35,13 @@ The table below contains a list of the default nodes shipped with InvokeAI and t

|

|||||||

|Inverse Lerp Image | Inverse linear interpolation of all pixels of an image|

|

|Inverse Lerp Image | Inverse linear interpolation of all pixels of an image|

|

||||||

|Image Primitive | An image primitive value|

|

|Image Primitive | An image primitive value|

|

||||||

|Lerp Image | Linear interpolation of all pixels of an image|

|

|Lerp Image | Linear interpolation of all pixels of an image|

|

||||||

|Image Luminosity Adjustment | Adjusts the Luminosity (Value) of an image.|

|

|Offset Image Channel | Add to or subtract from an image color channel by a uniform value.|

|

||||||

|

|Multiply Image Channel | Multiply or Invert an image color channel by a scalar value.|

|

||||||

|Multiply Images | Multiplies two images together using `PIL.ImageChops.multiply()`.|

|

|Multiply Images | Multiplies two images together using `PIL.ImageChops.multiply()`.|

|

||||||

|Blur NSFW Image | Add blur to NSFW-flagged images|

|

|Blur NSFW Image | Add blur to NSFW-flagged images|

|

||||||

|Paste Image | Pastes an image into another image.|

|

|Paste Image | Pastes an image into another image.|

|

||||||

|ImageProcessor | Base class for invocations that preprocess images for ControlNet|

|

|ImageProcessor | Base class for invocations that preprocess images for ControlNet|

|

||||||

|Resize Image | Resizes an image to specific dimensions|

|

|Resize Image | Resizes an image to specific dimensions|

|

||||||

|Image Saturation Adjustment | Adjusts the Saturation of an image.|

|

|

||||||

|Scale Image | Scales an image by a factor|

|

|Scale Image | Scales an image by a factor|

|

||||||

|Image to Latents | Encodes an image into latents.|

|

|Image to Latents | Encodes an image into latents.|

|

||||||

|Add Invisible Watermark | Add an invisible watermark to an image|

|

|Add Invisible Watermark | Add an invisible watermark to an image|

|

||||||

|

|||||||

@@ -1,19 +1,19 @@

|

|||||||

import typing

|

import typing

|

||||||

from enum import Enum

|

from enum import Enum

|

||||||

|

from pathlib import Path

|

||||||

|

|

||||||

from fastapi import Body

|

from fastapi import Body

|

||||||

from fastapi.routing import APIRouter

|

from fastapi.routing import APIRouter

|

||||||

from pathlib import Path

|

|

||||||

from pydantic import BaseModel, Field

|

from pydantic import BaseModel, Field

|

||||||

|

|

||||||

|

from invokeai.app.invocations.upscale import ESRGAN_MODELS

|

||||||

|

from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark

|

||||||

from invokeai.backend.image_util.patchmatch import PatchMatch

|

from invokeai.backend.image_util.patchmatch import PatchMatch

|

||||||

from invokeai.backend.image_util.safety_checker import SafetyChecker

|

from invokeai.backend.image_util.safety_checker import SafetyChecker

|

||||||

from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark

|

from invokeai.backend.util.logging import logging

|

||||||

from invokeai.app.invocations.upscale import ESRGAN_MODELS

|

|

||||||

|

|

||||||

from invokeai.version import __version__

|

from invokeai.version import __version__

|

||||||

|

|

||||||

from ..dependencies import ApiDependencies

|

from ..dependencies import ApiDependencies

|

||||||

from invokeai.backend.util.logging import logging

|

|

||||||

|

|

||||||

|

|

||||||

class LogLevel(int, Enum):

|

class LogLevel(int, Enum):

|

||||||

@@ -55,7 +55,7 @@ async def get_version() -> AppVersion:

|

|||||||

|

|

||||||

@app_router.get("/config", operation_id="get_config", status_code=200, response_model=AppConfig)

|

@app_router.get("/config", operation_id="get_config", status_code=200, response_model=AppConfig)

|

||||||

async def get_config() -> AppConfig:

|

async def get_config() -> AppConfig:

|

||||||

infill_methods = ["tile", "lama"]

|

infill_methods = ["tile", "lama", "cv2"]

|

||||||

if PatchMatch.patchmatch_available():

|

if PatchMatch.patchmatch_available():

|

||||||

infill_methods.append("patchmatch")

|

infill_methods.append("patchmatch")

|

||||||

|

|

||||||

|

|||||||

@@ -563,7 +563,7 @@ class MaskEdgeInvocation(BaseInvocation):

|

|||||||

)

|

)

|

||||||

|

|

||||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||||

mask = context.services.images.get_pil_image(self.image.image_name)

|

mask = context.services.images.get_pil_image(self.image.image_name).convert("L")

|

||||||

|

|

||||||

npimg = numpy.asarray(mask, dtype=numpy.uint8)

|

npimg = numpy.asarray(mask, dtype=numpy.uint8)

|

||||||

npgradient = numpy.uint8(255 * (1.0 - numpy.floor(numpy.abs(0.5 - numpy.float32(npimg) / 255.0) * 2.0)))

|

npgradient = numpy.uint8(255 * (1.0 - numpy.floor(numpy.abs(0.5 - numpy.float32(npimg) / 255.0) * 2.0)))

|

||||||
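The `npgradient` expression in the hunk above is easier to read when evaluated for single pixel values. The sketch below restates the same arithmetic in pure Python; the function name `edge_gradient` is hypothetical, and it uses Python floats rather than numpy's `float32`, which should not matter for 8-bit inputs.

```python
import math


def edge_gradient(value: int) -> int:
    """Scalar version of 255 * (1 - floor(|0.5 - value / 255| * 2))."""
    normalized = value / 255.0
    distance = abs(0.5 - normalized) * 2.0  # 1.0 only at pure black or white
    return int(255 * (1.0 - math.floor(distance)))


samples = [edge_gradient(v) for v in (0, 1, 128, 254, 255)]
```

Read this way, the expression yields 0 for fully black or fully white mask pixels and 255 for every value in between, which is presumably why the change above converts the mask to single-channel `"L"` mode first.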

@@ -700,8 +700,13 @@ class ColorCorrectInvocation(BaseInvocation):

|

|||||||

# Blur the mask out (into init image) by specified amount

|

# Blur the mask out (into init image) by specified amount

|

||||||

if self.mask_blur_radius > 0:

|

if self.mask_blur_radius > 0:

|

||||||

nm = numpy.asarray(pil_init_mask, dtype=numpy.uint8)

|

nm = numpy.asarray(pil_init_mask, dtype=numpy.uint8)

|

||||||

|

inverted_nm = 255 - nm

|

||||||

|

dilation_size = int(round(self.mask_blur_radius) + 20)

|

||||||

|

dilating_kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (dilation_size, dilation_size))

|

||||||

|

inverted_dilated_nm = cv2.dilate(inverted_nm, dilating_kernel)

|

||||||

|

dilated_nm = 255 - inverted_dilated_nm

|

||||||

nmd = cv2.erode(

|

nmd = cv2.erode(

|

||||||

nm,

|

dilated_nm,

|

||||||

kernel=numpy.ones((3, 3), dtype=numpy.uint8),

|

kernel=numpy.ones((3, 3), dtype=numpy.uint8),

|

||||||

iterations=int(self.mask_blur_radius / 2),

|

iterations=int(self.mask_blur_radius / 2),

|

||||||

)

|

)

|

||||||

@@ -773,39 +778,95 @@ class ImageHueAdjustmentInvocation(BaseInvocation):

|

|||||||

)

|

)

|

||||||

|

|

||||||

|

|

||||||

|

COLOR_CHANNELS = Literal[

|

||||||

|

"Red (RGBA)",

|

||||||

|

"Green (RGBA)",

|

||||||

|

"Blue (RGBA)",

|

||||||

|

"Alpha (RGBA)",

|

||||||

|

"Cyan (CMYK)",

|

||||||

|

"Magenta (CMYK)",

|

||||||

|

"Yellow (CMYK)",

|

||||||

|

"Black (CMYK)",

|

||||||

|

"Hue (HSV)",

|

||||||

|

"Saturation (HSV)",

|

||||||

|

"Value (HSV)",

|

||||||

|

"Luminosity (LAB)",

|

||||||

|

"A (LAB)",

|

||||||

|

"B (LAB)",

|

||||||

|

"Y (YCbCr)",

|

||||||

|

"Cb (YCbCr)",

|

||||||

|

"Cr (YCbCr)",

|

||||||

|

]

|

||||||

|

|

||||||

|

CHANNEL_FORMATS = {

|

||||||

|

"Red (RGBA)": ("RGBA", 0),

|

||||||

|

"Green (RGBA)": ("RGBA", 1),

|

||||||

|

"Blue (RGBA)": ("RGBA", 2),

|

||||||

|

"Alpha (RGBA)": ("RGBA", 3),

|

||||||

|

"Cyan (CMYK)": ("CMYK", 0),

|

||||||

|

"Magenta (CMYK)": ("CMYK", 1),

|

||||||

|

"Yellow (CMYK)": ("CMYK", 2),

|

||||||

|

"Black (CMYK)": ("CMYK", 3),

|

||||||

|

"Hue (HSV)": ("HSV", 0),

|

||||||

|

"Saturation (HSV)": ("HSV", 1),

|

||||||

|

"Value (HSV)": ("HSV", 2),

|

||||||

|

"Luminosity (LAB)": ("LAB", 0),

|

||||||

|

"A (LAB)": ("LAB", 1),

|

||||||

|

"B (LAB)": ("LAB", 2),

|

||||||

|

"Y (YCbCr)": ("YCbCr", 0),

|

||||||

|

"Cb (YCbCr)": ("YCbCr", 1),

|

||||||

|

"Cr (YCbCr)": ("YCbCr", 2),

|

||||||

|

}

|

||||||

|

|

||||||

|

|

||||||

@invocation(

|

@invocation(

|

||||||

"img_luminosity_adjust",

|

"img_channel_offset",

|

||||||

title="Adjust Image Luminosity",

|

title="Offset Image Channel",

|

||||||

tags=["image", "luminosity", "hsl"],

|

tags=[

|

||||||

|

"image",

|

||||||

|

"offset",

|

||||||

|

"red",

|

||||||

|

"green",

|

||||||

|

"blue",

|

||||||

|

"alpha",

|

||||||

|

"cyan",

|

||||||

|

"magenta",

|

||||||

|

"yellow",

|

||||||

|

"black",

|

||||||

|

"hue",

|

||||||

|

"saturation",

|

||||||

|

"luminosity",

|

||||||

|

"value",

|

||||||

|

],

|

||||||

category="image",

|

category="image",

|

||||||

version="1.0.0",

|

version="1.0.0",

|

||||||

)

|

)

|

||||||

class ImageLuminosityAdjustmentInvocation(BaseInvocation):

|

class ImageChannelOffsetInvocation(BaseInvocation):

|

||||||

"""Adjusts the Luminosity (Value) of an image."""

|

"""Add or subtract a value from a specific color channel of an image."""

|

||||||

|

|

||||||

image: ImageField = InputField(description="The image to adjust")

|

image: ImageField = InputField(description="The image to adjust")

|

||||||

luminosity: float = InputField(

|

channel: COLOR_CHANNELS = InputField(description="Which channel to adjust")

|

||||||

default=1.0, ge=0, le=1, description="The factor by which to adjust the luminosity (value)"

|

offset: int = InputField(default=0, ge=-255, le=255, description="The amount to adjust the channel by")

|

||||||

)

|

|

||||||

|

|

||||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||||

pil_image = context.services.images.get_pil_image(self.image.image_name)

|

pil_image = context.services.images.get_pil_image(self.image.image_name)

|

||||||

|

|

||||||

# Convert PIL image to OpenCV format (numpy array), note color channel

|

# extract the channel and mode from the input and reference tuple

|

||||||

# ordering is changed from RGB to BGR

|

mode = CHANNEL_FORMATS[self.channel][0]

|

||||||

image = numpy.array(pil_image.convert("RGB"))[:, :, ::-1]

|

channel_number = CHANNEL_FORMATS[self.channel][1]

|

||||||

|

|

||||||

# Convert image to HSV color space

|

# Convert PIL image to new format

|

||||||

hsv_image = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

|

converted_image = numpy.array(pil_image.convert(mode)).astype(int)

|

||||||

|

image_channel = converted_image[:, :, channel_number]

|

||||||

|

|

||||||

# Adjust the luminosity (value)

|

# Adjust the value, clipping to 0..255

|

||||||

hsv_image[:, :, 2] = numpy.clip(hsv_image[:, :, 2] * self.luminosity, 0, 255)

|

image_channel = numpy.clip(image_channel + self.offset, 0, 255)

|

||||||

|

|

||||||

# Convert image back to BGR color space

|

# Put the channel back into the image

|

||||||

image = cv2.cvtColor(hsv_image, cv2.COLOR_HSV2BGR)

|

converted_image[:, :, channel_number] = image_channel

|

||||||

|

|

||||||

# Convert back to PIL format and to original color mode

|

# Convert back to RGBA format and output

|

||||||

pil_image = Image.fromarray(image[:, :, ::-1], "RGB").convert("RGBA")

|

pil_image = Image.fromarray(converted_image.astype(numpy.uint8), mode=mode).convert("RGBA")

|

||||||

|

|

||||||

image_dto = context.services.images.create(

|

image_dto = context.services.images.create(

|

||||||

image=pil_image,

|

image=pil_image,

|

||||||
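The per-channel arithmetic of the new offset node, and of the multiply node that follows, can be sketched in pure Python on a list of pixel tuples. This is a simplified illustration: it assumes the pixels are already in the target mode and skips the `pil_image.convert(mode)` round trip, and the function names are hypothetical. Only a subset of the `CHANNEL_FORMATS` table is reproduced.

```python
from typing import Dict, List, Tuple

# A subset of the CHANNEL_FORMATS table from the diff: label -> (mode, index).
CHANNEL_FORMATS: Dict[str, Tuple[str, int]] = {
    "Red (RGBA)": ("RGBA", 0),
    "Green (RGBA)": ("RGBA", 1),
    "Blue (RGBA)": ("RGBA", 2),
    "Alpha (RGBA)": ("RGBA", 3),
}

Pixel = Tuple[int, ...]


def offset_channel(pixels: List[Pixel], channel: str, offset: int) -> List[Pixel]:
    """Add `offset` to one channel of every pixel, clipping to 0..255."""
    _mode, index = CHANNEL_FORMATS[channel]
    result = []
    for pixel in pixels:
        values = list(pixel)
        values[index] = max(0, min(255, values[index] + offset))
        result.append(tuple(values))
    return result


def multiply_channel(pixels: List[Pixel], channel: str, scale: float,
                     invert: bool = False) -> List[Pixel]:
    """Scale one channel, clip to 0..255, then optionally invert it."""
    _mode, index = CHANNEL_FORMATS[channel]
    result = []
    for pixel in pixels:
        values = list(pixel)
        scaled = max(0.0, min(255.0, values[index] * scale))
        if invert:
            scaled = 255 - scaled
        values[index] = int(scaled)  # truncates, like a cast to uint8
        result.append(tuple(values))
    return result


pixels = [(100, 50, 25, 255), (200, 250, 0, 255)]
brighter_green = offset_channel(pixels, "Green (RGBA)", 10)
scaled_red = multiply_channel(pixels, "Red (RGBA)", 1.5)
```

Note that the inversion happens after scaling and clipping, matching the order of operations in the multiply node.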

@@ -827,36 +888,60 @@ class ImageLuminosityAdjustmentInvocation(BaseInvocation):

|

|||||||

|

|

||||||

|

|

||||||

@invocation(

|

@invocation(

|

||||||

"img_saturation_adjust",

|

"img_channel_multiply",

|

||||||

title="Adjust Image Saturation",

|

title="Multiply Image Channel",

|

||||||

tags=["image", "saturation", "hsl"],

|

tags=[

|

||||||

|

"image",

|

||||||

|

"invert",

|

||||||

|

"scale",

|

||||||

|

"multiply",

|

||||||

|

"red",

|

||||||

|

"green",

|

||||||

|

"blue",

|

||||||

|

"alpha",

|

||||||

|

"cyan",

|

||||||

|

"magenta",

|

||||||

|

"yellow",

|

||||||

|

"black",

|

||||||

|

"hue",

|

||||||

|

"saturation",

|

||||||

|

"luminosity",

|

||||||

|

"value",

|

||||||

|

],

|

||||||

category="image",

|

category="image",

|

||||||

version="1.0.0",

|

version="1.0.0",

|

||||||

)

|

)

|

||||||

class ImageSaturationAdjustmentInvocation(BaseInvocation):

|

class ImageChannelMultiplyInvocation(BaseInvocation):

|

||||||

"""Adjusts the Saturation of an image."""

|

"""Scale a specific color channel of an image."""

|

||||||

|

|

||||||

image: ImageField = InputField(description="The image to adjust")

|

image: ImageField = InputField(description="The image to adjust")

|

||||||

saturation: float = InputField(default=1.0, ge=0, le=1, description="The factor by which to adjust the saturation")

|

channel: COLOR_CHANNELS = InputField(description="Which channel to adjust")

|

||||||

|

scale: float = InputField(default=1.0, ge=0.0, description="The amount to scale the channel by.")

|

||||||

|

invert_channel: bool = InputField(default=False, description="Invert the channel after scaling")

|

||||||

|

|

||||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||||

pil_image = context.services.images.get_pil_image(self.image.image_name)

|

pil_image = context.services.images.get_pil_image(self.image.image_name)

|

||||||

|

|

||||||

# Convert PIL image to OpenCV format (numpy array), note color channel

|

# extract the channel and mode from the input and reference tuple

|

||||||

# ordering is changed from RGB to BGR

|

mode = CHANNEL_FORMATS[self.channel][0]

|

||||||

image = numpy.array(pil_image.convert("RGB"))[:, :, ::-1]

|

channel_number = CHANNEL_FORMATS[self.channel][1]

|

||||||

|

|

||||||

# Convert image to HSV color space

|

# Convert PIL image to new format

|

||||||

hsv_image = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

|

converted_image = numpy.array(pil_image.convert(mode)).astype(float)

|

||||||

|

image_channel = converted_image[:, :, channel_number]

|

||||||

|

|

||||||

# Adjust the saturation

|

# Adjust the value, clipping to 0..255

|

||||||

hsv_image[:, :, 1] = numpy.clip(hsv_image[:, :, 1] * self.saturation, 0, 255)

|

image_channel = numpy.clip(image_channel * self.scale, 0, 255)

|

||||||

|

|

||||||

# Convert image back to BGR color space

|

# Invert the channel if requested

|

||||||

image = cv2.cvtColor(hsv_image, cv2.COLOR_HSV2BGR)

|

if self.invert_channel:

|

||||||

|

image_channel = 255 - image_channel

|

||||||

|

|

||||||

# Convert back to PIL format and to original color mode

|

# Put the channel back into the image

|

||||||

pil_image = Image.fromarray(image[:, :, ::-1], "RGB").convert("RGBA")

|

converted_image[:, :, channel_number] = image_channel

|

||||||

|

|

||||||

|

# Convert back to RGBA format and output

|

||||||

|

pil_image = Image.fromarray(converted_image.astype(numpy.uint8), mode=mode).convert("RGBA")

|

||||||

|

|

||||||

image_dto = context.services.images.create(

|

image_dto = context.services.images.create(

|

||||||

image=pil_image,

|

image=pil_image,

|

||||||

|

|||||||

@@ -8,19 +8,17 @@ from PIL import Image, ImageOps

|

|||||||

|

|

||||||

from invokeai.app.invocations.primitives import ColorField, ImageField, ImageOutput

|

from invokeai.app.invocations.primitives import ColorField, ImageField, ImageOutput

|

||||||

from invokeai.app.util.misc import SEED_MAX, get_random_seed

|

from invokeai.app.util.misc import SEED_MAX, get_random_seed

|

||||||

|

from invokeai.backend.image_util.cv2_inpaint import cv2_inpaint

|

||||||

from invokeai.backend.image_util.lama import LaMA

|

from invokeai.backend.image_util.lama import LaMA

|

||||||

from invokeai.backend.image_util.patchmatch import PatchMatch

|

from invokeai.backend.image_util.patchmatch import PatchMatch

|

||||||

|

|

||||||

from ..models.image import ImageCategory, ResourceOrigin

|

from ..models.image import ImageCategory, ResourceOrigin

|

||||||

from .baseinvocation import BaseInvocation, InputField, InvocationContext, invocation

|

from .baseinvocation import BaseInvocation, InputField, InvocationContext, invocation

|

||||||

|

from .image import PIL_RESAMPLING_MAP, PIL_RESAMPLING_MODES

|

||||||

|

|

||||||

|

|

||||||

def infill_methods() -> list[str]:

|

def infill_methods() -> list[str]:

|

||||||

methods = [

|

methods = ["tile", "solid", "lama", "cv2"]

|

||||||

"tile",

|

|

||||||

"solid",

|

|

||||||

"lama",

|

|

||||||

]

|

|

||||||

if PatchMatch.patchmatch_available():

|

if PatchMatch.patchmatch_available():

|

||||||

methods.insert(0, "patchmatch")

|

methods.insert(0, "patchmatch")

|

||||||

return methods

|

return methods

|

||||||

@@ -49,6 +47,10 @@ def infill_patchmatch(im: Image.Image) -> Image.Image:

|

|||||||

return im_patched

|

return im_patched

|

||||||

|

|

||||||

|

|

||||||

|

def infill_cv2(im: Image.Image) -> Image.Image:

|

||||||

|

return cv2_inpaint(im)

|

||||||

|

|

||||||

|

|

||||||

def get_tile_images(image: np.ndarray, width=8, height=8):

|

def get_tile_images(image: np.ndarray, width=8, height=8):

|

||||||

_nrows, _ncols, depth = image.shape

|

_nrows, _ncols, depth = image.shape

|

||||||

_strides = image.strides

|

_strides = image.strides

|

||||||

@@ -194,15 +196,35 @@ class InfillPatchMatchInvocation(BaseInvocation):

|

|||||||

"""Infills transparent areas of an image using the PatchMatch algorithm"""

|

"""Infills transparent areas of an image using the PatchMatch algorithm"""

|

||||||

|

|

||||||

image: ImageField = InputField(description="The image to infill")

|

image: ImageField = InputField(description="The image to infill")

|

||||||

|

downscale: float = InputField(default=2.0, gt=0, description="Run patchmatch on downscaled image to speedup infill")

|

||||||

|

resample_mode: PIL_RESAMPLING_MODES = InputField(default="bicubic", description="The resampling mode")

|

||||||

|

|

||||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||||

image = context.services.images.get_pil_image(self.image.image_name)

|

image = context.services.images.get_pil_image(self.image.image_name).convert("RGBA")

|

||||||

|

|

||||||

|

resample_mode = PIL_RESAMPLING_MAP[self.resample_mode]

|

||||||

|

|

||||||

|

infill_image = image.copy()

|

||||||

|

width = int(image.width / self.downscale)

|

||||||

|

height = int(image.height / self.downscale)

|

||||||

|

infill_image = infill_image.resize(

|

||||||

|

(width, height),

|

||||||

|

resample=resample_mode,

|

||||||

|

)

|

||||||

|

|

||||||

if PatchMatch.patchmatch_available():

|

if PatchMatch.patchmatch_available():

|

||||||

infilled = infill_patchmatch(image.copy())

|

infilled = infill_patchmatch(infill_image)

|

||||||

else:

|

else:

|

||||||

raise ValueError("PatchMatch is not available on this system")

|

raise ValueError("PatchMatch is not available on this system")

|

||||||

|

|

||||||

|

infilled = infilled.resize(

|

||||||

|

(image.width, image.height),

|

||||||

|

resample=resample_mode,

|

||||||

|

)

|

||||||

|

|

||||||

|

infilled.paste(image, (0, 0), mask=image.split()[-1])

|

||||||

|

# image.paste(infilled, (0, 0), mask=image.split()[-1])

|

||||||

|

|

||||||

image_dto = context.services.images.create(

|

image_dto = context.services.images.create(

|

||||||

image=infilled,

|

image=infilled,

|

||||||

image_origin=ResourceOrigin.INTERNAL,

|

image_origin=ResourceOrigin.INTERNAL,

|

||||||

@@ -245,3 +267,30 @@ class LaMaInfillInvocation(BaseInvocation):

|

|||||||

width=image_dto.width,

|

width=image_dto.width,

|

||||||

height=image_dto.height,

|

height=image_dto.height,

|

||||||

)

|

)

|

||||||

|

|

||||||

|

|

||||||

|

@invocation("infill_cv2", title="CV2 Infill", tags=["image", "inpaint"], category="inpaint")

|

||||||

|

class CV2InfillInvocation(BaseInvocation):

|

||||||

|

"""Infills transparent areas of an image using OpenCV Inpainting"""

|

||||||

|

|

||||||

|

image: ImageField = InputField(description="The image to infill")

|

||||||

|

|

||||||

|

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||||

|

image = context.services.images.get_pil_image(self.image.image_name)

|

||||||

|

|

||||||

|

infilled = infill_cv2(image.copy())

|

||||||

|

|

||||||

|

image_dto = context.services.images.create(

|

||||||

|

image=infilled,

|

||||||

|

image_origin=ResourceOrigin.INTERNAL,

|

||||||

|

image_category=ImageCategory.GENERAL,

|

||||||

|

node_id=self.id,

|

||||||

|

session_id=context.graph_execution_state_id,

|

||||||

|

is_intermediate=self.is_intermediate,

|

||||||

|

)

|

||||||

|

|

||||||

|

return ImageOutput(

|

||||||

|

image=ImageField(image_name=image_dto.image_name),

|

||||||

|

width=image_dto.width,

|

||||||

|

height=image_dto.height,

|

||||||

|

)

|

||||||

|

|||||||

New file: invokeai/backend/image_util/cv2_inpaint.py (20 lines)

@@ -0,0 +1,20 @@

|

|||||||

|

import cv2

|

||||||

|

import numpy as np

|

||||||

|

from PIL import Image

|

||||||

|

|

||||||

|

|

||||||

|

def cv2_inpaint(image: Image.Image) -> Image.Image:

|

||||||

|

# Prepare Image

|

||||||

|

image_array = np.array(image.convert("RGB"))

|

||||||

|

image_cv = cv2.cvtColor(image_array, cv2.COLOR_RGB2BGR)

|

||||||

|

|

||||||

|

# Prepare Mask From Alpha Channel

|

||||||

|

mask = image.split()[3].convert("RGB")

|

||||||

|

mask_array = np.array(mask)

|

||||||

|

mask_cv = cv2.cvtColor(mask_array, cv2.COLOR_BGR2GRAY)

|

||||||

|

mask_inv = cv2.bitwise_not(mask_cv)

|

||||||

|

|

||||||

|

# Inpaint Image

|

||||||

|

inpainted_result = cv2.inpaint(image_cv, mask_inv, 3, cv2.INPAINT_TELEA)

|

||||||

|

inpainted_image = Image.fromarray(cv2.cvtColor(inpainted_result, cv2.COLOR_BGR2RGB))

|

||||||

|

return inpainted_image

|

||||||
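The mask preparation in `cv2_inpaint` amounts to inverting the alpha channel, so transparent pixels become the non-zero region that `cv2.inpaint` is asked to fill. The pure-Python sketch below shows only that step on a nested list of alpha values; the function names are hypothetical. Note also that `image.split()[3]` presupposes an image with four bands such as RGBA, which is an observation from the code above rather than a tested claim.

```python
from typing import List


def inpaint_mask_from_alpha(alpha: List[List[int]]) -> List[List[int]]:
    """Invert 8-bit alpha values: fully transparent (0) becomes 255."""
    return [[255 - a for a in row] for row in alpha]


def pixels_to_fill(mask: List[List[int]]) -> int:
    """Count mask pixels that are non-zero, i.e. marked for inpainting."""
    return sum(1 for row in mask for m in row if m != 0)


# One opaque, one half-transparent, and two fully transparent pixels.
alpha = [
    [255, 128],
    [0, 0],
]
mask = inpaint_mask_from_alpha(alpha)
count = pixels_to_fill(mask)
```

Because any non-zero mask value marks a pixel for inpainting, partially transparent pixels are filled as well, not only fully transparent ones.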

@@ -5,6 +5,7 @@ import numpy as np

|

|||||||

import torch

|

import torch

|

||||||

from PIL import Image

|

from PIL import Image

|

||||||

|

|

||||||

|

import invokeai.backend.util.logging as logger

|

||||||

from invokeai.app.services.config import get_invokeai_config

|

from invokeai.app.services.config import get_invokeai_config

|

||||||

from invokeai.backend.util.devices import choose_torch_device

|

from invokeai.backend.util.devices import choose_torch_device

|

||||||

|

|

||||||

@@ -19,7 +20,7 @@ def norm_img(np_img):

|

|||||||

|

|

||||||

def load_jit_model(url_or_path, device):

|

def load_jit_model(url_or_path, device):

|

||||||

model_path = url_or_path

|

model_path = url_or_path

|

||||||

print(f"Loading model from: {model_path}")

|

logger.info(f"Loading model from: {model_path}")

|

||||||

model = torch.jit.load(model_path, map_location="cpu").to(device)

|

model = torch.jit.load(model_path, map_location="cpu").to(device)

|

||||||

model.eval()

|

model.eval()

|

||||||

return model

|

return model

|

||||||

@@ -52,5 +53,6 @@ class LaMA:

|

|||||||

|

|

||||||

del model

|

del model

|

||||||

gc.collect()

|

gc.collect()

|

||||||

|

torch.cuda.empty_cache()

|

||||||

|

|

||||||

return infilled_image

|

return infilled_image

|

||||||

|

|||||||

@@ -290,9 +290,20 @@ def download_realesrgan():

|

|||||||

download_with_progress_bar(model["url"], config.models_path / model["dest"], model["description"])

|

download_with_progress_bar(model["url"], config.models_path / model["dest"], model["description"])

|

||||||

|

|

||||||

|

|

||||||

|

# ---------------------------------------------

|

||||||

|

def download_lama():

|

||||||

|

logger.info("Installing lama infill model")

|

||||||

|

download_with_progress_bar(

|

||||||

|

"https://github.com/Sanster/models/releases/download/add_big_lama/big-lama.pt",

|

||||||

|

config.models_path / "core/misc/lama/lama.pt",

|

||||||

|

"lama infill model",

|

||||||

|

)

|

||||||

|

|

||||||

|

|

||||||

# ---------------------------------------------

|

# ---------------------------------------------

|

||||||

def download_support_models():

|

def download_support_models():

|

||||||

download_realesrgan()

|

download_realesrgan()

|

||||||

|

download_lama()

|

||||||

download_conversion_models()

|

download_conversion_models()

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@@ -511,6 +511,7 @@

|

|||||||

"maskBlur": "Blur",

|

"maskBlur": "Blur",

|

||||||

"maskBlurMethod": "Blur Method",

|

"maskBlurMethod": "Blur Method",

|

||||||

"coherencePassHeader": "Coherence Pass",

|

"coherencePassHeader": "Coherence Pass",

|

||||||

|

"coherenceMode": "Mode",

|

||||||

"coherenceSteps": "Steps",

|

"coherenceSteps": "Steps",

|

||||||

"coherenceStrength": "Strength",

|

"coherenceStrength": "Strength",

|

||||||

"seamLowThreshold": "Low",

|

"seamLowThreshold": "Low",

|

||||||

@@ -520,6 +521,7 @@

|

|||||||

"scaledHeight": "Scaled H",

|

"scaledHeight": "Scaled H",

|

||||||

"infillMethod": "Infill Method",

|

"infillMethod": "Infill Method",

|

||||||

"tileSize": "Tile Size",

|

"tileSize": "Tile Size",

|

||||||

|

"patchmatchDownScaleSize": "Downscale",

|

||||||

"boundingBoxHeader": "Bounding Box",

|

"boundingBoxHeader": "Bounding Box",

|

||||||

"seamCorrectionHeader": "Seam Correction",

|

"seamCorrectionHeader": "Seam Correction",

|

||||||

"infillScalingHeader": "Infill and Scaling",

|

"infillScalingHeader": "Infill and Scaling",

|

||||||

|

|||||||

@@ -31,52 +31,54 @@ const selector = createSelector(

|

|||||||

reasons.push('No initial image selected');

|

reasons.push('No initial image selected');

|

||||||

}

|

}

|

||||||

|

|

||||||

if (activeTabName === 'nodes' && nodes.shouldValidateGraph) {

|

if (activeTabName === 'nodes') {

|

||||||

if (!nodes.nodes.length) {

|

if (nodes.shouldValidateGraph) {

|

||||||

reasons.push('No nodes in graph');

|

if (!nodes.nodes.length) {

|

||||||

}

|

reasons.push('No nodes in graph');

|

||||||

|

|

||||||

nodes.nodes.forEach((node) => {

|

|

||||||

if (!isInvocationNode(node)) {

|

|

||||||

return;

|

|

||||||

}

|

}

|

||||||

|

|

||||||

const nodeTemplate = nodes.nodeTemplates[node.data.type];

|

nodes.nodes.forEach((node) => {

|

||||||

|

if (!isInvocationNode(node)) {

|

||||||

if (!nodeTemplate) {

|

|

||||||

// Node type not found

|

|

||||||

reasons.push('Missing node template');

|

|

||||||

return;

|

|

||||||

}

|

|

||||||

|

|

||||||

const connectedEdges = getConnectedEdges([node], nodes.edges);

|

|

||||||

|

|

||||||

forEach(node.data.inputs, (field) => {

|

|

||||||

const fieldTemplate = nodeTemplate.inputs[field.name];

|

|

||||||

const hasConnection = connectedEdges.some(

|

|

||||||

(edge) =>

|

|

||||||

edge.target === node.id && edge.targetHandle === field.name

|

|

||||||

);

|

|

||||||

|

|

||||||

if (!fieldTemplate) {

|

|

||||||

reasons.push('Missing field template');

|

|

||||||

return;

|

return;

|

||||||

}

|

}

|

||||||

|

|

||||||

if (

|

const nodeTemplate = nodes.nodeTemplates[node.data.type];

|

||||||

fieldTemplate.required &&

|

|

||||||

field.value === undefined &&

|

if (!nodeTemplate) {

|

||||||

!hasConnection

|

// Node type not found

|

||||||

) {

|

reasons.push('Missing node template');

|

||||||

reasons.push(

|

return;

|

||||||

`${node.data.label || nodeTemplate.title} -> ${

|

}

|

||||||

field.label || fieldTemplate.title

|

|

||||||

} missing input`

|

const connectedEdges = getConnectedEdges([node], nodes.edges);

|

||||||

|

|

||||||

|

forEach(node.data.inputs, (field) => {

|

||||||

|

const fieldTemplate = nodeTemplate.inputs[field.name];

|

||||||

|

const hasConnection = connectedEdges.some(

|

||||||

|

(edge) =>

|

||||||

|

edge.target === node.id && edge.targetHandle === field.name

|

||||||

);

|

);

|

||||||

return;

|

|

||||||

}

|

if (!fieldTemplate) {

|

||||||

|

reasons.push('Missing field template');

|

||||||

|

return;

|

||||||

|

}

|

||||||

|

|

||||||

|

if (

|

||||||

|

fieldTemplate.required &&

|

||||||

|

field.value === undefined &&

|

||||||

|

!hasConnection

|

||||||

|

) {

|

||||||

|

reasons.push(

|

||||||

|

`${node.data.label || nodeTemplate.title} -> ${

|

||||||

|

field.label || fieldTemplate.title

|

||||||

|

} missing input`

|

||||||

|

);

|

||||||

|

return;

|

||||||

|

}

|

||||||

|

});

|

||||||

});

|

});

|

||||||

});

|

}

|

||||||

} else {

|

} else {

|

||||||

if (!model) {

|

if (!model) {

|

||||||

reasons.push('No model selected');

|

reasons.push('No model selected');

|

||||||

|
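The restructured readiness check in this hunk can be sketched in isolation. The following is a minimal illustration, not the project's selector: `SketchNodesState`, `collectBlockingReasons`, and the `modelSelected` flag are hypothetical stand-ins for the Redux state the real code reads. It shows the behavioural difference the nesting appears to introduce: on the nodes tab with graph validation switched off, the check no longer falls through to the model branch.

```typescript
// Hypothetical, simplified shapes; the real selector reads these from app state.
interface SketchNodesState {
  shouldValidateGraph: boolean;
  nodeCount: number;
}

function collectBlockingReasons(
  activeTabName: string,
  nodes: SketchNodesState,
  modelSelected: boolean
): string[] {
  const reasons: string[] = [];
  if (activeTabName === 'nodes') {
    // Validation is opt-in, but the nodes tab never reaches the model check below.
    if (nodes.shouldValidateGraph) {
      if (nodes.nodeCount === 0) {
        reasons.push('No nodes in graph');
      }
    }
  } else {
    if (!modelSelected) {
      reasons.push('No model selected');
    }
  }
  return reasons;
}
```

With the previous combined condition (`activeTabName === 'nodes' && nodes.shouldValidateGraph`), a nodes-tab session with validation disabled would have taken the `else` branch and could have reported a missing model.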

|||||||

@ -118,7 +118,11 @@ const IAICanvasToolChooserOptions = () => {

|

|||||||

useHotkeys(

|

useHotkeys(

|

||||||

['BracketLeft'],

|

['BracketLeft'],

|

||||||

() => {

|

() => {

|

||||||

dispatch(setBrushSize(Math.max(brushSize - 5, 5)));

|

if (brushSize - 5 <= 5) {

|

||||||

|

dispatch(setBrushSize(Math.max(brushSize - 1, 1)));

|

||||||

|

} else {

|

||||||

|

dispatch(setBrushSize(Math.max(brushSize - 5, 1)));

|

||||||

|

}

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

enabled: () => !isStaging,

|

enabled: () => !isStaging,

|

||||||

|
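The new `BracketLeft` handler can be read as a pure function of the current brush size. A small sketch, assuming a hypothetical helper name `nextBrushSizeDown` (the real code dispatches `setBrushSize` directly inside the hotkey callback):

```typescript
// Mirrors the new hotkey body: step by 1 near the bottom of the range,
// otherwise step by 5, and never go below 1.
function nextBrushSizeDown(brushSize: number): number {
  if (brushSize - 5 <= 5) {
    return Math.max(brushSize - 1, 1);
  }
  return Math.max(brushSize - 5, 1);
}
```

Compared with the old `Math.max(brushSize - 5, 5)`, this lets the brush shrink below 5 in single-unit steps, so sizes from 10 downward are adjusted one unit at a time.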

|||||||

@ -10,7 +10,8 @@ import {

|

|||||||

CANVAS_OUTPUT,

|

CANVAS_OUTPUT,

|

||||||

INPAINT_IMAGE_RESIZE_UP,

|

INPAINT_IMAGE_RESIZE_UP,

|

||||||

LATENTS_TO_IMAGE,

|

LATENTS_TO_IMAGE,

|

||||||

MASK_BLUR,

|

MASK_COMBINE,

|

||||||

|

MASK_RESIZE_UP,

|

||||||

METADATA_ACCUMULATOR,

|

METADATA_ACCUMULATOR,

|

||||||

SDXL_CANVAS_IMAGE_TO_IMAGE_GRAPH,

|

SDXL_CANVAS_IMAGE_TO_IMAGE_GRAPH,

|

||||||

SDXL_CANVAS_INPAINT_GRAPH,

|

SDXL_CANVAS_INPAINT_GRAPH,

|

||||||

@ -46,6 +47,8 @@ export const addSDXLRefinerToGraph = (

|

|||||||

const { seamlessXAxis, seamlessYAxis, vaePrecision } = state.generation;

|

const { seamlessXAxis, seamlessYAxis, vaePrecision } = state.generation;

|

||||||

const { boundingBoxScaleMethod } = state.canvas;

|

const { boundingBoxScaleMethod } = state.canvas;

|

||||||

|

|

||||||

|

const fp32 = vaePrecision === 'fp32';

|

||||||

|

|

||||||

const isUsingScaledDimensions = ['auto', 'manual'].includes(

|

const isUsingScaledDimensions = ['auto', 'manual'].includes(

|

||||||

boundingBoxScaleMethod

|

boundingBoxScaleMethod

|

||||||

);

|

);

|

||||||

@ -231,7 +234,7 @@ export const addSDXLRefinerToGraph = (

|

|||||||

type: 'create_denoise_mask',

|

type: 'create_denoise_mask',

|

||||||

id: SDXL_REFINER_INPAINT_CREATE_MASK,

|

id: SDXL_REFINER_INPAINT_CREATE_MASK,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

fp32,

|

||||||

};

|

};

|

||||||

|

|

||||||

if (isUsingScaledDimensions) {

|

if (isUsingScaledDimensions) {

|

||||||

@ -257,7 +260,7 @@ export const addSDXLRefinerToGraph = (

|

|||||||

graph.edges.push(

|

graph.edges.push(

|

||||||

{

|

{

|

||||||

source: {

|

source: {

|

||||||

node_id: MASK_BLUR,

|

node_id: isUsingScaledDimensions ? MASK_RESIZE_UP : MASK_COMBINE,

|

||||||

field: 'image',

|

field: 'image',

|

||||||

},

|

},

|

||||||

destination: {

|

destination: {

|

||||||

|
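The source-node change in this hunk (replacing `MASK_BLUR` with a choice between `MASK_RESIZE_UP` and `MASK_COMBINE`) can be sketched as follows. The string values of the node ids and the helper names are assumptions made for illustration; the real constants are imported from the graph builder's constants module, and the destination of the edge is not shown in this hunk, so it is left out here.

```typescript
// Hypothetical node id values, for illustration only.
const SKETCH_MASK_RESIZE_UP = 'mask_resize_up';
const SKETCH_MASK_COMBINE = 'mask_combine';

interface SketchEdgeEnd {
  node_id: string;
  field: string;
}

// Same test the diff writes as ['auto', 'manual'].includes(boundingBoxScaleMethod).
function sketchIsUsingScaledDimensions(boundingBoxScaleMethod: string): boolean {
  return boundingBoxScaleMethod === 'auto' || boundingBoxScaleMethod === 'manual';
}

// The mask comes from the upscaled mask when scaling is active,
// and from the combined mask otherwise.
function sketchMaskSource(boundingBoxScaleMethod: string): SketchEdgeEnd {
  return {
    node_id: sketchIsUsingScaledDimensions(boundingBoxScaleMethod)
      ? SKETCH_MASK_RESIZE_UP
      : SKETCH_MASK_COMBINE,
    field: 'image',
  };
}
```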

|||||||

@ -2,6 +2,7 @@ import { RootState } from 'app/store/store';

|

|||||||

import { NonNullableGraph } from 'features/nodes/types/types';

|

import { NonNullableGraph } from 'features/nodes/types/types';

|

||||||

import { MetadataAccumulatorInvocation } from 'services/api/types';

|

import { MetadataAccumulatorInvocation } from 'services/api/types';

|

||||||

import {

|

import {

|

||||||

|

CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

CANVAS_IMAGE_TO_IMAGE_GRAPH,

|

CANVAS_IMAGE_TO_IMAGE_GRAPH,

|

||||||

CANVAS_INPAINT_GRAPH,

|

CANVAS_INPAINT_GRAPH,

|

||||||

CANVAS_OUTPAINT_GRAPH,

|

CANVAS_OUTPAINT_GRAPH,

|

||||||

@ -31,7 +32,7 @@ export const addVAEToGraph = (

|

|||||||

graph: NonNullableGraph,

|

graph: NonNullableGraph,

|

||||||

modelLoaderNodeId: string = MAIN_MODEL_LOADER

|

modelLoaderNodeId: string = MAIN_MODEL_LOADER

|

||||||

): void => {

|

): void => {

|

||||||

const { vae } = state.generation;

|

const { vae, canvasCoherenceMode } = state.generation;

|

||||||

const { boundingBoxScaleMethod } = state.canvas;

|

const { boundingBoxScaleMethod } = state.canvas;

|

||||||

const { shouldUseSDXLRefiner } = state.sdxl;

|

const { shouldUseSDXLRefiner } = state.sdxl;

|

||||||

|

|

||||||

@ -146,6 +147,20 @@ export const addVAEToGraph = (

|

|||||||

},

|

},

|

||||||

}

|

}

|

||||||

);

|

);

|

||||||

|

|

||||||

|

// Handle Coherence Mode

|

||||||

|

if (canvasCoherenceMode !== 'unmasked') {

|

||||||

|

graph.edges.push({

|

||||||

|

source: {

|

||||||

|

node_id: isAutoVae ? modelLoaderNodeId : VAE_LOADER,

|

||||||

|

field: isAutoVae && isOnnxModel ? 'vae_decoder' : 'vae',

|

||||||

|

},

|

||||||

|

destination: {

|

||||||

|

node_id: CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

|

field: 'vae',

|

||||||

|

},

|

||||||

|

});

|

||||||

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

if (shouldUseSDXLRefiner) {

|

if (shouldUseSDXLRefiner) {

|

||||||

|
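The added "Handle Coherence Mode" block wires a VAE into the coherence mask node only when a mask is actually built. A minimal sketch of that decision, assuming hypothetical id values for `VAE_LOADER` and `CANVAS_COHERENCE_INPAINT_CREATE_MASK` and a hypothetical helper name; the mode names `'unmasked'`, `'mask'`, and `'edge'` are the ones that appear in the diff:

```typescript
type SketchCoherenceMode = 'unmasked' | 'mask' | 'edge';

// Hypothetical id values; the real constants live in the project's constants module.
const SKETCH_VAE_LOADER = 'vae_loader';
const SKETCH_COHERENCE_CREATE_MASK = 'canvas_coherence_inpaint_create_mask';

interface SketchVaeEdge {
  source: { node_id: string; field: string };
  destination: { node_id: string; field: string };
}

function sketchCoherenceVaeEdge(
  mode: SketchCoherenceMode,
  isAutoVae: boolean,
  isOnnxModel: boolean,
  modelLoaderNodeId: string
): SketchVaeEdge | null {
  // In 'unmasked' mode no coherence mask node is created, so no VAE edge is needed.
  if (mode === 'unmasked') {
    return null;
  }
  return {
    source: {
      // An automatic VAE comes from the model loader; an explicit one from the VAE loader.
      node_id: isAutoVae ? modelLoaderNodeId : SKETCH_VAE_LOADER,
      // In the diff, the 'vae_decoder' output is used only for an automatic VAE on an ONNX model.
      field: isAutoVae && isOnnxModel ? 'vae_decoder' : 'vae',
    },
    destination: { node_id: SKETCH_COHERENCE_CREATE_MASK, field: 'vae' },
  };
}
```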

|||||||

@ -59,6 +59,8 @@ export const buildCanvasImageToImageGraph = (

|

|||||||

shouldAutoSave,

|

shouldAutoSave,

|

||||||

} = state.canvas;

|

} = state.canvas;

|

||||||

|

|

||||||

|

const fp32 = vaePrecision === 'fp32';

|

||||||

|

|

||||||

const isUsingScaledDimensions = ['auto', 'manual'].includes(

|

const isUsingScaledDimensions = ['auto', 'manual'].includes(

|

||||||

boundingBoxScaleMethod

|

boundingBoxScaleMethod

|

||||||

);

|

);

|

||||||

@ -245,7 +247,7 @@ export const buildCanvasImageToImageGraph = (

|

|||||||

id: LATENTS_TO_IMAGE,

|

id: LATENTS_TO_IMAGE,

|

||||||

type: 'l2i',

|

type: 'l2i',

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

fp32,

|

||||||

};

|

};

|

||||||

graph.nodes[CANVAS_OUTPUT] = {

|

graph.nodes[CANVAS_OUTPUT] = {

|

||||||

id: CANVAS_OUTPUT,

|

id: CANVAS_OUTPUT,

|

||||||

@ -292,7 +294,7 @@ export const buildCanvasImageToImageGraph = (

|

|||||||

type: 'l2i',

|

type: 'l2i',

|

||||||

id: CANVAS_OUTPUT,

|

id: CANVAS_OUTPUT,

|

||||||

is_intermediate: !shouldAutoSave,

|

is_intermediate: !shouldAutoSave,

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

fp32,

|

||||||

};

|

};

|

||||||

|

|

||||||

(graph.nodes[IMAGE_TO_LATENTS] as ImageToLatentsInvocation).image =

|

(graph.nodes[IMAGE_TO_LATENTS] as ImageToLatentsInvocation).image =

|

||||||

|

|||||||

@ -6,6 +6,7 @@ import {

|

|||||||

ImageBlurInvocation,

|

ImageBlurInvocation,

|

||||||

ImageDTO,

|

ImageDTO,

|

||||||

ImageToLatentsInvocation,

|

ImageToLatentsInvocation,

|

||||||

|

MaskEdgeInvocation,

|

||||||

NoiseInvocation,

|

NoiseInvocation,

|

||||||

RandomIntInvocation,

|

RandomIntInvocation,

|

||||||

RangeOfSizeInvocation,

|

RangeOfSizeInvocation,

|

||||||

@ -18,6 +19,8 @@ import { addVAEToGraph } from './addVAEToGraph';

|

|||||||

import { addWatermarkerToGraph } from './addWatermarkerToGraph';

|

import { addWatermarkerToGraph } from './addWatermarkerToGraph';

|

||||||

import {

|

import {

|

||||||

CANVAS_COHERENCE_DENOISE_LATENTS,

|

CANVAS_COHERENCE_DENOISE_LATENTS,

|

||||||

|

CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

|

CANVAS_COHERENCE_MASK_EDGE,

|

||||||

CANVAS_COHERENCE_NOISE,

|

CANVAS_COHERENCE_NOISE,

|

||||||

CANVAS_COHERENCE_NOISE_INCREMENT,

|

CANVAS_COHERENCE_NOISE_INCREMENT,

|

||||||

CANVAS_INPAINT_GRAPH,

|

CANVAS_INPAINT_GRAPH,

|

||||||

@ -67,6 +70,7 @@ export const buildCanvasInpaintGraph = (

|

|||||||

shouldUseCpuNoise,

|

shouldUseCpuNoise,

|

||||||

maskBlur,

|

maskBlur,

|

||||||

maskBlurMethod,

|

maskBlurMethod,

|

||||||

|

canvasCoherenceMode,

|

||||||

canvasCoherenceSteps,

|

canvasCoherenceSteps,

|

||||||

canvasCoherenceStrength,

|

canvasCoherenceStrength,

|

||||||

clipSkip,

|

clipSkip,

|

||||||

@ -89,6 +93,12 @@ export const buildCanvasInpaintGraph = (

|

|||||||

shouldAutoSave,

|

shouldAutoSave,

|

||||||

} = state.canvas;

|

} = state.canvas;

|

||||||

|

|

||||||

|

const fp32 = vaePrecision === 'fp32';

|

||||||

|

|

||||||

|

const isUsingScaledDimensions = ['auto', 'manual'].includes(

|

||||||

|

boundingBoxScaleMethod

|

||||||

|

);

|

||||||

|

|

||||||

let modelLoaderNodeId = MAIN_MODEL_LOADER;

|

let modelLoaderNodeId = MAIN_MODEL_LOADER;

|

||||||

|

|

||||||

const use_cpu = shouldUseNoiseSettings

|

const use_cpu = shouldUseNoiseSettings

|

||||||

@ -133,13 +143,7 @@ export const buildCanvasInpaintGraph = (

|

|||||||

type: 'i2l',

|

type: 'i2l',

|

||||||

id: INPAINT_IMAGE,

|

id: INPAINT_IMAGE,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

fp32,

|

||||||

},

|

|

||||||

[INPAINT_CREATE_MASK]: {

|

|

||||||

type: 'create_denoise_mask',

|

|

||||||

id: INPAINT_CREATE_MASK,

|

|

||||||

is_intermediate: true,

|

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

|

||||||

},

|

},

|

||||||

[NOISE]: {

|

[NOISE]: {

|

||||||

type: 'noise',

|

type: 'noise',

|

||||||

@ -147,6 +151,12 @@ export const buildCanvasInpaintGraph = (

|

|||||||

use_cpu,

|

use_cpu,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

},

|

},

|

||||||

|

[INPAINT_CREATE_MASK]: {

|

||||||

|

type: 'create_denoise_mask',

|

||||||

|

id: INPAINT_CREATE_MASK,

|

||||||

|

is_intermediate: true,

|

||||||

|

fp32,

|

||||||

|

},

|

||||||

[DENOISE_LATENTS]: {

|

[DENOISE_LATENTS]: {

|

||||||

type: 'denoise_latents',

|

type: 'denoise_latents',

|

||||||

id: DENOISE_LATENTS,

|

id: DENOISE_LATENTS,

|

||||||

@ -171,7 +181,7 @@ export const buildCanvasInpaintGraph = (

|

|||||||

},

|

},

|

||||||

[CANVAS_COHERENCE_DENOISE_LATENTS]: {

|

[CANVAS_COHERENCE_DENOISE_LATENTS]: {

|

||||||

type: 'denoise_latents',

|

type: 'denoise_latents',

|

||||||

id: DENOISE_LATENTS,

|

id: CANVAS_COHERENCE_DENOISE_LATENTS,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

steps: canvasCoherenceSteps,

|

steps: canvasCoherenceSteps,

|

||||||

cfg_scale: cfg_scale,

|

cfg_scale: cfg_scale,

|

||||||

@ -183,7 +193,7 @@ export const buildCanvasInpaintGraph = (

|

|||||||

type: 'l2i',

|

type: 'l2i',

|

||||||

id: LATENTS_TO_IMAGE,

|

id: LATENTS_TO_IMAGE,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

fp32,

|

||||||

},

|

},

|

||||||

[CANVAS_OUTPUT]: {

|

[CANVAS_OUTPUT]: {

|

||||||

type: 'color_correct',

|

type: 'color_correct',

|

||||||

@ -418,7 +428,7 @@ export const buildCanvasInpaintGraph = (

|

|||||||

};

|

};

|

||||||

|

|

||||||

// Handle Scale Before Processing

|

// Handle Scale Before Processing

|

||||||

if (['auto', 'manual'].includes(boundingBoxScaleMethod)) {

|

if (isUsingScaledDimensions) {

|

||||||

const scaledWidth: number = scaledBoundingBoxDimensions.width;

|

const scaledWidth: number = scaledBoundingBoxDimensions.width;

|

||||||

const scaledHeight: number = scaledBoundingBoxDimensions.height;

|

const scaledHeight: number = scaledBoundingBoxDimensions.height;

|

||||||

|

|

||||||

@ -581,6 +591,116 @@ export const buildCanvasInpaintGraph = (

|

|||||||

);

|

);

|

||||||

}

|

}

|

||||||

|

|

||||||

|

// Handle Coherence Mode

|

||||||

|

if (canvasCoherenceMode !== 'unmasked') {

|

||||||

|

// Create Mask If Coherence Mode Is Not Full

|

||||||

|

graph.nodes[CANVAS_COHERENCE_INPAINT_CREATE_MASK] = {

|

||||||

|

type: 'create_denoise_mask',

|

||||||

|

id: CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

|

is_intermediate: true,

|

||||||

|

fp32,

|

||||||

|

};

|

||||||

|

|

||||||

|

// Handle Image Input For Mask Creation

|

||||||

|

if (isUsingScaledDimensions) {

|

||||||

|

graph.edges.push({

|

||||||

|

source: {

|

||||||

|

node_id: INPAINT_IMAGE_RESIZE_UP,

|

||||||

|

field: 'image',

|

||||||

|

},

|

||||||

|

destination: {

|

||||||

|

node_id: CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

|

field: 'image',

|

||||||

|

},

|

||||||

|

});

|

||||||

|

} else {

|

||||||

|

graph.nodes[CANVAS_COHERENCE_INPAINT_CREATE_MASK] = {

|

||||||

|

...(graph.nodes[

|

||||||

|

CANVAS_COHERENCE_INPAINT_CREATE_MASK

|

||||||

|

] as CreateDenoiseMaskInvocation),

|

||||||

|

image: canvasInitImage,

|

||||||

|

};

|

||||||

|

}

|

||||||

|

|

||||||

|

// Create Mask If Coherence Mode Is Mask

|

||||||

|

if (canvasCoherenceMode === 'mask') {

|

||||||

|

if (isUsingScaledDimensions) {

|

||||||

|

graph.edges.push({

|

||||||

|

source: {

|

||||||

|

node_id: MASK_RESIZE_UP,

|

||||||

|

field: 'image',

|

||||||

|

},

|

||||||

|

destination: {

|

||||||

|

node_id: CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

|

field: 'mask',

|

||||||

|

},

|

||||||

|

});

|

||||||

|

} else {

|

||||||

|

graph.nodes[CANVAS_COHERENCE_INPAINT_CREATE_MASK] = {

|

||||||

|

...(graph.nodes[

|

||||||

|

CANVAS_COHERENCE_INPAINT_CREATE_MASK

|

||||||

|

] as CreateDenoiseMaskInvocation),

|

||||||

|

mask: canvasMaskImage,

|

||||||

|

};

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

// Create Mask Edge If Coherence Mode Is Edge

|

||||||

|

if (canvasCoherenceMode === 'edge') {

|

||||||

|

graph.nodes[CANVAS_COHERENCE_MASK_EDGE] = {

|

||||||

|

type: 'mask_edge',

|

||||||

|

id: CANVAS_COHERENCE_MASK_EDGE,

|

||||||

|

is_intermediate: true,

|

||||||

|

edge_blur: maskBlur,

|

||||||

|

edge_size: maskBlur * 2,

|

||||||

|

low_threshold: 100,

|

||||||

|

high_threshold: 200,

|

||||||

|

};

|

||||||

|

|

||||||

|

// Handle Scaled Dimensions For Mask Edge

|

||||||

|

if (isUsingScaledDimensions) {

|

||||||

|

graph.edges.push({

|

||||||

|

source: {

|

||||||

|

node_id: MASK_RESIZE_UP,

|

||||||

|

field: 'image',

|

||||||

|

},

|

||||||

|

destination: {

|

||||||

|

node_id: CANVAS_COHERENCE_MASK_EDGE,

|

||||||

|

field: 'image',

|

||||||

|

},

|

||||||

|

});

|

||||||

|

} else {

|

||||||

|

graph.nodes[CANVAS_COHERENCE_MASK_EDGE] = {

|

||||||

|

...(graph.nodes[CANVAS_COHERENCE_MASK_EDGE] as MaskEdgeInvocation),

|

||||||

|

image: canvasMaskImage,

|

||||||

|

};

|

||||||

|

}

|

||||||

|

|

||||||

|

graph.edges.push({

|

||||||

|

source: {

|

||||||

|

node_id: CANVAS_COHERENCE_MASK_EDGE,

|

||||||

|

field: 'image',

|

||||||

|

},

|

||||||

|

destination: {

|

||||||

|

node_id: CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

|

field: 'mask',

|

||||||

|

},

|

||||||

|

});

|

||||||

|

}

|

||||||

|

|

||||||

|

// Plug Denoise Mask To Coherence Denoise Latents

|

||||||

|

graph.edges.push({

|

||||||

|

source: {

|

||||||

|

node_id: CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

|

field: 'denoise_mask',

|

||||||

|

},

|

||||||

|

destination: {

|

||||||

|

node_id: CANVAS_COHERENCE_DENOISE_LATENTS,

|

||||||

|

field: 'denoise_mask',

|

||||||

|

},

|

||||||

|

});

|

||||||

|

}

|

||||||

|

|

||||||

// Handle Seed

|

// Handle Seed

|

||||||

if (shouldRandomizeSeed) {

|

if (shouldRandomizeSeed) {

|

||||||

// Random int node to generate the starting seed

|

// Random int node to generate the starting seed

|

||||||

|
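The coherence-mode branch added to `buildCanvasInpaintGraph` can be summarised with a small sketch. It is an illustration under assumptions, not the project's code: the helper names are hypothetical, and only the node types and the `mask_edge` parameters visible in the hunk are reproduced.

```typescript
type InpaintCoherenceMode = 'unmasked' | 'mask' | 'edge';

interface SketchMaskEdgeNode {
  type: 'mask_edge';
  is_intermediate: boolean;
  edge_blur: number;
  edge_size: number;
  low_threshold: number;
  high_threshold: number;
}

// The edge node derives both of its size parameters from the mask blur setting;
// the thresholds are the fixed values shown in the diff.
function sketchMaskEdgeNode(maskBlur: number): SketchMaskEdgeNode {
  return {
    type: 'mask_edge',
    is_intermediate: true,
    edge_blur: maskBlur,
    edge_size: maskBlur * 2,
    low_threshold: 100,
    high_threshold: 200,
  };
}

// Which extra node types each mode adds to the graph.
function sketchCoherenceNodeTypes(mode: InpaintCoherenceMode): string[] {
  if (mode === 'unmasked') {
    return []; // the coherence pass runs without a denoise mask
  }
  const types = ['create_denoise_mask'];
  if (mode === 'edge') {
    types.push('mask_edge'); // the edge image then feeds the mask input
  }
  return types;
}
```

In `'mask'` mode the mask input comes from `MASK_RESIZE_UP` when scaled dimensions are in use and from the canvas mask image otherwise; in `'edge'` mode the same choice feeds the `mask_edge` node instead, whose output becomes the mask.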

|||||||

@ -2,7 +2,6 @@ import { logger } from 'app/logging/logger';

|

|||||||

import { RootState } from 'app/store/store';

|

import { RootState } from 'app/store/store';

|

||||||

import { NonNullableGraph } from 'features/nodes/types/types';

|

import { NonNullableGraph } from 'features/nodes/types/types';

|

||||||

import {

|

import {

|

||||||

ImageBlurInvocation,

|

|

||||||

ImageDTO,

|

ImageDTO,

|

||||||

ImageToLatentsInvocation,

|

ImageToLatentsInvocation,

|

||||||

InfillPatchMatchInvocation,

|

InfillPatchMatchInvocation,

|

||||||

@ -19,6 +18,8 @@ import { addVAEToGraph } from './addVAEToGraph';

|

|||||||

import { addWatermarkerToGraph } from './addWatermarkerToGraph';

|

import { addWatermarkerToGraph } from './addWatermarkerToGraph';

|

||||||

import {

|

import {

|

||||||

CANVAS_COHERENCE_DENOISE_LATENTS,

|

CANVAS_COHERENCE_DENOISE_LATENTS,

|

||||||

|

CANVAS_COHERENCE_INPAINT_CREATE_MASK,

|

||||||

|

CANVAS_COHERENCE_MASK_EDGE,

|

||||||

CANVAS_COHERENCE_NOISE,

|

CANVAS_COHERENCE_NOISE,

|

||||||

CANVAS_COHERENCE_NOISE_INCREMENT,

|

CANVAS_COHERENCE_NOISE_INCREMENT,

|

||||||

CANVAS_OUTPAINT_GRAPH,

|

CANVAS_OUTPAINT_GRAPH,

|

||||||

@ -34,7 +35,6 @@ import {

|

|||||||

ITERATE,

|

ITERATE,

|

||||||

LATENTS_TO_IMAGE,

|

LATENTS_TO_IMAGE,

|

||||||

MAIN_MODEL_LOADER,

|

MAIN_MODEL_LOADER,

|

||||||

MASK_BLUR,

|

|

||||||

MASK_COMBINE,

|

MASK_COMBINE,

|

||||||

MASK_FROM_ALPHA,

|

MASK_FROM_ALPHA,

|

||||||

MASK_RESIZE_DOWN,

|

MASK_RESIZE_DOWN,

|

||||||

@ -71,10 +71,11 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

shouldUseNoiseSettings,

|

shouldUseNoiseSettings,

|

||||||

shouldUseCpuNoise,

|

shouldUseCpuNoise,

|

||||||

maskBlur,

|

maskBlur,

|

||||||

maskBlurMethod,

|

canvasCoherenceMode,

|

||||||

canvasCoherenceSteps,

|

canvasCoherenceSteps,

|

||||||

canvasCoherenceStrength,

|

canvasCoherenceStrength,

|

||||||

tileSize,

|

infillTileSize,

|

||||||

|

infillPatchmatchDownscaleSize,

|

||||||

infillMethod,

|

infillMethod,

|

||||||

clipSkip,

|

clipSkip,

|

||||||

seamlessXAxis,

|

seamlessXAxis,

|

||||||

@ -96,6 +97,12 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

shouldAutoSave,

|

shouldAutoSave,

|

||||||

} = state.canvas;

|

} = state.canvas;

|

||||||

|

|

||||||

|

const fp32 = vaePrecision === 'fp32';

|

||||||

|

|

||||||

|

const isUsingScaledDimensions = ['auto', 'manual'].includes(

|

||||||

|

boundingBoxScaleMethod

|

||||||

|

);

|

||||||

|

|

||||||

let modelLoaderNodeId = MAIN_MODEL_LOADER;

|

let modelLoaderNodeId = MAIN_MODEL_LOADER;

|

||||||

|

|

||||||

const use_cpu = shouldUseNoiseSettings

|

const use_cpu = shouldUseNoiseSettings

|

||||||

@ -141,18 +148,11 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

mask2: canvasMaskImage,

|

mask2: canvasMaskImage,

|

||||||

},

|

},

|

||||||

[MASK_BLUR]: {

|

|

||||||

type: 'img_blur',

|

|

||||||

id: MASK_BLUR,

|

|

||||||

is_intermediate: true,

|

|

||||||

radius: maskBlur,

|

|

||||||

blur_type: maskBlurMethod,

|

|

||||||

},

|

|

||||||

[INPAINT_IMAGE]: {

|

[INPAINT_IMAGE]: {

|

||||||

type: 'i2l',

|

type: 'i2l',

|

||||||

id: INPAINT_IMAGE,

|

id: INPAINT_IMAGE,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

fp32,

|

||||||

},

|

},

|

||||||

[NOISE]: {

|

[NOISE]: {

|

||||||

type: 'noise',

|

type: 'noise',

|

||||||

@ -164,7 +164,7 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

type: 'create_denoise_mask',

|

type: 'create_denoise_mask',

|

||||||

id: INPAINT_CREATE_MASK,

|

id: INPAINT_CREATE_MASK,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

fp32,

|

||||||

},

|

},

|

||||||

[DENOISE_LATENTS]: {

|

[DENOISE_LATENTS]: {

|

||||||

type: 'denoise_latents',

|

type: 'denoise_latents',

|

||||||

@ -202,7 +202,7 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

type: 'l2i',

|

type: 'l2i',

|

||||||

id: LATENTS_TO_IMAGE,

|

id: LATENTS_TO_IMAGE,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

fp32: vaePrecision === 'fp32' ? true : false,

|

fp32,

|

||||||

},

|

},

|

||||||

[CANVAS_OUTPUT]: {

|

[CANVAS_OUTPUT]: {

|

||||||

type: 'color_correct',

|

type: 'color_correct',

|

||||||

@ -333,7 +333,7 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

// Create Inpaint Mask

|

// Create Inpaint Mask

|

||||||

{

|

{

|

||||||

source: {

|

source: {

|

||||||

node_id: MASK_BLUR,

|

node_id: isUsingScaledDimensions ? MASK_RESIZE_UP : MASK_COMBINE,

|

||||||

field: 'image',

|

field: 'image',

|

||||||

},

|

},

|

||||||

destination: {

|

destination: {

|

||||||

@ -443,6 +443,16 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

field: 'latents',

|

field: 'latents',

|

||||||

},

|

},

|

||||||

},

|

},

|

||||||

|

{

|

||||||

|

source: {

|

||||||

|

node_id: INPAINT_INFILL,

|

||||||

|

field: 'image',

|

||||||

|

},

|

||||||

|

destination: {

|

||||||

|

node_id: INPAINT_CREATE_MASK,

|

||||||

|

field: 'image',

|

||||||

|

},

|

||||||

|

},

|

||||||

// Decode the result from Inpaint

|

// Decode the result from Inpaint

|

||||||

{

|

{

|

||||||

source: {

|

source: {

|

||||||

@ -463,6 +473,7 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

type: 'infill_patchmatch',

|

type: 'infill_patchmatch',

|

||||||

id: INPAINT_INFILL,

|

id: INPAINT_INFILL,

|

||||||

is_intermediate: true,

|

is_intermediate: true,

|

||||||

|

downscale: infillPatchmatchDownscaleSize,

|

||||||

};

|

};

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -474,17 +485,25 @@ export const buildCanvasOutpaintGraph = (

|

|||||||

};

|

};

|

||||||

}

|

}

|