mirror of

https://github.com/vmstan/gravity-sync.git

synced 2024-08-30 18:22:11 +00:00

3.2.1 (#129)

* 3.2.1 * Change FILE_OWNER & RILE_OWNER to use UID:GID (#128) Adressing #99 - use UID:GID instead names to support pihole docker user. * Cleanup tabs on main script * Add pihole version output * Output version based on Docker type * AND THEN * AND THEN AND THEN * bash version * add DNSMASQ to output * Add GS version * No color * Custom settings * VERIFY_PASS * Reformat Remote info * Info screen * ssh v * rsync * -e * no echo, duh * header * sqlite3 version output * sudo git docker versions * move spacer * uname * remove duplicate docs Co-authored-by: Michael Stanclift <vmstan@sovereign.local> Co-authored-by: Krzysiek Kurek <kk50657@sgh.waw.pl>

This commit is contained in:

parent

755a10e55b

commit

1c033ef1f6

@ -21,6 +21,11 @@ Not implemented in 3.2.0, but coming within this release, Gravity Sync will be c

An example would be setting different caching options for Pi-hole, or specifying the lookup targets for additional networks. Similar requirements as above for the CNAME syncing must be met for existing installs to leverage this functionality.

#### 3.2.1

- Changes application of permissions for Docker instances to UID:GID instead of names. (#99/#128)

- Adds `./gravity-sync info` function to help with troubleshooting installation/configuration settings.

## 3.1

### The Container Release

347

docs/ADVANCED.md

@ -1,347 +0,0 @@

# Gravity Sync

## Advanced Configuration

The purpose of this guide is to break out the manual install instructions, and any advanced configuration flags, into a separate document to limit confusion from the primary README. It is expected that users have read and are familiar with the process and concepts outlined in the primary README.

## Prerequisites

- If you're installing Gravity Sync on a system running Fedora or CentOS, make sure that you are not just using the built-in root account and have a dedicated user in the Administrator group. You'll also need SELinux disabled to install Pi-hole.

## Installation

If you don't want to use the automated installer, you can use git to manually clone Gravity Sync to your _secondary_ Pi-hole server.

```bash
git clone https://github.com/vmstan/gravity-sync.git
```

If you don't trust `git` to install your software, or just like doing things by hand, that's fine.

_Keep in mind that installing via this method means you won't be able to use Gravity Sync's built-in update mechanism._

Download the latest release from [GitHub](https://github.com/vmstan/gravity-sync/releases) and extract the files to your _secondary_ Pi-hole server.

```bash
cd ~
wget https://github.com/vmstan/gravity-sync/archive/v3.x.x.zip
unzip v3.x.x.zip -d gravity-sync
cd gravity-sync
```

## Configuration

If you don't want to use the automated configuration utility at `./gravity-sync.sh config`, you can set up your configuration manually as well.

After you install Gravity Sync to your server there will be a file called `gravity-sync.conf.example` that you can use as the basis for your own `gravity-sync.conf` file. Make a copy of the example file and modify it with your site-specific settings.

```bash
cp gravity-sync.conf.example gravity-sync.conf
vi gravity-sync.conf
```

_Note: If you don't like VI or don't have VIM on your system, use NANO, or if you don't like any of those substitute for your text editor of choice. I'm not here to start a war._

Make sure you've set the REMOTE_HOST and REMOTE_USER variables with the IP (or DNS name) and user account to authenticate to the primary Pi. This account will need to have sudo permissions on the remote system.

```bash
REMOTE_HOST='192.168.1.10'
REMOTE_USER='pi'
```

### SSH Configuration

Gravity Sync uses SSH to run commands on the primary Pi-hole, and sync the two systems by performing file copies.

#### Key-Pair Authentication

This is the preferred option, as it's more reliable and less dependent on third-party plugins.

You'll need to generate an SSH key for your secondary Pi-hole user and copy it to your primary Pi-hole. This will allow you to connect to and copy the necessary files without needing a password each time. When generating the SSH key, accept all the defaults and do not put a passphrase on your key file.

_Note: If you already have this set up on your systems for other purposes, you can skip this step._

```bash
ssh-keygen -t rsa
ssh-copy-id -i ~/.ssh/id_rsa.pub REMOTE_USER@REMOTE_HOST
```

Substitute REMOTE_USER for the account on the primary Pi-hole with sudo permissions, and REMOTE_HOST for the IP or DNS name of the Pi-hole you have designated as the primary.

Make sure to leave the `REMOTE_PASS` variable set to nothing in `gravity-sync.conf` if you want to use key-pair authentication.
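Before relying on key-pair authentication, it can help to confirm that the login really works without a prompt. The following is a minimal sketch, not part of Gravity Sync: `check_key_login` is a hypothetical helper, and it assumes `REMOTE_USER`, `REMOTE_HOST` and (optionally) `SSH_PORT` are set in the shell as they are in `gravity-sync.conf`.

```bash
# Hypothetical helper: succeed only if key-based login works non-interactively.
check_key_login() {
    # BatchMode=yes makes ssh fail rather than prompt for a password or passphrase.
    ssh -o BatchMode=yes -o ConnectTimeout=5 -p "${SSH_PORT:-22}" \
        "${REMOTE_USER}@${REMOTE_HOST}" true
}
```

Running `check_key_login && echo "key login OK"` on the secondary should print the message only when no password or passphrase prompt would be needed.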

#### Password Authentication

This option has been removed from Gravity Sync as of version 3.1.

### The Pull Function

The Gravity Sync Pull, prior to version 2.0, was the standard method of sync operation, and will not prompt for user input after execution.

```bash
./gravity-sync.sh pull
```

If the execution completes, you will now have overwritten your running `gravity.db` and `custom.list` on the secondary Pi-hole after creating a copy of the running files (with `.backup` appended) in the `backup` subfolder located with your script. Gravity Sync will also keep a copy of the last sync'd files from the primary (in the `backup` folder appended with `.pull`) for future use.

### The Push Function

Gravity Sync includes the ability to `push` from the secondary Pi-hole back to the primary. This would be useful in a situation where your primary Pi-hole is down for an extended period of time, and you have made list changes on the secondary Pi-hole that you want to force back to the primary, when it comes online.

```bash
./gravity-sync.sh push
```

Before executing, this will make a copy of the remote database under `backup/gravity.db.push` and `backup/custom.list.push` then sync the local configuration to the primary Pi-hole.

This function purposefully asks for user interaction to avoid being accidentally automated.

- If your script prompts for a password on the remote system, make sure that your remote user account is set up not to require passwords in the sudoers file.

### The Restore Function

Gravity Sync can also `restore` the database on the secondary Pi-hole in the event you've overwritten it accidentally. This might happen in the above scenario where you've had your primary Pi-hole down for an extended period, made changes to the secondary, but perhaps didn't get a chance to perform a `push` of the changes back to the primary, before your automated sync ran.

```bash
./gravity-sync.sh restore
```

This will copy your last `gravity.db.backup` and `custom.list.backup` to the running copy on the secondary Pi-hole.

This function purposefully asks for user interaction to avoid being accidentally automated.

### Hidden Figures

There are a series of advanced configuration options that you may need to change to better adapt Gravity Sync to your environment. They are referenced at the end of the `gravity-sync.conf` file. It is suggested that you make any necessary variable changes to this file, as they will supersede the ones located in the core script. If you want to revert back to the Gravity Sync default for any of these settings, just apply a `#` to the beginning of the line to comment it out.
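The override behaviour described here can be sketched as follows. This is only an illustration of the idea, not the actual Gravity Sync source: a default is assigned first, and the configuration file is sourced afterwards so that any uncommented line replaces it. The `load_settings` helper is hypothetical; the variable name and default come from this guide.

```bash
# Hypothetical helper: apply a built-in default, then let the conf file override it.
load_settings() {
    local conf_file="$1"
    SSH_PORT='22'              # default value documented in this guide
    if [ -f "$conf_file" ]; then
        # Uncommented assignments in the conf file supersede the default above;
        # lines starting with "#" are ignored, so the default stays in effect.
        . "$conf_file"
    fi
}
```

With a conf file containing `SSH_PORT='2222'`, the variable ends up as `2222`; with `# SSH_PORT='2222'`, it stays at `22`.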

#### `PH_IN_TYPE` and `RH_IN_TYPE`

These variables allow you to configure either a default/standard or a Docker Pi-hole installation on both the local and remote hosts. Available options are either `default` or `docker`, exactly as written.

- Default setting in Gravity Sync is `default`.

- These variables can be set via `./gravity-sync.sh config` function.

#### `PIHOLE_DIR` and `RIHOLE_DIR`

These variables allow you to change the location of the Pi-hole settings folder on both the local and remote hosts. This is required for Docker installations of Pi-hole. This directory location should be from the root of the file system and be configured **without** a trailing slash.

- Default setting in Gravity Sync is `/etc/pihole`.

- These variables can be set via the `./gravity-sync.sh config` function and are required if a Docker install is selected.
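Because these paths must not end in a slash, a small guard can normalise a value before it is written to `gravity-sync.conf`. This is a minimal sketch; `normalize_dir` is a hypothetical helper and is not something Gravity Sync provides.

```bash
# Hypothetical helper: drop one trailing slash so the value matches the expected format.
normalize_dir() {
    local dir="$1"
    # Leave the filesystem root untouched; otherwise strip a single trailing "/".
    if [ "$dir" != "/" ]; then
        dir="${dir%/}"
    fi
    printf '%s\n' "$dir"
}
```

For example, `PIHOLE_DIR="$(normalize_dir '/etc/pihole/')"` stores `/etc/pihole`.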

#### `PIHOLE_BIN` and `RIHOLE_BIN`

These variables allow you to change the location of the Pi-hole binary folder on both the local and remote hosts. Unless you've done a custom Pi-hole installation, this setting is unlikely to require changes. This directory location should be from the root of the file system and be configured **without** a trailing slash.

- Default setting in Gravity Sync is `/usr/local/bin/pihole`.

#### `DOCKER_BIN` and `ROCKER_BIN`

These variables allow you to change the location of the Docker binary folder on both the local and remote hosts. This may be necessary on some systems, if you've done a custom installation of Docker. This directory location should be from the root of the file system and be configured **without** a trailing slash.

- Default setting in Gravity Sync is `/usr/bin/docker`.

#### `FILE_OWNER` and `RILE_OWNER`

These variables allow you to change the file owner of the Pi-hole gravity database on both the local and remote hosts. This is required for Docker installations of Pi-hole, but is likely unnecessary on standard installs.

- Default setting in Gravity Sync is `pihole:pihole`.

- These variables are set via `./gravity-sync.sh config` function to `named:docker` automatically if a Docker install is selected.

#### `DOCKER_CON` and `ROCKER_CON`

These variables allow you to change the name of the Docker container on both the local and remote hosts.

- Default setting in Gravity Sync is `pihole`.

- These variables can be set via `./gravity-sync.sh config` function.

#### `GRAVITY_FI` and `CUSTOM_DNS`

These variables are for the `gravity.db` and `custom.list` files that are the two components replicated by Gravity Sync. You should not change them unless Pi-hole changes their naming convention for these files, in which case the core Gravity Sync files will be changed to adapt.

#### `VERIFY_PASS`

Gravity Sync will prompt to verify user interactivity during push, restore, or config operations (that overwrite an existing configuration) with the intention that it prevents someone from accidentally automating in the wrong direction or overwriting data unintentionally. If you'd like to automate a push function, or just don't like to be asked twice to do something destructive, then you can opt out.

- Default setting in Gravity Sync is `0`, change to `1` to bypass this check.
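The effect of this flag can be pictured with a small confirmation gate. This is a sketch under stated assumptions: `confirm_destructive`, the prompt text and the `YES` keyword are all hypothetical and do not reflect the real prompts in Gravity Sync.

```bash
# Hypothetical confirmation gate; the prompt text and function name are illustrative.
confirm_destructive() {
    local action="$1"
    local reply
    if [ "${VERIFY_PASS:-0}" = "1" ]; then
        return 0                # check bypassed, suitable for automation
    fi
    printf 'Type YES to continue with %s: ' "$action"
    read -r reply
    [ "$reply" = "YES" ]        # anything else aborts the operation
}
```

With `VERIFY_PASS='1'` the function returns success immediately; with the default of `0` it only succeeds when the operator types the confirmation word.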

#### `SKIP_CUSTOM`

Starting in v1.7.0, Gravity Sync manages the `custom.list` file that contains the "Local DNS Records" function within the Pi-hole interface. If you do not want to sync this setting, perhaps if you're doing a multi-site deployment with differing local DNS settings, then you can opt-out of this sync.

- Default setting in Gravity Sync is `0`, change to `1` to exempt `custom.list` from replication.

- This variable can be set via `./gravity-sync.sh config` function.

#### `DATE_OUTPUT`

_This feature has not been implemented, but the intent is to provide the ability to add timestamped output to each status indicator in the script output (ex: [2020-05-28 19:46:54] [EXEC] \$MESSAGE)._

#### `PING_AVOID`

The `./gravity-sync.sh config` function will attempt to ping the remote host to validate it has a valid network connection. If there is a firewall between your hosts preventing ICMP replies, or you otherwise wish to skip this step, it can be bypassed here.

- Default setting in Gravity Sync is `0`, change to `1` to skip this network test.

- This variable can be set via `./gravity-sync.sh config` function.

#### `ROOT_CHECK_AVOID`

In versions of Gravity Sync prior to 3.1, at execution, Gravity Sync would check that it's deployed with its own user (not running as root), but for some deployments this was a hindrance.

- This variable is no longer parsed by Gravity Sync.

#### `BACKUP_RETAIN`

The `./gravity-sync.sh backup` function will retain a defined number of days' worth of `gravity.db` and `custom.list` backups.

- Default setting in Gravity Sync is `7`, adjust as desired.

- This variable can be set via `./gravity-sync.sh config` function.
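A retention policy like this is commonly implemented with `find`. The sketch below is an assumption about how such pruning could look, not the code Gravity Sync runs: `prune_backups` is hypothetical, and the `*.backup` file pattern is assumed for illustration. It relies on the `-delete` action, which GNU and BSD `find` provide.

```bash
# Hypothetical pruning step; the "*.backup" pattern and function name are assumptions.
prune_backups() {
    local backup_dir="$1"
    local retain_days="${BACKUP_RETAIN:-7}"
    # -mtime +N matches files last modified more than N full 24-hour periods ago.
    find "$backup_dir" -type f -name '*.backup' -mtime +"$retain_days" -delete
}
```

Calling `prune_backups ./backup` with `BACKUP_RETAIN='7'` would remove matching files older than the retention window and leave newer ones alone.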

#### `SSH_PORT`

Gravity Sync is configured by default to use the standard SSH port (22). If you need to use a non-standard port, such as when traversing a NAT/firewall for a multi-site deployment, you can change it here.

- Default setting in Gravity Sync is 22.

- This variable can be set via `./gravity-sync.sh config` function.

#### `SSH_PKIF`

Gravity Sync is configured by default to use the `.ssh/id_rsa` key-file that is generated using the `ssh-keygen` command. If you have an existing key-file stored somewhere else that you'd like to use, you can configure that here. The key-file will still need to be in the user's `$HOME` directory.

At this time Gravity Sync does not support using a passphrase in RSA key-files. If you have a passphrase applied to your standard `.ssh/id_rsa` either remove it, or generate a new file and specify that key for use only by Gravity Sync.

- Default setting in Gravity Sync is `.ssh/id_rsa`.

- This variable can be set via `./gravity-sync.sh config` function.
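To show how `SSH_PORT` and `SSH_PKIF` might combine when copying files, here is a rough sketch. `build_rsync_rsh` is a hypothetical helper, and the way Gravity Sync actually assembles its commands may differ; the `ssh -p` and `-i` options and the `rsync -e` option are standard.

```bash
# Hypothetical helper: build the remote-shell string that rsync accepts via -e.
build_rsync_rsh() {
    # SSH_PKIF is relative to $HOME, as described above.
    printf 'ssh -p %s -i %s' "${SSH_PORT:-22}" "${HOME}/${SSH_PKIF:-.ssh/id_rsa}"
}
```

A copy from the primary could then look like `rsync -e "$(build_rsync_rsh)" "${REMOTE_USER}@${REMOTE_HOST}:/etc/pihole/gravity.db" ./backup/`, where the destination folder is only an example.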

#### `LOG_PATH`

Gravity Sync will place logs in the same folder as the script (identified as .cron and .log) but if you'd like to place these in another location, you can do that by identifying the full path to the directory (ex: `/full/path/to/logs`) without a trailing slash.

- Default setting in Gravity Sync is a variable called `${LOCAL_FOLDR}`.

#### `SYNCING_LOG=''`

Gravity Sync will write a timestamp for any completed sync, pull, push or restore job to this file. If you want to change the name of this file, you will also need to adjust the LOG_PATH variable above, otherwise your file will be removed during `update` operations.

- Default setting in Gravity Sync is `gravity-sync.log`

#### `CRONJOB_LOG=''`

Gravity Sync will log the execution history of the previous automation task via Cron to this file. If you want to change the name of this file, you will also need to adjust the LOG_PATH variable above, otherwise your file will be removed during `update` operations.

This will have an impact on both the `./gravity-sync.sh automate` and `./gravity-sync.sh cron` functions. If you need to change this after running the automate function, either modify your crontab manually or delete the entry and re-run the automate function.

- Default setting in Gravity Sync is `gravity-sync.cron`

#### `HISTORY_MD5=''`

Gravity Sync will log the file hashes of the previous `smart` task to this file. If you want to change the name of this file, you will also need to adjust the LOG_PATH variable above, otherwise your file will be removed during `update` operations.

- Default setting in Gravity Sync is `gravity-sync.md5`
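The idea of keeping hashes from the previous run can be illustrated with a small change check. This is a simplified sketch, not the actual `smart` logic: `file_changed` is hypothetical, it tracks a single file, and it assumes `md5sum` from coreutils is available.

```bash
# Hypothetical change check: compare a file's current MD5 with the one recorded last run.
file_changed() {
    local target="$1" history="$2"
    local current previous=""
    current="$(md5sum "$target" | awk '{print $1}')"
    if [ -f "$history" ]; then
        previous="$(cat "$history")"
    fi
    printf '%s\n' "$current" > "$history"   # record the hash for the next run
    [ "$current" != "$previous" ]           # success means the file changed
}
```

The first call for a file reports a change because no history exists yet; later calls report a change only when the content differs from the recorded hash.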

#### `BASH_PATH=''`

If you need to adjust the path to bash that is identified for automated execution via Crontab, you can do that here. This will only have an impact if changed before generating the crontab via the `./gravity-sync.sh automate` function. If you need to change this after the fact, either modify your crontab manually or delete the entry and re-run the automate function.

## Execution

If you are just straight up unable to run the `gravity-sync.sh` file, make sure it's marked as an executable by Linux.

```bash
chmod +x gravity-sync.sh
```

## Updates

If you manually installed Gravity Sync via `.zip` or `.tar.gz` you will need to download and overwrite the `gravity-sync.sh` file with a newer version. If you've chosen this path, I won't lay out exactly what you'll need to do every time, but you should at least review the contents of the script bundle (specifically the example configuration file) to make sure there are no new additional files or required settings.

At the very least, I would recommend backing up your existing `gravity-sync` folder and then deploying a fresh copy each time you update, and then either creating a new .conf file or copying your old file over to the new folder.

### Development Builds

Starting in v1.7.2, you can easily flag if you want to receive the development branch of Gravity Sync when running the built-in `./gravity-sync.sh update` function. Beginning in v1.7.4, `./gravity-sync.sh dev` will toggle the dev flag on/off. Starting in v2.2.3, it will prompt you to select the development branch you want to use.

To manually adjust the flag, create an empty file in the `gravity-sync` folder called `dev` and then edit the file to include only one line, `BRANCH='origin/x.x.x'` (where x.x.x is the development version you want to use). Afterwards, the standard `./gravity-sync.sh update` function will apply the correct updates.

Delete the `dev` file and update again to revert back to the stable/master branch.

This method for implementation is decidedly different than the configuration flags in the .conf file, as explained above, to make it easier to identify development systems.

### Updater Troubleshooting

If the built-in updater doesn't function as expected, you can manually run the git commands that operate under the covers.

```bash
git fetch --all
git reset --hard origin/master
```

If your code is still not updating after this, reinstallation is suggested rather than spending all your time troubleshooting `git` commands.

## Automation

There are many automation methods available to run scripts on a regular basis on a Linux system. The one built into all of them is cron, but if you'd like to utilize something different then the principles are still the same.

If you prefer to still use cron but modify your settings by hand, the entries below will run a sync every 15 minutes (1:00 PM, 1:15 PM, 1:30 PM, etc.) and a backup every night at 11:00 PM, but you are free to dial this back or be more aggressive if you feel the need.

```bash
crontab -e
*/15 * * * * /bin/bash /home/USER/gravity-sync/gravity-sync.sh > /home/USER/gravity-sync/gravity-sync.cron
0 23 * * * /bin/bash /home/USER/gravity-sync/gravity-sync.sh backup >/dev/null 2>&1
```

### Automating Automation

To automate the deployment of the automation option, you can call it with two parameters:

- First, the interval in minutes at which to run the sync [0-30]
- Second, the hour at which to run the backup [0-24]

_Note: a value of 0 will disable the cron entry._

For example, `./gravity-sync.sh automate 15 23` will configure automation of the sync function every 15 minutes and of a backup at 23:00.
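The mapping from the two parameters to crontab lines can be sketched like this. `build_cron_entries` is a hypothetical function written for illustration; it mirrors the format of the manual crontab example in this guide and is not the code behind `automate`.

```bash
# Hypothetical generator for the two cron entries, following the manual example's format.
build_cron_entries() {
    local interval="$1" backup_hour="$2" script="$3"
    # A value of 0 disables the corresponding entry.
    if [ "$interval" -ne 0 ]; then
        printf '*/%s * * * * /bin/bash %s > %s\n' \
            "$interval" "$script" "${script%.sh}.cron"
    fi
    if [ "$backup_hour" -ne 0 ]; then
        printf '0 %s * * * /bin/bash %s backup >/dev/null 2>&1\n' \
            "$backup_hour" "$script"
    fi
}
```

Calling `build_cron_entries 15 23 /home/USER/gravity-sync/gravity-sync.sh` prints one sync entry and one backup entry; passing `0` for either value omits that line.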

## Reference Architectures

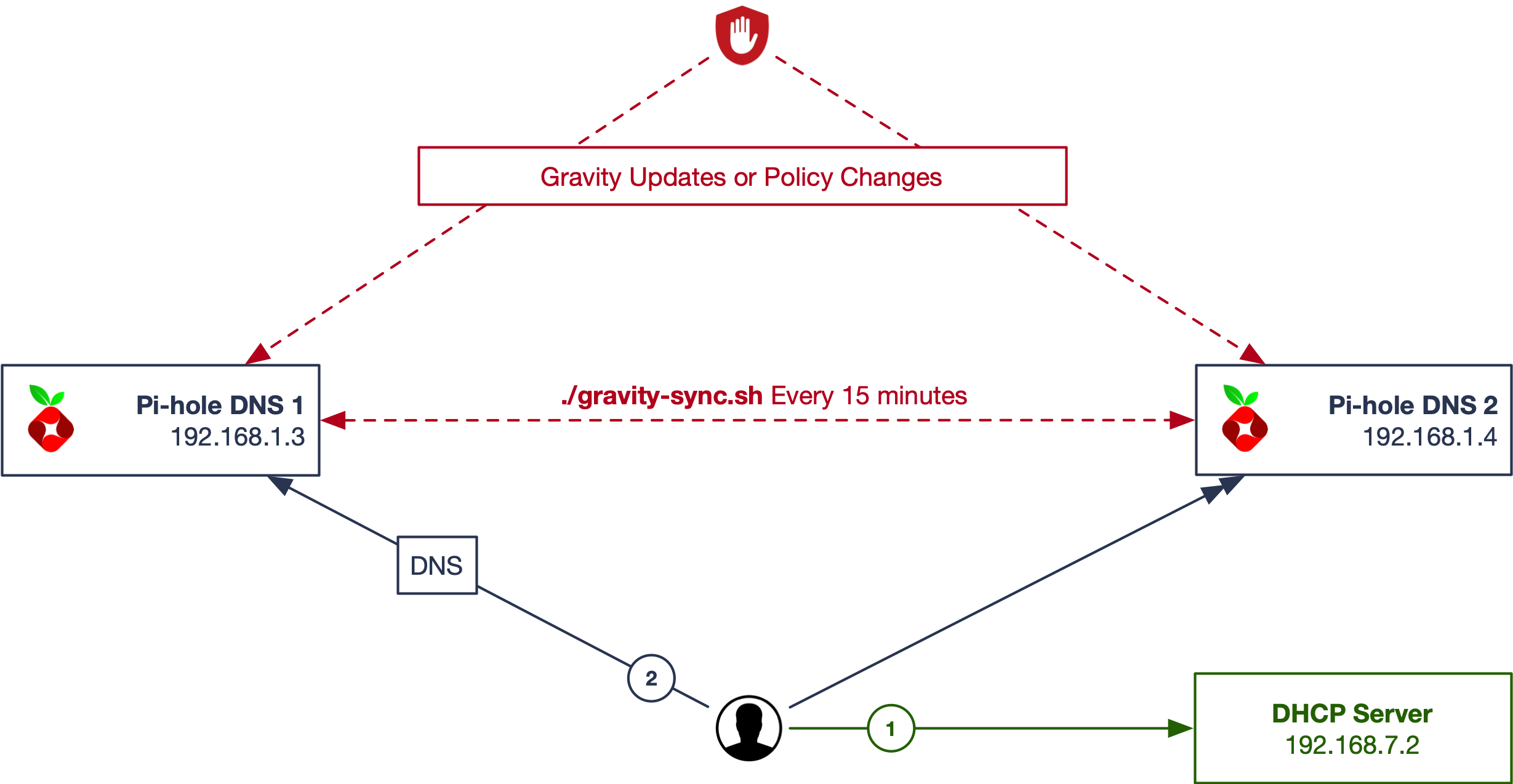

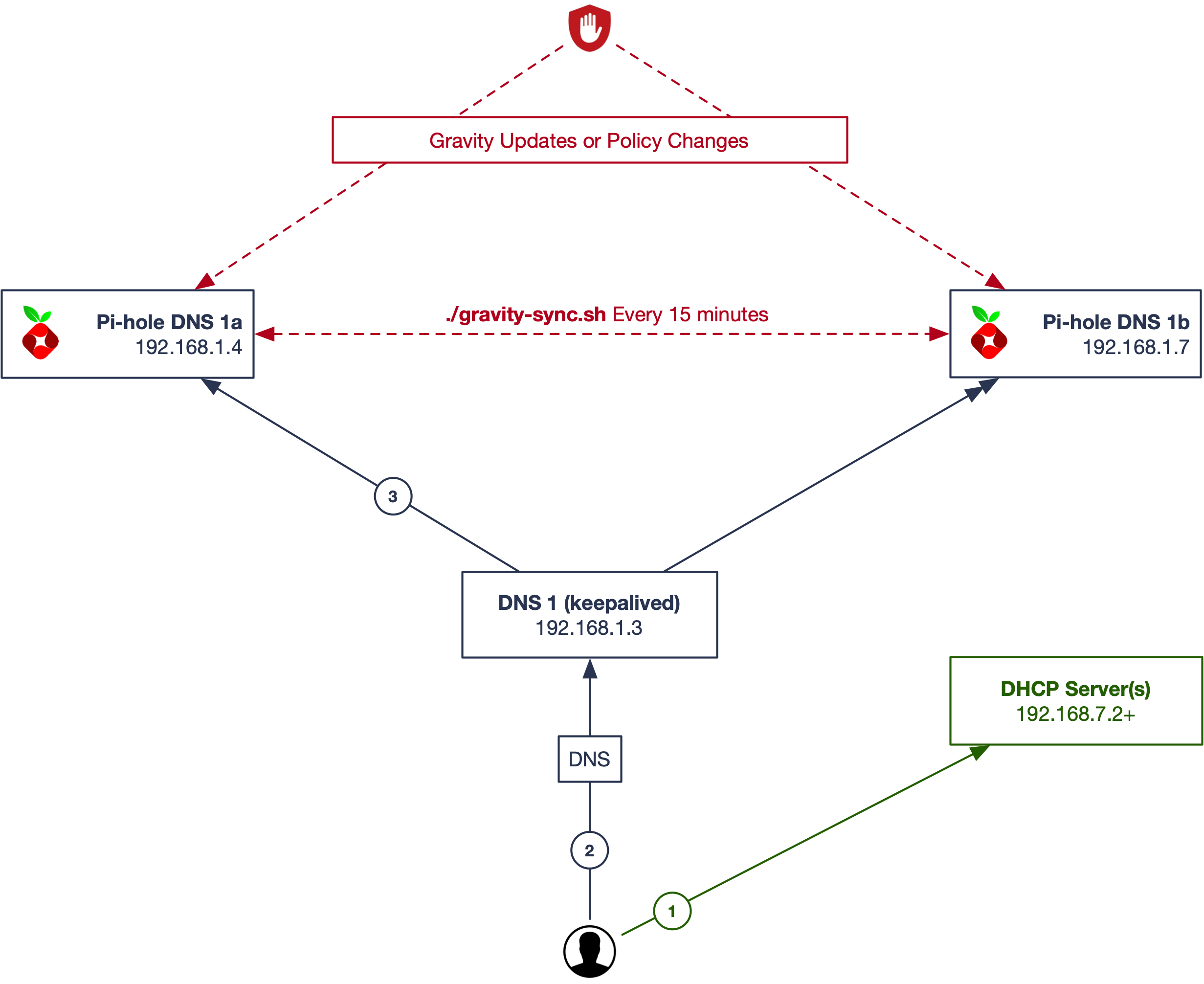

The designation of primary and secondary is purely at your discretion. It doesn't matter if you're using an HA process like keepalived to present a single DNS IP address to clients, or handing out two DNS resolvers via DHCP. Generally it is expected that the two (or more) Pi-hole(s) will be at the same physical location, or at least on the same internal networks. It should also be possible to replicate to a secondary Pi-hole across networks, either over a VPN or open-Internet, with the appropriate firewall/NAT configuration.

There are three reference architectures that I'll outline. All of them require an external DHCP server (such as a router, or dedicated DHCP server) handing out the DNS address(es) for your Pi-holes. Use of the integrated DHCP function in Pi-hole when using Gravity Sync is discouraged, although I'm sure there are ways to make it work. **Gravity Sync does not manage any DHCP settings.**

### Easy Peasy

This design requires the least amount of overhead, or additional software/network configuration beyond Pi-hole and Gravity Sync.

1. Client requests an IP address from a DHCP server on the network and receives it along with DNS and gateway information back. Two DNS servers (Pi-hole) are returned to the client.

2. Client queries one of the two DNS servers, and Pi-hole does its thing.

You can make changes to your block-list, exceptions, etc., on either Pi-hole and they will be sync'd to the other within the timeframe you establish (here, 15 minutes). The downside of the above design is that your clients are logging lookup requests in two places. Gravity Sync will let you change filter settings in either location, but if you're doing it often things may get overwritten.

### Stay Alive

One way to get around having logging in two places is by using keepalived to present a single virtual IP address (VIP) for the two Pi-holes to clients in an active/passive mode. The two nodes will check their own status, and each other, and hand the VIP around if there are issues.

1. Client requests an IP address from a DHCP server on the network and receives it along with DNS and gateway information back. One DNS server (VIP) is returned to the client.

2. The VIP managed by the keepalived service will determine which Pi-hole responds. You make your configuration changes to the active VIP address.

3. Client queries the single DNS server, and Pi-hole does its thing.

You make your configuration changes to the active VIP address and they will be sync'd to the other within the timeframe you establish (here, 15 minutes.)

### Crazy Town

For those who really love Pi-hole and Gravity Sync. Combining the best of both worlds.

1. Client requests an IP address from a DHCP server on the network and receives it along with DNS and gateway information back. Two DNS servers (VIPs) are returned to the client.

2. The VIPs are managed by the keepalived service on each side and will determine which of the two Pi-holes responds. You can make your configuration changes to the active VIP address on either side.

3. Client queries one of the two VIP servers, and the responding Pi-hole does its thing.

Here we use `./gravity-sync pull` on the secondary Pi-hole at each side, and off-set the update intervals from the main sync.

(I call this crazy, but this is what I use at home.)

## Troubleshooting

If you get the error `sudo: a terminal is required to read the password` or `sudo: no tty present and no askpass program specified` during your execution, make sure you have [implemented passwordless sudo](https://linuxize.com/post/how-to-run-sudo-command-without-password/), as defined in the system requirements, for the user accounts on both the local and remote systems.
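A quick way to test for this condition ahead of time is `sudo -n`, which fails instead of prompting when a password would be required. The sketch below is not part of Gravity Sync; `check_passwordless_sudo` is a hypothetical helper.

```bash
# Hypothetical pre-flight check; "sudo -n" never prompts, it fails if a password is needed.
check_passwordless_sudo() {
    if sudo -n true 2>/dev/null; then
        echo "passwordless sudo: OK"
    else
        echo "passwordless sudo: NOT configured for $(id -un)" >&2
        return 1
    fi
}
```

Run it locally on the secondary, and run the equivalent `sudo -n true` over SSH against the primary, to check both sides before the first sync.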

@ -1,458 +0,0 @@

# The Changelog

## 3.1

### The Container Release

The premise of this release was to focus on adding better support for Docker container instances of Pi-hole. This release also changes a lot of things about the requirements that Gravity Sync has always had, which were not running as the root user, and requiring that the script be run from the user's home directory. Those two restrictions are gone.

You can now use a standard Pi-hole install as your primary, or your secondary. You can use a Docker install of Pi-hole as your primary, or your secondary. You can mix and match between the two however you choose. You can have Pi-hole installed in different directories at each side, either as standard installs or with container configuration files in different locations. Overall it's much more flexible.

#### Docker Support

- Only the [official Pi-hole managed Docker image](https://hub.docker.com/r/pihole/pihole) is supported. Other builds may work, but they have not been tested.

- If you are using a name for your container other than the default `pihole` in your Docker configuration, you must specify this in your `gravity-sync.conf` file.

- Smart Sync, and the associated push/pull operations, now will send exec commands to run Pi-hole restart commands within the Docker container.

- Your container configuration must expose access to the virtual `/etc/pihole` location to the host's file system, and be configured in your `gravity-sync.conf` file.

**Example:** if your container configuration looked something like `-v /home/vmstan/etc-pihole/:/etc/pihole` then the location `/home/vmstan/etc-pihole` would need to be accessible by the user running Gravity Sync, and be configured as the `PIHOLE_DIR` (or `RIHOLE_DIR`) in your `gravity-sync.conf` file.
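For the volume mapping in that example, the matching settings for a local Docker install might look like the fragment below. It is a sketch that uses only the variable names and values mentioned in this changelog; a real configuration may need further entries.

```bash
# gravity-sync.conf fragment for the example volume mapping (local Docker install assumed)
PH_IN_TYPE='docker'
PIHOLE_DIR='/home/vmstan/etc-pihole'   # host side of the -v mapping, no trailing slash
```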

#### Installation Script

- Detects the running instance of default Pi-hole Docker container image, if standard Pi-hole lookup fails. Pi-hole must still be installed prior to Gravity Sync.

- Changes detection of root vs sudo users, and adapts commands to match. You no longer need to avoid running the script as `root`.

- Only deploys passwordless SUDO components if deemed necessary. (i.e. Not running as `root`.)

- Now automatically runs the local configuration function on the secondary Pi-hole after execution.

- Deploys script via `git` to whatever directory the installer runs in, instead of defaulting to the user's `$HOME` directory.

- Gravity Sync no longer requires that it be run from the user's `$HOME` directory.

#### Configuration Workflow

- Overall, a simpler configuration function, as the online installer now checks for the dependencies prior to execution.

- New users with basic Pi-hole installs will only be prompted for the address of the primary (remote) Pi-hole, an SSH user and then the SSH password to establish a trusted relationship and share the keyfiles.

- Automatically prompts during setup to configure advanced variables if a Docker installation is detected on the secondary (local) Pi-hole.

- Advanced users can set more options for non-standard deployments at installation. If you are using a Docker deployment of Pi-hole on your primary (remote) Pi-hole, but not the system running Gravity Sync, you will need to enter this advanced mode when prompted.

- Existing users with default setups should not need to run the config utility again after upgrading, but those with custom installs (especially existing container users) should consider it to adopt new variable names and options in your config files.

- Creates a BASH environment alias to run the command `gravity-sync` from anywhere on the system. If you use a different shell (such as zsh or fish) as your default this may need to be added manually.

#### New Variables

- `REMOTE_FILE_OWNER` variable renamed `RILE_OWNER` for consistency.

- `RIHOLE_DIR` variable added to set different Pi-hole directory for remote host than local.

- `DOCKER_CON` and `ROCKER_CON` variables added to specify different names for local and remote Pi-hole Docker containers.

- `PH_IN_TYPE` and `RH_IN_TYPE` variables allow you to set either standard or Docker deployments of Pi-hole, per side.

- `DOCKER_BIN` and `ROCKER_BIN` variables allow you to set non-standard locations for Docker binary files, per side.

- Adds all variables to `gravity-sync.conf.example` for easy customization.

#### Removals

- Support for `sshpass` has been removed, the only valid authentication method going forward will be ssh-key based.

- If you've previously configured Gravity Sync using `sshpass` you will need to run `./gravity-sync.sh config` again to create a new configuration file.

#### Bug Killer

- Lots of long standing little buggles have been squashed.

#### Branding

- I made a logo.

<img src="https://raw.githubusercontent.com/vmstan/gravity-sync/master/docs/gs-logo.svg" height="150" width="150" alt="Gravity Sync">

#### 3.1.1

- Fixes issue where Docker based Pi-hole restarts may not complete. [#109](https://github.com/vmstan/gravity-sync/issues/109)

## 3.0

### The Breakout Release

This release focuses on breaking out elements of the script from the main file into a collection of a dozen or so files located under the `includes/gs-*.sh` hierarchy. Separating them out allows new contributors to work on different parts of the script individually, provides an opportunity to clean up and reorganize parts of the code, and hopefully provides less risk of breaking the entire script.

This release also features a brand new installation script, including a host check for both the primary and secondary Pi-hole system. This should reduce frustration of users who are missing parts of the system requirements. This will also place a file in the `sudoers.d` directory on both systems to make sure passwordless sudo is configured as part of the installation.

Lastly, we adopts Pi-hole style iconography such as `✓ ✗ e ! ?` instead of `[ GOOD ]` in command output.

|

||||

|

||||

Enjoy!

|

||||

|

||||

#### 3.0.1

|

||||

|

||||

- `dev` function now automatically updates Gravity Sync after application.

|

||||

- `dev` function pulls new branches down before prompting to select which one to update against.

|

||||

- Minor shuffle of `gravity-sync.sh` contents.

|

||||

- Clarify installation requirements in `README.md`.

|

||||

- Fixes issues with permissions on `gravity.db` after push operations.

|

||||

- Fixes missing script startup output during `dev` operation.

|

||||

|

||||

#### 3.0.2

|

||||

|

||||

- Realigned EPS conduits, they overheat if you leave them pointed the same way for too long.

|

||||

- Corrected error when running via crontab where includes directory was not properly sourced.

|

||||

|

||||

## 2.2

### The Purged Release

This release removes support for Dropbear SSH client/server. If you are using this instead of OpenSSH (common with DietPi) please reconfigure your installation to use OpenSSH. You will want to delete your existing `~/.ssh/id_rsa` and `~/.ssh/id_rsa.pub` files and run `./gravity-sync.sh configure` again to generate a new key and copy it to the primary Pi-hole.

This release also adds the `./gravity-sync.sh purge` function that will totally wipe out your existing Gravity Sync installation and reset it to the default state for the version you are running. If all troubleshooting of a bad installation fails, this is the command of last resort.

- Updates the remote backup timeout from 15 to 60, preventing the `gravity.db` backup on the remote Pi-hole from failing. (PR [#76](https://github.com/vmstan/gravity-sync/pull/76))
- Adds uninstall instructions to the README.md file. (Basically, run the new `purge` function and then delete the `gravity-sync` folder.)
- I found a markdown spellcheck utility for Visual Studio Code, and ran it against all my markdown files. I'm sorry, I don't spell good. 🤷‍♂️
- New Star Trek references.

#### 2.2.1

- Corrects issue with Smart Sync where it would fail if there was no `custom.list` already present on the local Pi-hole.
- Adds Pi-hole default directories to `gravity-sync.conf.example` file.
- Adds `RIHOLE_BIN` variable to specify different Pi-hole binary location on remote server.

#### 2.2.2

- Corrects another logical problem that prevented `custom.list` from being backed up and replicated, if it didn't already exist on the local Pi-hole.

#### 2.2.3

- Adds variable to easily override database/binary file owners, useful for container deployments. (Thanks @dpraul)
- Adds variable to easily override Pi-hole binary directory for remote host, separate from local host. (Thanks @dpraul)
- Rewritten `dev` option now lets you select the branch to pull code against, allowing for more flexibility in updating against test versions of the code. The `beta` function introduced in 2.1.5 has now been removed.
- Validates existence of SQLite installation on local Pi-hole.
- Adds Gravity Sync permissions for running user to local `/etc/sudoers.d` file during `config` operation.
- Adds `./gravity-sync.sh sudo` function to create above file for existing setups, or to configure the remote Pi-hole by placing the installer files on that system. This is not required for existing functional installs, but this should also negate the need to give the Gravity Sync user NOPASSWD permissions to the entire system.
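
The idea of scoping passwordless sudo to specific commands, rather than the entire system, might be sketched as follows. This is only an illustration, not the script's actual implementation: the helper name `sudoers_entry`, the drop-in filename, and the command path are all assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a sudoers rule that grants one user passwordless
# sudo for a single command path rather than for the whole system.
sudoers_entry() {
    local user="$1" command_path="$2"
    printf '%s ALL=(ALL) NOPASSWD: %s\n' "$user" "$command_path"
}

# Example (not executed here): validate the rule before installing it.
# The filename "gs-nopasswd" is an assumption for illustration.
#   sudoers_entry "$USER" /usr/local/bin/pihole > /tmp/gs-nopasswd
#   sudo visudo -cf /tmp/gs-nopasswd \
#     && sudo install -m 0440 /tmp/gs-nopasswd /etc/sudoers.d/gs-nopasswd
```

Checking the file with `visudo -cf` before copying it into place avoids locking yourself out of sudo with a malformed rule.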

## 2.1

### The Backup Release

A new function `./gravity-sync.sh backup` will now perform a `SQLITE3` operated backup of the `gravity.db` on the local Pi-hole. This can be run at any time you wish, but can also be automated by the `./gravity-sync.sh automate` function to run once a day. New and existing users will be prompted to configure both during this task. You can also disable both using the automate function, or just automate one or the other, by setting the value to `0` during setup.

New users will automatically have their local settings backed up after completion of the initial setup, before the first run of any sync tasks.

By default, 7 days' worth of backups will be retained in the `backup` folder. You can adjust the retention length by changing the `BACKUP_RETAIN` variable in your `gravity-sync.conf` file. See the `ADVANCED.md` file for more information on setting these custom configuration options.
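
A retention window like this can be sketched with `find`. This is a minimal illustration rather than the script's own cleanup code: only `BACKUP_RETAIN` comes from the text above, while the function name and the `*.backup` file pattern are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete backup files older than a retention window.
# BACKUP_RETAIN is named in the changelog; everything else is illustrative.
BACKUP_RETAIN="${BACKUP_RETAIN:-7}"

prune_backups() {
    local backup_dir="$1" retain_days="${2:-$BACKUP_RETAIN}"
    # -mtime +N matches files whose age in whole days is greater than N.
    find "$backup_dir" -maxdepth 1 -type f -name '*.backup' \
        -mtime +"$retain_days" -delete
}
```

Calling `prune_backups ./backup` would then remove anything beyond the configured window and leave newer files untouched.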

There are also enhancements to the `./gravity-sync.sh restore` function. Whereas previously this task would only restore the previous copy of the database made during sync operations, it will now ask you to select a previous backup copy (by date) and use that file to restore. This will stop the Pi-hole services on the local server while the task is completed. After a successful restoration, you will also be prompted to perform a `push` operation of the restored database to the primary Pi-hole server.

It's suggested to make sure your local restore was successful before completing the `restore` operation with the `push` job.

#### Dropbear Notice

Support for the Dropbear SSH client/server (which was added in 1.7.6) will be removed in an upcoming version of Gravity Sync. If you are using this instead of OpenSSH (common with DietPi) please reconfigure your installation to use OpenSSH. You will want to delete your existing `~/.ssh/id_rsa` and `~/.ssh/id_rsa.pub` files and run `./gravity-sync.sh configure` again to generate a new key and copy it to the primary Pi-hole.

The `./gravity-sync.sh update` and `version` functions will look for the `dbclient` binary on the local system and warn users about the upcoming changes.

#### 2.1.1

- Last release was incorrectly published without logic to ignore `custom.list` if requested or not used.

#### 2.1.2

- Corrects a bug in `backup` automation that causes the backup to run every minute during the hour selected.
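
The changelog does not show the faulty crontab entry, but a common way to get this behaviour is leaving the minute field as `*`, which fires on every minute of the chosen hour. The sketch below, with a hypothetical `daily_schedule` helper, pins the minute to `0` so the job runs once a day.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a crontab schedule that fires once a day.
# A "*" in the minute field would instead fire every minute of that hour.
daily_schedule() {
    local hour="$1"
    printf '0 %s * * *\n' "$hour"
}

daily_schedule 3   # prints: 0 3 * * *
```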

#### 2.1.5

Skipping a few digits because what does it really matter?

- Implements a new beta branch, and with it a new `./gravity-sync.sh beta` function to enable it. This will hopefully allow new features and such to be added for test users who can adopt them and provide feedback before rolling out to the main update branch.
- Uses new SQLITE3 backup methodology introduced in 2.1, for all push/pull sync operations.
- `./gravity-sync.sh restore` lets you select a different `gravity.db` and `custom.list` for restoration.
- One new Star Trek reference.
- `./gravity-sync.sh restore` now shows recent complete Backup executions.

#### 2.1.6

- Adds prompts during `./gravity-sync.sh configure` to allow custom SSH port and enable PING avoidance.
- Adds `ROOT_CHECK_AVOID` variable to advanced configuration options, to help facilitate running Gravity Sync with container installations of Pi-hole. (PR [#64](https://github.com/vmstan/gravity-sync/pull/64))
- Adds the ability to automate automation. :mind_blown_emoji: Please see the ADVANCED.md document for more information. (PR [#64](https://github.com/vmstan/gravity-sync/pull/64))

(Thanks to [@fbourqui](https://github.com/fbourqui) for their contributions to this release.)

#### 2.1.7

- Adjusts placement of configuration import to fully implement `ROOT_CHECK_AVOID` variable.
- Someday I'll understand all these git error messages.

## 2.0

### The Smart Release

In this release, Gravity Sync will now detect not only if each component (`gravity.db` and `custom.list`) has changed since the last sync, but also what direction they need to go. It will then initiate a `push` and/or `pull` specific to each piece.

**Example:** If the `gravity.db` has been modified on the primary Pi-hole, but the `custom.list` file has been changed on the secondary, Gravity Sync will now do a pull of the `gravity.db` then push `custom.list` and finally restart the correct components on each server. It will also now only perform a sync of each component if there are changes within each type to replicate. So if you only make a small change to your Local DNS settings, it doesn't kick off the larger `gravity.db` replication.
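
One way to picture per-component change detection is to compare each file's current checksum with the one recorded at the last sync, then derive a direction from which side changed. The changelog does not describe the actual mechanism, so the use of `md5sum` and the function names below are assumptions made for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: per-component change detection and sync direction.
file_hash() {
    md5sum "$1" | awk '{print $1}'
}

# Prints "changed" or "unchanged" for a file, given the hash saved at last sync.
component_status() {
    local file="$1" last_hash="$2"
    if [ "$(file_hash "$file")" = "$last_hash" ]; then
        echo "unchanged"
    else
        echo "changed"
    fi
}

# Maps the local (secondary) and remote (primary) status to an action.
sync_direction() {
    local local_status="$1" remote_status="$2"
    case "${local_status}:${remote_status}" in
        changed:unchanged) echo "push" ;;      # only the local copy changed
        unchanged:changed) echo "pull" ;;      # only the remote copy changed
        changed:changed)   echo "conflict" ;;  # both changed; needs a tie-break
        *)                 echo "none" ;;      # nothing to replicate
    esac
}
```

Running this once for `gravity.db` and once for `custom.list` gives each component its own action, which matches the pull-one, push-the-other example above.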

The default command for Gravity Sync is now just `./gravity-sync.sh` -- but you can also run `./gravity-sync.sh smart` if you feel like it, and it'll do the same thing.

This allows you to be more flexible about where you make configuration changes to block/allow lists and local DNS settings, on either the primary or the secondary, but it's best practice to continue making changes on one side where possible. In the event there are configuration changes to the same element (for example, `custom.list` changes on both sides), Gravity Sync will attempt to determine from timestamps which side changed last; the latest changes will be considered authoritative and overwrite the other side. Gravity Sync does not merge the contents of the files when changes happen; it simply overwrites the entire content.
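
The timestamp tie-break can be sketched as a simple comparison of modification times. This is a hedged illustration only: the function name is hypothetical, and treating a tie as a win for the remote side is an arbitrary choice made for the example.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: when both copies changed, pick the side modified last.
# Arguments are modification times in epoch seconds, for example from
# `stat -c %Y <file>` with GNU stat.
newest_side() {
    local local_mtime="$1" remote_mtime="$2"
    if [ "$local_mtime" -gt "$remote_mtime" ]; then
        echo "local"
    else
        echo "remote"   # ties fall to the remote side in this sketch
    fi
}
```

Whichever side wins, its whole file replaces the other copy; nothing is merged line by line.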

New installs will use the `smart` function by default. Existing users who want to use this new method as their standard should run the `./gravity-sync.sh automate` function to replace the existing automated `pull` with the new Smart Sync. This is not required. The previous `./gravity-sync.sh pull` and `./gravity-sync.sh push` commands continue to function as they did previously, with no intention to break this functionality.

#### 2.0.1

- Fixes bug that caused existing crontab entry not to be removed when switching from `pull` to Smart Sync. [#50](https://github.com/vmstan/gravity-sync/issues/50)

#### 2.0.2

- Correct output of `smart` function when script is run without proper function requested.
- Decided marketing team was correct about display of versions in `CHANGELOG.md` -- sorry Chris.
- Adds reference architectures to the `ADVANCED.md` file.
- Checks for RSYNC functionality to remote host during `./gravity-sync.sh configure` and prompts to install. [#53](https://github.com/vmstan/gravity-sync/issues/53)
- Move much of the previous `README.md` to `ADVANCED.md` file.

## 1.8

### The Logical Release

There is nothing really sexy here, but a lot of changes under the covers to improve reliability between different SSH client types. A lot of the logic and functions are more consistent and cleaner. In some cultures, fewer bugs and more reliability are considered features. Much of this will continue through the 1.8.x line.

- SSH/RSYNC connection logic rewritten to be specific to client options between OpenSSH, OpenSSH w/ SSHPASS, and Dropbear.
- Key-pair generation functions rewritten to be specific to client options, also now works with no (or at least fewer) user prompts.
- SSHPASS options should be more reliable if used, but removes messages that SSHPASS is not installed during setup if it's not needed, and redirects the user to documentation.
- Adds custom port specification to ssh-copy-id and dropbearkey commands during configuration generation.
- Generally better error handling of configuration options.

#### 1.8.1

- Detects if script is running as the root user or via `sudo ./gravity-sync.sh` and exits on error. [#34](https://github.com/vmstan/gravity-sync/issues/34)
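
A root check of this kind usually comes down to testing the effective user ID. The sketch below is an assumption about the general technique, not the script's actual code; the function name is hypothetical, and it accepts a UID argument only so the check can be exercised without being root.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: refuse to continue when the effective user is root,
# which is also the case when the script is launched through sudo.
check_not_root() {
    local uid="${1:-$(id -u)}"
    if [ "$uid" -eq 0 ]; then
        echo "Do not run as root or via sudo" >&2
        return 1
    fi
    return 0
}
```

A script would typically call `check_not_root || exit 1` before doing any work.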

#### 1.8.2

- Corrects issue where `custom.list` file would not replicate if the file didn't exist locally, and there were no other changes to replicate. [#39](https://github.com/vmstan/gravity-sync/issues/39)

#### 1.8.3

- Simplified method for input of automation frequency when running `./gravity-sync.sh automate` function.
- Now removes existing automation task from crontab, if it exists, when re-running `automate` function.
- Automation can be disabled by setting frequency to `0` when prompted.
- Adds `dev` tag to `./gravity-sync.sh version` output for users running off the development branch.

## 1.7

### The Andrew Release

#### Features

- Gravity Sync will now manage the `custom.list` file that contains the "Local DNS Records" function within the Pi-hole interface.
- If you do not want this feature enabled it can be bypassed by adding a `SKIP_CUSTOM='1'` to your .conf file.
- Sync will be triggered during a pull operation if there are changes to either file.

#### Known Issues

- No new Star Trek references.

#### 1.7.1

- There is a changelog file now. I'm mentioning it in the changelog file. So meta.
- `./gravity-sync.sh version` will check for and alert you for new versions.

#### 1.7.2

This update changes the way that beta/development updates are applied. To continue receiving the development branch, create an empty file in the `gravity-sync` folder called `dev` and afterwards the standard `./gravity-sync.sh update` function will apply the correct updates.

```bash
cd gravity-sync
touch dev
./gravity-sync.sh update
```

Delete the `dev` file and update again to revert back to the stable/master branch.

#### 1.7.3

- Cleaning up output of argument listing
- Removes `beta` function for applying development branch updates.

#### 1.7.4

- `./gravity-sync.sh dev` will now toggle dev flag on/off. No `touch` required, although it still works that way under the covers. Improvement of methods added in 1.7.2.
- `./gravity-sync.sh update` performs better error handling.
- Slightly less verbose in some places to make up for being more verbose in others.
- [DONE] has become [ OK ] in output.
- [INFO] header is now yellow all the way across.
- Tightens up verbiage of status messages.
- Fixes `custom.list` not being processed by `./gravity-sync.sh restore` function.
- Detects absence of `ssh` client command on host OS (DietPi)
- Detects absence of `rsync` client command on host OS (DietPi)
- Detects absence of `ssh-keygen` utility on host OS and will use `dropbearkey` as an alternative (DietPi)
- Changelog polarity reversed after heated discussions with marketing team.

#### 1.7.5

- No code changes!
- Primary README now only reflects "The Easy Way" to install and configure Gravity Sync
- "The Less Easy Way" is now part of [ADVANCED.md](https://github.com/vmstan/gravity-sync/blob/master/ADVANCED.md)
- All advanced configuration options are outlined in [ADVANCED.md](https://github.com/vmstan/gravity-sync/blob/master/ADVANCED.md)

#### 1.7.6

- Detects `dbclient` install as alternative to OpenSSH Client.
- Attempts to install OpenSSH Client if not found, and Dropbear is not an alternative.
- Fix bug with `dropbearkey` not finding .ssh folder.
- Numerous fixes to accommodate DietPi in general.
- Fixes issue where `compare` function would show changes where actually none existed.
- [WARN] header is now purple all the way across, consistent with [INFO] as of 1.7.4.
- Fixes issue where `custom.list` would only pull if the file already existed on the secondary Pi-hole.
- One new Star Trek reference.

#### 1.7.7

- `config` function will attempt to ping remote host to validate network connection, can be bypassed by adding `PING_AVOID='1'` to your `gravity-sync.conf` file.
- Changes some [INFO] messages to [WARN] where appropriate.
- Adds aliases for more Gravity Sync functions.
- Shows current version on each script execution.
- Adds time output to Aborting message (exit without change.)
- Includes parsing of functions in time calculation.
- Checks for existence of Pi-hole binaries during validation.
- Less chatty about each step of configuration validation if it completes.
- Less chatty about replication validation if it's not necessary.
- Less chatty about file validation if no changes are required.
- When applying `update` in DEV mode, the Git branch used will be shown.
- Validates log export operation.

## 1.6

### The Restorative Release

- New `./gravity-sync.sh restore` function will bring a previous version of the `gravity.db` back from the dead.
- Changes the way that Gravity Sync prompts for data input and how confirmation prompts are handled.
- Adds ability to override verification of 'push', 'restore' or 'config' reset, see `.example` file for details.
- Five new Star Trek references.
- New functions add consistency in status output.

## 1.5

### The Automated Release

- You can now easily deploy the task automation via crontab by running `./gravity-sync.sh automate` which will simply ask how often you'd like to run the script per hour, and then create the entry for you.
- If you've already configured an entry for this manually with a prior version, the script should detect this and ask that you manually remove it or edit it via `crontab -e`. I'm hesitant to delete existing entries here, as it could potentially remove something unrelated to Gravity Sync.
- Changes the method for pulling development branch updates via the 'beta' function.
- Cleanup of various exit commands.
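
Turning a "runs per hour" answer into a crontab schedule might look like the sketch below. It is an illustration under assumptions rather than the script's real logic: the function name, the accepted range, and the handling of values that do not divide 60 evenly are all hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: convert "runs per hour" into a crontab schedule.
runs_to_schedule() {
    local runs_per_hour="$1"
    if [ "$runs_per_hour" -lt 1 ] || [ "$runs_per_hour" -gt 60 ]; then
        echo "runs per hour must be between 1 and 60" >&2
        return 1
    fi
    if [ "$runs_per_hour" -eq 1 ]; then
        # Once an hour, on the hour.
        printf '0 * * * *\n'
    else
        # Integer division; values that do not divide 60 evenly are approximate.
        printf '*/%s * * * *\n' "$((60 / runs_per_hour))"
    fi
}
```

The resulting schedule would be followed by the command to run, for example the full path to `gravity-sync.sh`, to form a complete crontab line.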

## 1.4

### The Configuration Release

- Adds new `./gravity-sync.sh config` feature to simplify deployment!
- Adds variables for SSH settings.
- Rearranges functions, which impacts nothing.
- All new and exciting code comments.
- No new Star Trek references.

#### 1.4.1

- Adds variables for custom log locations to `gravity-sync.conf`, see `.example` file for listing.

#### 1.4.2

- Will prompt to create new `gravity-sync.conf` file when run without an existing configuration.

#### 1.4.3

- Bug fixes around not properly utilizing custom SSH key-file.

## 1.3

### The Comparison Release

1.3 should be called 2.0, but I'll resist that temptation -- but there are so many new enhancements!

- Gravity Sync will now compare remote and local databases and only replicate if it detects a difference.
- Verifies most commands complete before continuing each step to fail more gracefully.
- Additional debugging options such as checking last cronjob output via `./gravity-sync.sh cron` if configured.
- Much more consistency in how running commands are processed in interactive mode.

#### 1.3.1

- Changes [GOOD] to [DONE] in execution output.
- Better validation of initial SSH connection.
- Support for password based authentication using SSHPASS.

#### 1.3.2

- MUCH cleaner output, same great features.

#### 1.3.3

- Corrected Pi-hole bin path issue that caused automated sync not to reload services.

#### 1.3.4

- Moves backup of local database before initiating remote pull.
- Validates file ownership and permissions before attempting to rewrite.
- Added two Star Trek references.

## 1.2

### The Functional Release

- Refactored process to use functions and cleanup process of execution.
- Does not look for permission to update when run.
- Cleanup and expand comments.

#### 1.2.1

- Improved logging functions.

#### 1.2.2

- Different style for status updates.

#### 1.2.3

- Uses a dedicated backup folder for `.backup` and `.last` files.
- Copies db instead of moving to rename and then replacing to be more reliable.
- Even cleaner label status.

#### 1.2.4

- Changes `~` to `$HOME`.
- Fixes bug that prevented sync from working when run via crontab.

#### 1.2.5

- Push function now does a backup, on the secondary PH, of the primary database, before pushing.

## 1.1

### The Pushy Release

- Separated main purpose of script into `pull` argument.
- Allow process to reverse back using `push` argument.

#### 1.1.2

- First release since move from being just a Gist.
- Just relearning how to use GitHub, minor bug fixes.

#### 1.1.3

- Now includes an example configuration file.

#### 1.1.4

- Added update script.
- Added version check.

#### 1.1.5

- Added ability to view logs with `./gravity-sync.sh logs`.

#### 1.1.6

- Code easier to read with proper tabs.

## 1.0

### The Initial Release

No version control, variables or anything fancy. It only worked if everything was exactly perfect.

```bash
echo 'Copying gravity.db from HA primary'
rsync -e 'ssh -p 22' ubuntu@192.168.7.5:/etc/pihole/gravity.db /home/pi/gravity-sync
echo 'Replacing gravity.db on HA secondary'
sudo cp /home/pi/gravity-sync/gravity.db /etc/pihole/
echo 'Reloading configuration of HA secondary FTLDNS from new gravity.db'
pihole restartdns reload-lists
```

For real, that's it. 6 lines, and could probably have been done with fewer.

@ -3,7 +3,7 @@ SCRIPT_START=$SECONDS

|

||||

|

||||

# GRAVITY SYNC BY VMSTAN #####################

|

||||

PROGRAM='Gravity Sync'

|

||||

VERSION='3.2.0'

|

||||

VERSION='3.2.1'

|

||||

|

||||

# For documentation or downloading updates visit https://github.com/vmstan/gravity-sync

|

||||

# Requires Pi-Hole 5.x or higher already be installed, for help visit https://pi-hole.net

|

||||

@ -119,55 +119,55 @@ case $# in

|

||||

case $1 in

|

||||

smart|sync)

|

||||

start_gs

|

||||

task_smart ;;

|

||||

task_smart ;;

|

||||

pull)

|

||||

start_gs

|

||||

task_pull ;;

|

||||

task_pull ;;

|

||||

push)

|

||||

start_gs

|

||||

task_push ;;

|

||||

task_push ;;

|

||||

restore)

|

||||

start_gs

|

||||

task_restore ;;

|

||||

task_restore ;;

|

||||

version)

|

||||

start_gs_noconfig

|

||||

task_version ;;

|

||||

task_version ;;

|

||||

update|upgrade)

|

||||

start_gs_noconfig

|

||||

task_update ;;

|

||||

task_update ;;

|

||||

dev|devmode|development|develop)

|

||||

start_gs_noconfig

|

||||

task_devmode ;;

|

||||

task_devmode ;;

|

||||

logs|log)

|

||||

start_gs

|

||||

task_logs ;;

|

||||

task_logs ;;

|

||||

compare)

|

||||

start_gs

|

||||

task_compare ;;

|

||||

task_compare ;;

|

||||

cron)

|

||||

start_gs

|

||||

task_cron ;;

|

||||

task_cron ;;

|

||||

config|configure)

|

||||

start_gs_noconfig

|

||||

task_configure ;;

|

||||

task_configure ;;

|

||||

auto|automate)

|

||||

start_gs

|

||||

task_automate ;;

|

||||

task_automate ;;

|

||||

backup)

|

||||

start_gs

|

||||

task_backup ;;

|

||||

task_backup ;;

|

||||

purge)

|

||||

start_gs

|

||||

task_purge ;;

|

||||

task_purge ;;

|

||||

sudo)

|

||||

start_gs

|

||||

task_sudo ;;

|

||||

task_sudo ;;

|

||||

info)

|

||||

start_gs

|

||||

task_info ;;

|

||||

task_info ;;

|

||||

*)

|

||||

start_gs

|

||||

task_invalid ;;

|

||||

task_invalid ;;

|

||||

esac

|

||||

;;

|

||||

|

||||

@ -175,7 +175,7 @@ case $# in

|

||||

case $1 in

|

||||

auto|automate)

|

||||

start_gs

|

||||

task_automate ;;

|

||||

task_automate ;;

|

||||

esac

|

||||

;;

|

||||

|

||||

@ -183,13 +183,13 @@ case $# in

|

||||

case $1 in

|

||||

auto|automate)

|

||||

start_gs

|

||||

task_automate $2 $3 ;;

|

||||

task_automate $2 $3 ;;

|

||||

esac

|

||||

;;

|

||||

|

||||

*)

|

||||

start_gs

|

||||

task_invalid ;;

|

||||

task_invalid ;;

|

||||

esac

|

||||

|

||||

# END OF SCRIPT ##############################

|

||||

@ -170,7 +170,7 @@ function advanced_config_generate {

|

||||

|

||||

MESSAGE="Saving Local Volume Ownership to ${CONFIG_FILE}"

|

||||

echo_stat

|

||||

sed -i "/# FILE_OWNER=''/c\FILE_OWNER='named:docker'" ${LOCAL_FOLDR}/${CONFIG_FILE}

|

||||

sed -i "/# FILE_OWNER=''/c\FILE_OWNER='999:999'" ${LOCAL_FOLDR}/${CONFIG_FILE}

|

||||

error_validate

|

||||

fi

|

||||

|

||||

@ -235,7 +235,7 @@ function advanced_config_generate {

|

||||

|

||||

MESSAGE="Saving Remote Volume Ownership to ${CONFIG_FILE}"

|

||||

echo_stat

|

||||

sed -i "/# RILE_OWNER=''/c\RILE_OWNER='named:docker'" ${LOCAL_FOLDR}/${CONFIG_FILE}

|

||||

sed -i "/# RILE_OWNER=''/c\RILE_OWNER='999:999'" ${LOCAL_FOLDR}/${CONFIG_FILE}

|

||||

error_validate

|

||||

fi

|

||||

|

||||

@ -430,4 +430,4 @@ function create_alias {

|

||||

|

||||

echo -e "alias gravity-sync='${GS_FILEPATH}'" | sudo tee -a /etc/bash.bashrc > /dev/null

|

||||

error_validate

|

||||

}

|

||||

}

|

||||

|

||||

@ -76,22 +76,129 @@ function show_version {

|

||||

}

|

||||

|

||||

function show_info() {

|

||||

|

||||

if [ -f ${LOCAL_FOLDR}/dev ]

|

||||

then

|

||||

DEVVERSION="-dev"

|

||||

elif [ -f ${LOCAL_FOLDR}/beta ]

|

||||

then

|

||||

DEVVERSION="-beta"

|

||||

else

|

||||

DEVVERSION=""

|

||||

fi

|

||||

|

||||

echo -e "========================================================"

|

||||

echo -e "${YELLOW}Local Pi-hole Settings${NC}"

|

||||

echo -e "${YELLOW}Local Software Versions${NC}"

|

||||

echo -e "${RED}Gravity Sync${NC} ${VERSION}${DEVVERSION}"

|

||||

echo -e "${BLUE}Pi-hole${NC}"

|

||||

if [ "${PH_IN_TYPE}" == "default" ]

|

||||

then

|

||||

pihole version

|

||||

elif [ "${PH_IN_TYPE}" == "docker" ]

|

||||

then

|

||||

docker exec -it pihole pihole -v

|

||||

fi

|

||||

|

||||

uname -srm

|

||||

echo -e "bash $BASH_VERSION"

|

||||

ssh -V

|

||||

rsync --version | grep version

|

||||

SQLITE3_VERSION=$(sqlite3 --version)

|

||||

echo -e "sqlite3 ${SQLITE3_VERSION}"

|

||||

sudo --version | grep "Sudo version"

|

||||

git --version

|

||||

|

||||

if hash docker 2>/dev/null

|

||||

then

|

||||

docker --version

|

||||

fi

|

||||

echo -e ""

|

||||

|

||||

echo -e "${YELLOW}Local/Secondary Instance Settings${NC}"

|

||||

echo -e "Local Hostname: $HOSTNAME"

|

||||

echo -e "Local Pi-hole Type: ${PH_IN_TYPE}"

|

||||

echo -e "Local Pi-hole Config Directory: ${PIHOLE_DIR}"

|

||||

echo -e "Local Pi-hole Binary Directory: ${PIHOLE_BIN}"

|

||||

echo -e "Local Docker Binary Directory: ${DOCKER_BIN}"

|

||||

echo -e "Local File Owner Settings: ${DOCKER_BIN}"

|

||||

echo -e "Local Docker Container Name: ${DOCKER_CON}"

|

||||

echo -e "Local DNSMASQ Config Directory: ${DNSMAQ_DIR}"

|

||||

|

||||

if [ "${PH_IN_TYPE}" == "default" ]

|

||||

then

|

||||

echo -e "Local Pi-hole Binary Directory: ${PIHOLE_BIN}"

|

||||

elif [ "${PH_IN_TYPE}" == "docker" ]

|

||||

then

|

||||

echo -e "Local Pi-hole Container Name: ${DOCKER_CON}"

|

||||

echo -e "Local Docker Binary Directory: ${DOCKER_BIN}"

|

||||

fi

|

||||

|

||||

echo -e "Local File Owner Settings: ${FILE_OWNER}"

|

||||

|

||||

if [ ${SKIP_CUSTOM} == '0' ]

|

||||

then

|

||||

echo -e "DNS Replication: Enabled (default)"

|

||||

elif [ ${SKIP_CUSTOM} == '1' ]

|

||||

then

|

||||

echo -e "DNS Replication: Disabled (custom)"

|

||||

else

|

||||

echo -e "DNS Replication: Invalid Configuration"

|

||||

fi

|

||||

|

||||

if [ ${INCLUDE_CNAME} == '1' ]

|

||||

then

|

||||

echo -e "CNAME Replication: Enabled (custom)"

|

||||

elif [ ${INCLUDE_CNAME} == '0' ]

|

||||

then

|

||||

echo -e "CNAME Replication: Disabled (default)"

|

||||

else

|

||||

echo -e "CNAME Replication: Invalid Configuration"

|

||||

fi

|

||||

|

||||

if [ ${VERIFY_PASS} == '1' ]

|

||||

then

|

||||

echo -e "Verify Operations: Enabled (default)"

|

||||

elif [ ${VERIFY_PASS} == '0' ]

|

||||

then

|

||||

echo -e "Verify Operations: Disabled (custom)"

|

||||

else

|

||||

echo -e "Verify Operations: Invalid Configuration"

|

||||

fi

|

||||

|

||||

if [ ${PING_AVOID} == '0' ]

|

||||

then

|

||||

echo -e "Ping Test: Enabled (default)"

|

||||

elif [ ${PING_AVOID} == '1' ]

|

||||

then

|

||||

echo -e "Ping Test: Disabled (custom)"

|

||||

else

|

||||

echo -e "Ping Test: Invalid Configuration"

|

||||

fi

|

||||

|

||||

if [ ${BACKUP_RETAIN} == '7' ]

|

||||

then

|

||||

echo -e "Backup Retention: 7 days (default)"

|

||||

elif [ ${BACKUP_RETAIN} == '1' ]

|

||||

then

|

||||

echo -e "Backup Retention: 1 day (custom)"

|

||||

else

|

||||

echo -e "Backup Retention: ${BACKUP_RETAIN} days (custom)"

|

||||

fi

|

||||

|

||||

echo -e ""

|

||||

echo -e "${YELLOW}Remote Pi-hole Settings${NC}"

|

||||

echo -e "${YELLOW}Remote/Primary Instance Settings${NC}"

|

||||

echo -e "Remote Hostname/IP: ${REMOTE_HOST}"

|

||||

echo -e "Remote Username: ${REMOTE_USER}"

|

||||

echo -e "Remote Pi-hole Type: ${RH_IN_TYPE}"

|

||||

echo -e "Remote Pi-hole Config Directory: ${RIHOLE_DIR}"

|

||||

echo -e "Remote Pi-hole Binary Directory: ${RIHOLE_BIN}"

|

||||

echo -e "Remote Docker Binary Directory: ${DOCKER_BIN}"

|

||||

echo -e "Remote File Owner Settings: ${DOCKER_BIN}"

|

||||

echo -e "Remote Docker Container Name: ${DOCKER_CON}"

|

||||

echo -e "Remote DNSMASQ Config Directory: ${RNSMAQ_DIR}"

|