mirror of

https://github.com/invoke-ai/InvokeAI

synced 2024-08-30 20:32:17 +00:00

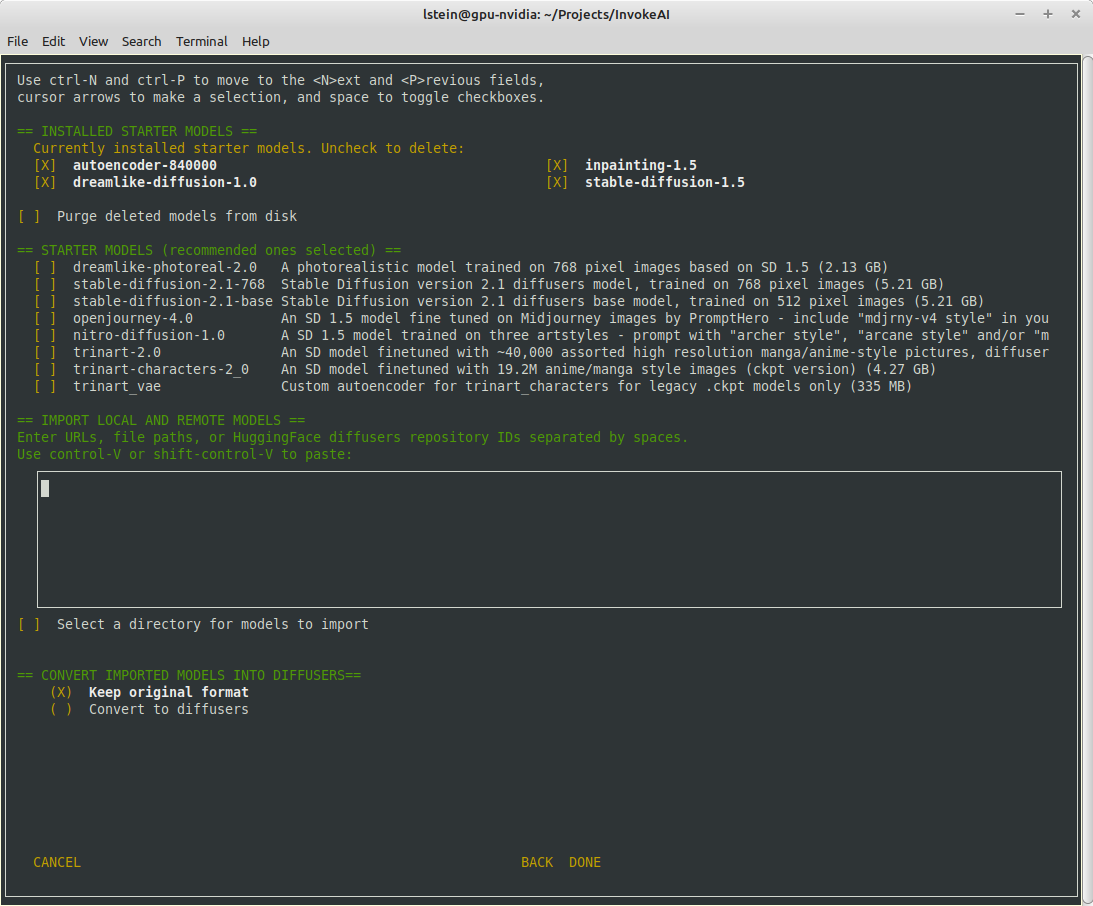

Add new console frontend to initial model selection, and other model mgmt improvements (#2644)

## Major Changes

The `invokeai-configure` script has been refactored. The work of selecting and downloading initial models at install time is now done by a script named `invokeai-model-install` (module name `ldm.invoke.config.model_install`).

[Screenshot: Screen 1 - adjust startup options]

[Screenshot: Screen 2 - select SD models]

The calling arguments for `invokeai-configure` have not changed, so nothing should break. After initializing the root directory, the script calls `invokeai-model-install` to let the user select the starting models to install.

`invokeai-model-install` puts up a console GUI with checkboxes to indicate which models to install. It respects the `--default_only` and `--yes` arguments so that CI will continue to work. Here are the various effects you can achieve:

`invokeai-configure`
This will use the console-based UI to initialize invokeai.init, download support models, and choose and download SD models.

`invokeai-configure --yes`
Without activating the GUI, populate invokeai.init with default values, download support models, and download the "recommended" SD models.

`invokeai-configure --default_only`
Activate the GUI for changing init options, but don't show the SD download form, and automatically download the default SD model (currently SD-1.5).

`invokeai-model-install`
Select and install models. This can be used to download arbitrary models from the Internet, install HuggingFace models using their repo_id, or watch a directory for models to load at startup time.

`invokeai-model-install --yes`
Import the recommended SD models without a GUI.

`invokeai-model-install --default_only`
As above, but only import the default model.

## Flexible Model Imports

The console GUI allows the user to import arbitrary models into InvokeAI using:

1. A HuggingFace repo_id
2. A URL (http/https/ftp) that points to a checkpoint or safetensors file
3. A local path on disk pointing to a checkpoint/safetensors file or diffusers directory
4. A directory to be scanned for all checkpoint/safetensors files to be imported

The UI allows the user to specify multiple models to bulk import. The user can specify whether to import the ckpt/safetensors as-is, or convert to `diffusers`. The user can also designate a directory to be scanned at startup time for checkpoint/safetensors files.

## Backend Changes

To support the model selection GUI, this PR introduces a new method in `ldm.invoke.model_manager` called `heuristic_import()`. It accepts a string-like object, which can be a repo_id, URL, local path or directory. It figures out what the object is and imports it. It interrogates the contents of checkpoint and safetensors files to determine what type of SD model they are: v1.x, v2.x or v1.x inpainting.

## Installer

I am attaching a zip file of the installer if you would like to try the process from end to end.

[InvokeAI-installer-v2.3.0.zip](https://github.com/invoke-ai/InvokeAI/files/10785474/InvokeAI-installer-v2.3.0.zip)
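
As a rough illustration of the dispatch that `heuristic_import()` is described as performing, the sketch below labels an input string as one of the source types listed under Flexible Model Imports. `classify_model_source` and its return labels are hypothetical names invented for this example and are not part of `ldm.invoke.model_manager`. The repo_id pattern and the `model_index.json` check are borrowed from the `import_model` code touched by this commit. It does not inspect file contents, so it says nothing about v1.x versus v2.x detection.

```python
import re
from pathlib import Path

# "owner/name" pattern, as used for repo ids in import_model() in this commit.
REPO_ID_RE = re.compile(r"^[\w.+-]+/[\w.+-]+$")
WEIGHT_SUFFIXES = (".ckpt", ".safetensors")


def classify_model_source(thing: str) -> str:
    """Hypothetical helper: label what kind of import source `thing` is."""
    if thing.startswith(("http:", "https:", "ftp:")):
        return "url"
    path = Path(thing)
    if path.is_file() and path.suffix in WEIGHT_SUFFIXES:
        return "checkpoint_file"
    if path.is_dir():
        # A diffusers model directory is recognised by its model_index.json.
        if (path / "model_index.json").exists():
            return "diffusers_dir"
        # Any other directory is treated as a folder of checkpoints to scan.
        return "scan_dir"
    if REPO_ID_RE.match(thing):
        return "repo_id"
    return "unknown"
```

Local paths are checked before the repo_id pattern here, so a relative path such as `models/foo` that exists on disk is treated as a path and not as a HuggingFace repository. That ordering is a design choice of this sketch only.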

@@ -67,6 +67,8 @@ del /q .tmp1 .tmp2
@rem -------------- Install and Configure ---------------

call python .\lib\main.py
pause
exit /b

@rem ------------------------ Subroutines ---------------
@rem routine to do comparison of semantic version numbers

@@ -9,13 +9,16 @@ cd $scriptdir
function version { echo "$@" | awk -F. '{ printf("%d%03d%03d%03d\n", $1,$2,$3,$4); }'; }

MINIMUM_PYTHON_VERSION=3.9.0
MAXIMUM_PYTHON_VERSION=3.11.0
PYTHON=""
for candidate in python3.10 python3.9 python3 python python3.11 ; do
for candidate in python3.10 python3.9 python3 python ; do
if ppath=`which $candidate`; then
python_version=$($ppath -V | awk '{ print $2 }')
if [ $(version $python_version) -ge $(version "$MINIMUM_PYTHON_VERSION") ]; then
PYTHON=$ppath
break
if [ $(version $python_version) -lt $(version "$MAXIMUM_PYTHON_VERSION") ]; then
PYTHON=$ppath
break
fi
fi
fi
done
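
The `version` shell function in this hunk turns a dotted version string into a zero-padded integer so that versions can be compared numerically with `-ge` and `-lt`. The following Python sketch mirrors that idea under the assumption that version strings are purely numeric (for example `3.10.6`). The names `version_key` and `is_supported` are made up for this example and do not appear in the installer.

```python
MINIMUM_PYTHON_VERSION = "3.9.0"
MAXIMUM_PYTHON_VERSION = "3.11.0"


def version_key(version: str) -> int:
    """Mirror the awk printf("%d%03d%03d%03d") encoding of a dotted version."""
    parts = [int(p) for p in version.split(".")]
    parts += [0] * (4 - len(parts))  # missing fields count as 0, as in awk
    major, minor, patch, extra = parts[:4]
    return int(f"{major}{minor:03d}{patch:03d}{extra:03d}")


def is_supported(version: str) -> bool:
    """True when MINIMUM <= version < MAXIMUM, matching the -ge / -lt tests."""
    return (
        version_key(MINIMUM_PYTHON_VERSION)
        <= version_key(version)
        < version_key(MAXIMUM_PYTHON_VERSION)
    )
```

With these bounds, `is_supported("3.10.6")` holds while `is_supported("3.11.0")` does not, because the upper bound is exclusive.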

@@ -28,3 +31,4 @@ if [ -z "$PYTHON" ]; then
fi

exec $PYTHON ./lib/main.py ${@}
read -p "Press any key to exit"

@@ -11,11 +11,13 @@ echo 1. command-line
echo 2. browser-based UI
echo 3. run textual inversion training
echo 4. merge models (diffusers type only)
echo 5. re-run the configure script to download new models
echo 6. update InvokeAI
echo 7. open the developer console
echo 8. command-line help
set /P restore="Please enter 1, 2, 3, 4, 5, 6, 7 or 8: [2] "
echo 5. download and install models
echo 6. change InvokeAI startup options
echo 7. re-run the configure script to fix a broken install
echo 8. open the developer console
echo 9. update InvokeAI
echo 10. command-line help
set /P restore="Please enter 1-10: [2] "
if not defined restore set restore=2
IF /I "%restore%" == "1" (
echo Starting the InvokeAI command-line..

@@ -25,17 +27,20 @@ IF /I "%restore%" == "1" (
python .venv\Scripts\invokeai.exe --web %*
) ELSE IF /I "%restore%" == "3" (
echo Starting textual inversion training..
python .venv\Scripts\invokeai-ti.exe --gui %*
python .venv\Scripts\invokeai-ti.exe --gui
) ELSE IF /I "%restore%" == "4" (
echo Starting model merging script..
python .venv\Scripts\invokeai-merge.exe --gui %*
python .venv\Scripts\invokeai-merge.exe --gui
) ELSE IF /I "%restore%" == "5" (
echo Running invokeai-configure...
python .venv\Scripts\invokeai-configure.exe %*
echo Running invokeai-model-install...
python .venv\Scripts\invokeai-model-install.exe
) ELSE IF /I "%restore%" == "6" (
echo Running invokeai-update...
python .venv\Scripts\invokeai-update.exe %*
echo Running invokeai-configure...
python .venv\Scripts\invokeai-configure.exe --skip-sd-weight --skip-support-models
) ELSE IF /I "%restore%" == "7" (
echo Running invokeai-configure...
python .venv\Scripts\invokeai-configure.exe --yes --default_only
) ELSE IF /I "%restore%" == "8" (
echo Developer Console
echo Python command is:
where python

@@ -47,7 +52,10 @@ IF /I "%restore%" == "1" (
echo *************************
echo *** Type `exit` to quit this shell and deactivate the Python virtual environment ***
call cmd /k
) ELSE IF /I "%restore%" == "8" (
) ELSE IF /I "%restore%" == "9" (
echo Running invokeai-update...
python .venv\Scripts\invokeai-update.exe %*
) ELSE IF /I "%restore%" == "10" (
echo Displaying command line help...
python .venv\Scripts\invokeai.exe --help %*
pause

@@ -30,12 +30,14 @@ if [ "$0" != "bash" ]; then
echo "2. browser-based UI"
echo "3. run textual inversion training"
echo "4. merge models (diffusers type only)"
echo "5. re-run the configure script to download new models"
echo "6. update InvokeAI"
echo "7. open the developer console"
echo "8. command-line help"
echo "5. download and install models"
echo "6. change InvokeAI startup options"
echo "7. re-run the configure script to fix a broken install"
echo "8. open the developer console"
echo "9. update InvokeAI"
echo "10. command-line help "
echo ""
read -p "Please enter 1, 2, 3, 4, 5, 6, 7 or 8: [2] " yn
read -p "Please enter 1-10: [2] " yn
choice=${yn:='2'}
case $choice in
1)

@@ -55,19 +57,24 @@ if [ "$0" != "bash" ]; then
exec invokeai-merge --gui $@
;;
5)
echo "Configuration:"
exec invokeai-configure --root ${INVOKEAI_ROOT}
exec invokeai-model-install --root ${INVOKEAI_ROOT}
;;
6)
echo "Update:"
exec invokeai-update
exec invokeai-configure --root ${INVOKEAI_ROOT} --skip-sd-weights --skip-support-models
;;
7)
echo "Developer Console:"
exec invokeai-configure --root ${INVOKEAI_ROOT} --yes --default_only
;;
8)
echo "Developer Console:"
file_name=$(basename "${BASH_SOURCE[0]}")
bash --init-file "$file_name"
;;
8)
9)
echo "Update:"
exec invokeai-update
;;
10)
exec invokeai --help
;;
*)
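
The renumbered launcher menu can be summarised as a lookup table. The sketch below is an illustrative Python rendering of the shell `case` statement, covering only the entries whose commands are visible in the shell launcher hunk (5, 6, 7, 9 and 10). Option 8 opens an interactive shell and is left out. `MENU`, `resolve_choice` and `command_for` are hypothetical names for this example. The launcher itself is a shell script and does not contain this code.

```python
from typing import Dict, List, Optional

# Commands as they appear in the shell launcher hunk.
# "{root}" stands in for ${INVOKEAI_ROOT}.
MENU: Dict[str, List[str]] = {
    "5": ["invokeai-model-install", "--root", "{root}"],
    "6": ["invokeai-configure", "--root", "{root}",
          "--skip-sd-weights", "--skip-support-models"],
    "7": ["invokeai-configure", "--root", "{root}", "--yes", "--default_only"],
    "9": ["invokeai-update"],
    "10": ["invokeai", "--help"],
}


def resolve_choice(raw: str) -> str:
    """Empty input falls back to option 2, roughly like choice=${yn:='2'}."""
    return raw.strip() or "2"


def command_for(choice: str, root: str) -> Optional[List[str]]:
    """Return the argv for a menu choice, or None if it is not in the table."""
    template = MENU.get(choice)
    if template is None:
        return None
    return [root if arg == "{root}" else arg for arg in template]
```

For example, `command_for("7", "/opt/invokeai")` yields the configure command with `--yes --default_only`, which is the "fix a broken install" entry.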

@@ -56,33 +56,3 @@ trinart-2.0:
vae:
repo_id: stabilityai/sd-vae-ft-mse
recommended: False
trinart-characters-2_0:
description: An SD model finetuned with 19.2M anime/manga style images (ckpt version) (4.27 GB)
repo_id: naclbit/trinart_derrida_characters_v2_stable_diffusion
config: v1-inference.yaml
file: derrida_final.ckpt
format: ckpt
vae:
repo_id: naclbit/trinart_derrida_characters_v2_stable_diffusion
file: autoencoder_fix_kl-f8-trinart_characters.ckpt
width: 512
height: 512
recommended: False
ft-mse-improved-autoencoder-840000:
description: StabilityAI improved autoencoder fine-tuned for human faces. Improves legacy .ckpt models (335 MB)
repo_id: stabilityai/sd-vae-ft-mse-original
format: ckpt
config: VAE/default
file: vae-ft-mse-840000-ema-pruned.ckpt
width: 512
height: 512
recommended: True
trinart_vae:
description: Custom autoencoder for trinart_characters for legacy .ckpt models only (335 MB)
repo_id: naclbit/trinart_characters_19.2m_stable_diffusion_v1
config: VAE/trinart
format: ckpt
file: autoencoder_fix_kl-f8-trinart_characters.ckpt
width: 512
height: 512
recommended: False

@@ -211,7 +211,7 @@ class Generate:
Globals.full_precision = self.precision == "float32"

if is_xformers_available():
if not Globals.disable_xformers:
if torch.cuda.is_available() and not Globals.disable_xformers:
print(">> xformers memory-efficient attention is available and enabled")
else:
print(

@@ -221,9 +221,13 @@ class Generate:
print(">> xformers not installed")

# model caching system for fast switching
self.model_manager = ModelManager(mconfig, self.device, self.precision,
max_loaded_models=max_loaded_models,
sequential_offload=self.free_gpu_mem)
self.model_manager = ModelManager(
mconfig,
self.device,
self.precision,
max_loaded_models=max_loaded_models,
sequential_offload=self.free_gpu_mem,
)
# don't accept invalid models
fallback = self.model_manager.default_model() or FALLBACK_MODEL_NAME
model = model or fallback

@@ -246,7 +250,7 @@ class Generate:
# load safety checker if requested
if safety_checker:
try:
print(">> Initializing safety checker")
print(">> Initializing NSFW checker")
from diffusers.pipelines.stable_diffusion.safety_checker import (
StableDiffusionSafetyChecker,
)

@@ -270,6 +274,8 @@ class Generate:
"** An error was encountered while installing the safety checker:"
)
print(traceback.format_exc())
else:
print(">> NSFW checker is disabled")

def prompt2png(self, prompt, outdir, **kwargs):
"""

@@ -5,7 +5,7 @@ import sys
import traceback
from argparse import Namespace
from pathlib import Path
from typing import List, Optional, Union
from typing import Union

import click

@@ -17,6 +17,7 @@ if sys.platform == "darwin":
import pyparsing # type: ignore

import ldm.invoke

from ..generate import Generate
from .args import (Args, dream_cmd_from_png, metadata_dumps,
metadata_from_png)

@@ -83,6 +84,7 @@ def main():
import transformers # type: ignore

from ldm.generate import Generate

transformers.logging.set_verbosity_error()
import diffusers

@@ -155,11 +157,14 @@ def main():
report_model_error(opt, e)

# try to autoconvert new models
# autoimport new .ckpt files
if path := opt.autoimport:
gen.model_manager.heuristic_import(
str(path), convert=False, commit_to_conf=opt.conf
)

if path := opt.autoconvert:
gen.model_manager.autoconvert_weights(
conf_path=opt.conf,
weights_directory=path,
gen.model_manager.heuristic_import(
str(path), convert=True, commit_to_conf=opt.conf
)

# web server loops forever

@@ -529,32 +534,25 @@ def do_command(command: str, gen, opt: Args, completer) -> tuple:
"** please provide (1) a URL to a .ckpt file to import; (2) a local path to a .ckpt file; or (3) a diffusers repository id in the form stabilityai/stable-diffusion-2-1"
)
else:
import_model(path[1], gen, opt, completer)
completer.add_history(command)
try:
import_model(path[1], gen, opt, completer)
completer.add_history(command)
except KeyboardInterrupt:
print('\n')
operation = None

elif command.startswith("!convert"):
elif command.startswith(("!convert","!optimize")):
path = shlex.split(command)
if len(path) < 2:
print("** please provide the path to a .ckpt or .safetensors model")
elif not os.path.exists(path[1]):
print(f"** {path[1]}: model not found")
else:
optimize_model(path[1], gen, opt, completer)
completer.add_history(command)
try:
convert_model(path[1], gen, opt, completer)
completer.add_history(command)
except KeyboardInterrupt:
print('\n')
operation = None

elif command.startswith("!optimize"):
path = shlex.split(command)
if len(path) < 2:
print("** please provide an installed model name")
elif not path[1] in gen.model_manager.list_models():
print(f"** {path[1]}: model not found")
else:
optimize_model(path[1], gen, opt, completer)
completer.add_history(command)
operation = None


elif command.startswith("!edit"):
path = shlex.split(command)
if len(path) < 2:

@@ -626,190 +624,69 @@ def set_default_output_dir(opt: Args, completer: Completer):
completer.set_default_dir(opt.outdir)


def import_model(model_path: str, gen, opt, completer):
def import_model(model_path: str, gen, opt, completer, convert=False) -> str:
"""
model_path can be (1) a URL to a .ckpt file; (2) a local .ckpt file path;
(3) a huggingface repository id; or (4) a local directory containing a
diffusers model.
"""
model_path = model_path.replace('\\','/') # windows
model_path = model_path.replace("\\", "/") # windows
default_name = Path(model_path).stem
model_name = None
model_desc = None

if model_path.startswith(("http:", "https:", "ftp:")):
model_name = import_ckpt_model(model_path, gen, opt, completer)

elif (
os.path.exists(model_path)
and model_path.endswith((".ckpt", ".safetensors"))
and os.path.isfile(model_path)
if (
Path(model_path).is_dir()
and not (Path(model_path) / "model_index.json").exists()
):
model_name = import_ckpt_model(model_path, gen, opt, completer)

elif os.path.isdir(model_path):
# Allow for a directory containing multiple models.
models = list(Path(model_path).rglob("*.ckpt")) + list(
Path(model_path).rglob("*.safetensors")
)

if models:
# Only the last model name will be used below.
for model in sorted(models):
if click.confirm(f"Import {model.stem} ?", default=True):
model_name = import_ckpt_model(model, gen, opt, completer)
print()
else:
model_name = import_diffuser_model(Path(model_path), gen, opt, completer)

elif re.match(r"^[\w.+-]+/[\w.+-]+$", model_path):
model_name = import_diffuser_model(model_path, gen, opt, completer)

pass
else:
print(
f"** {model_path} is neither the path to a .ckpt file nor a diffusers repository id. Can't import."
)
if model_path.startswith(('http:','https:')):
try:
default_name = url_attachment_name(model_path)
default_name = Path(default_name).stem
except Exception as e:
print(f'** URL: {str(e)}')
model_name, model_desc = _get_model_name_and_desc(
gen.model_manager,
completer,
model_name=default_name,
)
imported_name = gen.model_manager.heuristic_import(
model_path,
model_name=model_name,
description=model_desc,
convert=convert,
)

if not model_name:
if not imported_name:
print("** Import failed or was skipped")
return

if not _verify_load(model_name, gen):
if not _verify_load(imported_name, gen):
print("** model failed to load. Discarding configuration entry")
gen.model_manager.del_model(model_name)
gen.model_manager.del_model(imported_name)
return
if click.confirm('Make this the default model?', default=False):
gen.model_manager.set_default_model(model_name)
if click.confirm("Make this the default model?", default=False):
gen.model_manager.set_default_model(imported_name)

gen.model_manager.commit(opt.conf)
completer.update_models(gen.model_manager.list_models())
print(f">> {model_name} successfully installed")


def import_checkpoint_list(models: List[Path], gen, opt, completer)->List[str]:
'''
Does a mass import of all the checkpoint/safetensors on a path list
'''
model_names = list()
choice = input('** Directory of checkpoint/safetensors models detected. Install <a>ll or <s>elected models? [a] ') or 'a'
do_all = choice.startswith('a')
if do_all:
config_file = _ask_for_config_file(models[0], completer, plural=True)
manager = gen.model_manager
for model in sorted(models):
model_name = f'{model.stem}'
model_description = f'Imported model {model_name}'
if model_name in manager.model_names():
print(f'** {model_name} is already imported. Skipping.')
elif manager.import_ckpt_model(
model,
config = config_file,
model_name = model_name,
model_description = model_description,
commit_to_conf = opt.conf):
model_names.append(model_name)
print(f'>> Model {model_name} imported successfully')
else:
print(f'** Model {model} failed to import')
else:
for model in sorted(models):
if click.confirm(f'Import {model.stem} ?', default=True):
if model_name := import_ckpt_model(model, gen, opt, completer):
print(f'>> Model {model.stem} imported successfully')
model_names.append(model_name)
else:
print(f'** Model {model} failed to import')
print()
return model_names

def import_diffuser_model(
path_or_repo: Union[Path, str], gen, _, completer
) -> Optional[str]:
path_or_repo = path_or_repo.replace('\\','/') # windows
manager = gen.model_manager
default_name = Path(path_or_repo).stem
default_description = f"Imported model {default_name}"
model_name, model_description = _get_model_name_and_desc(
manager,
completer,
model_name=default_name,
model_description=default_description,
)
vae = None
if click.confirm('Replace this model\'s VAE with "stabilityai/sd-vae-ft-mse"?', default=False):
vae = dict(repo_id='stabilityai/sd-vae-ft-mse')

if not manager.import_diffuser_model(
path_or_repo, model_name=model_name, vae=vae, description=model_description
):
print("** model failed to import")
return None
return model_name

def import_ckpt_model(
path_or_url: Union[Path, str], gen, opt, completer
) -> Optional[str]:
path_or_url = path_or_url.replace('\\','/')
manager = gen.model_manager
is_a_url = str(path_or_url).startswith(('http:','https:'))
base_name = Path(url_attachment_name(path_or_url)).name if is_a_url else Path(path_or_url).name
default_name = Path(base_name).stem
default_description = f"Imported model {default_name}"

model_name, model_description = _get_model_name_and_desc(
manager,
completer,
model_name=default_name,
model_description=default_description,
)
config_file = None
default = (
Path(Globals.root, "configs/stable-diffusion/v1-inpainting-inference.yaml")
if re.search("inpaint", default_name, flags=re.IGNORECASE)
else Path(Globals.root, "configs/stable-diffusion/v1-inference.yaml")
)

completer.complete_extensions((".yaml", ".yml"))
completer.set_line(str(default))
done = False
while not done:
config_file = input("Configuration file for this model: ").strip()
done = os.path.exists(config_file)

completer.complete_extensions((".ckpt", ".safetensors"))
vae = None
default = Path(
Globals.root, "models/ldm/stable-diffusion-v1/vae-ft-mse-840000-ema-pruned.ckpt"
)
completer.set_line(str(default))
done = False
while not done:
vae = input("VAE file for this model (leave blank for none): ").strip() or None
done = (not vae) or os.path.exists(vae)
completer.complete_extensions(None)

if not manager.import_ckpt_model(
path_or_url,
config=config_file,
vae=vae,
model_name=model_name,
model_description=model_description,
commit_to_conf=opt.conf,
):
print("** model failed to import")
return None

return model_name


def _verify_load(model_name: str, gen) -> bool:
print(">> Verifying that new model loads...")
current_model = gen.model_name
try:
if not gen.model_manager.get_model(model_name):
if not gen.set_model(model_name):
return False
except Exception as e:
print(f'** model failed to load: {str(e)}')
print('** note that importing 2.X checkpoints is not supported. Please use !convert_model instead.')
print(f"** model failed to load: {str(e)}")
print(
"** note that importing 2.X checkpoints is not supported. Please use !convert_model instead."
)
return False
if click.confirm('Keep model loaded?', default=True):
if click.confirm("Keep model loaded?", default=True):
gen.set_model(model_name)
else:
print(">> Restoring previous model")
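
The rewritten `import_model` follows an import, verify, roll back pattern: register the model through `heuristic_import()`, check that it loads, and delete the configuration entry again if it does not. The sketch below reproduces only that control flow against an in-memory stand-in. `FakeModelManager` and `import_and_verify` are hypothetical and exist purely for illustration. The real `ModelManager` is not used, the interactive prompts are replaced by plain arguments, and `get_model` stands in for the `_verify_load` helper.

```python
from typing import Dict, Optional, Set


class FakeModelManager:
    """In-memory stand-in that mimics only the calls used by this sketch."""

    def __init__(self, loadable: Set[str]):
        self.loadable = loadable          # names that will "load" successfully
        self.models: Dict[str, str] = {}  # name -> source path
        self.default: Optional[str] = None
        self.committed = False

    def heuristic_import(self, path, model_name=None, description=None,
                         convert=False) -> Optional[str]:
        if not path or not model_name:
            return None
        self.models[model_name] = path
        return model_name

    def get_model(self, name: str) -> bool:
        return name in self.loadable

    def del_model(self, name: str) -> None:
        self.models.pop(name, None)

    def set_default_model(self, name: str) -> None:
        self.default = name

    def commit(self) -> None:
        self.committed = True


def import_and_verify(manager, path: str, name: str,
                      make_default: bool = False) -> Optional[str]:
    """Import a model, keep it only if it loads, then persist the config."""
    imported = manager.heuristic_import(path, model_name=name,
                                        description=f"Imported model {name}")
    if not imported:
        return None                    # import failed or was skipped
    if not manager.get_model(imported):
        manager.del_model(imported)    # roll back the configuration entry
        return None
    if make_default:
        manager.set_default_model(imported)
    manager.commit()
    return imported
```

Committing only after verification means a model that fails to load never reaches the saved configuration in this sketch.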

@@ -821,6 +698,7 @@ def _get_model_name_and_desc(
model_manager, completer, model_name: str = "", model_description: str = ""
):
model_name = _get_model_name(model_manager.list_models(), completer, model_name)
model_description = model_description or f"Imported model {model_name}"
completer.set_line(model_description)
model_description = (
input(f"Description for this model [{model_description}]: ").strip()

@@ -828,46 +706,11 @@ def _get_model_name_and_desc(
)
return model_name, model_description

def _ask_for_config_file(model_path: Union[str,Path], completer, plural: bool=False)->Path:
default = '1'
if re.search('inpaint',str(model_path),flags=re.IGNORECASE):
default = '3'
choices={
'1': 'v1-inference.yaml',
'2': 'v2-inference-v.yaml',
'3': 'v1-inpainting-inference.yaml',
}

prompt = '''What type of models are these?:
[1] Models based on Stable Diffusion 1.X
[2] Models based on Stable Diffusion 2.X
[3] Inpainting models based on Stable Diffusion 1.X
[4] Something else''' if plural else '''What type of model is this?:
[1] A model based on Stable Diffusion 1.X
[2] A model based on Stable Diffusion 2.X
[3] An inpainting models based on Stable Diffusion 1.X
[4] Something else'''
print(prompt)
choice = input(f'Your choice: [{default}] ')
choice = choice.strip() or default
if config_file := choices.get(choice,None):
return Path('configs','stable-diffusion',config_file)

# otherwise ask user to select
done = False
completer.complete_extensions(('.yaml','.yml'))
completer.set_line(str(Path(Globals.root,'configs/stable-diffusion/')))
while not done:
config_path = input('Configuration file for this model (leave blank to abort): ').strip()
done = not config_path or os.path.exists(config_path)
return config_path

def optimize_model(model_name_or_path: Union[Path,str], gen, opt, completer):
model_name_or_path = model_name_or_path.replace('\\','/') # windows
def convert_model(model_name_or_path: Union[Path, str], gen, opt, completer) -> str:
model_name_or_path = model_name_or_path.replace("\\", "/") # windows
manager = gen.model_manager
ckpt_path = None
original_config_file=None

original_config_file = None
if model_name_or_path == gen.model_name:
print("** Can't convert the active model. !switch to another model first. **")
return

@@ -877,61 +720,39 @@ def optimize_model(model_name_or_path: Union[Path,str], gen, opt, completer):
original_config_file = Path(model_info["config"])
model_name = model_name_or_path
model_description = model_info["description"]
vae = model_info["vae"]
else:
print(f"** {model_name_or_path} is not a legacy .ckpt weights file")
return
elif os.path.exists(model_name_or_path):
original_config_file = original_config_file or _ask_for_config_file(model_name_or_path, completer)
if not original_config_file:
return
ckpt_path = Path(model_name_or_path)
model_name, model_description = _get_model_name_and_desc(
manager, completer, ckpt_path.stem, f"Converted model {ckpt_path.stem}"
if vae_repo := ldm.invoke.model_manager.VAE_TO_REPO_ID.get(Path(vae).stem):
vae_repo = dict(repo_id=vae_repo)
else:
vae_repo = None
model_name = manager.convert_and_import(
ckpt_path,
diffusers_path=Path(
Globals.root, "models", Globals.converted_ckpts_dir, model_name_or_path
),
model_name=model_name,
model_description=model_description,
original_config_file=original_config_file,
vae=vae_repo,
)
else:
print(
f"** {model_name_or_path} is neither an existing model nor the path to a .ckpt file"
)
try:
model_name = import_model(model_name_or_path, gen, opt, completer, convert=True)
except KeyboardInterrupt:
return

if not model_name:
print("** Conversion failed. Aborting.")
return

if not ckpt_path.is_absolute():
ckpt_path = Path(Globals.root, ckpt_path)

if original_config_file and not original_config_file.is_absolute():
original_config_file = Path(Globals.root, original_config_file)

diffuser_path = Path(
Globals.root, "models", Globals.converted_ckpts_dir, model_name
)
if diffuser_path.exists():
print(
f"** {model_name_or_path} is already optimized. Will not overwrite. If this is an error, please remove the directory {diffuser_path} and try again."
)
return

vae = None
if click.confirm('Replace this model\'s VAE with "stabilityai/sd-vae-ft-mse"?', default=False):
vae = dict(repo_id='stabilityai/sd-vae-ft-mse')

new_config = gen.model_manager.convert_and_import(
ckpt_path,
diffuser_path,
model_name=model_name,
model_description=model_description,
vae=vae,
original_config_file=original_config_file,
commit_to_conf=opt.conf,
)
if not new_config:
return

completer.update_models(gen.model_manager.list_models())
if click.confirm(f'Load optimized model {model_name}?', default=True):
gen.set_model(model_name)

if click.confirm(f'Delete the original .ckpt file at {ckpt_path}?',default=False):
manager.commit(opt.conf)
if click.confirm(f"Delete the original .ckpt file at {ckpt_path}?", default=False):
ckpt_path.unlink(missing_ok=True)
print(f"{ckpt_path} deleted")
return model_name


def del_config(model_name: str, gen, opt, completer):

@@ -943,11 +764,15 @@ def del_config(model_name: str, gen, opt, completer):
print(f"** Unknown model {model_name}")
return

if not click.confirm(f'Remove {model_name} from the list of models known to InvokeAI?',default=True):
if not click.confirm(
f"Remove {model_name} from the list of models known to InvokeAI?", default=True
):
return

delete_completely = click.confirm('Completely remove the model file or directory from disk?',default=False)
gen.model_manager.del_model(model_name,delete_files=delete_completely)
delete_completely = click.confirm(
"Completely remove the model file or directory from disk?", default=False
)
gen.model_manager.del_model(model_name, delete_files=delete_completely)
gen.model_manager.commit(opt.conf)
print(f"** {model_name} deleted")
completer.update_models(gen.model_manager.list_models())

@ -970,13 +795,30 @@ def edit_model(model_name: str, gen, opt, completer):

|

||||

completer.set_line(info[attribute])

|

||||

info[attribute] = input(f"{attribute}: ") or info[attribute]

|

||||

|

||||

if info["format"] == "diffusers":

|

||||

vae = info.get("vae", dict(repo_id=None, path=None, subfolder=None))

|

||||

completer.set_line(vae.get("repo_id") or "stabilityai/sd-vae-ft-mse")

|

||||

vae["repo_id"] = input("External VAE repo_id: ").strip() or None

|

||||

if not vae["repo_id"]:

|

||||

completer.set_line(vae.get("path") or "")

|

||||

vae["path"] = (

|

||||

input("Path to a local diffusers VAE model (usually none): ").strip()

|

||||

or None

|

||||

)

|

||||

completer.set_line(vae.get("subfolder") or "")

|

||||

vae["subfolder"] = (

|

||||

input("Name of subfolder containing the VAE model (usually none): ").strip()

|

||||

or None

|

||||

)

|

||||

info["vae"] = vae

|

||||

|

||||

if new_name != model_name:

|

||||

manager.del_model(model_name)

|

||||

|

||||

# this does the update

|

||||

manager.add_model(new_name, info, True)

|

||||

|

||||

if click.confirm('Make this the default model?',default=False):

|

||||

if click.confirm("Make this the default model?", default=False):

|

||||

manager.set_default_model(new_name)

|

||||

manager.commit(opt.conf)

|

||||

completer.update_models(manager.list_models())

|

||||

@ -1354,7 +1196,10 @@ def report_model_error(opt: Namespace, e: Exception):

|

||||

"** Reconfiguration is being forced by environment variable INVOKE_MODEL_RECONFIGURE"

|

||||

)

|

||||

else:

|

||||

if click.confirm('Do you want to run invokeai-configure script to select and/or reinstall models?', default=True):

|

||||

if not click.confirm(

|

||||

'Do you want to run invokeai-configure script to select and/or reinstall models?',

|

||||

default=False

|

||||

):

|

||||

return

|

||||

|

||||

print("invokeai-configure is launching....\n")

|

||||

|

||||

@ -93,6 +93,7 @@ import shlex
 import sys
 from argparse import Namespace
 from pathlib import Path
+from typing import List

 import ldm.invoke
 import ldm.invoke.pngwriter

@ -173,10 +174,10 @@ class Args(object):
         self._arg_switches = self.parse_cmd('')  # fill in defaults
         self._cmd_switches = self.parse_cmd('')  # fill in defaults

-    def parse_args(self):
+    def parse_args(self, args: List[str]=None):
         '''Parse the shell switches and store.'''
+        sysargs = args if args is not None else sys.argv[1:]
         try:
-            sysargs = sys.argv[1:]
             # pre-parse before we do any initialization to get root directory
             # and intercept --version request
             switches = self._arg_parser.parse_args(sysargs)

|

||||
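The `parse_args` change above lets a caller pass an explicit argument list instead of always reading `sys.argv`. A minimal sketch of that pattern follows, assuming a stand-alone function: `parse_switches` and its single `--outdir` option are illustrative stand-ins, not the actual `Args` class.

```python
import argparse
import sys
from typing import List, Optional


def parse_switches(args: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse an explicit argument list, falling back to the process arguments."""
    # Hypothetical parser with one option; the real Args class defines many more.
    parser = argparse.ArgumentParser(prog="demo")
    parser.add_argument("--outdir", "-o", type=str, default="outputs")
    # Same fallback as in the diff: only read sys.argv when no list was passed.
    sysargs = args if args is not None else sys.argv[1:]
    return parser.parse_args(sysargs)


explicit = parse_switches(["--outdir", "/tmp/images"])
defaults = parse_switches([])
```

Passing the list explicitly is convenient when one script invokes another's parser, or in unit tests, since nothing has to patch `sys.argv`.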

@ -539,11 +540,17 @@ class Args(object):
             default=False,
             help='Check for and blur potentially NSFW images. Use --no-nsfw_checker to disable.',
         )
+        model_group.add_argument(
+            '--autoimport',
+            default=None,
+            type=str,
+            help='Check the indicated directory for .ckpt/.safetensors weights files at startup and import directly',
+        )
         model_group.add_argument(
             '--autoconvert',
             default=None,
             type=str,
-            help='Check the indicated directory for .ckpt weights files at startup and import as optimized diffuser models',
+            help='Check the indicated directory for .ckpt/.safetensors weights files at startup and import as optimized diffuser models',
         )
         model_group.add_argument(
             '--patchmatch',

@ -561,8 +568,8 @@ class Args(object):
             '--outdir',
             '-o',
             type=str,
-            help='Directory to save generated images and a log of prompts and seeds. Default: outputs/img-samples',
-            default='outputs/img-samples',
+            help='Directory to save generated images and a log of prompts and seeds. Default: ROOTDIR/outputs',
+            default='outputs',
         )
         file_group.add_argument(
             '--prompt_as_dir',

|

||||
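The `--autoimport` and `--autoconvert` switches in the hunk above both take a directory and default to `None`. As a rough sketch of how startup code might act on them: the helper `plan_startup_scans` and the example path are hypothetical and not part of InvokeAI, and only the two option names and their defaults are taken from the diff.

```python
import argparse
from typing import List, Tuple


def build_parser() -> argparse.ArgumentParser:
    """Build a tiny parser that declares only the two directory switches."""
    parser = argparse.ArgumentParser(prog="demo")
    model_group = parser.add_argument_group("Model selection")
    model_group.add_argument("--autoimport", default=None, type=str,
                             help="Directory of weights files to import directly")
    model_group.add_argument("--autoconvert", default=None, type=str,
                             help="Directory of weights files to import as diffusers models")
    return parser


def plan_startup_scans(opt: argparse.Namespace) -> List[Tuple[str, bool]]:
    """Return (directory, convert_to_diffusers) pairs for each switch that was set."""
    plan: List[Tuple[str, bool]] = []
    if opt.autoimport:
        plan.append((opt.autoimport, False))   # import weights as-is
    if opt.autoconvert:
        plan.append((opt.autoconvert, True))   # import and convert to diffusers
    return plan


opt = build_parser().parse_args(["--autoconvert", "/models/incoming"])
scans = plan_startup_scans(opt)
```

Because both switches default to `None`, the plan is empty when neither is given, so a startup routine built this way would do no scanning unless asked to.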

@ -803,6 +803,7 @@ def load_pipeline_from_original_stable_diffusion_ckpt(

|

||||

extract_ema:bool=True,

|

||||

upcast_attn:bool=False,

|

||||

vae:AutoencoderKL=None,

|

||||

precision:torch.dtype=torch.float32,

|

||||

return_generator_pipeline:bool=False,

|

||||

)->Union[StableDiffusionPipeline,StableDiffusionGeneratorPipeline]:

|

||||

'''

|

||||

@ -828,6 +829,7 @@ def load_pipeline_from_original_stable_diffusion_ckpt(

|

||||

checkpoints that have both EMA and non-EMA weights. Whether to extract the EMA weights

|

||||

or not. Defaults to `True`, which extracts the EMA weights. EMA weights usually yield higher

|

||||

quality images for inference. Non-EMA weights are usually better to continue fine-tuning.

|

||||

:param precision: precision to use - torch.float16, torch.float32 or torch.autocast

|

||||

:param upcast_attn: Whether the attention computation should always be upcasted. This is necessary when

|

||||

running stable diffusion 2.1.

|

||||

'''

|

||||

@ -988,12 +990,12 @@ def load_pipeline_from_original_stable_diffusion_ckpt(

|

||||

safety_checker = StableDiffusionSafetyChecker.from_pretrained('CompVis/stable-diffusion-safety-checker',cache_dir=global_cache_dir("hub"))

|

||||

feature_extractor = AutoFeatureExtractor.from_pretrained("CompVis/stable-diffusion-safety-checker",cache_dir=cache_dir)

|

||||

pipe = pipeline_class(

|

||||

vae=vae,

|

||||

text_encoder=text_model,

|

||||

vae=vae.to(precision),

|

||||

text_encoder=text_model.to(precision),

|

||||

tokenizer=tokenizer,

|

||||

unet=unet,

|

||||

unet=unet.to(precision),

|

||||

scheduler=scheduler,

|

||||

safety_checker=safety_checker,

|

||||

safety_checker=safety_checker.to(precision),

|

||||

feature_extractor=feature_extractor,

|

||||

)

|

||||

else:

|

||||

|

||||

File diff suppressed because it is too large

495

ldm/invoke/config/model_install.py

Normal file

@ -0,0 +1,495 @@

|

||||

#!/usr/bin/env python

|

||||

# Copyright (c) 2022 Lincoln D. Stein (https://github.com/lstein)

|

||||

# Before running stable-diffusion on an internet-isolated machine,

|

||||

# run this script from one with internet connectivity. The

|

||||

# two machines must share a common .cache directory.

|

||||

|

||||

"""

|

||||

This is the npyscreen frontend to the model installation application.

|

||||

The work is actually done in backend code in model_install_backend.py.

|

||||

"""

|

||||

|

||||

import argparse

|

||||

import curses

|

||||

import os

|

||||

import sys

|

||||

import traceback

|

||||

from argparse import Namespace

|

||||

from pathlib import Path

|

||||

from typing import List

|

||||

|

||||

import npyscreen

|

||||

import torch

|

||||

from npyscreen import widget

|

||||

from omegaconf import OmegaConf

|

||||

|

||||

from ..devices import choose_precision, choose_torch_device

|

||||

from ..globals import Globals, global_config_dir

|

||||

from .model_install_backend import (Dataset_path, default_config_file,

|

||||

default_dataset, get_root,

|

||||

install_requested_models,

|

||||

recommended_datasets)

|

||||

from .widgets import (MultiSelectColumns, TextBox,

|

||||

OffsetButtonPress, CenteredTitleText)

|

||||

|

||||

class addModelsForm(npyscreen.FormMultiPage):

|

||||

# for responsive resizing - disabled

|

||||

#FIX_MINIMUM_SIZE_WHEN_CREATED = False

|

||||

|

||||

def __init__(self, parentApp, name, multipage=False, *args, **keywords):

|

||||

self.multipage = multipage

|

||||

self.initial_models = OmegaConf.load(Dataset_path)

|

||||

try:

|

||||

self.existing_models = OmegaConf.load(default_config_file())

|

||||

except Exception:

|

||||

self.existing_models = dict()

|

||||

self.starter_model_list = [

|

||||

x for x in list(self.initial_models.keys()) if x not in self.existing_models

|

||||

]

|

||||

self.installed_models = dict()

|

||||

super().__init__(parentApp=parentApp, name=name, *args, **keywords)

|

||||

|

||||

def create(self):

|

||||

window_height, window_width = curses.initscr().getmaxyx()

|

||||

starter_model_labels = self._get_starter_model_labels()

|

||||

recommended_models = [

|

||||

x

|

||||

for x in self.starter_model_list

|

||||

if self.initial_models[x].get("recommended", False)

|

||||

]

|

||||

self.installed_models = sorted(

|

||||

[x for x in list(self.initial_models.keys()) if x in self.existing_models]

|

||||

)

|

||||

self.nextrely -= 1

|

||||

self.add_widget_intelligent(

|

||||

npyscreen.FixedText,

|

||||

value="Use ctrl-N and ctrl-P to move to the <N>ext and <P>revious fields.",

|

||||

editable=False,

|

||||

color='CAUTION',

|

||||

)

|

||||

self.add_widget_intelligent(

|

||||

npyscreen.FixedText,

|

||||

value="Use cursor arrows to make a selection, and space to toggle checkboxes.",

|

||||

editable=False,

|

||||

color='CAUTION'

|

||||

)

|

||||

self.nextrely += 1

|

||||

if len(self.installed_models) > 0:

|

||||

self.add_widget_intelligent(

|

||||

CenteredTitleText,

|

||||

name="== INSTALLED STARTER MODELS ==",

|

||||

editable=False,

|

||||

color="CONTROL",

|

||||

)

|

||||

self.nextrely -= 1

|

||||

self.add_widget_intelligent(

|

||||

CenteredTitleText,

|

||||

name="Currently installed starter models. Uncheck to delete:",

|

||||

editable=False,

|

||||

labelColor="CAUTION",

|

||||

)

|

||||

self.nextrely -= 1

|

||||

columns = self._get_columns()

|

||||

self.previously_installed_models = self.add_widget_intelligent(

|

||||

MultiSelectColumns,

|

||||

columns=columns,

|

||||

values=self.installed_models,

|

||||

value=[x for x in range(0, len(self.installed_models))],

|

||||

max_height=1 + len(self.installed_models) // columns,

|

||||

relx=4,

|

||||

slow_scroll=True,

|

||||

scroll_exit=True,

|

||||

)

|

||||

self.purge_deleted = self.add_widget_intelligent(

|

||||

npyscreen.Checkbox,

|

||||

name="Purge deleted models from disk",

|

||||

value=False,

|

||||

scroll_exit=True,

|

||||

relx=4,

|

||||

)

|

||||

self.nextrely += 1

|

||||

self.add_widget_intelligent(

|

||||

CenteredTitleText,

|

||||

name="== STARTER MODELS (recommended ones selected) ==",

|

||||

editable=False,

|

||||

color="CONTROL",

|

||||

)

|

||||

self.nextrely -= 1

|

||||

self.add_widget_intelligent(

|

||||

CenteredTitleText,

|

||||

name="Select from a starter set of Stable Diffusion models from HuggingFace:",

|

||||

editable=False,

|

||||

labelColor="CAUTION",

|

||||

)

|

||||

|

||||

self.nextrely -= 1

|

||||

# if user has already installed some initial models, then don't patronize them

|

||||

# by showing more recommendations

|

||||

show_recommended = not self.existing_models

|

||||

self.models_selected = self.add_widget_intelligent(

|

||||

npyscreen.MultiSelect,

|

||||

name="Install Starter Models",

|

||||

values=starter_model_labels,

|

||||

value=[

|

||||

self.starter_model_list.index(x)

|

||||

for x in self.starter_model_list

|

||||

if show_recommended and x in recommended_models

|

||||

],

|

||||

max_height=len(starter_model_labels) + 1,

|

||||

relx=4,

|

||||

scroll_exit=True,

|

||||

)

|

||||

self.add_widget_intelligent(

|

||||

CenteredTitleText,

|

||||

name='== IMPORT LOCAL AND REMOTE MODELS ==',

|

||||

editable=False,

|

||||

color="CONTROL",

|

||||

)

|

||||

self.nextrely -= 1

|

||||

|

||||

for line in [

|

||||

"In the box below, enter URLs, file paths, or HuggingFace repository IDs.",

|

||||

"Separate model names by lines or whitespace (Use shift-control-V to paste):",

|

||||

]:

|

||||

self.add_widget_intelligent(

|

||||

CenteredTitleText,

|

||||

name=line,

|

||||

editable=False,

|

||||

labelColor="CONTROL",

|

||||

relx = 4,

|

||||

)

|

||||

self.nextrely -= 1

|

||||

self.import_model_paths = self.add_widget_intelligent(

|

||||

TextBox, max_height=5, scroll_exit=True, editable=True, relx=4

|

||||

)

|

||||

self.nextrely += 1

|

||||

self.show_directory_fields = self.add_widget_intelligent(

|

||||

npyscreen.FormControlCheckbox,

|

||||

name="Select a directory for models to import",

|

||||

value=False,

|

||||

)

|

||||

self.autoload_directory = self.add_widget_intelligent(

|

||||

npyscreen.TitleFilename,

|

||||

name="Directory (<tab> autocompletes):",

|

||||

select_dir=True,

|

||||

must_exist=True,

|

||||

use_two_lines=False,

|

||||

labelColor="DANGER",

|

||||

begin_entry_at=34,

|

||||

scroll_exit=True,

|

||||

)

|

||||

self.autoscan_on_startup = self.add_widget_intelligent(

|

||||

npyscreen.Checkbox,

|

||||

name="Scan this directory each time InvokeAI starts for new models to import",

|

||||

value=False,

|

||||

relx=4,

|

||||

scroll_exit=True,

|

||||

)

|

||||

self.nextrely += 1

|

||||

self.convert_models = self.add_widget_intelligent(

|

||||

npyscreen.TitleSelectOne,

|

||||

name="== CONVERT IMPORTED MODELS INTO DIFFUSERS ==",

|

||||

values=["Keep original format", "Convert to diffusers"],

|

||||

value=0,

|

||||

begin_entry_at=4,

|

||||

max_height=4,

|

||||

hidden=True, # will appear when imported models box is edited

|

||||

scroll_exit=True,

|

||||

)

|

||||

self.cancel = self.add_widget_intelligent(

|

||||

npyscreen.ButtonPress,

|

||||

name="CANCEL",

|

||||

rely=-3,

|

||||

when_pressed_function=self.on_cancel,

|

||||

)

|

||||

done_label = "DONE"

|

||||

back_label = "BACK"

|

||||

button_length = len(done_label)

|

||||

button_offset = 0

|

||||

if self.multipage:

|

||||

button_length += len(back_label) + 1

|

||||

button_offset += len(back_label) + 1

|

||||

self.back_button = self.add_widget_intelligent(

|

||||

OffsetButtonPress,

|

||||

name=back_label,

|

||||

relx=(window_width - button_length) // 2,

|

||||

offset=-3,

|

||||

rely=-3,

|

||||

when_pressed_function=self.on_back,

|

||||

)

|

||||

self.ok_button = self.add_widget_intelligent(

|

||||

OffsetButtonPress,

|

||||

name=done_label,

|

||||

offset=+3,

|

||||

relx=button_offset + 1 + (window_width - button_length) // 2,

|

||||

rely=-3,

|

||||

when_pressed_function=self.on_ok,

|

||||

)

|

||||

|

||||

for i in [self.autoload_directory, self.autoscan_on_startup]:

|

||||

self.show_directory_fields.addVisibleWhenSelected(i)

|

||||

|

||||

self.show_directory_fields.when_value_edited = self._clear_scan_directory

|

||||

self.import_model_paths.when_value_edited = self._show_hide_convert

|

||||

self.autoload_directory.when_value_edited = self._show_hide_convert

|

||||

|

||||

def resize(self):

|

||||

super().resize()

|

||||

self.models_selected.values = self._get_starter_model_labels()

|

||||

|

||||

def _clear_scan_directory(self):

|

||||

if not self.show_directory_fields.value:

|

||||

self.autoload_directory.value = ""

|

||||

|

||||

def _show_hide_convert(self):

|

||||

model_paths = self.import_model_paths.value or ""

|

||||

autoload_directory = self.autoload_directory.value or ""

|

||||

self.convert_models.hidden = (

|

||||

len(model_paths) == 0 and len(autoload_directory) == 0

|

||||

)

|

||||

|

||||

def _get_starter_model_labels(self) -> List[str]:

|

||||

window_height, window_width = curses.initscr().getmaxyx()

|

||||

label_width = 25

|

||||

checkbox_width = 4

|

||||

spacing_width = 2

|

||||

description_width = window_width - label_width - checkbox_width - spacing_width

|

||||

im = self.initial_models

|

||||

names = self.starter_model_list

|

||||

descriptions = [

|

||||

im[x].description[0 : description_width - 3] + "..."

|

||||

if len(im[x].description) > description_width

|

||||

else im[x].description

|

||||

for x in names

|

||||

]

|

||||

return [

|

||||

f"%-{label_width}s %s" % (names[x], descriptions[x])

|

||||

for x in range(0, len(names))

|

||||

]

|

||||

|

||||

def _get_columns(self) -> int:

|

||||

window_height, window_width = curses.initscr().getmaxyx()

|

||||

cols = (

|

||||

4

|

||||

if window_width > 240

|

||||

else 3

|

||||

if window_width > 160

|

||||

else 2

|

||||

if window_width > 80

|

||||

else 1

|

||||

)

|

||||

return min(cols, len(self.installed_models))

|

||||

|

||||

def on_ok(self):

|

||||

self.parentApp.setNextForm(None)

|

||||

self.editing = False

|

||||

self.parentApp.user_cancelled = False

|

||||

self.marshall_arguments()

|

||||

|

||||

def on_back(self):

|

||||

self.parentApp.switchFormPrevious()

|

||||

self.editing = False

|

||||

|

||||

def on_cancel(self):

|

||||

if npyscreen.notify_yes_no(

|

||||

"Are you sure you want to cancel?\nYou may re-run this script later using the invoke.sh or invoke.bat command.\n"

|

||||

):

|

||||

self.parentApp.setNextForm(None)

|

||||

self.parentApp.user_cancelled = True

|

||||

self.editing = False

|

||||

|

||||

def marshall_arguments(self):

|

||||

"""

|

||||

Assemble arguments and store as attributes of the application:

|

||||

.starter_models: dict of model names to install from INITIAL_CONFIGURE.yaml

|

||||

True => Install

|

||||

False => Remove

|

||||

.scan_directory: Path to a directory of models to scan and import

|

||||

.autoscan_on_startup: True if invokeai should scan and import at startup time

|

||||

.import_model_paths: list of URLs, repo_ids and file paths to import

|

||||

.convert_to_diffusers: if True, convert legacy checkpoints into diffusers

|

||||

"""

|

||||

# results are stored on the parent application's `user_selections` Namespace,

|

||||

# which the caller reads back after the form has exited

|

||||

selections = self.parentApp.user_selections

|

||||

|

||||

# starter models to install/remove

|

||||

starter_models = dict(

|

||||

map(

|

||||

lambda x: (self.starter_model_list[x], True), self.models_selected.value

|

||||

)

|

||||

)

|

||||

selections.purge_deleted_models = False

|

||||

if hasattr(self, "previously_installed_models"):

|

||||

unchecked = [

|

||||

self.previously_installed_models.values[x]

|

||||

for x in range(0, len(self.previously_installed_models.values))

|

||||

if x not in self.previously_installed_models.value

|

||||

]

|

||||

starter_models.update(map(lambda x: (x, False), unchecked))

|

||||

selections.purge_deleted_models = self.purge_deleted.value

|

||||

selections.starter_models = starter_models

|

||||

|

||||

# load directory and whether to scan on startup

|

||||

if self.show_directory_fields.value:

|

||||

selections.scan_directory = self.autoload_directory.value

|

||||

selections.autoscan_on_startup = self.autoscan_on_startup.value

|

||||

else:

|

||||

selections.scan_directory = None

|

||||

selections.autoscan_on_startup = False

|

||||

|

||||

# URLs and the like

|

||||

selections.import_model_paths = self.import_model_paths.value.split()

|

||||

selections.convert_to_diffusers = self.convert_models.value[0] == 1

|

||||

|

||||

|

||||

class AddModelApplication(npyscreen.NPSAppManaged):

|

||||

def __init__(self):

|

||||

super().__init__()

|

||||

self.user_cancelled = False

|

||||

self.user_selections = Namespace(

|

||||

starter_models=None,

|

||||

purge_deleted_models=False,

|

||||

scan_directory=None,

|

||||

autoscan_on_startup=None,

|

||||

import_model_paths=None,

|

||||

convert_to_diffusers=None,

|

||||

)

|

||||

|

||||

def onStart(self):

|

||||

npyscreen.setTheme(npyscreen.Themes.DefaultTheme)

|

||||

self.main_form = self.addForm(

|

||||

"MAIN", addModelsForm, name="Install Stable Diffusion Models"

|

||||

)

|

||||

|

||||

|

||||

# --------------------------------------------------------

|

||||

def process_and_execute(opt: Namespace, selections: Namespace):

|

||||

models_to_remove = [

|

||||

x for x in selections.starter_models if not selections.starter_models[x]

|

||||

]

|

||||

models_to_install = [

|

||||

x for x in selections.starter_models if selections.starter_models[x]

|

||||

]

|

||||

directory_to_scan = selections.scan_directory

|

||||

scan_at_startup = selections.autoscan_on_startup

|

||||

potential_models_to_install = selections.import_model_paths

|

||||

convert_to_diffusers = selections.convert_to_diffusers

|

||||

|

||||

install_requested_models(

|

||||

install_initial_models=models_to_install,

|

||||

remove_models=models_to_remove,

|

||||

scan_directory=Path(directory_to_scan) if directory_to_scan else None,

|

||||

external_models=potential_models_to_install,

|

||||

scan_at_startup=scan_at_startup,

|

||||

convert_to_diffusers=convert_to_diffusers,

|

||||

precision="float32"

|

||||

if opt.full_precision

|

||||

else choose_precision(torch.device(choose_torch_device())),

|

||||

purge_deleted=selections.purge_deleted_models,

|

||||

config_file_path=Path(opt.config_file) if opt.config_file else None,

|

||||

)

|

||||

|

||||

|

||||

# --------------------------------------------------------

|

||||

def select_and_download_models(opt: Namespace):

|

||||

precision = (

|

||||

"float32"

|

||||

if opt.full_precision

|

||||

else choose_precision(torch.device(choose_torch_device()))

|

||||

)

|

||||

if opt.default_only:

|

||||

install_requested_models(

|

||||

install_initial_models=default_dataset(),

|

||||

precision=precision,

|

||||

)

|

||||

elif opt.yes_to_all:

|

||||

install_requested_models(

|

||||

install_initial_models=recommended_datasets(),

|

||||

precision=precision,

|

||||

)

|

||||

else:

|

||||

installApp = AddModelApplication()

|

||||

installApp.run()

|

||||

|

||||

if not installApp.user_cancelled:

|

||||

process_and_execute(opt, installApp.user_selections)

|

||||

|

||||

|

||||

# -------------------------------------

|

||||

def main():

|

||||

parser = argparse.ArgumentParser(description="InvokeAI model downloader")

|

||||

parser.add_argument(

|

||||

"--full-precision",

|

||||

dest="full_precision",

|

||||

action=argparse.BooleanOptionalAction,

|

||||

type=bool,

|

||||

default=False,

|

||||

help="use 32-bit weights instead of faster 16-bit weights",

|

||||

)

|

||||

parser.add_argument(

|

||||

"--yes",

|

||||

"-y",

|

||||

dest="yes_to_all",

|

||||

action="store_true",

|

||||

help='answer "yes" to all prompts',

|

||||

)

|

||||

parser.add_argument(

|

||||

"--default_only",

|

||||

action="store_true",

|

||||

help="only install the default model",

|

||||

)

|

||||

parser.add_argument(

|

||||

"--config_file",

|

||||

"-c",

|

||||

dest="config_file",

|

||||

type=str,

|

||||

default=None,

|

||||

help="path to configuration file to create",

|

||||

)

|

||||

parser.add_argument(

|

||||

"--root_dir",

|

||||

dest="root",

|

||||

type=str,

|

||||

default=None,

|

||||

help="path to root of install directory",

|

||||

)

|

||||

opt = parser.parse_args()

|

||||

|

||||

# setting a global here

|

||||

Globals.root = os.path.expanduser(get_root(opt.root) or "")

|

||||

|

||||

if not global_config_dir().exists():

|

||||

print(

|

||||

">> Your InvokeAI root directory is not set up. Calling invokeai-configure."

|

||||

)

|

||||

import ldm.invoke.config.invokeai_configure

|

||||

|

||||

ldm.invoke.config.invokeai_configure.main()

|

||||

sys.exit(0)

|

||||

|

||||

try:

|

||||

select_and_download_models(opt)

|

||||

except AssertionError as e:

|

||||

print(str(e))

|

||||

sys.exit(-1)

|

||||

except KeyboardInterrupt:

|

||||

print("\nGoodbye! Come back soon.")

|

||||

except (widget.NotEnoughSpaceForWidget, Exception) as e:

|

||||

if str(e).startswith("Height of 1 allocated"):

|

||||

print(

|

||||

"** Insufficient vertical space for the interface. Please make your window taller and try again."

|

||||

)

|

||||

elif str(e).startswith("addwstr"):

|

||||

print(

|

||||

"** Insufficient horizontal space for the interface. Please make your window wider and try again."

|

||||

)

|

||||

else:

|

||||

print(f"** An error has occurred: {str(e)}")

|

||||

traceback.print_exc()

|

||||

sys.exit(-1)

|

||||

|

||||

|

||||

# -------------------------------------

|

||||

if __name__ == "__main__":

|

||||

main()

|

||||
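In `model_install.py` above, `marshall_arguments` records each starter model as `True` (install) or `False` (remove), and `process_and_execute` splits that dict into two lists before calling the backend. A minimal sketch of that step in isolation follows, with made-up model names and a hypothetical helper `split_starter_models` that is not part of the commit:

```python
from typing import Dict, List, Tuple


def split_starter_models(starter_models: Dict[str, bool]) -> Tuple[List[str], List[str]]:
    """Split a {model_name: wanted} mapping into (to_install, to_remove) lists."""
    to_install = [name for name, wanted in starter_models.items() if wanted]
    to_remove = [name for name, wanted in starter_models.items() if not wanted]
    return to_install, to_remove


# Illustrative selections: two models checked, one previously installed model unchecked.
selections = {"model-a": True, "model-b": False, "model-c": True}
to_install, to_remove = split_starter_models(selections)
```

Keeping the install/remove decision in a single dict means a model can never appear in both lists.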

452

ldm/invoke/config/model_install_backend.py

Normal file

@ -0,0 +1,452 @@

|

||||

"""

|

||||

Utility (backend) functions used by model_install.py

|

||||

"""