mirror of

https://github.com/invoke-ai/InvokeAI

synced 2024-08-30 20:32:17 +00:00

Merge branch 'main' into releases/v3.2.0rc1

@ -296,8 +296,18 @@ code for InvokeAI. For this to work, you will need to install the
on your system, please see the [Git Installation
Guide](https://github.com/git-guides/install-git)

You will also need to install the [frontend development toolchain](https://github.com/invoke-ai/InvokeAI/blob/main/docs/contributing/contribution_guides/contributingToFrontend.md).

If you have a "normal" installation, you should create a completely separate virtual environment for the git-based installation; otherwise, the two may interfere.

> **Why do I need the frontend toolchain**?
>
> The InvokeAI project uses trunk-based development. That means our `main` branch is the development branch, and releases are tags on that branch. Because development is very active, we don't keep an updated build of the UI in `main`; we only build it for production releases.
>
> That means that between releases, to have a functioning application when running directly from the repo, you will need to run the UI in dev mode or build it regularly (any time the UI code changes).

1. Create a fork of the InvokeAI repository through the GitHub UI or [this link](https://github.com/invoke-ai/InvokeAI/fork)
1. From the command line, run this command:
2. From the command line, run this command:
```bash
git clone https://github.com/<your_github_username>/InvokeAI.git
```

@ -305,10 +315,10 @@ Guide](https://github.com/git-guides/install-git)
This will create a directory named `InvokeAI` and populate it with the
full source code from your fork of the InvokeAI repository.

2. Activate the InvokeAI virtual environment as per step (4) of the manual
3. Activate the InvokeAI virtual environment as per step (4) of the manual
installation protocol (important!)

3. Enter the InvokeAI repository directory and run one of these
4. Enter the InvokeAI repository directory and run one of these
commands, based on your GPU:

=== "CUDA (NVidia)"

@ -334,11 +344,15 @@ installation protocol (important!)
Be sure to pass `-e` (for an editable install) and don't forget the
dot ("."). It is part of the command.

You can now run `invokeai` and its related commands. The code will be
5. Install the [frontend toolchain](https://github.com/invoke-ai/InvokeAI/blob/main/docs/contributing/contribution_guides/contributingToFrontend.md) and do a production build of the UI as described.

6. You can now run `invokeai` and its related commands. The code will be
read from the repository, so that you can edit the .py source files
and watch the code's behavior change.

4. If you wish to contribute to the InvokeAI project, you are
When you pull in new changes to the repo, be sure to re-build the UI.

7. If you wish to contribute to the InvokeAI project, you are
encouraged to establish a GitHub account and "fork"
https://github.com/invoke-ai/InvokeAI into your own copy of the
repository. You can then use GitHub functions to create and submit

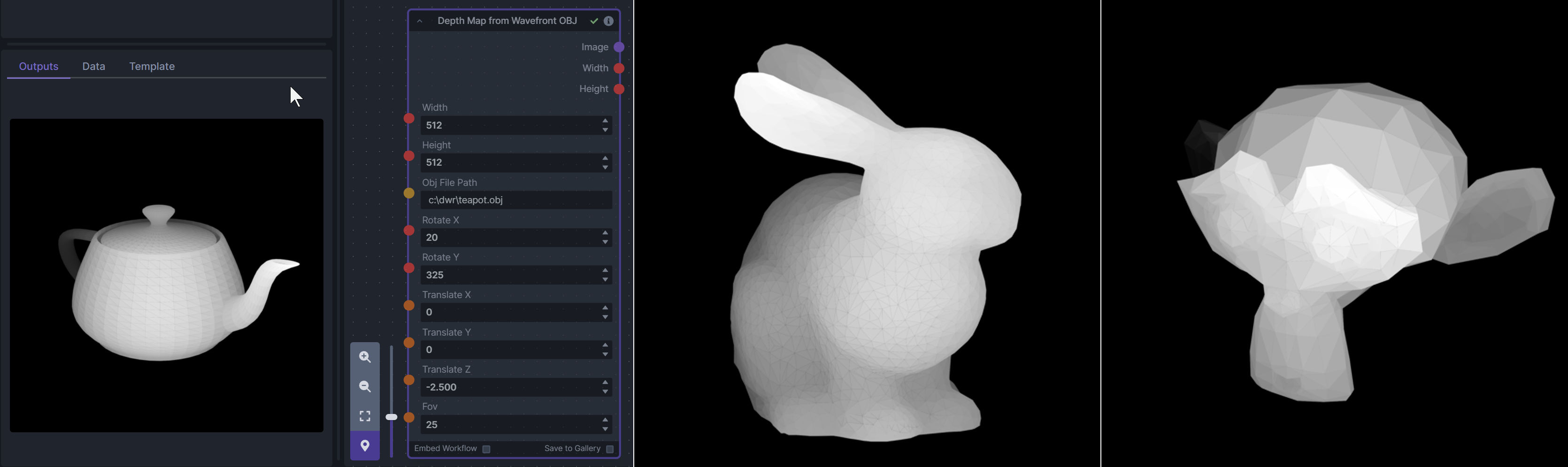

@ -121,18 +121,6 @@ To be imported, an .obj must use triangulated meshes, so make sure to enable tha

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

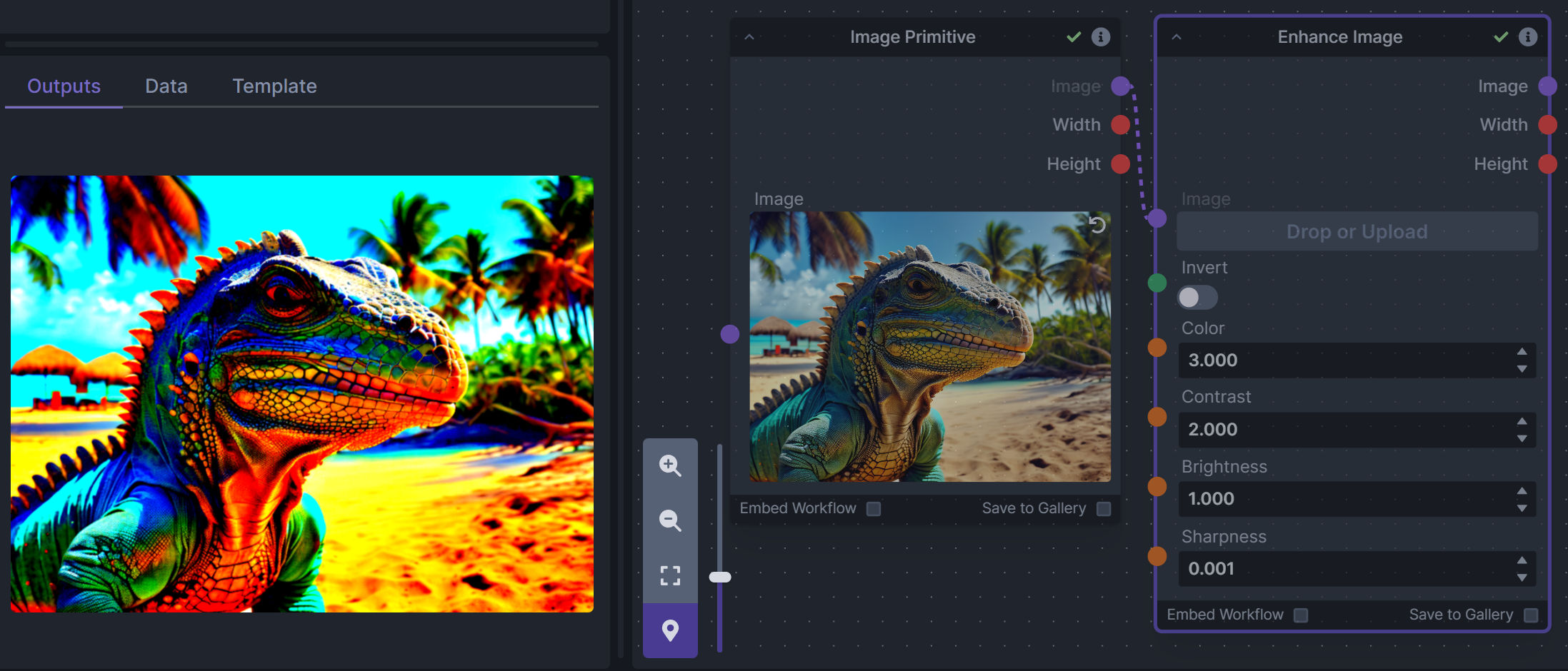

### Enhance Image (simple adjustments)

|

||||

|

||||

**Description:** Boost or reduce color saturation, contrast, brightness, sharpness, or invert colors of any image at any stage with this simple wrapper for pillow [PIL]'s ImageEnhance module.

|

||||

|

||||

Color inversion is toggled with a simple switch, while each of the four enhancer modes is activated by entering a value other than 1 in its corresponding input field. Values less than 1 reduce the corresponding property, while values greater than 1 enhance it.
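Pillow's `ImageEnhance` enhancers interpolate between the input image and a "degenerate" version of it (all black for `Brightness`, a flat image at the mean gray level for `Contrast`), which is why a factor of 1 is a no-op, factors below 1 move toward the degenerate image, and factors above 1 push away from it. The sketch below reproduces that arithmetic on a flat list of 8-bit grayscale values without Pillow; the helper names are hypothetical, it is not the node's code, and Pillow's own rounding may differ by one level.

```python
from statistics import mean


def _blend(degenerate: list[float], pixels: list[int], factor: float) -> list[int]:
    """Interpolate (0 <= factor <= 1) or extrapolate (factor > 1) from `degenerate` toward `pixels`."""
    out = []
    for d, p in zip(degenerate, pixels):
        value = d + factor * (p - d)
        out.append(max(0, min(255, round(value))))  # clamp to the 8-bit range
    return out


def adjust_brightness(pixels: list[int], factor: float) -> list[int]:
    # Degenerate image for brightness: all black.
    return _blend([0.0] * len(pixels), pixels, factor)


def adjust_contrast(pixels: list[int], factor: float) -> list[int]:
    # Degenerate image for contrast: a flat image at the rounded mean level.
    level = int(mean(pixels) + 0.5)
    return _blend([float(level)] * len(pixels), pixels, factor)


def invert(pixels: list[int]) -> list[int]:
    return [255 - p for p in pixels]


row = [40, 100, 160, 220]
print(adjust_brightness(row, 1.0))  # [40, 100, 160, 220]: factor 1 changes nothing
print(adjust_brightness(row, 0.5))  # [20, 50, 80, 110]
print(adjust_contrast(row, 1.5))    # [0, 85, 175, 255]
```

The same blend, with a blurred or grayscale copy as the degenerate input, is what Pillow uses for `Sharpness` and `Color`.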

**Node Link:** https://github.com/dwringer/image-enhance-node

**Example Usage:**

--------------------------------
### Generative Grammar-Based Prompt Nodes

@ -153,16 +141,26 @@ This includes 3 Nodes:

|

||||

|

||||

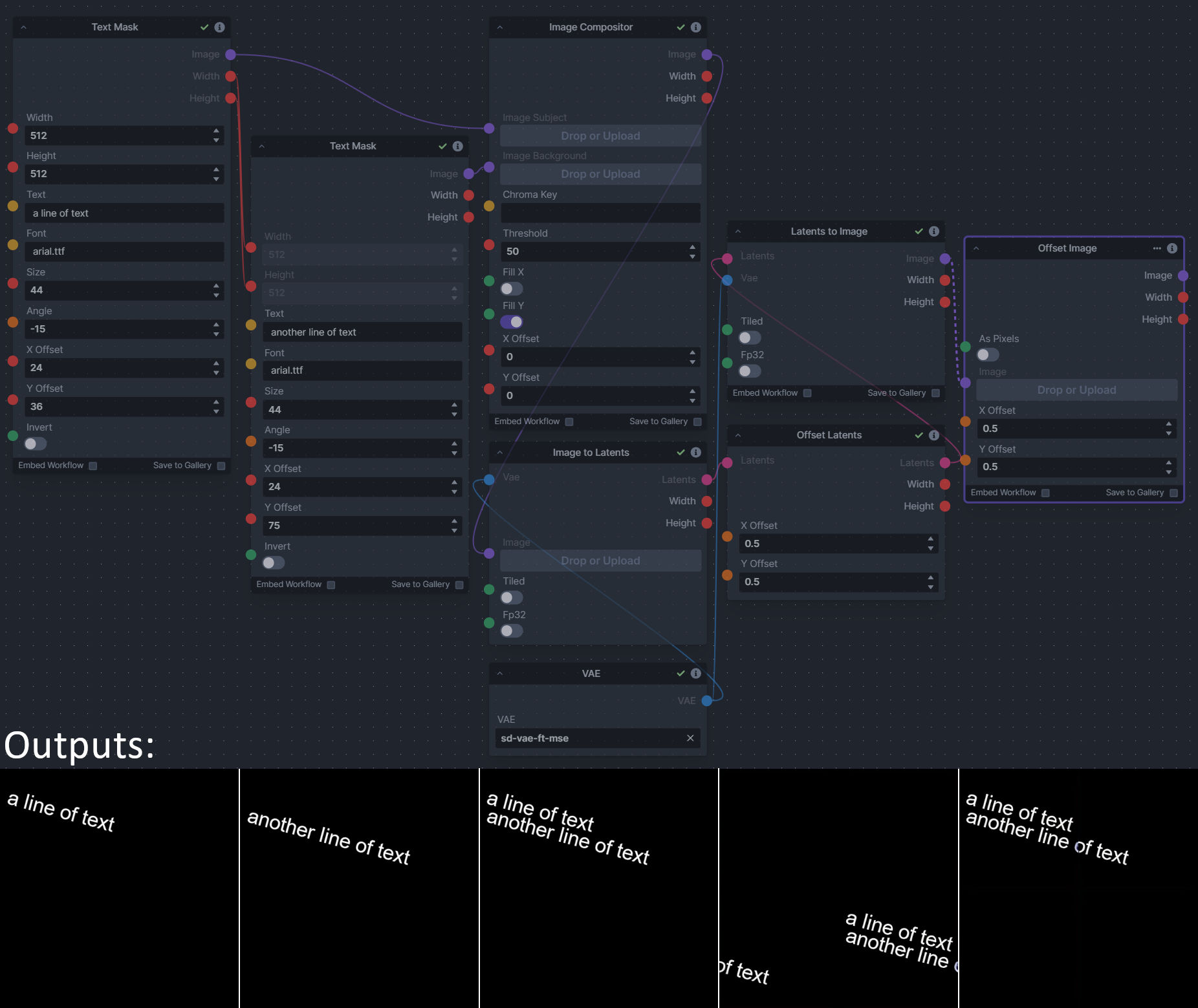

**Description:** This is a pack of nodes for composing masks and images, including a simple text mask creator and both image and latent offset nodes. The offsets wrap around, so these can be used in conjunction with the Seamless node to progressively generate centered on different parts of the seamless tiling.

|

||||

|

||||

This includes 4 Nodes:

|

||||

- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.

|

||||

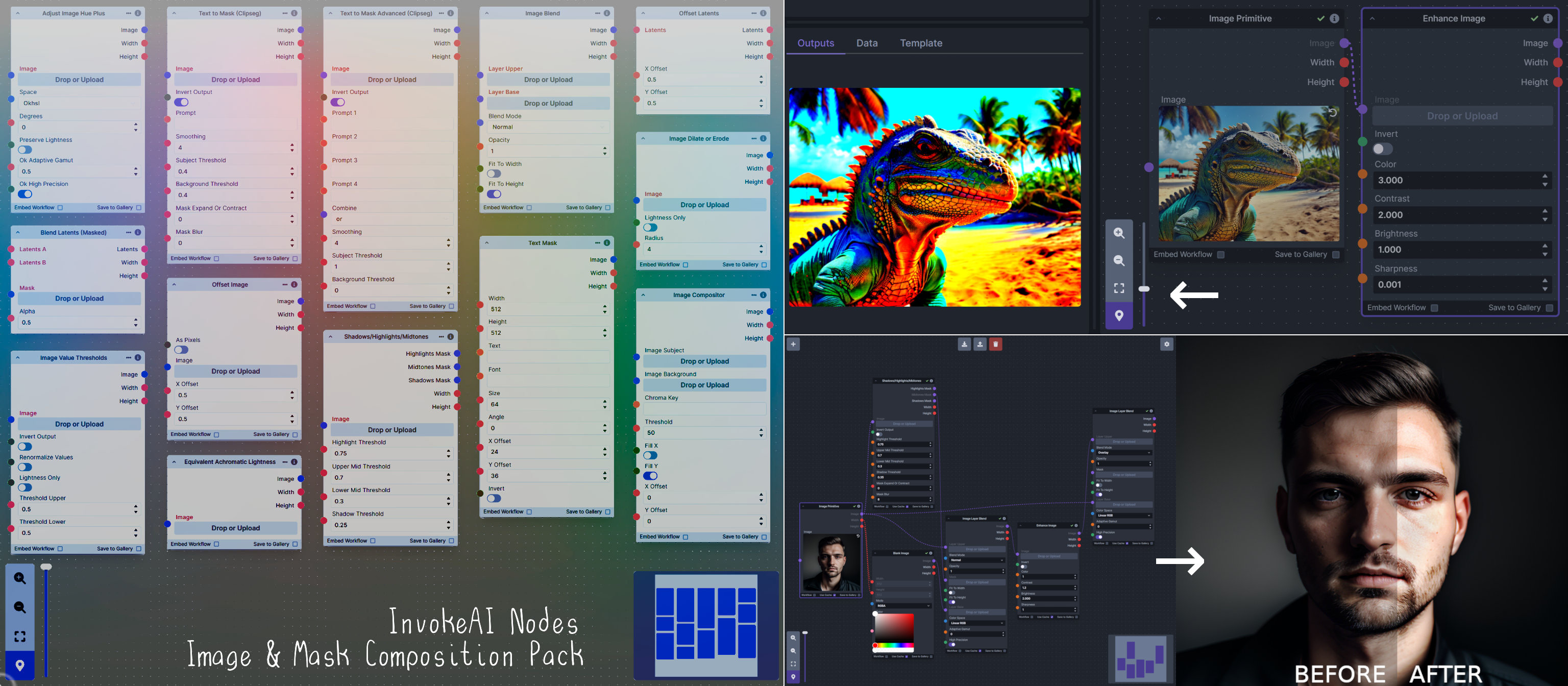

This includes 14 Nodes:

|

||||

- *Adjust Image Hue Plus* - Rotate the hue of an image in one of several different color spaces.

|

||||

- *Blend Latents/Noise (Masked)* - Use a mask to blend part of one latents tensor [including Noise outputs] into another. Can be used to "renoise" sections during a multi-stage [masked] denoising process.

|

||||

- *Enhance Image* - Boost or reduce color saturation, contrast, brightness, sharpness, or invert colors of any image at any stage with this simple wrapper for pillow [PIL]'s ImageEnhance module.

|

||||

- *Equivalent Achromatic Lightness* - Calculates image lightness accounting for Helmholtz-Kohlrausch effect based on a method described by High, Green, and Nussbaum (2023).

|

||||

- *Text to Mask (Clipseg)* - Input a prompt and an image to generate a mask representing areas of the image matched by the prompt.

|

||||

- *Text to Mask Advanced (Clipseg)* - Output up to four prompt masks combined with logical "and", logical "or", or as separate channels of an RGBA image.

|

||||

- *Image Layer Blend* - Perform a layered blend of two images using alpha compositing. Opacity of top layer is selectable, with optional mask and several different blend modes/color spaces.

|

||||

- *Image Compositor* - Take a subject from an image with a flat backdrop and layer it on another image using a chroma key or flood select background removal.

|

||||

- *Image Dilate or Erode* - Dilate or erode a mask (or any image!). This is equivalent to an expand/contract operation.
- *Image Value Thresholds* - Clip an image to pure black/white beyond specified thresholds.
- *Offset Latents* - Offset a latents tensor in the vertical and/or horizontal dimensions, wrapping it around.
- *Offset Image* - Offset an image in the vertical and/or horizontal dimensions, wrapping it around.
- *Shadows/Highlights/Midtones* - Extract three masks (with adjustable hard or soft thresholds) representing shadows, midtones, and highlights regions of an image.
- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.
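The *Offset Image* and *Offset Latents* nodes wrap content around the edges instead of padding it, which is what lets them re-center a seamless tile. A minimal sketch of that wrap-around shift on a 2D grid (a list of rows standing in for an image or one latent channel), using modular indexing; the function name is hypothetical and the sign convention (positive offsets move content right/down) is an assumption, not taken from the node pack. On arrays, `numpy.roll` and `torch.roll` perform the same operation.

```python
def offset_wrap(grid: list[list[int]], x_offset: int, y_offset: int) -> list[list[int]]:
    """Shift a 2D grid, wrapping values that fall off one edge onto the opposite edge."""
    height = len(grid)
    width = len(grid[0]) if height else 0
    if width == 0:
        return [row[:] for row in grid]
    # Output cell (r, c) takes the input cell that sits `offset` positions behind it.
    return [
        [grid[(r - y_offset) % height][(c - x_offset) % width] for c in range(width)]
        for r in range(height)
    ]


tile = [
    [1, 2, 3],
    [4, 5, 6],
]
print(offset_wrap(tile, 1, 0))  # [[3, 1, 2], [6, 4, 5]]
print(offset_wrap(tile, 0, 1))  # [[4, 5, 6], [1, 2, 3]]
```

Because nothing is lost at the edges, offsetting by the full width or height returns the original grid.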

**Node Link:** https://github.com/dwringer/composition-nodes

**Example Usage:**

**Nodes and Output Examples:**

--------------------------------
### Size Stepper Nodes

@ -332,6 +332,7 @@ class InvokeAiInstance:
Configure the InvokeAI runtime directory
"""

auto_install = False
# set sys.argv to a consistent state
new_argv = [sys.argv[0]]
for i in range(1, len(sys.argv)):

@ -340,13 +341,17 @@ class InvokeAiInstance:
new_argv.append(el)
new_argv.append(sys.argv[i + 1])
elif el in ["-y", "--yes", "--yes-to-all"]:
new_argv.append(el)
auto_install = True
sys.argv = new_argv

import messages
import requests # to catch download exceptions
from messages import introduction

introduction()
auto_install = auto_install or messages.user_wants_auto_configuration()
if auto_install:
sys.argv.append("--yes")
else:
messages.introduction()

from invokeai.frontend.install.invokeai_configure import invokeai_configure
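The `sys.argv` loop above keeps the program name, a value-taking option together with the value that follows it, and the `-y`/`--yes`/`--yes-to-all` flag (which also switches on automatic configuration), and drops everything else. The first branch's condition is outside the hunk, so this sketch takes the value-taking options as a parameter, and `--root` in the usage line is a hypothetical choice. It returns the new list instead of assigning `sys.argv`; unlike the loop in the diff, it explicitly skips the consumed value and guards against an option given without a value.

```python
YES_FLAGS = ("-y", "--yes", "--yes-to-all")


def normalize_argv(argv: list[str], value_options: tuple[str, ...]) -> tuple[list[str], bool]:
    """Return (filtered argv, auto_install), keeping argv[0], whitelisted options with values, and yes-flags."""
    new_argv = [argv[0]]
    auto_install = False
    i = 1
    while i < len(argv):
        el = argv[i]
        if el in value_options and i + 1 < len(argv):
            # Keep the option together with the value that follows it, then skip past both.
            new_argv.extend([el, argv[i + 1]])
            i += 2
            continue
        if el in YES_FLAGS:
            new_argv.append(el)
            auto_install = True
        # Any other argument is dropped.
        i += 1
    return new_argv, auto_install


argv, auto = normalize_argv(["install.py", "--root", "/tmp/invokeai", "--bogus", "-y"], ("--root",))
print(argv, auto)  # ['install.py', '--root', '/tmp/invokeai', '-y'] True
```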

@ -7,7 +7,7 @@ import os
import platform
from pathlib import Path

from prompt_toolkit import prompt
from prompt_toolkit import HTML, prompt
from prompt_toolkit.completion import PathCompleter
from prompt_toolkit.validation import Validator
from rich import box, print

@ -65,17 +65,50 @@ def confirm_install(dest: Path) -> bool:
if dest.exists():
print(f":exclamation: Directory {dest} already exists :exclamation:")
dest_confirmed = Confirm.ask(
":stop_sign: Are you sure you want to (re)install in this location?",
":stop_sign: (re)install in this location?",
default=False,
)
else:
print(f"InvokeAI will be installed in {dest}")
dest_confirmed = not Confirm.ask("Would you like to pick a different location?", default=False)
dest_confirmed = Confirm.ask("Use this location?", default=True)
console.line()

return dest_confirmed


def user_wants_auto_configuration() -> bool:
"""Prompt the user to choose between manual and auto configuration."""
console.rule("InvokeAI Configuration Section")
console.print(
Panel(
Group(
"\n".join(
[
"Libraries are installed and InvokeAI will now set up its root directory and configuration. Choose between:",
"",
" * AUTOMATIC configuration: install reasonable defaults and a minimal set of starter models.",
" * MANUAL configuration: manually inspect and adjust configuration options and pick from a larger set of starter models.",
"",
"Later you can fine tune your configuration by selecting option [6] 'Change InvokeAI startup options' from the invoke.bat/invoke.sh launcher script.",
]
),
),
box=box.MINIMAL,
padding=(1, 1),
)
)
choice = (
prompt(
HTML("Choose <b><a></b>utomatic or <b><m></b>anual configuration [a/m] (a): "),
validator=Validator.from_callable(
lambda n: n == "" or n.startswith(("a", "A", "m", "M")), error_message="Please select 'a' or 'm'"
),
)
or "a"
)
return choice.lower().startswith("a")


def dest_path(dest=None) -> Path:
"""
Prompt the user for the destination path and create the path
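`user_wants_auto_configuration()` accepts an empty answer or anything starting with `a`, `A`, `m` or `M`, substitutes `"a"` for an empty answer, and treats any answer starting with `a` (case-insensitively) as automatic. The interactive prompt makes that hard to unit-test, so this sketch pulls the validation and the decision into two hypothetical pure functions that mirror the `Validator.from_callable` lambda and the `or "a"` fallback shown above.

```python
def is_valid_choice(answer: str) -> bool:
    # Same rule as the validator lambda: empty, or starts with a/A/m/M.
    return answer == "" or answer.startswith(("a", "A", "m", "M"))


def wants_auto_configuration(answer: str) -> bool:
    if not is_valid_choice(answer):
        raise ValueError("Please select 'a' or 'm'")
    choice = answer or "a"  # an empty answer selects the default: automatic
    return choice.lower().startswith("a")


for answer in ["", "a", "Auto", "m", "Manual"]:
    print(repr(answer), wants_auto_configuration(answer))
```

With the real prompt, the validator rejects invalid input before the function ever sees it, so the `ValueError` branch here only stands in for that re-prompting.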

@ -49,7 +49,7 @@ def check_internet() -> bool:
return False


logger = InvokeAILogger.getLogger()
logger = InvokeAILogger.get_logger()


class ApiDependencies:

@ -7,6 +7,7 @@ from fastapi.routing import APIRouter
from pydantic import BaseModel, Field

from invokeai.app.invocations.upscale import ESRGAN_MODELS
from invokeai.app.services.invocation_cache.invocation_cache_common import InvocationCacheStatus
from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark
from invokeai.backend.image_util.patchmatch import PatchMatch
from invokeai.backend.image_util.safety_checker import SafetyChecker

@ -113,3 +114,33 @@ async def set_log_level(
async def clear_invocation_cache() -> None:
"""Clears the invocation cache"""
ApiDependencies.invoker.services.invocation_cache.clear()


@app_router.put(
"/invocation_cache/enable",
operation_id="enable_invocation_cache",
responses={200: {"description": "The operation was successful"}},
)
async def enable_invocation_cache() -> None:
"""Enables the invocation cache"""
ApiDependencies.invoker.services.invocation_cache.enable()


@app_router.put(
"/invocation_cache/disable",
operation_id="disable_invocation_cache",
responses={200: {"description": "The operation was successful"}},
)
async def disable_invocation_cache() -> None:
"""Disables the invocation cache"""
ApiDependencies.invoker.services.invocation_cache.disable()


@app_router.get(
"/invocation_cache/status",
operation_id="get_invocation_cache_status",
responses={200: {"model": InvocationCacheStatus}},
)
async def get_invocation_cache_status() -> InvocationCacheStatus:
"""Gets the status of the invocation cache"""
return ApiDependencies.invoker.services.invocation_cache.get_status()
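The three new routes take no request body, so any HTTP client can toggle the cache or read an `InvocationCacheStatus` (`size`, `hits`, `misses`, `enabled`, `max_size`). A standard-library sketch follows; it assumes the app router is mounted under `/api/v1/app` and that the server runs at `http://127.0.0.1:9090`, neither of which is shown in this diff, so verify both against your install. `cache_request` only builds the request; nothing is sent until you call `send`, for example `send(cache_request("status"))`.

```python
import json
import urllib.request

# Assumptions: default host/port and router prefix; adjust for your setup.
BASE_URL = "http://127.0.0.1:9090/api/v1/app"


def cache_request(action: str) -> urllib.request.Request:
    """Build the request for one of the invocation-cache routes: 'enable', 'disable' or 'status'."""
    if action in ("enable", "disable"):
        return urllib.request.Request(f"{BASE_URL}/invocation_cache/{action}", method="PUT")
    if action == "status":
        return urllib.request.Request(f"{BASE_URL}/invocation_cache/status", method="GET")
    raise ValueError(f"unknown action: {action}")


def send(request: urllib.request.Request) -> object:
    """Send a request; the status route returns a JSON object, enable/disable return JSON null."""
    with urllib.request.urlopen(request, timeout=10) as response:
        body = response.read()
    return json.loads(body) if body else None
```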

@ -146,7 +146,8 @@ async def update_model(
async def import_model(
location: str = Body(description="A model path, repo_id or URL to import"),
prediction_type: Optional[Literal["v_prediction", "epsilon", "sample"]] = Body(
description="Prediction type for SDv2 checkpoint files", default="v_prediction"
description="Prediction type for SDv2 checkpoints and rare SDv1 checkpoints",
default=None,
),
) -> ImportModelResponse:
"""Add a model using its local path, repo_id, or remote URL. Model characteristics will be probed and configured automatically"""

@ -8,7 +8,6 @@ app_config.parse_args()

if True: # hack to make flake8 happy with imports coming after setting up the config
import asyncio
import logging
import mimetypes
import socket
from inspect import signature

@ -41,7 +40,9 @@ if True: # hack to make flake8 happy with imports coming after setting up the c
import invokeai.backend.util.mps_fixes # noqa: F401 (monkeypatching on import)


logger = InvokeAILogger.getLogger(config=app_config)
app_config = InvokeAIAppConfig.get_config()
app_config.parse_args()
logger = InvokeAILogger.get_logger(config=app_config)

# fix for windows mimetypes registry entries being borked
# see https://github.com/invoke-ai/InvokeAI/discussions/3684#discussioncomment-6391352

@ -223,7 +224,7 @@ def invoke_api():
exc_info=e,
)
else:
jurigged.watch(logger=InvokeAILogger.getLogger(name="jurigged").info)
jurigged.watch(logger=InvokeAILogger.get_logger(name="jurigged").info)

port = find_port(app_config.port)
if port != app_config.port:

@ -242,7 +243,7 @@ def invoke_api():

# replace uvicorn's loggers with InvokeAI's for consistent appearance
for logname in ["uvicorn.access", "uvicorn"]:
log = logging.getLogger(logname)
log = InvokeAILogger.get_logger(logname)
log.handlers.clear()
for ch in logger.handlers:
log.addHandler(ch)
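The loop above gives uvicorn's loggers InvokeAI's look by clearing each uvicorn logger's handlers and attaching the main logger's handlers instead. A stdlib-only sketch of the same pattern, with `logging.getLogger` standing in for `InvokeAILogger.get_logger` and a hypothetical `app` logger and format in place of InvokeAI's; the `setLevel` and `propagate = False` lines are additions of this sketch (to avoid duplicate lines via the root logger), not part of the diff.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("[%(name)s] %(levelname)s: %(message)s"))

# Stand-in for InvokeAI's main logger.
logger = logging.getLogger("app")
logger.setLevel(logging.INFO)
logger.addHandler(handler)

for logname in ["uvicorn.access", "uvicorn"]:
    log = logging.getLogger(logname)
    log.handlers.clear()      # drop whatever handlers uvicorn installed
    for ch in logger.handlers:
        log.addHandler(ch)    # reuse the app's handlers, and therefore its format
    log.setLevel(logging.INFO)
    log.propagate = False     # sketch-only: keep records away from the root logger

logging.getLogger("uvicorn").info("Started server process")
print(stream.getvalue(), end="")  # [uvicorn] INFO: Started server process
```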

@ -7,8 +7,6 @@ from .services.config import InvokeAIAppConfig
# parse_args() must be called before any other imports. if it is not called first, consumers of the config
# which are imported/used before parse_args() is called will get the default config values instead of the
# values from the command line or config file.
config = InvokeAIAppConfig.get_config()
config.parse_args()

if True: # hack to make flake8 happy with imports coming after setting up the config
import argparse
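The comment above is about import-time snapshots: code that reads the shared config while its module is being imported keeps whatever values existed at that moment. A minimal sketch with a hypothetical singleton `AppConfig` (standing in for `InvokeAIAppConfig.get_config()`, with a toy `parse_args` that only understands `--port N`) shows a consumer created before `parse_args()` keeping the default while one created afterward sees the command-line value.

```python
from typing import Optional


class AppConfig:
    """Hypothetical process-wide config singleton."""

    _instance: Optional["AppConfig"] = None

    def __init__(self) -> None:
        self.port = 9090  # default value

    @classmethod
    def get_config(cls) -> "AppConfig":
        if cls._instance is None:
            cls._instance = cls()
        return cls._instance

    def parse_args(self, argv: list[str]) -> None:
        # Toy argument parsing: only "--port N" is recognized.
        if "--port" in argv:
            self.port = int(argv[argv.index("--port") + 1])


class Consumer:
    """Copies a config value when constructed, like module-level code run at import time."""

    def __init__(self) -> None:
        self.port = AppConfig.get_config().port


early = Consumer()  # "imported" before parse_args()
AppConfig.get_config().parse_args(["--port", "9191"])
late = Consumer()   # "imported" after parse_args()
print(early.port, late.port)  # 9090 9191
```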

@ -61,8 +59,9 @@ if True: # hack to make flake8 happy with imports coming after setting up the c
if torch.backends.mps.is_available():
import invokeai.backend.util.mps_fixes # noqa: F401 (monkeypatching on import)


logger = InvokeAILogger().getLogger(config=config)
config = InvokeAIAppConfig.get_config()
config.parse_args()
logger = InvokeAILogger().get_logger(config=config)


class CliCommand(BaseModel):

@ -88,6 +88,12 @@ class FieldDescriptions:
num_1 = "The first number"
num_2 = "The second number"
mask = "The mask to use for the operation"
board = "The board to save the image to"
image = "The image to process"
tile_size = "Tile size"
inclusive_low = "The inclusive low value"
exclusive_high = "The exclusive high value"
decimal_places = "The number of decimal places to round to"


class Input(str, Enum):

@ -173,6 +179,7 @@ class UIType(str, Enum):
WorkflowField = "WorkflowField"
IsIntermediate = "IsIntermediate"
MetadataField = "MetadataField"
BoardField = "BoardField"
# endregion

@ -656,6 +663,8 @@ def invocation(
:param Optional[str] title: Adds a title to the invocation. Use if the auto-generated title isn't quite right. Defaults to None.
:param Optional[list[str]] tags: Adds tags to the invocation. Invocations may be searched for by their tags. Defaults to None.
:param Optional[str] category: Adds a category to the invocation. Used to group the invocations in the UI. Defaults to None.
:param Optional[str] version: Adds a version to the invocation. Must be a valid semver string. Defaults to None.
:param Optional[bool] use_cache: Whether or not to use the invocation cache. Defaults to True. The user may override this in the workflow editor.
"""

def wrapper(cls: Type[GenericBaseInvocation]) -> Type[GenericBaseInvocation]:

@ -559,3 +559,33 @@ class SamDetectorReproducibleColors(SamDetector):
img[:, :] = ann_color
final_img.paste(Image.fromarray(img, mode="RGB"), (0, 0), Image.fromarray(np.uint8(m * 255)))
return np.array(final_img, dtype=np.uint8)


@invocation(
"color_map_image_processor",
title="Color Map Processor",
tags=["controlnet"],
category="controlnet",
version="1.0.0",
)
class ColorMapImageProcessorInvocation(ImageProcessorInvocation):
"""Generates a color map from the provided image"""

color_map_tile_size: int = InputField(default=64, ge=1, description=FieldDescriptions.tile_size)

def run_processor(self, image: Image.Image):
image = image.convert("RGB")
image = np.array(image, dtype=np.uint8)
height, width = image.shape[:2]

width_tile_size = min(self.color_map_tile_size, width)
height_tile_size = min(self.color_map_tile_size, height)

color_map = cv2.resize(
image,
(width // width_tile_size, height // height_tile_size),
interpolation=cv2.INTER_CUBIC,
)
color_map = cv2.resize(color_map, (width, height), interpolation=cv2.INTER_NEAREST)
color_map = Image.fromarray(color_map)
return color_map
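`ColorMapImageProcessorInvocation` produces a blocky color map by shrinking the image by the tile size in each dimension and scaling it back up with nearest-neighbor interpolation, so each tile becomes one flat color. The sketch below does the same on a grayscale grid in pure Python, but it swaps OpenCV's bicubic downscale for a plain block average, so values will not match `cv2.resize` exactly; the function name is hypothetical. It also makes plain why a tile size of 0 cannot work: the tile size is used as a divisor.

```python
def color_map(gray: list[list[int]], tile_size: int) -> list[list[int]]:
    """Blocky color map of a grayscale grid: average each tile, then paint the tile with its average."""
    if tile_size < 1:
        raise ValueError("tile_size must be at least 1 (it is used as a divisor)")
    height, width = len(gray), len(gray[0])
    tile_w = min(tile_size, width)
    tile_h = min(tile_size, height)
    nx, ny = width // tile_w, height // tile_h  # size of the downscaled map

    def block_of(r: int, c: int) -> tuple[int, int]:
        # The downscaled cell that nearest-neighbor upscaling reads for output pixel (r, c).
        return r * ny // height, c * nx // width

    sums = [[0] * nx for _ in range(ny)]
    counts = [[0] * nx for _ in range(ny)]
    for r in range(height):
        for c in range(width):
            br, bc = block_of(r, c)
            sums[br][bc] += gray[r][c]
            counts[br][bc] += 1
    # A box average stands in for OpenCV's bicubic downscale.
    means = [[round(sums[i][j] / counts[i][j]) for j in range(nx)] for i in range(ny)]

    result = []
    for r in range(height):
        row = []
        for c in range(width):
            br, bc = block_of(r, c)
            row.append(means[br][bc])
        result.append(row)
    return result


image = [
    [0, 10, 100, 110],
    [20, 30, 120, 130],
]
print(color_map(image, 2))  # [[15, 15, 115, 115], [15, 15, 115, 115]]
```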

@ -8,12 +8,12 @@ import numpy
from PIL import Image, ImageChops, ImageFilter, ImageOps

from invokeai.app.invocations.metadata import CoreMetadata
from invokeai.app.invocations.primitives import ColorField, ImageField, ImageOutput
from invokeai.app.invocations.primitives import BoardField, ColorField, ImageField, ImageOutput
from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark
from invokeai.backend.image_util.safety_checker import SafetyChecker

from ..models.image import ImageCategory, ResourceOrigin
from .baseinvocation import BaseInvocation, FieldDescriptions, InputField, InvocationContext, invocation
from .baseinvocation import BaseInvocation, FieldDescriptions, Input, InputField, InvocationContext, invocation


@invocation("show_image", title="Show Image", tags=["image"], category="image", version="1.0.0")

@ -972,13 +972,14 @@ class ImageChannelMultiplyInvocation(BaseInvocation):
title="Save Image",
tags=["primitives", "image"],
category="primitives",
version="1.0.0",
version="1.0.1",
use_cache=False,
)
class SaveImageInvocation(BaseInvocation):
"""Saves an image. Unlike an image primitive, this invocation stores a copy of the image."""

image: ImageField = InputField(description="The image to load")
image: ImageField = InputField(description=FieldDescriptions.image)
board: Optional[BoardField] = InputField(default=None, description=FieldDescriptions.board, input=Input.Direct)
metadata: CoreMetadata = InputField(
default=None,
description=FieldDescriptions.core_metadata,

@ -992,6 +993,7 @@ class SaveImageInvocation(BaseInvocation):
image=image,
image_origin=ResourceOrigin.INTERNAL,
image_category=ImageCategory.GENERAL,
board_id=self.board.board_id if self.board else None,
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,

@ -58,9 +58,7 @@ class IPAdapterInvocation(BaseInvocation):
# Inputs
image: ImageField = InputField(description="The IP-Adapter image prompt.")
ip_adapter_model: IPAdapterModelField = InputField(
description="The IP-Adapter model.",
title="IP-Adapter Model",
input=Input.Direct,
description="The IP-Adapter model.", title="IP-Adapter Model", input=Input.Direct, ui_order=-1
)

# weight: float = InputField(default=1.0, description="The weight of the IP-Adapter.", ui_type=UIType.Float)

@ -65,13 +65,27 @@ class DivideInvocation(BaseInvocation):
class RandomIntInvocation(BaseInvocation):
"""Outputs a single random integer."""

low: int = InputField(default=0, description="The inclusive low value")
high: int = InputField(default=np.iinfo(np.int32).max, description="The exclusive high value")
low: int = InputField(default=0, description=FieldDescriptions.inclusive_low)
high: int = InputField(default=np.iinfo(np.int32).max, description=FieldDescriptions.exclusive_high)

def invoke(self, context: InvocationContext) -> IntegerOutput:
return IntegerOutput(value=np.random.randint(self.low, self.high))


@invocation("rand_float", title="Random Float", tags=["math", "float", "random"], category="math", version="1.0.0")
class RandomFloatInvocation(BaseInvocation):
"""Outputs a single random float"""

low: float = InputField(default=0.0, description=FieldDescriptions.inclusive_low)
high: float = InputField(default=1.0, description=FieldDescriptions.exclusive_high)
decimals: int = InputField(default=2, description=FieldDescriptions.decimal_places)

def invoke(self, context: InvocationContext) -> FloatOutput:
random_float = np.random.uniform(self.low, self.high)
rounded_float = round(random_float, self.decimals)
return FloatOutput(value=rounded_float)


@invocation(
"float_to_int",
title="Float To Integer",
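`RandomFloatInvocation` draws from `np.random.uniform(low, high)`, which samples the half-open interval [low, high), and then rounds to `decimals` places. One consequence: a draw just below `high` can round up to exactly `high` (0.999 becomes 1.0 at two decimals), so the node's output lies in the closed interval [low, high] even though the `high` field is described as exclusive. This sketch uses Python's `random` module in place of NumPy to show the same behavior; the function name and seed are illustrative.

```python
import random


def random_float(low: float, high: float, decimals: int, rng: random.Random) -> float:
    # Sample [low, high), as np.random.uniform does ...
    value = low + (high - low) * rng.random()
    # ... then round, as RandomFloatInvocation does; this can land exactly on `high`.
    return round(value, decimals)


rng = random.Random(1234)
samples = [random_float(0.0, 1.0, 2, rng) for _ in range(10_000)]
print(min(samples), max(samples))
print(round(0.999, 2))  # 1.0: a draw just below `high` rounds up to `high`
```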

@ -42,7 +42,8 @@ class CoreMetadata(BaseModelExcludeNull):
cfg_scale: float = Field(description="The classifier-free guidance scale parameter")
steps: int = Field(description="The number of steps used for inference")
scheduler: str = Field(description="The scheduler used for inference")
clip_skip: int = Field(
clip_skip: Optional[int] = Field(
default=None,
description="The number of skipped CLIP layers",
)
model: MainModelField = Field(description="The main model used for inference")

@ -116,7 +117,8 @@ class MetadataAccumulatorInvocation(BaseInvocation):
cfg_scale: float = InputField(description="The classifier-free guidance scale parameter")
steps: int = InputField(description="The number of steps used for inference")
scheduler: str = InputField(description="The scheduler used for inference")
clip_skip: int = InputField(
clip_skip: Optional[int] = Field(
default=None,
description="The number of skipped CLIP layers",
)
model: MainModelField = InputField(description="The main model used for inference")

@ -226,6 +226,12 @@ class ImageField(BaseModel):
image_name: str = Field(description="The name of the image")


class BoardField(BaseModel):
"""A board primitive field"""

board_id: str = Field(description="The id of the board")


@invocation_output("image_output")
class ImageOutput(BaseInvocationOutput):
"""Base class for nodes that output a single image"""

@ -241,7 +241,7 @@ class InvokeAIAppConfig(InvokeAISettings):
version : bool = Field(default=False, description="Show InvokeAI version and exit", category="Other")

# CACHE
ram : Union[float, Literal["auto"]] = Field(default=6.0, gt=0, description="Maximum memory amount used by model cache for rapid switching (floating point number or 'auto')", category="Model Cache", )
ram : Union[float, Literal["auto"]] = Field(default=7.5, gt=0, description="Maximum memory amount used by model cache for rapid switching (floating point number or 'auto')", category="Model Cache", )
vram : Union[float, Literal["auto"]] = Field(default=0.25, ge=0, description="Amount of VRAM reserved for model storage (floating point number or 'auto')", category="Model Cache", )
lazy_offload : bool = Field(default=True, description="Keep models in VRAM until their space is needed", category="Model Cache", )

@ -277,6 +277,7 @@ class InvokeAIAppConfig(InvokeAISettings):

class Config:
validate_assignment = True
env_prefix = "INVOKEAI"

def parse_args(self, argv: Optional[list[str]] = None, conf: Optional[DictConfig] = None, clobber=False):
"""

@ -117,6 +117,10 @@ def are_connection_types_compatible(from_type: Any, to_type: Any) -> bool:
if from_type is int and to_type is float:
return True

# allow int|float -> str, pydantic will cast for us
if (from_type is int or from_type is float) and to_type is str:
return True

# if not issubclass(from_type, to_type):
if not is_union_subtype(from_type, to_type):
return False
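`are_connection_types_compatible` now applies two explicit widening rules before the general subtype check: an `int` output may feed a `float` input, and an `int` or `float` output may feed a `str` input, relying on pydantic to coerce the value. A simplified sketch covering only plain classes and `typing.Union`/`Optional` targets; `is_compatible` is hypothetical, and its union handling is a stand-in for `is_union_subtype`, whose body is not part of this diff (PEP 604 `int | None` unions, for instance, are not handled).

```python
from typing import Any, Optional, Union, get_args, get_origin


def _members(tp: Any) -> tuple:
    """Expand Union[...] / Optional[...] into member types; a plain type becomes a 1-tuple."""
    return get_args(tp) if get_origin(tp) is Union else (tp,)


def is_compatible(from_type: Any, to_type: Any) -> bool:
    if from_type is int and to_type is float:
        return True
    # allow int|float -> str; pydantic casts the value for us
    if (from_type is int or from_type is float) and to_type is str:
        return True
    # Every member of the source must be a subclass of some member of the target.
    targets = [t for t in _members(to_type) if isinstance(t, type)]
    return all(
        isinstance(f, type) and any(issubclass(f, t) for t in targets)
        for f in _members(from_type)
    )


print(is_compatible(int, float), is_compatible(float, str), is_compatible(str, int))
```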

@ -2,6 +2,7 @@ from abc import ABC, abstractmethod
from typing import Optional, Union

from invokeai.app.invocations.baseinvocation import BaseInvocation, BaseInvocationOutput
from invokeai.app.services.invocation_cache.invocation_cache_common import InvocationCacheStatus


class InvocationCacheBase(ABC):

@ -32,7 +33,7 @@ class InvocationCacheBase(ABC):

@abstractmethod
def delete(self, key: Union[int, str]) -> None:
"""Deleteds an invocation output from the cache"""
"""Deletes an invocation output from the cache"""
pass

@abstractmethod

@ -44,3 +45,18 @@ class InvocationCacheBase(ABC):
def create_key(self, invocation: BaseInvocation) -> int:
"""Gets the key for the invocation's cache item"""
pass

@abstractmethod
def disable(self) -> None:
"""Disables the cache, overriding the max cache size"""
pass

@abstractmethod
def enable(self) -> None:
"""Enables the cache, letting the max cache size take effect"""
pass

@abstractmethod
def get_status(self) -> InvocationCacheStatus:
"""Returns the status of the cache"""
pass

@ -0,0 +1,9 @@
from pydantic import BaseModel, Field


class InvocationCacheStatus(BaseModel):
size: int = Field(description="The current size of the invocation cache")
hits: int = Field(description="The number of cache hits")
misses: int = Field(description="The number of cache misses")
enabled: bool = Field(description="Whether the invocation cache is enabled")
max_size: int = Field(description="The maximum size of the invocation cache")

@ -1,81 +1,126 @@
from queue import Queue
from collections import OrderedDict
from dataclasses import dataclass, field
from threading import Lock
from typing import Optional, Union

from invokeai.app.invocations.baseinvocation import BaseInvocation, BaseInvocationOutput
from invokeai.app.services.invocation_cache.invocation_cache_base import InvocationCacheBase
from invokeai.app.services.invocation_cache.invocation_cache_common import InvocationCacheStatus
from invokeai.app.services.invoker import Invoker


@dataclass(order=True)
class CachedItem:
invocation_output: BaseInvocationOutput = field(compare=False)
invocation_output_json: str = field(compare=False)


class MemoryInvocationCache(InvocationCacheBase):
__cache: dict[Union[int, str], tuple[BaseInvocationOutput, str]]
__max_cache_size: int
__cache_ids: Queue
__invoker: Invoker
_cache: OrderedDict[Union[int, str], CachedItem]
_max_cache_size: int
_disabled: bool
_hits: int
_misses: int
_invoker: Invoker
_lock: Lock

def __init__(self, max_cache_size: int = 0) -> None:
self.__cache = dict()
self.__max_cache_size = max_cache_size
self.__cache_ids = Queue()
self._cache = OrderedDict()
self._max_cache_size = max_cache_size
self._disabled = False
self._hits = 0
self._misses = 0
self._lock = Lock()

def start(self, invoker: Invoker) -> None:
self.__invoker = invoker
if self.__max_cache_size == 0:
self._invoker = invoker
if self._max_cache_size == 0:
return
self.__invoker.services.images.on_deleted(self._delete_by_match)
self.__invoker.services.latents.on_deleted(self._delete_by_match)
self._invoker.services.images.on_deleted(self._delete_by_match)
self._invoker.services.latents.on_deleted(self._delete_by_match)

def get(self, key: Union[int, str]) -> Optional[BaseInvocationOutput]:
if self.__max_cache_size == 0:
return

item = self.__cache.get(key, None)
if item is not None:
return item[0]
with self._lock:
if self._max_cache_size == 0 or self._disabled:
return None
item = self._cache.get(key, None)
if item is not None:
self._hits += 1
self._cache.move_to_end(key)
return item.invocation_output
self._misses += 1
return None

def save(self, key: Union[int, str], invocation_output: BaseInvocationOutput) -> None:
if self.__max_cache_size == 0:
return
with self._lock:
if self._max_cache_size == 0 or self._disabled or key in self._cache:
return
# If the cache is full, we need to remove the least used

|

||||

number_to_delete = len(self._cache) + 1 - self._max_cache_size

|

||||

self._delete_oldest_access(number_to_delete)

|

||||

self._cache[key] = CachedItem(invocation_output, invocation_output.json())

|

||||

|

||||

if key not in self.__cache:

|

||||

self.__cache[key] = (invocation_output, invocation_output.json())

|

||||

self.__cache_ids.put(key)

|

||||

if self.__cache_ids.qsize() > self.__max_cache_size:

|

||||

try:

|

||||

self.__cache.pop(self.__cache_ids.get())

|

||||

except KeyError:

|

||||

# this means the cache_ids are somehow out of sync w/ the cache

|

||||

pass

|

||||

def _delete_oldest_access(self, number_to_delete: int) -> None:

|

||||

number_to_delete = min(number_to_delete, len(self._cache))

|

||||

for _ in range(number_to_delete):

|

||||

self._cache.popitem(last=False)

|

||||

|

||||

def _delete(self, key: Union[int, str]) -> None:

|

||||

if self._max_cache_size == 0:

|

||||

return

|

||||

if key in self._cache:

|

||||

del self._cache[key]

|

||||

|

||||

def delete(self, key: Union[int, str]) -> None:

|

||||

if self.__max_cache_size == 0:

|

||||

return

|

||||

|

||||

if key in self.__cache:

|

||||

del self.__cache[key]

|

||||

with self._lock:

|

||||

return self._delete(key)

|

||||

|

||||

def clear(self, *args, **kwargs) -> None:

|

||||

if self.__max_cache_size == 0:

|

||||

return

|

||||

with self._lock:

|

||||

if self._max_cache_size == 0:

|

||||

return

|

||||

self._cache.clear()

|

||||

self._misses = 0

|

||||

self._hits = 0

|

||||

|

||||

self.__cache.clear()

|

||||

self.__cache_ids = Queue()

|

||||

|

||||

def create_key(self, invocation: BaseInvocation) -> int:

|

||||

@staticmethod

|

||||

def create_key(invocation: BaseInvocation) -> int:

|

||||

return hash(invocation.json(exclude={"id"}))

|

||||

|

||||

def disable(self) -> None:

|

||||

with self._lock:

|

||||

if self._max_cache_size == 0:

|

||||

return

|

||||

self._disabled = True

|

||||

|

||||

def enable(self) -> None:

|

||||

with self._lock:

|

||||

if self._max_cache_size == 0:

|

||||

return

|

||||

self._disabled = False

|

||||

|

||||

def get_status(self) -> InvocationCacheStatus:

|

||||

with self._lock:

|

||||

return InvocationCacheStatus(

|

||||

hits=self._hits,

|

||||

misses=self._misses,

|

||||

enabled=not self._disabled and self._max_cache_size > 0,

|

||||

size=len(self._cache),

|

||||

max_size=self._max_cache_size,

|

||||

)

|

||||

|

||||

def _delete_by_match(self, to_match: str) -> None:

|

||||

if self.__max_cache_size == 0:

|

||||

return

|

||||

|

||||

keys_to_delete = set()

|

||||

for key, value_tuple in self.__cache.items():

|

||||

if to_match in value_tuple[1]:

|

||||

keys_to_delete.add(key)

|

||||

|

||||

if not keys_to_delete:

|

||||

return

|

||||

|

||||

for key in keys_to_delete:

|

||||

self.delete(key)

|

||||

|

||||

self.__invoker.services.logger.debug(f"Deleted {len(keys_to_delete)} cached invocation outputs for {to_match}")

|

||||

with self._lock:

|

||||

if self._max_cache_size == 0:

|

||||

return

|

||||

keys_to_delete = set()

|

||||

for key, cached_item in self._cache.items():

|

||||

if to_match in cached_item.invocation_output_json:

|

||||

keys_to_delete.add(key)

|

||||

if not keys_to_delete:

|

||||

return

|

||||

for key in keys_to_delete:

|

||||

self._delete(key)

|

||||

self._invoker.services.logger.debug(

|

||||

f"Deleted {len(keys_to_delete)} cached invocation outputs for {to_match}"

|

||||

)

|

||||

|

||||
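
In this hunk, `MemoryInvocationCache` drops the FIFO `Queue` of keys in favour of an `OrderedDict` guarded by a `threading.Lock`. `get()` calls `move_to_end()` on a hit, and `save()` evicts from the front with `popitem(last=False)`. Together these give least-recently-used eviction. The sketch below isolates that pattern in plain Python. It assumes nothing about InvokeAI: the `LRUCache` class and its method names are hypothetical and are not the project's API.

```python
from collections import OrderedDict
from threading import Lock
from typing import Any, Hashable, Optional


class LRUCache:
    """Minimal thread-safe LRU cache (hypothetical, illustrative only)."""

    def __init__(self, max_size: int) -> None:
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()
        self._max_size = max_size
        self._lock = Lock()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Optional[Any]:
        with self._lock:
            if self._max_size == 0:
                return None  # caching disabled, nothing is counted
            if key not in self._data:
                self.misses += 1
                return None
            self.hits += 1
            self._data.move_to_end(key)  # mark as most recently used
            return self._data[key]

    def save(self, key: Hashable, value: Any) -> None:
        with self._lock:
            if self._max_size == 0 or key in self._data:
                return  # existing keys are left untouched, as in the hunk
            # Evict from the least recently used end until one slot is free.
            while len(self._data) >= self._max_size:
                self._data.popitem(last=False)
            self._data[key] = value


cache = LRUCache(max_size=2)
cache.save("a", 1)
cache.save("b", 2)
cache.get("a")      # "a" becomes the most recently used entry
cache.save("c", 3)  # evicts "b", the least recently used entry
```

The `while` loop evicts the same number of entries as the hunk's `_delete_oldest_access(len(self._cache) + 1 - self._max_cache_size)`.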

@@ -47,20 +47,27 @@ class DefaultSessionProcessor(SessionProcessorBase):
async def _on_queue_event(self, event: FastAPIEvent) -> None:
event_name = event[1]["event"]

match event_name:
case "graph_execution_state_complete" | "invocation_error" | "session_retrieval_error" | "invocation_retrieval_error":
self.__queue_item = None
self._poll_now()
case "session_canceled" if self.__queue_item is not None and self.__queue_item.session_id == event[1][
"data"
]["graph_execution_state_id"]:
self.__queue_item = None
self._poll_now()
case "batch_enqueued":
self._poll_now()
case "queue_cleared":
self.__queue_item = None
self._poll_now()
# This was a match statement, but match is not supported on python 3.9
if event_name in [
"graph_execution_state_complete",
"invocation_error",
"session_retrieval_error",
"invocation_retrieval_error",
]:
self.__queue_item = None
self._poll_now()
elif (
event_name == "session_canceled"
and self.__queue_item is not None
and self.__queue_item.session_id == event[1]["data"]["graph_execution_state_id"]
):
self.__queue_item = None
self._poll_now()
elif event_name == "batch_enqueued":
self._poll_now()
elif event_name == "queue_cleared":
self.__queue_item = None
self._poll_now()

def resume(self) -> SessionProcessorStatus:
if not self.__resume_event.is_set():
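
Structural pattern matching (`match`/`case`) arrived in Python 3.10, so this hunk rewrites the dispatch as an `if`/`elif` chain:

- An or-pattern such as `case "a" | "b":` becomes a membership test.
- A guarded case such as `case "x" if cond:` becomes `elif name == "x" and cond:`.

A standalone sketch of the same translation follows. The function name, parameters and returned action strings are hypothetical; only the event names come from the hunk.

```python
from typing import Optional

TERMINAL_EVENTS = frozenset(
    {
        "graph_execution_state_complete",
        "invocation_error",
        "session_retrieval_error",
        "invocation_retrieval_error",
    }
)


def handle_event(event_name: str, current_session: Optional[str], event_session: str) -> str:
    """Return the action to take, using only syntax available on Python 3.9."""
    # case "a" | "b" | ...:  ->  membership test
    if event_name in TERMINAL_EVENTS:
        return "clear_and_poll"
    # case "session_canceled" if <guard>:  ->  equality test combined with the guard
    elif event_name == "session_canceled" and current_session is not None and current_session == event_session:
        return "clear_and_poll"
    elif event_name == "batch_enqueued":
        return "poll"
    elif event_name == "queue_cleared":
        return "clear_and_poll"
    return "ignore"


print(handle_event("session_canceled", "s1", "s2"))  # ignore
```

When the guard fails, control falls through to the remaining branches. That matches what happens when a `case` guard fails in the original `match` statement.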

@@ -92,30 +99,34 @@ class DefaultSessionProcessor(SessionProcessorBase):
self.__invoker.services.logger
while not stop_event.is_set():
poll_now_event.clear()
try:
# do not dequeue if there is already a session running
if self.__queue_item is None and resume_event.is_set():
queue_item = self.__invoker.services.session_queue.dequeue()

# do not dequeue if there is already a session running
if self.__queue_item is None and resume_event.is_set():
queue_item = self.__invoker.services.session_queue.dequeue()
if queue_item is not None:
self.__invoker.services.logger.debug(f"Executing queue item {queue_item.item_id}")
self.__queue_item = queue_item
self.__invoker.services.graph_execution_manager.set(queue_item.session)
self.__invoker.invoke(
session_queue_batch_id=queue_item.batch_id,
session_queue_id=queue_item.queue_id,
session_queue_item_id=queue_item.item_id,
graph_execution_state=queue_item.session,
invoke_all=True,
)
queue_item = None

if queue_item is not None:
self.__invoker.services.logger.debug(f"Executing queue item {queue_item.item_id}")
self.__queue_item = queue_item
self.__invoker.services.graph_execution_manager.set(queue_item.session)
self.__invoker.invoke(
session_queue_batch_id=queue_item.batch_id,
session_queue_id=queue_item.queue_id,
session_queue_item_id=queue_item.item_id,
graph_execution_state=queue_item.session,
invoke_all=True,
)
queue_item = None

if queue_item is None:
self.__invoker.services.logger.debug("Waiting for next polling interval or event")
if queue_item is None:
self.__invoker.services.logger.debug("Waiting for next polling interval or event")
poll_now_event.wait(POLLING_INTERVAL)
continue
except Exception as e:
self.__invoker.services.logger.error(f"Error in session processor: {e}")
poll_now_event.wait(POLLING_INTERVAL)
continue
except Exception as e:
self.__invoker.services.logger.error(f"Error in session processor: {e}")
self.__invoker.services.logger.error(f"Fatal Error in session processor: {e}")
pass
finally:
stop_event.clear()
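
This hunk moves the dequeue/invoke logic into a `try` inside the `while` loop. An exception in one iteration now logs "Error in session processor", waits one polling interval and continues. The outer handler only reports fatal errors. Below is a minimal sketch of that structure built on `threading.Event.wait(timeout)`. `run_poll_loop`, `dequeue`, `handle` and the `errors` list are hypothetical stand-ins for the session-queue, invoker and logger calls.

```python
import threading
from typing import Callable, List, Optional

POLL_INTERVAL = 0.01  # seconds; illustrative value, not InvokeAI's POLLING_INTERVAL


def run_poll_loop(
    stop: threading.Event,
    poll_now: threading.Event,
    dequeue: Callable[[], Optional[str]],
    handle: Callable[[str], None],
    errors: List[str],
) -> None:
    while not stop.is_set():
        poll_now.clear()
        try:
            item = dequeue()
            if item is not None:
                handle(item)
            else:
                # Nothing queued: sleep until the interval elapses or poll_now is set.
                poll_now.wait(POLL_INTERVAL)
        except Exception as e:
            # A failure in one iteration is recorded and the loop keeps running.
            errors.append(str(e))
            poll_now.wait(POLL_INTERVAL)


items = ["boom", "ok"]
handled: List[str] = []
errors: List[str] = []
stop = threading.Event()


def dequeue() -> Optional[str]:
    return items.pop(0) if items else None


def handle(item: str) -> None:
    if item == "boom":
        raise RuntimeError("boom")
    handled.append(item)
    stop.set()  # end the demo after the first successful item


t = threading.Thread(target=run_poll_loop, args=(stop, threading.Event(), dequeue, handle, errors))
t.start()
t.join(timeout=5)
```

Without the inner `try`, the first exception would unwind out of the `while` loop and stop processing entirely, which is the behaviour the old code had.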

@@ -162,15 +162,15 @@ class SessionQueueItemWithoutGraph(BaseModel):
session_id: str = Field(
description="The ID of the session associated with this queue item. The session doesn't exist in graph_executions until the queue item is executed."
)
field_values: Optional[list[NodeFieldValue]] = Field(
default=None, description="The field values that were used for this queue item"
)
queue_id: str = Field(description="The id of the queue with which this item is associated")
error: Optional[str] = Field(default=None, description="The error message if this queue item errored")
created_at: Union[datetime.datetime, str] = Field(description="When this queue item was created")
updated_at: Union[datetime.datetime, str] = Field(description="When this queue item was updated")
started_at: Optional[Union[datetime.datetime, str]] = Field(description="When this queue item was started")
completed_at: Optional[Union[datetime.datetime, str]] = Field(description="When this queue item was completed")
queue_id: str = Field(description="The id of the queue with which this item is associated")
field_values: Optional[list[NodeFieldValue]] = Field(
default=None, description="The field values that were used for this queue item"
)

@classmethod
def from_dict(cls, queue_item_dict: dict) -> "SessionQueueItemDTO":

@@ -59,13 +59,14 @@ class SqliteSessionQueue(SessionQueueBase):

async def _on_session_event(self, event: FastAPIEvent) -> FastAPIEvent:
event_name = event[1]["event"]
match event_name:
case "graph_execution_state_complete":
await self._handle_complete_event(event)
case "invocation_error" | "session_retrieval_error" | "invocation_retrieval_error":
await self._handle_error_event(event)
case "session_canceled":
await self._handle_cancel_event(event)

# This was a match statement, but match is not supported on python 3.9
if event_name == "graph_execution_state_complete":
await self._handle_complete_event(event)
elif event_name in ["invocation_error", "session_retrieval_error", "invocation_retrieval_error"]:
await self._handle_error_event(event)
elif event_name == "session_canceled":
await self._handle_cancel_event(event)
return event

async def _handle_complete_event(self, event: FastAPIEvent) -> None:

@@ -70,7 +70,6 @@ def get_literal_fields(field) -> list[Any]:
config = InvokeAIAppConfig.get_config()

Model_dir = "models"

Default_config_file = config.model_conf_path
SD_Configs = config.legacy_conf_path

@@ -93,7 +92,7 @@ INIT_FILE_PREAMBLE = """# InvokeAI initialization file
# or renaming it and then running invokeai-configure again.
"""

logger = InvokeAILogger.getLogger()
logger = InvokeAILogger.get_logger()


class DummyWidgetValue(Enum):

@@ -458,7 +457,7 @@ Use cursor arrows to make a checkbox selection, and space to toggle.
)
self.add_widget_intelligent(
npyscreen.TitleFixedText,
name="Model RAM cache size (GB). Make this at least large enough to hold a single full model.",
name="Model RAM cache size (GB). Make this at least large enough to hold a single full model (2GB for SD-1, 6GB for SDXL).",
begin_entry_at=0,
editable=False,
color="CONTROL",

@@ -651,8 +650,19 @@ def edit_opts(program_opts: Namespace, invokeai_opts: Namespace) -> argparse.Nam
return editApp.new_opts()


def default_ramcache() -> float:
"""Run a heuristic for the default RAM cache based on installed RAM."""

# Note that on my 64 GB machine, psutil.virtual_memory().total gives 62 GB,
# So we adjust everthing down a bit.
return (
15.0 if MAX_RAM >= 60 else 7.5 if MAX_RAM >= 30 else 4 if MAX_RAM >= 14 else 2.1
) # 2.1 is just large enough for sd 1.5 ;-)


def default_startup_options(init_file: Path) -> Namespace:
opts = InvokeAIAppConfig.get_config()
opts.ram = default_ramcache()
return opts
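
`default_ramcache()` maps total installed RAM to a default cache size with a chained conditional expression. The hunk does not show how `MAX_RAM` is computed. Its comment suggests a GB figure taken from `psutil.virtual_memory().total`. The sketch below passes the RAM size in explicitly, using a hypothetical signature, so each tier can be checked directly. It returns `4.0` rather than the original `4` so that every branch matches the declared `float` return type.

```python
def default_ramcache_for(max_ram_gb: float) -> float:
    """Same tiers as default_ramcache(), with RAM passed in explicitly (hypothetical signature)."""
    # Thresholds sit a little below the nominal sizes (presumably 64, 32 and 16 GB machines)
    # because the reported total is slightly lower than the installed amount.
    if max_ram_gb >= 60:
        return 15.0
    if max_ram_gb >= 30:
        return 7.5
    if max_ram_gb >= 14:
        return 4.0
    return 2.1  # just large enough for an SD-1.5 model, per the original comment


print(default_ramcache_for(62.0))  # 15.0
```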

@@ -894,7 +904,7 @@ def main():
if opt.full_precision:
invoke_args.extend(["--precision", "float32"])
config.parse_args(invoke_args)
logger = InvokeAILogger().getLogger(config=config)
logger = InvokeAILogger().get_logger(config=config)

errors = set()

@@ -30,7 +30,7 @@ warnings.filterwarnings("ignore")

# --------------------------globals-----------------------
config = InvokeAIAppConfig.get_config()
logger = InvokeAILogger.getLogger(name="InvokeAI")
logger = InvokeAILogger.get_logger(name="InvokeAI")

# the initial "configs" dir is now bundled in the `invokeai.configs` package
Dataset_path = Path(configs.__path__[0]) / "INITIAL_MODELS.yaml"

@@ -47,8 +47,14 @@ Config_preamble = """

LEGACY_CONFIGS = {
BaseModelType.StableDiffusion1: {
ModelVariantType.Normal: "v1-inference.yaml",
ModelVariantType.Inpaint: "v1-inpainting-inference.yaml",
ModelVariantType.Normal: {
SchedulerPredictionType.Epsilon: "v1-inference.yaml",
SchedulerPredictionType.VPrediction: "v1-inference-v.yaml",
},
ModelVariantType.Inpaint: {
SchedulerPredictionType.Epsilon: "v1-inpainting-inference.yaml",
SchedulerPredictionType.VPrediction: "v1-inpainting-inference-v.yaml",
},
},
BaseModelType.StableDiffusion2: {
ModelVariantType.Normal: {

@@ -69,14 +75,6 @@ LEGACY_CONFIGS = {
}


@dataclass
class ModelInstallList:
"""Class for listing models to be installed/removed"""

install_models: List[str] = field(default_factory=list)
remove_models: List[str] = field(default_factory=list)


@dataclass
class InstallSelections:
install_models: List[str] = field(default_factory=list)

@@ -94,6 +92,7 @@ class ModelLoadInfo:
installed: bool = False
recommended: bool = False
default: bool = False
requires: Optional[List[str]] = field(default_factory=list)


class ModelInstall(object):

@@ -131,8 +130,6 @@ class ModelInstall(object):

# supplement with entries in models.yaml
installed_models = [x for x in self.mgr.list_models()]
# suppresses autoloaded models
# installed_models = [x for x in self.mgr.list_models() if not self._is_autoloaded(x)]

for md in installed_models:
base = md["base_model"]

@@ -164,9 +161,12 @@ class ModelInstall(object):

def list_models(self, model_type):
installed = self.mgr.list_models(model_type=model_type)
print()
print(f"Installed models of type `{model_type}`:")
print(f"{'Model Key':50} Model Path")
for i in installed:
print(f"{i['model_name']}\t{i['base_model']}\t{i['path']}")
print(f"{'/'.join([i['base_model'],i['model_type'],i['model_name']]):50} {i['path']}")
print()

# logic here a little reversed to maintain backward compatibility
def starter_models(self, all_models: bool = False) -> Set[str]:

@@ -204,6 +204,8 @@ class ModelInstall(object):
job += 1

# add requested models
self._remove_installed(selections.install_models)
self._add_required_models(selections.install_models)
for path in selections.install_models:
logger.info(f"Installing {path} [{job}/{jobs}]")
try:

@@ -263,6 +265,26 @@ class ModelInstall(object):

return models_installed

def _remove_installed(self, model_list: List[str]):
all_models = self.all_models()
for path in model_list:
key = self.reverse_paths.get(path)
if key and all_models[key].installed:
logger.warning(f"{path} already installed. Skipping.")
model_list.remove(path)

def _add_required_models(self, model_list: List[str]):
additional_models = []
all_models = self.all_models()
for path in model_list:
if not (key := self.reverse_paths.get(path)):
continue
for requirement in all_models[key].requires:
requirement_key = self.reverse_paths.get(requirement)
if not all_models[requirement_key].installed:
additional_models.append(requirement)
model_list.extend(additional_models)

# install a model from a local path. The optional info parameter is there to prevent
# the model from being probed twice in the event that it has already been probed.
def _install_path(self, path: Path, info: ModelProbeInfo = None) -> AddModelResult:
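
`_remove_installed()` calls `model_list.remove(path)` while a `for` loop is iterating over `model_list`. In Python this shifts the remaining elements left, so the element immediately after each removed one is skipped. Two consecutive installed models would therefore leave the second one in the list. `_add_required_models()` also indexes `all_models[requirement_key]` even when `reverse_paths.get()` returns `None`. Assuming `all_models()` returns a plain dict, that raises `KeyError` for a requirement missing from `reverse_paths`.

The sketch below performs the same two steps without mutating the list it iterates. Like the hunk, it expands requirements one level deep. `plan_installs`, `ModelInfo`, `catalog` and `reverse_paths` are simplified, hypothetical stand-ins, not InvokeAI's types.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModelInfo:
    installed: bool = False
    requires: List[str] = field(default_factory=list)


def plan_installs(requested: List[str], reverse_paths: Dict[str, str], catalog: Dict[str, ModelInfo]) -> List[str]:
    """Drop already-installed models, then append missing requirements (hypothetical helper)."""

    def installed(path: str) -> bool:
        key = reverse_paths.get(path)
        return key is not None and catalog[key].installed

    # Build a new list instead of removing items from the list being iterated.
    plan = [p for p in requested if not installed(p)]
    for path in list(plan):  # iterate over a snapshot; appended requirements are not expanded further
        key = reverse_paths.get(path)
        if key is None:
            continue
        for req in catalog[key].requires:
            # Requirements missing from reverse_paths count as "not installed" instead of raising KeyError.
            if not installed(req) and req not in plan:
                plan.append(req)
    return plan


reverse_paths = {"repo/a": "a", "repo/b": "b", "repo/c": "c", "repo/enc": "enc"}
catalog = {
    "a": ModelInfo(installed=True),
    "b": ModelInfo(installed=True),
    "c": ModelInfo(requires=["repo/enc"]),
    "enc": ModelInfo(),
}
print(plan_installs(["repo/a", "repo/b", "repo/c"], reverse_paths, catalog))  # ['repo/c', 'repo/enc']
```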

@@ -286,7 +308,7 @@ class ModelInstall(object):
location = download_with_resume(url, Path(staging))
if not location:
logger.error(f"Unable to download {url}. Skipping.")
info = ModelProbe().heuristic_probe(location)
info = ModelProbe().heuristic_probe(location, self.prediction_helper)
dest = self.config.models_path / info.base_type.value / info.model_type.value / location.name
dest.parent.mkdir(parents=True, exist_ok=True)
models_path = shutil.move(location, dest)

@@ -393,7 +415,7 @@ class ModelInstall(object):
possible_conf = path.with_suffix(".yaml")
if possible_conf.exists():
legacy_conf = str(self.relative_to_root(possible_conf))
elif info.base_type == BaseModelType.StableDiffusion2:
elif info.base_type in [BaseModelType.StableDiffusion1, BaseModelType.StableDiffusion2]:
legacy_conf = Path(
self.config.legacy_conf_dir,
LEGACY_CONFIGS[info.base_type][info.variant_type][info.prediction_type],
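
After this change `LEGACY_CONFIGS` is keyed three levels deep: base model, then variant, then scheduler prediction type. The `elif` branch now covers SD-1 as well as SD-2, so SD-1 checkpoints also get a prediction-type-specific config file. Below is a reduced sketch of that lookup. The four SD-1 file names come from the `LEGACY_CONFIGS` hunk in this diff. The enums are simplified placeholders for InvokeAI's `BaseModelType`, `ModelVariantType` and `SchedulerPredictionType`, and `legacy_conf_for` is a hypothetical helper.

```python
from enum import Enum
from pathlib import Path


class Base(str, Enum):  # placeholder for BaseModelType
    SD1 = "sd-1"


class Variant(str, Enum):  # placeholder for ModelVariantType
    Normal = "normal"
    Inpaint = "inpaint"


class Prediction(str, Enum):  # placeholder for SchedulerPredictionType
    Epsilon = "epsilon"
    VPrediction = "v_prediction"


LEGACY = {
    Base.SD1: {
        Variant.Normal: {
            Prediction.Epsilon: "v1-inference.yaml",
            Prediction.VPrediction: "v1-inference-v.yaml",
        },
        Variant.Inpaint: {
            Prediction.Epsilon: "v1-inpainting-inference.yaml",
            Prediction.VPrediction: "v1-inpainting-inference-v.yaml",
        },
    },
}


def legacy_conf_for(conf_dir: Path, base: Base, variant: Variant, prediction: Prediction) -> Path:
    # Three-level lookup: base model -> variant -> prediction type.
    return conf_dir / LEGACY[base][variant][prediction]


print(legacy_conf_for(Path("configs"), Base.SD1, Variant.Normal, Prediction.VPrediction).name)  # v1-inference-v.yaml
```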

@@ -492,7 +514,7 @@ def yes_or_no(prompt: str, default_yes=True):

# ---------------------------------------------
def hf_download_from_pretrained(model_class: object, model_name: str, destination: Path, **kwargs):
logger = InvokeAILogger.getLogger("InvokeAI")
logger = InvokeAILogger.get_logger("InvokeAI")
logger.addFilter(lambda x: "fp16 is not a valid" not in x.getMessage())

model = model_class.from_pretrained(
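
`hf_download_from_pretrained()` suppresses a noisy message by passing a lambda to `logger.addFilter()`. Since Python 3.2 the standard `logging` module accepts any callable as a filter. The callable receives the `LogRecord`, and a false return value drops the record.

Filters attached to a logger are only consulted for records logged directly on that logger. They are not applied to records propagated up from descendant loggers. A filter meant to cover those must be attached to a handler instead.

The sketch uses only the standard library. The logger name, the `ListHandler` class and the messages are illustrative.

```python
import logging
from typing import List


class ListHandler(logging.Handler):
    """Collects messages so the effect of the filter is visible (illustrative)."""

    def __init__(self) -> None:
        super().__init__()
        self.messages: List[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.messages.append(record.getMessage())


logger = logging.getLogger("filter-demo")
logger.propagate = False
handler = ListHandler()
logger.addHandler(handler)
# Same pattern as the hunk: drop any record whose message contains the substring.
logger.addFilter(lambda record: "fp16 is not a valid" not in record.getMessage())

logger.warning("fp16 is not a valid variant")
logger.warning("download finished")
print(handler.messages)  # ['download finished']
```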

@@ -74,7 +74,7 @@ if is_accelerate_available():
from accelerate import init_empty_weights
from accelerate.utils import set_module_tensor_to_device

logger = InvokeAILogger.getLogger(__name__)
logger = InvokeAILogger.get_logger(__name__)
CONVERT_MODEL_ROOT = InvokeAIAppConfig.get_config().models_path / "core/convert"

@@ -1279,12 +1279,12 @@ def download_from_original_stable_diffusion_ckpt(
extract_ema = original_config["model"]["params"]["use_ema"]

if (
model_version == BaseModelType.StableDiffusion2
model_version in [BaseModelType.StableDiffusion2, BaseModelType.StableDiffusion1]
and original_config["model"]["params"].get("parameterization") == "v"
):
prediction_type = "v_prediction"
upcast_attention = True
image_size = 768
image_size = 768 if model_version == BaseModelType.StableDiffusion2 else 512
else:
prediction_type = "epsilon"
upcast_attention = False

@@ -90,8 +90,7 @@ class ModelProbe(object):
to place it somewhere in the models directory hierarchy. If the model is
already loaded into memory, you may provide it as model in order to avoid
opening it a second time. The prediction_type_helper callable is a function that receives
the path to the model and returns the BaseModelType. It is called to distinguish
between V2-Base and V2-768 SD models.
the path to the model and returns the SchedulerPredictionType.
"""
if model_path:
format_type = "diffusers" if model_path.is_dir() else "checkpoint"

@@ -305,25 +304,36 @@ class PipelineCheckpointProbe(CheckpointProbeBase):
else:
raise InvalidModelException("Cannot determine base type")

def get_scheduler_prediction_type(self) -> SchedulerPredictionType:
def get_scheduler_prediction_type(self) -> Optional[SchedulerPredictionType]:
"""Return model prediction type."""
# if there is a .yaml associated with this checkpoint, then we do not need
# to probe for the prediction type as it will be ignored.
if self.checkpoint_path and self.checkpoint_path.with_suffix(".yaml").exists():
return None

type = self.get_base_type()
if type == BaseModelType.StableDiffusion1:
return SchedulerPredictionType.Epsilon
checkpoint = self.checkpoint
state_dict = self.checkpoint.get("state_dict") or checkpoint
key_name = "model.diffusion_model.input_blocks.2.1.transformer_blocks.0.attn2.to_k.weight"
if key_name in state_dict and state_dict[key_name].shape[-1] == 1024:
if "global_step" in checkpoint:
if checkpoint["global_step"] == 220000:
return SchedulerPredictionType.Epsilon
elif checkpoint["global_step"] == 110000:
return SchedulerPredictionType.VPrediction
if (
self.checkpoint_path and self.helper and not self.checkpoint_path.with_suffix(".yaml").exists()
): # if a .yaml config file exists, then this step not needed
return self.helper(self.checkpoint_path)
else:
return None
if type == BaseModelType.StableDiffusion2:
checkpoint = self.checkpoint
state_dict = self.checkpoint.get("state_dict") or checkpoint
key_name = "model.diffusion_model.input_blocks.2.1.transformer_blocks.0.attn2.to_k.weight"
if key_name in state_dict and state_dict[key_name].shape[-1] == 1024:
if "global_step" in checkpoint:
if checkpoint["global_step"] == 220000:
return SchedulerPredictionType.Epsilon
elif checkpoint["global_step"] == 110000:
return SchedulerPredictionType.VPrediction
if self.helper and self.checkpoint_path:
if helper_guess := self.helper(self.checkpoint_path):
return helper_guess
return SchedulerPredictionType.VPrediction # a guess for sd2 ckpts

elif type == BaseModelType.StableDiffusion1:
if self.helper and self.checkpoint_path:
if helper_guess := self.helper(self.checkpoint_path):
return helper_guess
return SchedulerPredictionType.Epsilon # a reasonable guess for sd1 ckpts
else:
return None


class VaeCheckpointProbe(CheckpointProbeBase):
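
The rewritten `get_scheduler_prediction_type()` checks, in order:

1. If a sibling `.yaml` exists, it returns `None`.
2. For SD-2 checkpoints whose cross-attention key has width 1024, it uses a `global_step` of 220000 or 110000 to decide.
3. Otherwise it consults the optional helper before falling back to a per-base default.

A simplified sketch of that decision order follows. It works on plain values instead of a loaded checkpoint. The function name, its parameters and the string results are hypothetical stand-ins for the probe's attributes and `SchedulerPredictionType` members.

```python
from typing import Callable, Optional


def guess_prediction_type(
    base: str,
    has_yaml: bool,
    attn_width: Optional[int],
    global_step: Optional[int],
    helper: Optional[Callable[[], Optional[str]]] = None,
) -> Optional[str]:
    if has_yaml:
        return None  # the .yaml config decides, so probing is skipped
    if base == "sd-2":
        if attn_width == 1024 and global_step is not None:
            if global_step == 220000:
                return "epsilon"
            if global_step == 110000:
                return "v_prediction"
        if helper is not None and (guess := helper()):
            return guess
        return "v_prediction"  # default guess for SD-2 checkpoints
    if base == "sd-1":
        if helper is not None and (guess := helper()):
            return guess
        return "epsilon"  # default guess for SD-1 checkpoints
    return None


print(guess_prediction_type("sd-2", False, 1024, 220000))  # epsilon
```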

@@ -71,7 +71,13 @@ class ModelSearch(ABC):
if any(
[
(path / x).exists()
for x in {"config.json", "model_index.json", "learned_embeds.bin", "pytorch_lora_weights.bin"}
for x in {
"config.json",
"model_index.json",
"learned_embeds.bin",
"pytorch_lora_weights.bin",
"image_encoder.txt",
}
]
):
try:
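
`ModelSearch` treats a directory as a model folder if any of a fixed set of marker files exists inside it. This change adds `image_encoder.txt` to that set. Below is a standalone sketch of the check using `pathlib`, where `looks_like_model_dir` is a hypothetical name. It passes a generator to `any()`, so the search stops at the first marker found. The hunk builds a full list first, which gives the same result but checks every name.

```python
import tempfile
from pathlib import Path

MARKER_FILES = {
    "config.json",
    "model_index.json",
    "learned_embeds.bin",
    "pytorch_lora_weights.bin",
    "image_encoder.txt",
}


def looks_like_model_dir(path: Path) -> bool:
    # True as soon as one marker file is present in the directory.
    return any((path / name).exists() for name in MARKER_FILES)


with tempfile.TemporaryDirectory() as d:
    empty_result = looks_like_model_dir(Path(d))
    (Path(d) / "image_encoder.txt").write_text("")
    marked_result = looks_like_model_dir(Path(d))

print(empty_result, marked_result)  # False True
```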

@@ -24,7 +24,7 @@ from invokeai.backend.util.logging import InvokeAILogger
# Modified ControlNetModel with encoder_attention_mask argument added


logger = InvokeAILogger.getLogger(__name__)
logger = InvokeAILogger.get_logger(__name__)


class ControlNetModel(ModelMixin, ConfigMixin, FromOriginalControlnetMixin):

@@ -1,7 +1,6 @@
# Copyright (c) 2023 Lincoln D. Stein and The InvokeAI Development Team

"""
invokeai.backend.util.logging
"""invokeai.backend.util.logging

Logging class for InvokeAI that produces console messages

@@ -9,9 +8,9 @@ Usage:

from invokeai.backend.util.logging import InvokeAILogger

logger = InvokeAILogger.getLogger(name='InvokeAI') // Initialization
logger = InvokeAILogger.get_logger(name='InvokeAI') // Initialization
(or)
logger = InvokeAILogger.getLogger(__name__) // To use the filename
logger = InvokeAILogger.get_logger(__name__) // To use the filename
logger.configure()

logger.critical('this is critical') // Critical Message

@@ -34,13 +33,13 @@ IAILogger.debug('this is a debugging message')
## Configuration

The default configuration will print to stderr on the console. To add
additional logging handlers, call getLogger with an initialized InvokeAIAppConfig
additional logging handlers, call get_logger with an initialized InvokeAIAppConfig
object:


config = InvokeAIAppConfig.get_config()
config.parse_args()
logger = InvokeAILogger.getLogger(config=config)
logger = InvokeAILogger.get_logger(config=config)

### Three command-line options control logging:

@@ -173,6 +172,7 @@ InvokeAI:
log_level: info
log_format: color
```

"""

import logging.handlers

@@ -193,39 +193,35 @@ except ImportError:

# module level functions
def debug(msg, *args, **kwargs):
InvokeAILogger.getLogger().debug(msg, *args, **kwargs)
InvokeAILogger.get_logger().debug(msg, *args, **kwargs)


def info(msg, *args, **kwargs):
InvokeAILogger.getLogger().info(msg, *args, **kwargs)