## What type of PR is this? (check all applicable)

- [x] Feature

## Have you discussed this change with the InvokeAI team?

- [x] Yes

## Description

This PR adds support for SDXL models in the Linear UI.

### DONE

- SDXL Base Text To Image Support

- SDXL Base Image To Image Support

- SDXL Refiner Support

- SDXL Relevant UI

## [optional] Are there any post deployment tasks we need to perform?

Double-check that nothing major changed with 1.0. In any case, those changes would mostly be backend-related. If the Refiner is scrapped for 1.0 models, then we simply disable the Refiner graph.

Rolled back the earlier split of the refiner model query.

Now, when you use `useGetMainModelsQuery()`, you must provide it an array of base model types.

They are provided as constants for simplicity:

- ALL_BASE_MODELS

- NON_REFINER_BASE_MODELS

- REFINER_BASE_MODELS

I opted to pass arguments to the hook instead of wrapping it in another hook; we can tidy this up later if desired.

We can derive `isRefinerAvailable` from the query result (i.e. whether any refiner models are installed). This is a piece of server state, so by using the list models response directly, we avoid having to manually keep the client in sync with the server.

Created a `useIsRefinerAvailable()` hook to return this boolean wherever it is needed.

Also updated the main models and refiner models endpoints to return only the appropriate models, so we no longer need to filter the data returned by these endpoints.
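The hook itself lives in the TypeScript frontend, but the idea is language-neutral. The following is a minimal Python sketch, not the actual implementation. The base model strings, `ModelConfig`, `get_main_models()` and `is_refiner_available()` are hypothetical stand-ins for the constants, the list models response, `useGetMainModelsQuery()` and `useIsRefinerAvailable()`.

```python
from dataclasses import dataclass

# Hypothetical base model type strings; the real values come from the backend.
ALL_BASE_MODELS = ["sd-1", "sd-2", "sdxl", "sdxl-refiner"]
NON_REFINER_BASE_MODELS = ["sd-1", "sd-2", "sdxl"]
REFINER_BASE_MODELS = ["sdxl-refiner"]


@dataclass(frozen=True)
class ModelConfig:
    """Minimal stand-in for one entry in the list models response."""
    name: str
    base_model: str


def get_main_models(installed: list[ModelConfig], base_models: list[str]) -> list[ModelConfig]:
    """Keep only models whose base type is in `base_models`, mirroring the
    array of base model types passed to the query hook."""
    allowed = set(base_models)
    return [m for m in installed if m.base_model in allowed]


def is_refiner_available(installed: list[ModelConfig]) -> bool:
    """Derived purely from server state: true if any refiner model is installed."""
    return len(get_main_models(installed, REFINER_BASE_MODELS)) > 0
```

Because `is_refiner_available()` is computed from the list of installed models each time, there is no separate client-side flag that could drift out of sync with the server.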

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [X] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [X] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [X] No

## Description

Updated the script that closes stale issues to use the newest version of `actions/stale`.

## Related Tickets & Documents

<!--
For pull requests that relate to or close an issue, please include them below.

For example, having the text "closes #1234" would connect the current pull request to issue 1234. When we merge the pull request, GitHub will automatically close the issue.
-->

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings

<!--
Please provide steps on how to test changes, any hardware or software specifications as well as any other pertinent information.
-->

## Added/updated tests?

- [ ] Yes

- [X] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

Not sure how this script gets kicked off

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [x] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [x] No, because: This is a minor fix that I happened upon while reading.

## Have you updated all relevant documentation?

- [x] Yes

- [ ] No

## Description

Within the `mkdocs.yml` file, there's a typo where `Model Merging` is spelled as `Model Mergeing`. I also removed some unnecessary whitespace.

## Related Tickets & Documents


- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings


## Added/updated tests?

- [ ] Yes

- [x] No : Not a big enough change to require tests (unless it is)

## [optional] Are there any post deployment tasks we need to perform?

Might need to re-run the docs build with the updated yml file so the docs regenerate, but I'm hardly familiar with the codebase, so 🤷

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [x] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [x] No, because: n/a

## Have you updated all relevant documentation?

- [ ] Yes

- [x] No, n/a

## Description

Add a generation mode indicator to canvas.

- Use the existing logic to determine whether generation is txt2img, img2img, inpaint or outpaint.

- Technically `outpaint` and `inpaint` are the same, so display "Inpaint" if it's either.

- Debounce this by 1s to prevent jank.
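A rough Python sketch of the two display rules above, assuming the mode has already been computed by the existing logic. The label strings other than "Inpaint", and the `display_label()` and `Debounced` names, are hypothetical. Timestamps are passed in explicitly so the trailing debounce is deterministic, whereas the UI would use a timer.

```python
from typing import Optional

# Hypothetical labels; only the inpaint/outpaint collapse comes from the PR.
MODE_LABELS = {
    "txt2img": "Text to Image",
    "img2img": "Image to Image",
    "inpaint": "Inpaint",
    "outpaint": "Inpaint",  # technically the same as inpaint
}


def display_label(mode: str) -> str:
    """Map a generation mode to the label shown on the canvas."""
    return MODE_LABELS[mode]


class Debounced:
    """Trailing debounce: a new value is shown only after it has been
    stable for `delay` seconds (1s by default, per the PR)."""

    def __init__(self, initial: str, delay: float = 1.0) -> None:
        self.delay = delay
        self.visible = initial
        self._pending: Optional[str] = None
        self._pending_since = 0.0

    def update(self, value: str, now: float) -> None:
        # A value different from the pending one restarts the window;
        # repeats of the pending value do not.
        if value != self._pending:
            self._pending = value
            self._pending_since = now

    def read(self, now: float) -> str:
        # Promote the pending value once it has been stable long enough.
        if self._pending is not None and now - self._pending_since >= self.delay:
            self.visible = self._pending
            self._pending = None
        return self.visible
```

Because `inpaint` and `outpaint` map to the same label, switching between them does not restart the debounce window.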

I was going to disable ControlNet conditionally when the mode is inpaint, but that involves a lot of fiddly changes to the ControlNet UI components. Instead, I'm hoping we can get inpaint moved over to latents by the next release, at which point ControlNet will work.

## Related Tickets & Documents


- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings


https://github.com/invoke-ai/InvokeAI/assets/4822129/87464ae9-4136-4367-b992-e243ff0d05b4

## Added/updated tests?

- [ ] Yes

- [x] No : n/a

## [optional] Are there any post deployment tasks we need to perform?

n/a

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [x] No, n/a

## Description

When a queue item is popped for processing, we need to retrieve its session from the DB. Pydantic parses (deserializes) the graph at this stage.

It's possible for a graph to have been made invalid during the graph preparation stage (e.g. an ancestor node executes, and its output is not valid for its successor node's input field).

When this occurs, the session in the DB will fail validation, but we don't have a chance to find out until it is retrieved and parsed by pydantic.

This logic was previously not wrapped in any exception handling.

Just after retrieving a session, we retrieve the specific invocation to execute from the session. This step could also raise some sort of error, though it should be impossible for it to be a pydantic validation error (that would have been caught during session validation). There was also no exception handling here.

When either of these processes fails, the processor gets soft-locked because its cleanup logic is never run. (I didn't dig deeper into exactly what cleanup is not happening, because the fix is to just handle the exceptions.)

This PR adds exception handling to both session retrieval and node retrieval, along with an event for each: `session_retrieval_error` and `invocation_retrieval_error`.

These events are caught and displayed in the UI as toasts, along with the type of the Python exception (e.g. `ValidationError`). The events are also logged to the browser console.
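The pattern can be sketched in Python as follows. This is an illustration, not the code in `processor.py`. Only the event names `session_retrieval_error` and `invocation_retrieval_error` come from this PR. `retrieve_for_execution()`, its callable parameters and the payload keys are hypothetical. A plain `ValueError` can stand in for pydantic's `ValidationError`, so the sketch has no dependencies.

```python
import traceback
from typing import Any, Callable, Dict, Optional, Tuple

EmitFn = Callable[[str, Dict[str, Any]], None]


def retrieve_for_execution(
    session_id: str,
    node_id: str,
    get_session: Callable[[str], Any],
    get_invocation: Callable[[Any, str], Any],
    emit: EmitFn,
) -> Optional[Tuple[Any, Any]]:
    """Return (session, invocation), or None after emitting an error event.

    Hypothetical helper: returning None instead of raising lets the caller
    skip this queue item, so the processor loop keeps running.
    """
    try:
        # Parsing the stored session can fail if the graph became invalid.
        session = get_session(session_id)
    except Exception as e:
        emit("session_retrieval_error", {
            "graph_execution_state_id": session_id,
            "error_type": e.__class__.__name__,
            "error": traceback.format_exc(),
        })
        return None

    try:
        # Looking up the next node should not raise a validation error,
        # but any other failure is still reported rather than propagated.
        invocation = get_invocation(session, node_id)
    except Exception as e:
        emit("invocation_retrieval_error", {
            "graph_execution_state_id": session_id,
            "node_id": node_id,
            "error_type": e.__class__.__name__,
            "error": traceback.format_exc(),
        })
        return None

    return session, invocation
```

Returning `None` rather than letting the exception escape means the caller can skip the bad queue item and move on. That is what avoids the soft-lock.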

## Related Tickets & Documents


Closes #3860, closes #3412

## QA Instructions, Screenshots, Recordings


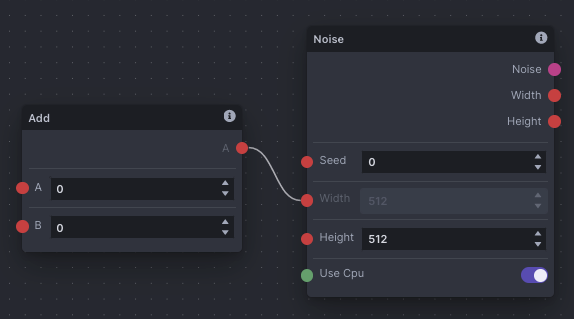

Create a valid graph that will become invalid during execution. Here's an example:

This is valid before execution, but the `width` field of the `Noise` node will end up with an invalid value (`0`). Previously, this would soft-lock the app and you'd have to restart it.

Now, with this graph, you will get an error toast, and the app will not get locked up.

## Added/updated tests?

- [x] Yes (ish)

- [ ] No

@Kyle0654 @brandonrising

It seems that because the processor runs in its own thread, `pytest` cannot catch exceptions raised in the processor.

I added a test that does work, insofar as it recreates the issue. But because the exception occurs in a separate thread, the test doesn't see it, so the test passes even without the fix.

So when running the test, we see the exception:

```py
Exception in thread invoker_processor:
Traceback (most recent call last):
  File "/usr/lib/python3.10/threading.py", line 1016, in _bootstrap_inner
    self.run()
  File "/usr/lib/python3.10/threading.py", line 953, in run
    self._target(*self._args, **self._kwargs)
  File "/home/bat/Documents/Code/InvokeAI/invokeai/app/services/processor.py", line 50, in __process
    self.__invoker.services.graph_execution_manager.get(
  File "/home/bat/Documents/Code/InvokeAI/invokeai/app/services/sqlite.py", line 79, in get
    return self._parse_item(result[0])
  File "/home/bat/Documents/Code/InvokeAI/invokeai/app/services/sqlite.py", line 52, in _parse_item
    return parse_raw_as(item_type, item)
  File "pydantic/tools.py", line 82, in pydantic.tools.parse_raw_as
  File "pydantic/tools.py", line 38, in pydantic.tools.parse_obj_as
  File "pydantic/main.py", line 341, in pydantic.main.BaseModel.__init__
```

But `pytest` doesn't actually see it as an exception. Not sure how to fix this; it's a bit beyond me.
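Here is one possible approach, offered as a sketch rather than a tested fix for this suite. Since Python 3.8, uncaught exceptions in a `threading.Thread` are passed to `threading.excepthook`, which a test can temporarily replace to record them. The helper `run_and_capture_thread_errors()` is hypothetical. It assumes the thread's target returns so that it can be joined; the real processor thread is long-running and would need to be stopped first. Recent pytest versions (6.2+) also report such exceptions as `PytestUnhandledThreadExceptionWarning`, which may be worth looking into as well.

```python
import threading
from typing import Callable, List


def run_and_capture_thread_errors(target: Callable[[], None]) -> List[BaseException]:
    """Run `target` in a worker thread and return any uncaught exceptions.

    Hypothetical test helper: it swaps in a custom `threading.excepthook`
    for the duration of the run and restores the original afterwards.
    """
    captured: List[BaseException] = []
    original_hook = threading.excepthook

    def hook(args) -> None:
        # `args` exposes exc_type, exc_value, exc_traceback and thread.
        captured.append(args.exc_value)

    threading.excepthook = hook
    try:
        worker = threading.Thread(target=target, name="invoker_processor")
        worker.start()
        worker.join()  # assumes the target eventually returns
    finally:
        threading.excepthook = original_hook
    return captured
```

A test could then `assert not run_and_capture_thread_errors(...)`, so that an exception in the thread fails the test instead of only being printed.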

## [optional] Are there any post deployment tasks we need to perform?

Nope, don't think so.