motivation: i want to be doing future prompting development work in the

`compel` lib (https://github.com/damian0815/compel) - which is currently

pip installable with `pip install compel`.

-At some point pathlib was added to the list of imported modules and

this broken the os.path code that assembled the sample data set.

-Now fixed by replacing os.path calls with Path methods

-At some point pathlib was added to the list of imported modules and this

broken the os.path code that assembled the sample data set.

-Now fixed by replacing os.path calls with Path methods

This bug is related to the format in which we stored prompts for some time: an array of weighted subprompts.

This caused some strife when recalling a prompt if the prompt had colons in it, due to our recently introduced handling of negative prompts.

Currently there is no need to store a prompt as anything other than a string, so we revert to doing that.

Compatibility with structured prompts is maintained via helper hook.

Lots of earlier embeds use a common trigger token such as * or the

hebrew letter shan. Previously, the textual inversion manager would

refuse to load the second and subsequent embeddings that used a

previously-claimed trigger. Now, when this case is encountered, the

trigger token is replaced by <filename> and the user is informed of the

fact.

In theory, this reduces peak memory consumption by doing the conditioned

and un-conditioned predictions one after the other instead of in a

single mini-batch.

In practice, it doesn't reduce the reported "Max VRAM used for this

generation" for me, even without xformers. (But it does slow things down

by a good 18%.)

That suggests to me that the peak memory usage is during VAE decoding,

not the diffusion unet, but ymmv. It does [improve things for gogurt's

16 GB

M1](https://github.com/invoke-ai/InvokeAI/pull/2732#issuecomment-1436187407),

so it seems worthwhile.

To try it out, use the `--sequential_guidance` option:

2dded68267/ldm/invoke/args.py (L487-L492)

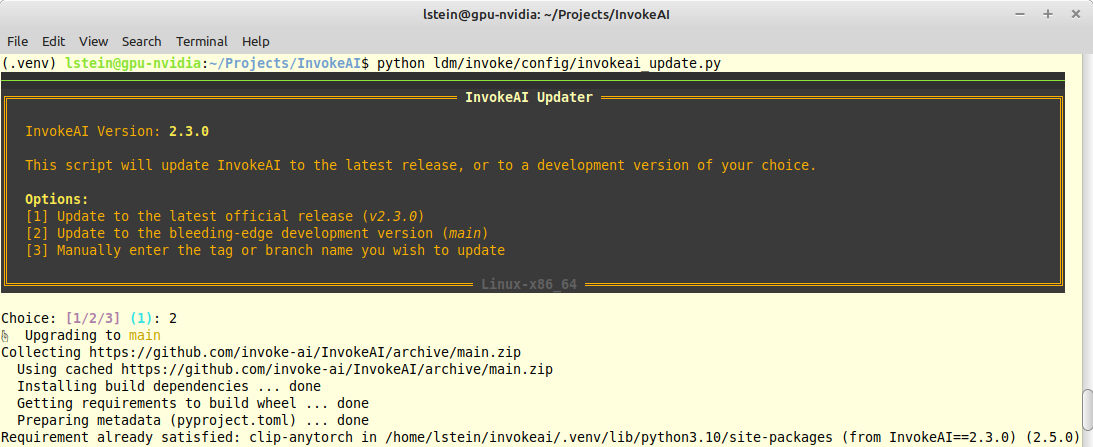

- Adds an update action to launcher script

- This action calls new python script `invokeai-update`, which prompts

user to update to latest release version, main development version, or

an arbitrary git tag or branch name.

- It then uses `pip` to update to whatever tag was specified.

The user interface (such as it is) looks like this:

- The TI script was looping over all files in the training image

directory, regardless of whether they were image files or not. This PR

adds a check for image file extensions.

-

- Closes#2715

- Fixes longstanding bug in the token vector size code which caused .pt

files to be assigned the wrong token vector length. These were then

tossed out during directory scanning.

- Fixes longstanding bug in the token vector size code which caused

.pt files to be assigned the wrong token vector length. These

were then tossed out during directory scanning.