mirror of

https://github.com/invoke-ai/InvokeAI

synced 2024-08-30 20:32:17 +00:00

Compare commits

2 Commits

feat/batch

...

feat/cleau

| Author | SHA1 | Date |
|---|---|---|
| | edc8f5fb6f | |
| | 6bb657b3f3 | |

36

.github/CODEOWNERS

vendored


@ -1,34 +1,34 @@

|

||||

# continuous integration

|

||||

/.github/workflows/ @lstein @blessedcoolant @hipsterusername

|

||||

/.github/workflows/ @lstein @blessedcoolant

|

||||

|

||||

# documentation

|

||||

/docs/ @lstein @blessedcoolant @hipsterusername @Millu

|

||||

/mkdocs.yml @lstein @blessedcoolant @hipsterusername @Millu

|

||||

/mkdocs.yml @lstein @blessedcoolant

|

||||

|

||||

# nodes

|

||||

/invokeai/app/ @Kyle0654 @blessedcoolant @psychedelicious @brandonrising @hipsterusername

|

||||

/invokeai/app/ @Kyle0654 @blessedcoolant @psychedelicious @brandonrising

|

||||

|

||||

# installation and configuration

|

||||

/pyproject.toml @lstein @blessedcoolant @hipsterusername

|

||||

/docker/ @lstein @blessedcoolant @hipsterusername

|

||||

/scripts/ @ebr @lstein @hipsterusername

|

||||

/installer/ @lstein @ebr @hipsterusername

|

||||

/invokeai/assets @lstein @ebr @hipsterusername

|

||||

/invokeai/configs @lstein @hipsterusername

|

||||

/invokeai/version @lstein @blessedcoolant @hipsterusername

|

||||

/pyproject.toml @lstein @blessedcoolant

|

||||

/docker/ @lstein @blessedcoolant

|

||||

/scripts/ @ebr @lstein

|

||||

/installer/ @lstein @ebr

|

||||

/invokeai/assets @lstein @ebr

|

||||

/invokeai/configs @lstein

|

||||

/invokeai/version @lstein @blessedcoolant

|

||||

|

||||

# web ui

|

||||

/invokeai/frontend @blessedcoolant @psychedelicious @lstein @maryhipp @hipsterusername

|

||||

/invokeai/backend @blessedcoolant @psychedelicious @lstein @maryhipp @hipsterusername

|

||||

/invokeai/frontend @blessedcoolant @psychedelicious @lstein @maryhipp

|

||||

/invokeai/backend @blessedcoolant @psychedelicious @lstein @maryhipp

|

||||

|

||||

# generation, model management, postprocessing

|

||||

/invokeai/backend @damian0815 @lstein @blessedcoolant @gregghelt2 @StAlKeR7779 @brandonrising @ryanjdick @hipsterusername

|

||||

/invokeai/backend @damian0815 @lstein @blessedcoolant @gregghelt2 @StAlKeR7779 @brandonrising @ryanjdick

|

||||

|

||||

# front ends

|

||||

/invokeai/frontend/CLI @lstein @hipsterusername

|

||||

/invokeai/frontend/install @lstein @ebr @hipsterusername

|

||||

/invokeai/frontend/merge @lstein @blessedcoolant @hipsterusername

|

||||

/invokeai/frontend/training @lstein @blessedcoolant @hipsterusername

|

||||

/invokeai/frontend/web @psychedelicious @blessedcoolant @maryhipp @hipsterusername

|

||||

/invokeai/frontend/CLI @lstein

|

||||

/invokeai/frontend/install @lstein @ebr

|

||||

/invokeai/frontend/merge @lstein @blessedcoolant

|

||||

/invokeai/frontend/training @lstein @blessedcoolant

|

||||

/invokeai/frontend/web @psychedelicious @blessedcoolant @maryhipp

|

||||

|

||||

|

||||

|

||||

@ -244,12 +244,8 @@ copy-paste the template above.

|

||||

We can use the `@invocation` decorator to provide some additional info to the

|

||||

UI, like a custom title, tags and category.

|

||||

|

||||

We also encourage providing a version. This must be a

|

||||

[semver](https://semver.org/) version string ("$MAJOR.$MINOR.$PATCH"). The UI

|

||||

will let users know if their workflow is using a mismatched version of the node.
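As a rough illustration of the "$MAJOR.$MINOR.$PATCH" format described above, the sketch below checks a version string and compares two versions. It is not InvokeAI's own validation: `parse_version` and `versions_match` are hypothetical helper names, and the pattern deliberately ignores the pre-release and build-metadata suffixes that the full semver grammar allows.

```python
import re

# Simplified core of the semver grammar: three dot-separated integers
# without leading zeros. Pre-release/build suffixes are NOT handled here.
_SEMVER_CORE = re.compile(r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)")


def parse_version(version: str) -> tuple[int, int, int]:
    """Split a MAJOR.MINOR.PATCH string into integers (hypothetical helper)."""
    match = _SEMVER_CORE.fullmatch(version)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {version!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def versions_match(node_version: str, workflow_version: str) -> bool:
    """Return True when both strings name the same version."""
    return parse_version(node_version) == parse_version(workflow_version)
```

A caller could use `versions_match("1.0.0", saved_version)` to decide whether to warn about a mismatched node, which is the kind of check the paragraph above attributes to the UI.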

|

||||

|

||||

```python

|

||||

@invocation("resize", title="My Resizer", tags=["resize", "image"], category="My Invocations", version="1.0.0")

|

||||

@invocation("resize", title="My Resizer", tags=["resize", "image"], category="My Invocations")

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

"""Resizes an image"""

|

||||

|

||||

@ -283,6 +279,8 @@ take a look a at our [contributing nodes overview](contributingNodes).

|

||||

|

||||

## Advanced

|

||||

|

||||

-->

|

||||

|

||||

### Custom Output Types

|

||||

|

||||

Like with custom inputs, sometimes you might find yourself needing custom

|

||||

|

||||

@ -22,26 +22,12 @@ To use a community node graph, download the the `.json` node graph file and load

|

||||

|

||||

|

||||

|

||||

--------------------------------

|

||||

### Ideal Size

|

||||

|

||||

**Description:** This node calculates an ideal image size for a first pass of a multi-pass upscaling. The aim is to avoid duplication that results from choosing a size larger than the model is capable of.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/ideal-size-node

|

||||

|

||||

--------------------------------

|

||||

### Film Grain

|

||||

|

||||

**Description:** This node adds a film grain effect to the input image based on the weights, seeds, and blur radii parameters. It works with RGB input images only.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/film-grain-node

|

||||

|

||||

--------------------------------

|

||||

### Image Picker

|

||||

|

||||

**Description:** This InvokeAI node takes in a collection of images and randomly chooses one. This can be useful when you have a number of poses to choose from for a ControlNet node, or a number of input images for another purpose.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/image-picker-node

|

||||

|

||||

--------------------------------

|

||||

### Retroize

|

||||

@ -109,91 +95,6 @@ a Text-Generation-Webui instance (might work remotely too, but I never tried it)

|

||||

|

||||

This node works best with SDXL models, especially as the style can be described independently of the LLM's output.

|

||||

|

||||

--------------------------------

|

||||

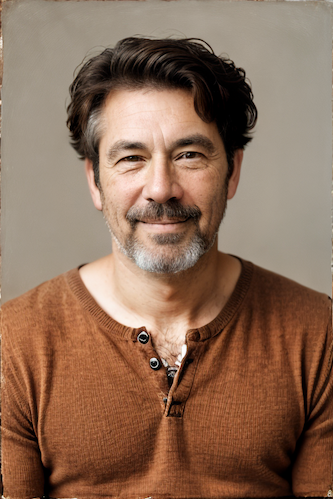

### Depth Map from Wavefront OBJ

|

||||

|

||||

**Description:** Render depth maps from Wavefront .obj files (triangulated) using this simple 3D renderer utilizing numpy and matplotlib to compute and color the scene. There are simple parameters to change the FOV, camera position, and model orientation.

|

||||

|

||||

To be imported, an .obj must use triangulated meshes, so make sure to enable that option if exporting from a 3D modeling program. This renderer makes each triangle a solid color based on its average depth, so it will cause anomalies if your .obj has large triangles. In Blender, the Remesh modifier can be helpful to subdivide a mesh into small pieces that work well given these limitations.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/depth-from-obj-node

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

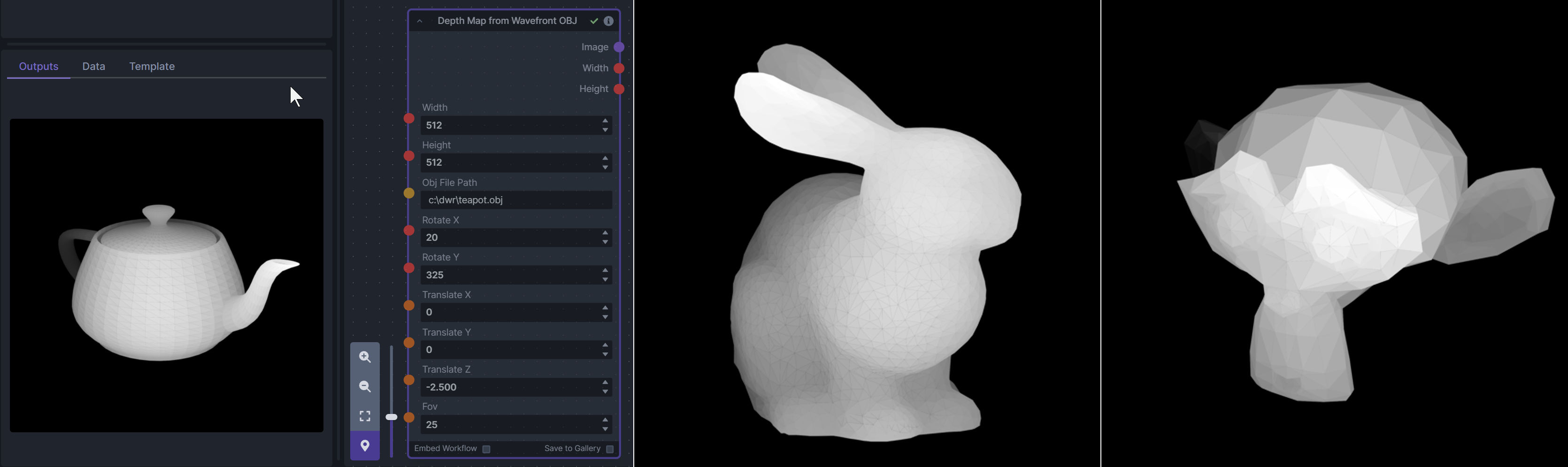

### Enhance Image (simple adjustments)

|

||||

|

||||

**Description:** Boost or reduce color saturation, contrast, brightness, sharpness, or invert colors of any image at any stage with this simple wrapper for pillow [PIL]'s ImageEnhance module.

|

||||

|

||||

Color inversion is toggled with a simple switch, while each of the four enhancer modes are activated by entering a value other than 1 in each corresponding input field. Values less than 1 will reduce the corresponding property, while values greater than 1 will enhance it.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/image-enhance-node

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

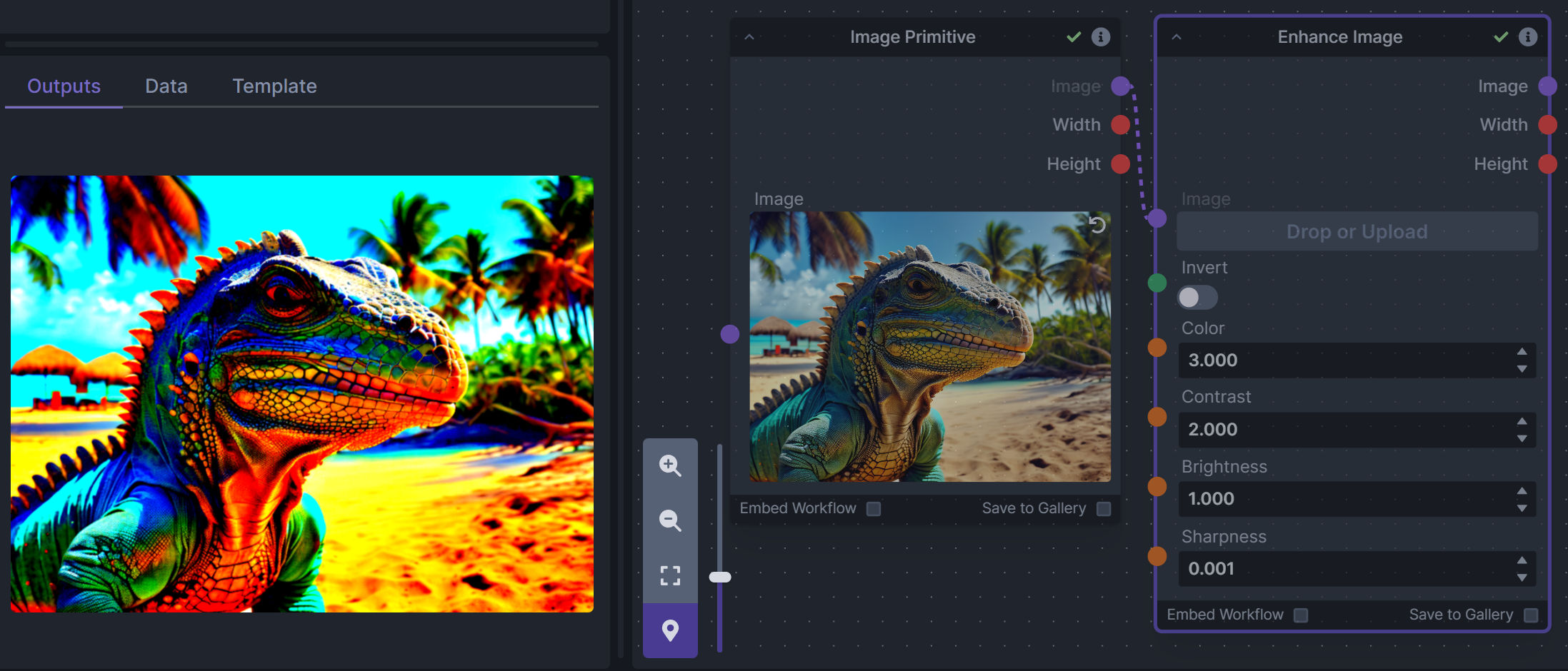

### Generative Grammar-Based Prompt Nodes

|

||||

|

||||

**Description:** This set of 3 nodes generates prompts from simple user-defined grammar rules (loaded from custom files - examples provided below). The prompts are made by recursively expanding a special template string, replacing nonterminal "parts-of-speech" until no more nonterminal terms remain in the string.

|

||||

|

||||

This includes 3 Nodes:

|

||||

- *Lookup Table from File* - loads the "prompt" section of a YAML file (or of every YAML file in a folder) into a JSON-ified dictionary (Lookups output)

|

||||

- *Lookups Entry from Prompt* - places a single entry in a new Lookups output under the specified heading

|

||||

- *Prompt from Lookup Table* - uses a Collection of Lookups as grammar rules from which to randomly generate prompts.
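The recursive expansion described above can be sketched in a few lines. This is only an illustration of the general technique, not the nodes' actual implementation: the `{heading}` placeholder syntax, the `LOOKUPS` table, and the `expand` function are all assumptions made for the example.

```python
import random
import re

# Hypothetical rule table: each heading maps to candidate replacements,
# which may themselves contain further {nonterminal} placeholders.
LOOKUPS = {
    "template": ["a {adjective} {subject}"],
    "adjective": ["misty", "sunlit"],
    "subject": ["forest", "{adjective} harbor"],
}

_NONTERMINAL = re.compile(r"\{(\w+)\}")


def expand(template, lookups, rng, max_passes=20):
    """Replace placeholders pass by pass until none remain."""
    for _ in range(max_passes):
        if _NONTERMINAL.search(template) is None:
            return template
        # Each placeholder is swapped for a random entry under its heading.
        template = _NONTERMINAL.sub(
            lambda m: rng.choice(lookups[m.group(1)]), template
        )
    if _NONTERMINAL.search(template) is None:
        return template
    raise ValueError("grammar did not terminate within max_passes")


prompt = expand("{template}", LOOKUPS, random.Random(0))
```

The pass limit guards against grammars that refer to themselves without ever terminating. A placeholder with no matching heading raises `KeyError` in this sketch.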

|

||||

|

||||

**Node Link:** https://github.com/dwringer/generative-grammar-prompt-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

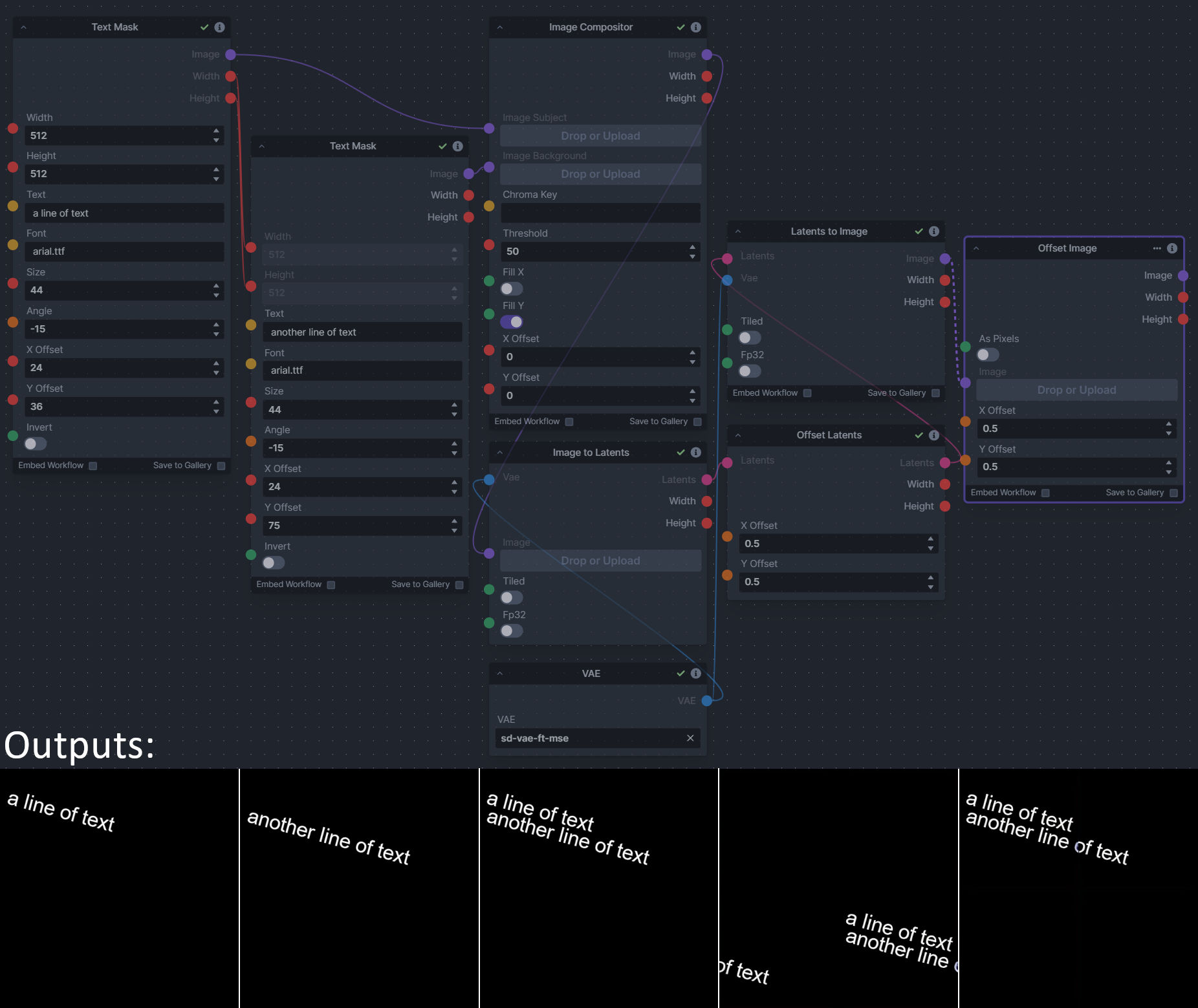

### Image and Mask Composition Pack

|

||||

|

||||

**Description:** This is a pack of nodes for composing masks and images, including a simple text mask creator and both image and latent offset nodes. The offsets wrap around, so these can be used in conjunction with the Seamless node to progressively generate centered on different parts of the seamless tiling.

|

||||

|

||||

This includes 4 Nodes:

|

||||

- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.

|

||||

- *Image Compositor* - Take a subject from an image with a flat backdrop and layer it on another image using a chroma key or flood select background removal.

|

||||

- *Offset Latents* - Offset a latents tensor in the vertical and/or horizontal dimensions, wrapping it around.

|

||||

- *Offset Image* - Offset an image in the vertical and/or horizontal dimensions, wrapping it around.
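The wrap-around behaviour of the two offset nodes can be illustrated on a plain 2D grid. This is a minimal sketch of the idea using nested lists, not code from the node pack; `offset_wrapped` is a hypothetical name, and a real implementation would presumably operate on image or tensor data instead.

```python
def offset_wrapped(grid, dx, dy):
    """Shift a 2D grid right by dx and down by dy, wrapping at the edges."""
    height = len(grid)
    width = len(grid[0])
    return [
        # Each output cell reads from the source cell dx columns to its left
        # and dy rows above it; the modulo wraps indices around the borders.
        [grid[(y - dy) % height][(x - dx) % width] for x in range(width)]
        for y in range(height)
    ]


grid = [
    [1, 2, 3],
    [4, 5, 6],
]
shifted = offset_wrapped(grid, dx=1, dy=0)
```

Because nothing is discarded at the borders, shifting by the full width or height returns the original grid, which is why the offsets pair well with seamless tiling.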

|

||||

|

||||

**Node Link:** https://github.com/dwringer/composition-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

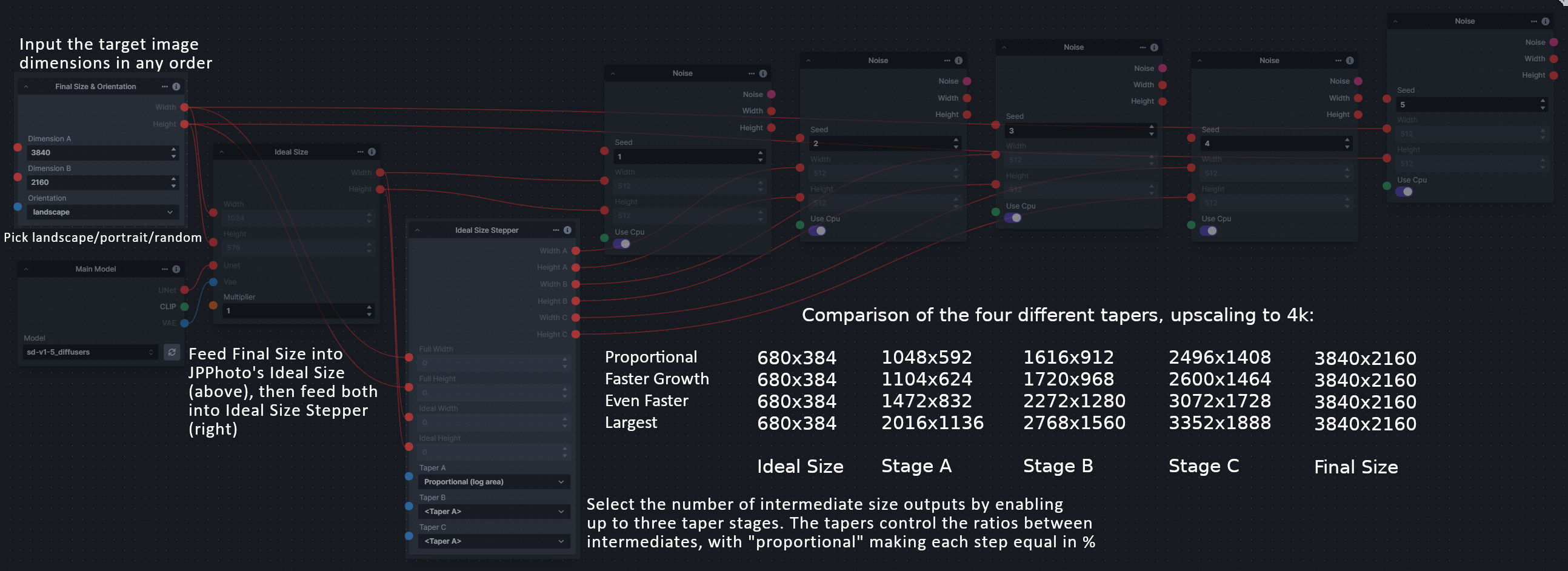

### Size Stepper Nodes

|

||||

|

||||

**Description:** This is a set of nodes for calculating the necessary size increments for doing upscaling workflows. Use the *Final Size & Orientation* node to enter your full size dimensions and orientation (portrait/landscape/random), then plug that and your initial generation dimensions into the *Ideal Size Stepper* and get 1, 2, or 3 intermediate pairs of dimensions for upscaling. Note this does not output the initial size or full size dimensions: the 1, 2, or 3 outputs of this node are only the intermediate sizes.

|

||||

|

||||

A third node is included, *Random Switch (Integers)*, which is just a generic version of Final Size with no orientation selection.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/size-stepper-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Text font to Image

|

||||

|

||||

**Description:** A node for InvokeAI that renders text in a chosen font to an image. It downloads the font to use, or reuses it if it is already in the font cache. The text is always resized to the image size, which can be controlled with padding, and a second line of text is optional.

|

||||

|

||||

**Node Link:** https://github.com/mickr777/textfontimage

|

||||

|

||||

**Output Examples**

|

||||

|

||||

|

||||

|

||||

Results after using the depth controlnet

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Example Node Template

|

||||

|

||||

@ -35,13 +35,13 @@ The table below contains a list of the default nodes shipped with InvokeAI and t

|

||||

|Inverse Lerp Image | Inverse linear interpolation of all pixels of an image|

|

||||

|Image Primitive | An image primitive value|

|

||||

|Lerp Image | Linear interpolation of all pixels of an image|

|

||||

|Offset Image Channel | Add to or subtract from an image color channel by a uniform value.|

|

||||

|Multiply Image Channel | Multiply or Invert an image color channel by a scalar value.|

|

||||

|Image Luminosity Adjustment | Adjusts the Luminosity (Value) of an image.|

|

||||

|Multiply Images | Multiplies two images together using `PIL.ImageChops.multiply()`.|

|

||||

|Blur NSFW Image | Add blur to NSFW-flagged images|

|

||||

|Paste Image | Pastes an image into another image.|

|

||||

|ImageProcessor | Base class for invocations that preprocess images for ControlNet|

|

||||

|Resize Image | Resizes an image to specific dimensions|

|

||||

|Image Saturation Adjustment | Adjusts the Saturation of an image.|

|

||||

|Scale Image | Scales an image by a factor|

|

||||

|Image to Latents | Encodes an image into latents.|

|

||||

|Add Invisible Watermark | Add an invisible watermark to an image|

|

||||

|

||||

@ -1,7 +1,6 @@

|

||||

# Copyright (c) 2022 Kyle Schouviller (https://github.com/kyle0654)

|

||||

|

||||

from logging import Logger

|

||||

import sqlite3

|

||||

from invokeai.app.services.board_image_record_storage import (

|

||||

SqliteBoardImageRecordStorage,

|

||||

)

|

||||

@ -29,8 +28,6 @@ from ..services.invoker import Invoker

|

||||

from ..services.processor import DefaultInvocationProcessor

|

||||

from ..services.sqlite import SqliteItemStorage

|

||||

from ..services.model_manager_service import ModelManagerService

|

||||

from ..services.batch_manager import BatchManager

|

||||

from ..services.batch_manager_storage import SqliteBatchProcessStorage

|

||||

from ..services.invocation_stats import InvocationStatsService

|

||||

from .events import FastAPIEventService

|

||||

|

||||

@ -74,18 +71,18 @@ class ApiDependencies:

|

||||

db_path.parent.mkdir(parents=True, exist_ok=True)

|

||||

db_location = str(db_path)

|

||||

|

||||

db_conn = sqlite3.connect(db_location, check_same_thread=False) # TODO: figure out a better threading solution

|

||||

|

||||

graph_execution_manager = SqliteItemStorage[GraphExecutionState](conn=db_conn, table_name="graph_executions")

|

||||

graph_execution_manager = SqliteItemStorage[GraphExecutionState](

|

||||

filename=db_location, table_name="graph_executions"

|

||||

)

|

||||

|

||||

urls = LocalUrlService()

|

||||

image_record_storage = SqliteImageRecordStorage(conn=db_conn)

|

||||

image_record_storage = SqliteImageRecordStorage(db_location)

|

||||

image_file_storage = DiskImageFileStorage(f"{output_folder}/images")

|

||||

names = SimpleNameService()

|

||||

latents = ForwardCacheLatentsStorage(DiskLatentsStorage(f"{output_folder}/latents"))

|

||||

|

||||

board_record_storage = SqliteBoardRecordStorage(conn=db_conn)

|

||||

board_image_record_storage = SqliteBoardImageRecordStorage(conn=db_conn)

|

||||

board_record_storage = SqliteBoardRecordStorage(db_location)

|

||||

board_image_record_storage = SqliteBoardImageRecordStorage(db_location)

|

||||

|

||||

boards = BoardService(

|

||||

services=BoardServiceDependencies(

|

||||

@ -119,19 +116,15 @@ class ApiDependencies:

|

||||

)

|

||||

)

|

||||

|

||||

batch_manager_storage = SqliteBatchProcessStorage(conn=db_conn)

|

||||

batch_manager = BatchManager(batch_manager_storage)

|

||||

|

||||

services = InvocationServices(

|

||||

model_manager=ModelManagerService(config, logger),

|

||||

events=events,

|

||||

latents=latents,

|

||||

images=images,

|

||||

batch_manager=batch_manager,

|

||||

boards=boards,

|

||||

board_images=board_images,

|

||||

queue=MemoryInvocationQueue(),

|

||||

graph_library=SqliteItemStorage[LibraryGraph](conn=db_conn, table_name="graphs"),

|

||||

graph_library=SqliteItemStorage[LibraryGraph](filename=db_location, table_name="graphs"),

|

||||

graph_execution_manager=graph_execution_manager,

|

||||

processor=DefaultInvocationProcessor(),

|

||||

configuration=config,

|

||||

|

||||

@ -1,19 +1,19 @@

|

||||

import typing

|

||||

from enum import Enum

|

||||

from pathlib import Path

|

||||

|

||||

from fastapi import Body

|

||||

from fastapi.routing import APIRouter

|

||||

from pathlib import Path

|

||||

from pydantic import BaseModel, Field

|

||||

|

||||

from invokeai.app.invocations.upscale import ESRGAN_MODELS

|

||||

from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark

|

||||

from invokeai.backend.image_util.patchmatch import PatchMatch

|

||||

from invokeai.backend.image_util.safety_checker import SafetyChecker

|

||||

from invokeai.backend.util.logging import logging

|

||||

from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark

|

||||

from invokeai.app.invocations.upscale import ESRGAN_MODELS

|

||||

|

||||

from invokeai.version import __version__

|

||||

|

||||

from ..dependencies import ApiDependencies

|

||||

from invokeai.backend.util.logging import logging

|

||||

|

||||

|

||||

class LogLevel(int, Enum):

|

||||

@ -55,7 +55,7 @@ async def get_version() -> AppVersion:

|

||||

|

||||

@app_router.get("/config", operation_id="get_config", status_code=200, response_model=AppConfig)

|

||||

async def get_config() -> AppConfig:

|

||||

infill_methods = ["tile", "lama", "cv2"]

|

||||

infill_methods = ["tile", "lama"]

|

||||

if PatchMatch.patchmatch_available():

|

||||

infill_methods.append("patchmatch")

|

||||

|

||||

|

||||

@ -1,106 +0,0 @@

|

||||

# Copyright (c) 2022 Kyle Schouviller (https://github.com/kyle0654)

|

||||

|

||||

from fastapi import Body, HTTPException, Path, Response

|

||||

from fastapi.routing import APIRouter

|

||||

|

||||

from invokeai.app.services.batch_manager_storage import BatchSession, BatchSessionNotFoundException

|

||||

|

||||

# Importing * is bad karma but needed here for node detection

|

||||

from ...invocations import * # noqa: F401 F403

|

||||

from ...services.batch_manager import Batch, BatchProcessResponse

|

||||

from ...services.graph import Graph

|

||||

from ..dependencies import ApiDependencies

|

||||

|

||||

batches_router = APIRouter(prefix="/v1/batches", tags=["sessions"])

|

||||

|

||||

|

||||

@batches_router.post(

|

||||

"/",

|

||||

operation_id="create_batch",

|

||||

responses={

|

||||

200: {"model": BatchProcessResponse},

|

||||

400: {"description": "Invalid json"},

|

||||

},

|

||||

)

|

||||

async def create_batch(

|

||||

graph: Graph = Body(description="The graph to initialize the session with"),

|

||||

batch: Batch = Body(description="Batch config to apply to the given graph"),

|

||||

) -> BatchProcessResponse:

|

||||

"""Creates a batch process"""

|

||||

return ApiDependencies.invoker.services.batch_manager.create_batch_process(batch, graph)

|

||||

|

||||

|

||||

@batches_router.put(

|

||||

"/b/{batch_process_id}/invoke",

|

||||

operation_id="start_batch",

|

||||

responses={

|

||||

202: {"description": "Batch process started"},

|

||||

404: {"description": "Batch session not found"},

|

||||

},

|

||||

)

|

||||

async def start_batch(

|

||||

batch_process_id: str = Path(description="ID of Batch to start"),

|

||||

) -> Response:

|

||||

"""Executes a batch process"""

|

||||

try:

|

||||

ApiDependencies.invoker.services.batch_manager.run_batch_process(batch_process_id)

|

||||

return Response(status_code=202)

|

||||

except BatchSessionNotFoundException:

|

||||

raise HTTPException(status_code=404, detail="Batch session not found")

|

||||

|

||||

|

||||

@batches_router.delete(

|

||||

"/b/{batch_process_id}",

|

||||

operation_id="cancel_batch",

|

||||

responses={202: {"description": "The batch is canceled"}},

|

||||

)

|

||||

async def cancel_batch(

|

||||

batch_process_id: str = Path(description="The id of the batch process to cancel"),

|

||||

) -> Response:

|

||||

"""Cancels a batch process"""

|

||||

ApiDependencies.invoker.services.batch_manager.cancel_batch_process(batch_process_id)

|

||||

return Response(status_code=202)

|

||||

|

||||

|

||||

@batches_router.get(

|

||||

"/incomplete",

|

||||

operation_id="list_incomplete_batches",

|

||||

responses={200: {"model": list[BatchProcessResponse]}},

|

||||

)

|

||||

async def list_incomplete_batches() -> list[BatchProcessResponse]:

|

||||

"""Lists incomplete batch processes"""

|

||||

return ApiDependencies.invoker.services.batch_manager.get_incomplete_batch_processes()

|

||||

|

||||

|

||||

@batches_router.get(

|

||||

"/",

|

||||

operation_id="list_batches",

|

||||

responses={200: {"model": list[BatchProcessResponse]}},

|

||||

)

|

||||

async def list_batches() -> list[BatchProcessResponse]:

|

||||

"""Lists all batch processes"""

|

||||

return ApiDependencies.invoker.services.batch_manager.get_batch_processes()

|

||||

|

||||

|

||||

@batches_router.get(

|

||||

"/b/{batch_process_id}",

|

||||

operation_id="get_batch",

|

||||

responses={200: {"model": BatchProcessResponse}},

|

||||

)

|

||||

async def get_batch(

|

||||

batch_process_id: str = Path(description="The id of the batch process to get"),

|

||||

) -> BatchProcessResponse:

|

||||

"""Gets a Batch Process"""

|

||||

return ApiDependencies.invoker.services.batch_manager.get_batch(batch_process_id)

|

||||

|

||||

|

||||

@batches_router.get(

|

||||

"/b/{batch_process_id}/sessions",

|

||||

operation_id="get_batch_sessions",

|

||||

responses={200: {"model": list[BatchSession]}},

|

||||

)

|

||||

async def get_batch_sessions(

|

||||

batch_process_id: str = Path(description="The id of the batch process to get"),

|

||||

) -> list[BatchSession]:

|

||||

"""Gets a list of batch sessions for a given batch process"""

|

||||

return ApiDependencies.invoker.services.batch_manager.get_sessions(batch_process_id)

|

||||

@ -9,7 +9,13 @@ from pydantic.fields import Field

|

||||

# Importing * is bad karma but needed here for node detection

|

||||

from ...invocations import * # noqa: F401 F403

|

||||

from ...invocations.baseinvocation import BaseInvocation

|

||||

from ...services.graph import Edge, EdgeConnection, Graph, GraphExecutionState, NodeAlreadyExecutedError

|

||||

from ...services.graph import (

|

||||

Edge,

|

||||

EdgeConnection,

|

||||

Graph,

|

||||

GraphExecutionState,

|

||||

NodeAlreadyExecutedError,

|

||||

)

|

||||

from ...services.item_storage import PaginatedResults

|

||||

from ..dependencies import ApiDependencies

|

||||

|

||||

|

||||

@ -13,15 +13,11 @@ class SocketIO:

|

||||

|

||||

def __init__(self, app: FastAPI):

|

||||

self.__sio = SocketManager(app=app)

|

||||

self.__sio.on("subscribe", handler=self._handle_sub)

|

||||

self.__sio.on("unsubscribe", handler=self._handle_unsub)

|

||||

|

||||

self.__sio.on("subscribe_session", handler=self._handle_sub_session)

|

||||

self.__sio.on("unsubscribe_session", handler=self._handle_unsub_session)

|

||||

local_handler.register(event_name=EventServiceBase.session_event, _func=self._handle_session_event)

|

||||

|

||||

self.__sio.on("subscribe_batch", handler=self._handle_sub_batch)

|

||||

self.__sio.on("unsubscribe_batch", handler=self._handle_unsub_batch)

|

||||

local_handler.register(event_name=EventServiceBase.batch_event, _func=self._handle_batch_event)

|

||||

|

||||

async def _handle_session_event(self, event: Event):

|

||||

await self.__sio.emit(

|

||||

event=event[1]["event"],

|

||||

@ -29,25 +25,12 @@ class SocketIO:

|

||||

room=event[1]["data"]["graph_execution_state_id"],

|

||||

)

|

||||

|

||||

async def _handle_sub_session(self, sid, data, *args, **kwargs):

|

||||

async def _handle_sub(self, sid, data, *args, **kwargs):

|

||||

if "session" in data:

|

||||

self.__sio.enter_room(sid, data["session"])

|

||||

|

||||

async def _handle_unsub_session(self, sid, data, *args, **kwargs):

|

||||

# @app.sio.on('unsubscribe')

|

||||

|

||||

async def _handle_unsub(self, sid, data, *args, **kwargs):

|

||||

if "session" in data:

|

||||

self.__sio.leave_room(sid, data["session"])

|

||||

|

||||

async def _handle_batch_event(self, event: Event):

|

||||

await self.__sio.emit(

|

||||

event=event[1]["event"],

|

||||

data=event[1]["data"],

|

||||

room=event[1]["data"]["batch_id"],

|

||||

)

|

||||

|

||||

async def _handle_sub_batch(self, sid, data, *args, **kwargs):

|

||||

if "batch_id" in data:

|

||||

self.__sio.enter_room(sid, data["batch_id"])

|

||||

|

||||

async def _handle_unsub_batch(self, sid, data, *args, **kwargs):

|

||||

if "batch_id" in data:

|

||||

self.__sio.leave_room(sid, data["batch_id"])

|

||||

|

||||

@ -24,7 +24,7 @@ import invokeai.frontend.web as web_dir

|

||||

import mimetypes

|

||||

|

||||

from .api.dependencies import ApiDependencies

|

||||

from .api.routers import sessions, batches, models, images, boards, board_images, app_info

|

||||

from .api.routers import sessions, models, images, boards, board_images, app_info

|

||||

from .api.sockets import SocketIO

|

||||

from .invocations.baseinvocation import BaseInvocation, _InputField, _OutputField, UIConfigBase

|

||||

|

||||

@ -90,8 +90,6 @@ async def shutdown_event():

|

||||

|

||||

app.include_router(sessions.session_router, prefix="/api")

|

||||

|

||||

app.include_router(batches.batches_router, prefix="/api")

|

||||

|

||||

app.include_router(models.models_router, prefix="/api")

|

||||

|

||||

app.include_router(images.images_router, prefix="/api")

|

||||

|

||||

@ -5,7 +5,6 @@ import re

|

||||

import shlex

|

||||

import sys

|

||||

import time

|

||||

import sqlite3

|

||||

from typing import Union, get_type_hints, Optional

|

||||

|

||||

from pydantic import BaseModel, ValidationError

|

||||

@ -30,8 +29,6 @@ from invokeai.app.services.image_record_storage import SqliteImageRecordStorage

|

||||

from invokeai.app.services.images import ImageService, ImageServiceDependencies

|

||||

from invokeai.app.services.resource_name import SimpleNameService

|

||||

from invokeai.app.services.urls import LocalUrlService

|

||||

from invokeai.app.services.batch_manager import BatchManager

|

||||

from invokeai.app.services.batch_manager_storage import SqliteBatchProcessStorage

|

||||

from invokeai.app.services.invocation_stats import InvocationStatsService

|

||||

from .services.default_graphs import default_text_to_image_graph_id, create_system_graphs

|

||||

from .services.latent_storage import DiskLatentsStorage, ForwardCacheLatentsStorage

|

||||

@ -255,18 +252,19 @@ def invoke_cli():

|

||||

db_location = config.db_path

|

||||

db_location.parent.mkdir(parents=True, exist_ok=True)

|

||||

|

||||

db_conn = sqlite3.connect(db_location, check_same_thread=False) # TODO: figure out a better threading solution

|

||||

logger.info(f'InvokeAI database location is "{db_location}"')

|

||||

|

||||

graph_execution_manager = SqliteItemStorage[GraphExecutionState](conn=db_conn, table_name="graph_executions")

|

||||

graph_execution_manager = SqliteItemStorage[GraphExecutionState](

|

||||

filename=db_location, table_name="graph_executions"

|

||||

)

|

||||

|

||||

urls = LocalUrlService()

|

||||

image_record_storage = SqliteImageRecordStorage(conn=db_conn)

|

||||

image_record_storage = SqliteImageRecordStorage(db_location)

|

||||

image_file_storage = DiskImageFileStorage(f"{output_folder}/images")

|

||||

names = SimpleNameService()

|

||||

|

||||

board_record_storage = SqliteBoardRecordStorage(conn=db_conn)

|

||||

board_image_record_storage = SqliteBoardImageRecordStorage(conn=db_conn)

|

||||

board_record_storage = SqliteBoardRecordStorage(db_location)

|

||||

board_image_record_storage = SqliteBoardImageRecordStorage(db_location)

|

||||

|

||||

boards = BoardService(

|

||||

services=BoardServiceDependencies(

|

||||

@ -300,19 +298,15 @@ def invoke_cli():

|

||||

)

|

||||

)

|

||||

|

||||

batch_manager_storage = SqliteBatchProcessStorage(conn=db_conn)

|

||||

batch_manager = BatchManager(batch_manager_storage)

|

||||

|

||||

services = InvocationServices(

|

||||

model_manager=model_manager,

|

||||

events=events,

|

||||

latents=ForwardCacheLatentsStorage(DiskLatentsStorage(f"{output_folder}/latents")),

|

||||

images=images,

|

||||

boards=boards,

|

||||

batch_manager=batch_manager,

|

||||

board_images=board_images,

|

||||

queue=MemoryInvocationQueue(),

|

||||

graph_library=SqliteItemStorage[LibraryGraph](conn=db_conn, table_name="graphs"),

|

||||

graph_library=SqliteItemStorage[LibraryGraph](filename=db_location, table_name="graphs"),

|

||||

graph_execution_manager=graph_execution_manager,

|

||||

processor=DefaultInvocationProcessor(),

|

||||

performance_statistics=InvocationStatsService(graph_execution_manager),

|

||||

|

||||

@ -26,16 +26,11 @@ from typing import (

|

||||

from pydantic import BaseModel, Field, validator

|

||||

from pydantic.fields import Undefined, ModelField

|

||||

from pydantic.typing import NoArgAnyCallable

|

||||

import semver

|

||||

|

||||

if TYPE_CHECKING:

|

||||

from ..services.invocation_services import InvocationServices

|

||||

|

||||

|

||||

class InvalidVersionError(ValueError):

|

||||

pass

|

||||

|

||||

|

||||

class FieldDescriptions:

|

||||

denoising_start = "When to start denoising, expressed as a percentage of total steps"

|

||||

denoising_end = "When to stop denoising, expressed as a percentage of total steps"

|

||||

@ -110,39 +105,24 @@ class UIType(str, Enum):

|

||||

"""

|

||||

|

||||

# region Primitives

|

||||

Integer = "integer"

|

||||

Float = "float"

|

||||

Boolean = "boolean"

|

||||

Color = "ColorField"

|

||||

String = "string"

|

||||

Array = "array"

|

||||

Image = "ImageField"

|

||||

Latents = "LatentsField"

|

||||

Conditioning = "ConditioningField"

|

||||

Control = "ControlField"

|

||||

Float = "float"

|

||||

Image = "ImageField"

|

||||

Integer = "integer"

|

||||

Latents = "LatentsField"

|

||||

String = "string"

|

||||

# endregion

|

||||

|

||||

# region Collection Primitives

|

||||

BooleanCollection = "BooleanCollection"

|

||||

ColorCollection = "ColorCollection"

|

||||

ConditioningCollection = "ConditioningCollection"

|

||||

ControlCollection = "ControlCollection"

|

||||

FloatCollection = "FloatCollection"

|

||||

Color = "ColorField"

|

||||

ImageCollection = "ImageCollection"

|

||||

IntegerCollection = "IntegerCollection"

|

||||

ConditioningCollection = "ConditioningCollection"

|

||||

ColorCollection = "ColorCollection"

|

||||

LatentsCollection = "LatentsCollection"

|

||||

IntegerCollection = "IntegerCollection"

|

||||

FloatCollection = "FloatCollection"

|

||||

StringCollection = "StringCollection"

|

||||

# endregion

|

||||

|

||||

# region Polymorphic Primitives

|

||||

BooleanPolymorphic = "BooleanPolymorphic"

|

||||

ColorPolymorphic = "ColorPolymorphic"

|

||||

ConditioningPolymorphic = "ConditioningPolymorphic"

|

||||

ControlPolymorphic = "ControlPolymorphic"

|

||||

FloatPolymorphic = "FloatPolymorphic"

|

||||

ImagePolymorphic = "ImagePolymorphic"

|

||||

IntegerPolymorphic = "IntegerPolymorphic"

|

||||

LatentsPolymorphic = "LatentsPolymorphic"

|

||||

StringPolymorphic = "StringPolymorphic"

|

||||

BooleanCollection = "BooleanCollection"

|

||||

# endregion

|

||||

|

||||

# region Models

|

||||

@ -196,7 +176,6 @@ class _InputField(BaseModel):

|

||||

ui_type: Optional[UIType]

|

||||

ui_component: Optional[UIComponent]

|

||||

ui_order: Optional[int]

|

||||

item_default: Optional[Any]

|

||||

|

||||

|

||||

class _OutputField(BaseModel):

|

||||

@ -244,7 +223,6 @@ def InputField(

|

||||

ui_component: Optional[UIComponent] = None,

|

||||

ui_hidden: bool = False,

|

||||

ui_order: Optional[int] = None,

|

||||

item_default: Optional[Any] = None,

|

||||

**kwargs: Any,

|

||||

) -> Any:

|

||||

"""

|

||||

@ -271,11 +249,6 @@ def InputField(

|

||||

For this case, you could provide `UIComponent.Textarea`.

|

||||

|

||||

: param bool ui_hidden: [False] Specifies whether or not this field should be hidden in the UI.

|

||||

|

||||

: param int ui_order: [None] Specifies the order in which this field should be rendered in the UI. \

|

||||

|

||||

: param bool item_default: [None] Specifies the default item value, if this is a collection input. \

|

||||

Ignored for non-collection fields.

|

||||

"""

|

||||

return Field(

|

||||

*args,

|

||||

@ -309,7 +282,6 @@ def InputField(

|

||||

ui_component=ui_component,

|

||||

ui_hidden=ui_hidden,

|

||||

ui_order=ui_order,

|

||||

item_default=item_default,

|

||||

**kwargs,

|

||||

)

|

||||

|

||||

@ -360,8 +332,6 @@ def OutputField(

|

||||

`UIType.SDXLMainModelField` to indicate that the field is an SDXL main model field.

|

||||

|

||||

: param bool ui_hidden: [False] Specifies whether or not this field should be hidden in the UI. \

|

||||

|

||||

: param int ui_order: [None] Specifies the order in which this field should be rendered in the UI. \

|

||||

"""

|

||||

return Field(

|

||||

*args,

|

||||

@ -406,9 +376,6 @@ class UIConfigBase(BaseModel):

|

||||

tags: Optional[list[str]] = Field(default_factory=None, description="The node's tags")

|

||||

title: Optional[str] = Field(default=None, description="The node's display name")

|

||||

category: Optional[str] = Field(default=None, description="The node's category")

|

||||

version: Optional[str] = Field(

|

||||

default=None, description='The node\'s version. Should be a valid semver string e.g. "1.0.0" or "3.8.13".'

|

||||

)

|

||||

|

||||

|

||||

class InvocationContext:

|

||||

@ -507,8 +474,6 @@ class BaseInvocation(ABC, BaseModel):

|

||||

schema["tags"] = uiconfig.tags

|

||||

if uiconfig and hasattr(uiconfig, "category"):

|

||||

schema["category"] = uiconfig.category

|

||||

if uiconfig and hasattr(uiconfig, "version"):

|

||||

schema["version"] = uiconfig.version

|

||||

if "required" not in schema or not isinstance(schema["required"], list):

|

||||

schema["required"] = list()

|

||||

schema["required"].extend(["type", "id"])

|

||||

@ -577,11 +542,7 @@ GenericBaseInvocation = TypeVar("GenericBaseInvocation", bound=BaseInvocation)

|

||||

|

||||

|

||||

def invocation(

|

||||

invocation_type: str,

|

||||

title: Optional[str] = None,

|

||||

tags: Optional[list[str]] = None,

|

||||

category: Optional[str] = None,

|

||||

version: Optional[str] = None,

|

||||

invocation_type: str, title: Optional[str] = None, tags: Optional[list[str]] = None, category: Optional[str] = None

|

||||

) -> Callable[[Type[GenericBaseInvocation]], Type[GenericBaseInvocation]]:

|

||||

"""

|

||||

Adds metadata to an invocation.

|

||||

@ -608,12 +569,6 @@ def invocation(

|

||||

cls.UIConfig.tags = tags

|

||||

if category is not None:

|

||||

cls.UIConfig.category = category

|

||||

if version is not None:

|

||||

try:

|

||||

semver.Version.parse(version)

|

||||

except ValueError as e:

|

||||

raise InvalidVersionError(f'Invalid version string for node "{invocation_type}": "{version}"') from e

|

||||

cls.UIConfig.version = version

|

||||

|

||||

# Add the invocation type to the pydantic model of the invocation

|

||||

invocation_type_annotation = Literal[invocation_type] # type: ignore

|

||||
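The hunk above guards `cls.UIConfig.version` with `semver.Version.parse` and re-raises failures as `InvalidVersionError`. The following is only a rough, standard-library sketch of that validate-then-assign pattern. It swaps the `semver` package for a simplified regular expression, so it accepts plain `MAJOR.MINOR.PATCH` strings only (no pre-release or build suffixes). The helper name `check_version` and the `ValueError` base class chosen for `InvalidVersionError` are assumptions made for illustration, not taken from the diff.

```python
import re
from typing import Optional


class InvalidVersionError(ValueError):
    """Stand-in for the exception raised in the diff (base class is an assumption)."""


# Simplified MAJOR.MINOR.PATCH pattern; NOT a full semver grammar.
_SIMPLE_SEMVER = re.compile(r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)")


def check_version(invocation_type: str, version: Optional[str]) -> Optional[str]:
    """Return the version unchanged if it looks valid, or None if it was omitted.

    Hypothetical helper: mirrors the `if version is not None:` branch of the hunk.
    """
    if version is None:
        return None
    if _SIMPLE_SEMVER.fullmatch(version) is None:
        raise InvalidVersionError(
            f'Invalid version string for node "{invocation_type}": "{version}"'
        )
    return version
```

Under these assumptions, `check_version("range", "1.0.0")` returns the string unchanged, while `check_version("range", "1.0")` raises `InvalidVersionError`, so a malformed version would be rejected when the decorator runs rather than later.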

@ -625,9 +580,8 @@ def invocation(

|

||||

config=cls.__config__,

|

||||

)

|

||||

cls.__fields__.update({"type": invocation_type_field})

|

||||

# to support 3.9, 3.10 and 3.11, as described in https://docs.python.org/3/howto/annotations.html

|

||||

if annotations := cls.__dict__.get("__annotations__", None):

|

||||

annotations.update({"type": invocation_type_annotation})

|

||||

cls.__annotations__.update({"type": invocation_type_annotation})

|

||||

|

||||

return cls

|

||||

|

||||

return wrapper

|

||||

@ -661,10 +615,7 @@ def invocation_output(

|

||||

config=cls.__config__,

|

||||

)

|

||||

cls.__fields__.update({"type": output_type_field})

|

||||

|

||||

# to support 3.9, 3.10 and 3.11, as described in https://docs.python.org/3/howto/annotations.html

|

||||

if annotations := cls.__dict__.get("__annotations__", None):

|

||||

annotations.update({"type": output_type_annotation})

|

||||

cls.__annotations__.update({"type": output_type_annotation})

|

||||

|

||||

return cls

|

||||

|

||||

|

||||

@ -10,9 +10,7 @@ from invokeai.app.util.misc import SEED_MAX, get_random_seed

|

||||

from .baseinvocation import BaseInvocation, InputField, InvocationContext, invocation

|

||||

|

||||

|

||||

@invocation(

|

||||

"range", title="Integer Range", tags=["collection", "integer", "range"], category="collections", version="1.0.0"

|

||||

)

|

||||

@invocation("range", title="Integer Range", tags=["collection", "integer", "range"], category="collections")

|

||||

class RangeInvocation(BaseInvocation):

|

||||

"""Creates a range of numbers from start to stop with step"""

|

||||

|

||||

@ -35,7 +33,6 @@ class RangeInvocation(BaseInvocation):

|

||||

title="Integer Range of Size",

|

||||

tags=["collection", "integer", "size", "range"],

|

||||

category="collections",

|

||||

version="1.0.0",

|

||||

)

|

||||

class RangeOfSizeInvocation(BaseInvocation):

|

||||

"""Creates a range from start to start + size with step"""

|

||||

@ -53,7 +50,6 @@ class RangeOfSizeInvocation(BaseInvocation):

|

||||

title="Random Range",

|

||||

tags=["range", "integer", "random", "collection"],

|

||||

category="collections",

|

||||

version="1.0.0",

|

||||

)

|

||||

class RandomRangeInvocation(BaseInvocation):

|

||||

"""Creates a collection of random numbers"""

|

||||

|

||||

@ -44,7 +44,7 @@ class ConditioningFieldData:

|

||||

# PerpNeg = "perp_neg"

|

||||

|

||||

|

||||

@invocation("compel", title="Prompt", tags=["prompt", "compel"], category="conditioning", version="1.0.0")

|

||||

@invocation("compel", title="Prompt", tags=["prompt", "compel"], category="conditioning")

|

||||

class CompelInvocation(BaseInvocation):

|

||||

"""Parse prompt using compel package to conditioning."""

|

||||

|

||||

@ -267,7 +267,6 @@ class SDXLPromptInvocationBase:

|

||||

title="SDXL Prompt",

|

||||

tags=["sdxl", "compel", "prompt"],

|

||||

category="conditioning",

|

||||

version="1.0.0",

|

||||

)

|

||||

class SDXLCompelPromptInvocation(BaseInvocation, SDXLPromptInvocationBase):

|

||||

"""Parse prompt using compel package to conditioning."""

|

||||

@ -280,8 +279,8 @@ class SDXLCompelPromptInvocation(BaseInvocation, SDXLPromptInvocationBase):

|

||||

crop_left: int = InputField(default=0, description="")

|

||||

target_width: int = InputField(default=1024, description="")

|

||||

target_height: int = InputField(default=1024, description="")

|

||||

clip: ClipField = InputField(description=FieldDescriptions.clip, input=Input.Connection, title="CLIP 1")

|

||||

clip2: ClipField = InputField(description=FieldDescriptions.clip, input=Input.Connection, title="CLIP 2")

|

||||

clip: ClipField = InputField(description=FieldDescriptions.clip, input=Input.Connection)

|

||||

clip2: ClipField = InputField(description=FieldDescriptions.clip, input=Input.Connection)

|

||||

|

||||

@torch.no_grad()

|

||||

def invoke(self, context: InvocationContext) -> ConditioningOutput:

|

||||

@ -352,7 +351,6 @@ class SDXLCompelPromptInvocation(BaseInvocation, SDXLPromptInvocationBase):

|

||||

title="SDXL Refiner Prompt",

|

||||

tags=["sdxl", "compel", "prompt"],

|

||||

category="conditioning",

|

||||

version="1.0.0",

|

||||

)

|

||||

class SDXLRefinerCompelPromptInvocation(BaseInvocation, SDXLPromptInvocationBase):

|

||||

"""Parse prompt using compel package to conditioning."""

|

||||

@ -405,7 +403,7 @@ class ClipSkipInvocationOutput(BaseInvocationOutput):

|

||||

clip: ClipField = OutputField(default=None, description=FieldDescriptions.clip, title="CLIP")

|

||||

|

||||

|

||||

@invocation("clip_skip", title="CLIP Skip", tags=["clipskip", "clip", "skip"], category="conditioning", version="1.0.0")

|

||||

@invocation("clip_skip", title="CLIP Skip", tags=["clipskip", "clip", "skip"], category="conditioning")

|

||||

class ClipSkipInvocation(BaseInvocation):

|

||||

"""Skip layers in clip text_encoder model."""

|

||||

|

||||

|

||||

@ -95,12 +95,14 @@ class ControlOutput(BaseInvocationOutput):

|

||||

control: ControlField = OutputField(description=FieldDescriptions.control)

|

||||

|

||||

|

||||

@invocation("controlnet", title="ControlNet", tags=["controlnet"], category="controlnet", version="1.0.0")

|

||||

@invocation("controlnet", title="ControlNet", tags=["controlnet"], category="controlnet")

|

||||

class ControlNetInvocation(BaseInvocation):

|

||||

"""Collects ControlNet info to pass to other nodes"""

|

||||

|

||||

image: ImageField = InputField(description="The control image")

|

||||

control_model: ControlNetModelField = InputField(description=FieldDescriptions.controlnet_model, input=Input.Direct)

|

||||

control_model: ControlNetModelField = InputField(

|

||||

default="lllyasviel/sd-controlnet-canny", description=FieldDescriptions.controlnet_model, input=Input.Direct

|

||||

)

|

||||

control_weight: Union[float, List[float]] = InputField(

|

||||

default=1.0, description="The weight given to the ControlNet", ui_type=UIType.Float

|

||||

)

|

||||

@ -127,9 +129,7 @@ class ControlNetInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation(

|

||||

"image_processor", title="Base Image Processor", tags=["controlnet"], category="controlnet", version="1.0.0"

|

||||

)

|

||||

@invocation("image_processor", title="Base Image Processor", tags=["controlnet"], category="controlnet")

|

||||

class ImageProcessorInvocation(BaseInvocation):

|

||||

"""Base class for invocations that preprocess images for ControlNet"""

|

||||

|

||||

@ -173,7 +173,6 @@ class ImageProcessorInvocation(BaseInvocation):

|

||||

title="Canny Processor",

|

||||

tags=["controlnet", "canny"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class CannyImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Canny edge detection for ControlNet"""

|

||||

@ -196,7 +195,6 @@ class CannyImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="HED (softedge) Processor",

|

||||

tags=["controlnet", "hed", "softedge"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class HedImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies HED edge detection to image"""

|

||||

@ -225,7 +223,6 @@ class HedImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Lineart Processor",

|

||||

tags=["controlnet", "lineart"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class LineartImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies line art processing to image"""

|

||||

@ -247,7 +244,6 @@ class LineartImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Lineart Anime Processor",

|

||||

tags=["controlnet", "lineart", "anime"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class LineartAnimeImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies line art anime processing to image"""

|

||||

@ -270,7 +266,6 @@ class LineartAnimeImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Openpose Processor",

|

||||

tags=["controlnet", "openpose", "pose"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class OpenposeImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies Openpose processing to image"""

|

||||

@ -295,7 +290,6 @@ class OpenposeImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Midas Depth Processor",

|

||||

tags=["controlnet", "midas"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class MidasDepthImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies Midas depth processing to image"""

|

||||

@ -322,7 +316,6 @@ class MidasDepthImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Normal BAE Processor",

|

||||

tags=["controlnet"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class NormalbaeImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies NormalBae processing to image"""

|

||||

@ -338,9 +331,7 @@ class NormalbaeImageProcessorInvocation(ImageProcessorInvocation):

|

||||

return processed_image

|

||||

|

||||

|

||||

@invocation(

|

||||

"mlsd_image_processor", title="MLSD Processor", tags=["controlnet", "mlsd"], category="controlnet", version="1.0.0"

|

||||

)

|

||||

@invocation("mlsd_image_processor", title="MLSD Processor", tags=["controlnet", "mlsd"], category="controlnet")

|

||||

class MlsdImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies MLSD processing to image"""

|

||||

|

||||

@ -361,9 +352,7 @@ class MlsdImageProcessorInvocation(ImageProcessorInvocation):

|

||||

return processed_image

|

||||

|

||||

|

||||

@invocation(

|

||||

"pidi_image_processor", title="PIDI Processor", tags=["controlnet", "pidi"], category="controlnet", version="1.0.0"

|

||||

)

|

||||

@invocation("pidi_image_processor", title="PIDI Processor", tags=["controlnet", "pidi"], category="controlnet")

|

||||

class PidiImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies PIDI processing to image"""

|

||||

|

||||

@ -389,7 +378,6 @@ class PidiImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Content Shuffle Processor",

|

||||

tags=["controlnet", "contentshuffle"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class ContentShuffleImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies content shuffle processing to image"""

|

||||

@ -419,7 +407,6 @@ class ContentShuffleImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Zoe (Depth) Processor",

|

||||

tags=["controlnet", "zoe", "depth"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class ZoeDepthImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies Zoe depth processing to image"""

|

||||

@ -435,7 +422,6 @@ class ZoeDepthImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Mediapipe Face Processor",

|

||||

tags=["controlnet", "mediapipe", "face"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class MediapipeFaceProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies mediapipe face processing to image"""

|

||||

@ -458,7 +444,6 @@ class MediapipeFaceProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Leres (Depth) Processor",

|

||||

tags=["controlnet", "leres", "depth"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class LeresImageProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies leres processing to image"""

|

||||

@ -487,7 +472,6 @@ class LeresImageProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Tile Resample Processor",

|

||||

tags=["controlnet", "tile"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class TileResamplerProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Tile resampler processor"""

|

||||

@ -527,7 +511,6 @@ class TileResamplerProcessorInvocation(ImageProcessorInvocation):

|

||||

title="Segment Anything Processor",

|

||||

tags=["controlnet", "segmentanything"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

)

|

||||

class SegmentAnythingProcessorInvocation(ImageProcessorInvocation):

|

||||

"""Applies segment anything processing to image"""

|

||||

|

||||

@ -10,7 +10,12 @@ from invokeai.app.models.image import ImageCategory, ResourceOrigin

|

||||

from .baseinvocation import BaseInvocation, InputField, InvocationContext, invocation

|

||||

|

||||

|

||||

@invocation("cv_inpaint", title="OpenCV Inpaint", tags=["opencv", "inpaint"], category="inpaint", version="1.0.0")

|

||||

@invocation(

|

||||

"cv_inpaint",

|

||||

title="OpenCV Inpaint",

|

||||

tags=["opencv", "inpaint"],

|

||||

category="inpaint",

|

||||

)

|

||||

class CvInpaintInvocation(BaseInvocation):

|

||||

"""Simple inpaint using opencv."""

|

||||

|

||||

|

||||

@ -16,7 +16,7 @@ from ..models.image import ImageCategory, ResourceOrigin

|

||||

from .baseinvocation import BaseInvocation, FieldDescriptions, InputField, InvocationContext, invocation

|

||||

|

||||

|

||||

@invocation("show_image", title="Show Image", tags=["image"], category="image", version="1.0.0")

|

||||

@invocation("show_image", title="Show Image", tags=["image"], category="image")

|

||||

class ShowImageInvocation(BaseInvocation):

|

||||

"""Displays a provided image using the OS image viewer, and passes it forward in the pipeline."""

|

||||

|

||||

@ -36,7 +36,7 @@ class ShowImageInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("blank_image", title="Blank Image", tags=["image"], category="image", version="1.0.0")

|

||||

@invocation("blank_image", title="Blank Image", tags=["image"], category="image")

|

||||

class BlankImageInvocation(BaseInvocation):

|

||||

"""Creates a blank image and forwards it to the pipeline"""

|

||||

|

||||

@ -65,7 +65,7 @@ class BlankImageInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_crop", title="Crop Image", tags=["image", "crop"], category="image", version="1.0.0")

|

||||

@invocation("img_crop", title="Crop Image", tags=["image", "crop"], category="image")

|

||||

class ImageCropInvocation(BaseInvocation):

|

||||

"""Crops an image to a specified box. The box can be outside of the image."""

|

||||

|

||||

@ -98,7 +98,7 @@ class ImageCropInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_paste", title="Paste Image", tags=["image", "paste"], category="image", version="1.0.0")

|

||||

@invocation("img_paste", title="Paste Image", tags=["image", "paste"], category="image")

|

||||

class ImagePasteInvocation(BaseInvocation):

|

||||

"""Pastes an image into another image."""

|

||||

|

||||

@ -146,7 +146,7 @@ class ImagePasteInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("tomask", title="Mask from Alpha", tags=["image", "mask"], category="image", version="1.0.0")

|

||||

@invocation("tomask", title="Mask from Alpha", tags=["image", "mask"], category="image")

|

||||

class MaskFromAlphaInvocation(BaseInvocation):

|

||||

"""Extracts the alpha channel of an image as a mask."""

|

||||

|

||||

@ -177,7 +177,7 @@ class MaskFromAlphaInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_mul", title="Multiply Images", tags=["image", "multiply"], category="image", version="1.0.0")

|

||||

@invocation("img_mul", title="Multiply Images", tags=["image", "multiply"], category="image")

|

||||

class ImageMultiplyInvocation(BaseInvocation):

|

||||

"""Multiplies two images together using `PIL.ImageChops.multiply()`."""

|

||||

|

||||

@ -210,7 +210,7 @@ class ImageMultiplyInvocation(BaseInvocation):

|

||||

IMAGE_CHANNELS = Literal["A", "R", "G", "B"]

|

||||

|

||||

|

||||

@invocation("img_chan", title="Extract Image Channel", tags=["image", "channel"], category="image", version="1.0.0")

|

||||

@invocation("img_chan", title="Extract Image Channel", tags=["image", "channel"], category="image")

|

||||

class ImageChannelInvocation(BaseInvocation):

|

||||

"""Gets a channel from an image."""

|

||||

|

||||

@ -242,7 +242,7 @@ class ImageChannelInvocation(BaseInvocation):

|

||||

IMAGE_MODES = Literal["L", "RGB", "RGBA", "CMYK", "YCbCr", "LAB", "HSV", "I", "F"]

|

||||

|

||||

|

||||

@invocation("img_conv", title="Convert Image Mode", tags=["image", "convert"], category="image", version="1.0.0")

|

||||

@invocation("img_conv", title="Convert Image Mode", tags=["image", "convert"], category="image")

|

||||

class ImageConvertInvocation(BaseInvocation):

|

||||

"""Converts an image to a different mode."""

|

||||

|

||||

@ -271,7 +271,7 @@ class ImageConvertInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_blur", title="Blur Image", tags=["image", "blur"], category="image", version="1.0.0")

|

||||

@invocation("img_blur", title="Blur Image", tags=["image", "blur"], category="image")

|

||||

class ImageBlurInvocation(BaseInvocation):

|

||||

"""Blurs an image"""

|

||||

|

||||

@ -325,7 +325,7 @@ PIL_RESAMPLING_MAP = {

|

||||

}

|

||||

|

||||

|

||||

@invocation("img_resize", title="Resize Image", tags=["image", "resize"], category="image", version="1.0.0")

|

||||

@invocation("img_resize", title="Resize Image", tags=["image", "resize"], category="image")

|

||||

class ImageResizeInvocation(BaseInvocation):

|

||||

"""Resizes an image to specific dimensions"""

|

||||

|

||||

@ -365,7 +365,7 @@ class ImageResizeInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_scale", title="Scale Image", tags=["image", "scale"], category="image", version="1.0.0")

|

||||

@invocation("img_scale", title="Scale Image", tags=["image", "scale"], category="image")

|

||||

class ImageScaleInvocation(BaseInvocation):

|

||||

"""Scales an image by a factor"""

|

||||

|

||||

@ -406,7 +406,7 @@ class ImageScaleInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_lerp", title="Lerp Image", tags=["image", "lerp"], category="image", version="1.0.0")

|

||||

@invocation("img_lerp", title="Lerp Image", tags=["image", "lerp"], category="image")

|

||||

class ImageLerpInvocation(BaseInvocation):

|

||||

"""Linear interpolation of all pixels of an image"""

|

||||

|

||||

@ -439,7 +439,7 @@ class ImageLerpInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_ilerp", title="Inverse Lerp Image", tags=["image", "ilerp"], category="image", version="1.0.0")

|

||||

@invocation("img_ilerp", title="Inverse Lerp Image", tags=["image", "ilerp"], category="image")

|

||||

class ImageInverseLerpInvocation(BaseInvocation):

|

||||

"""Inverse linear interpolation of all pixels of an image"""

|

||||

|

||||

@ -472,7 +472,7 @@ class ImageInverseLerpInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_nsfw", title="Blur NSFW Image", tags=["image", "nsfw"], category="image", version="1.0.0")

|

||||

@invocation("img_nsfw", title="Blur NSFW Image", tags=["image", "nsfw"], category="image")

|

||||

class ImageNSFWBlurInvocation(BaseInvocation):

|

||||

"""Add blur to NSFW-flagged images"""

|

||||

|

||||

@ -517,9 +517,7 @@ class ImageNSFWBlurInvocation(BaseInvocation):

|

||||

return caution.resize((caution.width // 2, caution.height // 2))

|

||||

|

||||

|

||||

@invocation(

|

||||

"img_watermark", title="Add Invisible Watermark", tags=["image", "watermark"], category="image", version="1.0.0"

|

||||

)

|

||||

@invocation("img_watermark", title="Add Invisible Watermark", tags=["image", "watermark"], category="image")

|

||||

class ImageWatermarkInvocation(BaseInvocation):

|

||||

"""Add an invisible watermark to an image"""

|

||||

|

||||

@ -550,7 +548,7 @@ class ImageWatermarkInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("mask_edge", title="Mask Edge", tags=["image", "mask", "inpaint"], category="image", version="1.0.0")

|

||||

@invocation("mask_edge", title="Mask Edge", tags=["image", "mask", "inpaint"], category="image")

|

||||

class MaskEdgeInvocation(BaseInvocation):

|

||||

"""Applies an edge mask to an image"""

|

||||

|

||||

@ -563,7 +561,7 @@ class MaskEdgeInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||

mask = context.services.images.get_pil_image(self.image.image_name).convert("L")

|

||||

mask = context.services.images.get_pil_image(self.image.image_name)

|

||||

|

||||

npimg = numpy.asarray(mask, dtype=numpy.uint8)

|

||||

npgradient = numpy.uint8(255 * (1.0 - numpy.floor(numpy.abs(0.5 - numpy.float32(npimg) / 255.0) * 2.0)))

|

||||
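The `npgradient` expression in the hunk above can be hard to read at a glance. As a hedged illustration, here is a pure-Python, per-pixel version of the same arithmetic, assuming 8-bit single-channel input (values 0 to 255). The function name `edge_band_value` is hypothetical. The real code applies the formula to a whole NumPy array at once.

```python
import math


def edge_band_value(pixel: int) -> int:
    """Per-pixel equivalent of the npgradient formula, for one 8-bit value.

    Returns 255 for any value strictly between 0 and 255, and 0 for pure
    black (0) or pure white (255).
    """
    normalized = pixel / 255.0
    # 0.0 near mid-grey, exactly 1.0 only at pure black or pure white
    distance_from_mid = abs(0.5 - normalized) * 2.0
    # floor() is 0 everywhere except at the two extremes, where it is 1
    return int(255 * (1.0 - math.floor(distance_from_mid)))


row = [edge_band_value(p) for p in (0, 64, 128, 255)]
print(row)  # [0, 255, 255, 0]
```

In other words, the expression appears to select the partially transparent band of the mask: every pixel that is neither fully black nor fully white maps to 255. This reading assumes a greyscale mask, which is what the `.convert("L")` variant of the line in the hunk produces.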

@ -595,9 +593,7 @@ class MaskEdgeInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation(

|

||||

"mask_combine", title="Combine Masks", tags=["image", "mask", "multiply"], category="image", version="1.0.0"

|

||||

)

|

||||

@invocation("mask_combine", title="Combine Masks", tags=["image", "mask", "multiply"], category="image")

|

||||

class MaskCombineInvocation(BaseInvocation):

|

||||

"""Combine two masks together by multiplying them using `PIL.ImageChops.multiply()`."""

|

||||

|

||||

@ -627,7 +623,7 @@ class MaskCombineInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("color_correct", title="Color Correct", tags=["image", "color"], category="image", version="1.0.0")

|

||||

@invocation("color_correct", title="Color Correct", tags=["image", "color"], category="image")

|

||||

class ColorCorrectInvocation(BaseInvocation):

|

||||

"""

|

||||

Shifts the colors of a target image to match the reference image, optionally

|

||||

@ -700,13 +696,8 @@ class ColorCorrectInvocation(BaseInvocation):

|

||||

# Blur the mask out (into init image) by specified amount

|

||||

if self.mask_blur_radius > 0:

|

||||

nm = numpy.asarray(pil_init_mask, dtype=numpy.uint8)

|

||||

inverted_nm = 255 - nm

|

||||

dilation_size = int(round(self.mask_blur_radius) + 20)

|

||||

dilating_kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (dilation_size, dilation_size))

|

||||

inverted_dilated_nm = cv2.dilate(inverted_nm, dilating_kernel)

|

||||

dilated_nm = 255 - inverted_dilated_nm

|

||||

nmd = cv2.erode(

|

||||

dilated_nm,

|

||||

nm,

|

||||

kernel=numpy.ones((3, 3), dtype=numpy.uint8),

|

||||

iterations=int(self.mask_blur_radius / 2),

|

||||

)

|

||||

@ -737,7 +728,7 @@ class ColorCorrectInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

@invocation("img_hue_adjust", title="Adjust Image Hue", tags=["image", "hue"], category="image", version="1.0.0")

|

||||

@invocation("img_hue_adjust", title="Adjust Image Hue", tags=["image", "hue"], category="image")

|

||||

class ImageHueAdjustmentInvocation(BaseInvocation):

|

||||

"""Adjusts the Hue of an image."""

|

||||

|

||||

@ -778,95 +769,38 @@ class ImageHueAdjustmentInvocation(BaseInvocation):

|

||||

)

|

||||

|

||||

|

||||

COLOR_CHANNELS = Literal[

|

||||

"Red (RGBA)",

|

||||

"Green (RGBA)",

|

||||

"Blue (RGBA)",

|

||||

"Alpha (RGBA)",

|

||||

"Cyan (CMYK)",

|

||||

"Magenta (CMYK)",

|

||||

"Yellow (CMYK)",

|

||||

"Black (CMYK)",

|

||||

"Hue (HSV)",

|

||||

"Saturation (HSV)",

|

||||

"Value (HSV)",

|

||||

"Luminosity (LAB)",

|

||||

"A (LAB)",

|

||||

"B (LAB)",

|

||||

"Y (YCbCr)",

|

||||

"Cb (YCbCr)",

|

||||

"Cr (YCbCr)",

|

||||

]

|

||||

|

||||

CHANNEL_FORMATS = {

|

||||

"Red (RGBA)": ("RGBA", 0),

|

||||

"Green (RGBA)": ("RGBA", 1),

|

||||

"Blue (RGBA)": ("RGBA", 2),

|

||||

"Alpha (RGBA)": ("RGBA", 3),

|

||||

"Cyan (CMYK)": ("CMYK", 0),

|

||||

"Magenta (CMYK)": ("CMYK", 1),

|

||||

"Yellow (CMYK)": ("CMYK", 2),

|

||||

"Black (CMYK)": ("CMYK", 3),

|

||||

"Hue (HSV)": ("HSV", 0),

|

||||

"Saturation (HSV)": ("HSV", 1),

|

||||

"Value (HSV)": ("HSV", 2),

|

||||

"Luminosity (LAB)": ("LAB", 0),

|

||||

"A (LAB)": ("LAB", 1),

|

||||

"B (LAB)": ("LAB", 2),

|

||||

"Y (YCbCr)": ("YCbCr", 0),

|

||||

"Cb (YCbCr)": ("YCbCr", 1),

|

||||

"Cr (YCbCr)": ("YCbCr", 2),

|

||||

}
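`COLOR_CHANNELS` and `CHANNEL_FORMATS` list the same channel names twice, once as a `Literal` type and once as dictionary keys mapping to an image mode and a band index. A minimal sketch of how the two could be kept in sync and queried follows. It uses an abbreviated subset of the entries shown in the diff, and the helper `channel_format` is a hypothetical name, not part of the diff.

```python
from typing import Literal, Tuple, get_args

# Abbreviated subset of the entries in the diff, for illustration only.
COLOR_CHANNELS = Literal["Red (RGBA)", "Alpha (RGBA)", "Hue (HSV)", "Cr (YCbCr)"]

CHANNEL_FORMATS = {
    "Red (RGBA)": ("RGBA", 0),
    "Alpha (RGBA)": ("RGBA", 3),
    "Hue (HSV)": ("HSV", 0),
    "Cr (YCbCr)": ("YCbCr", 2),
}


def channel_format(channel: str) -> Tuple[str, int]:
    """Return (image mode, band index) for a channel name (hypothetical helper)."""
    try:
        return CHANNEL_FORMATS[channel]
    except KeyError:
        raise ValueError(f"Unknown channel: {channel!r}") from None


# Consistency check: every Literal option has a format entry and vice versa.
assert set(get_args(COLOR_CHANNELS)) == set(CHANNEL_FORMATS)

mode, band = channel_format("Hue (HSV)")
print(mode, band)  # HSV 0
```

A check like the assertion above is one way to catch a name added to one structure but not the other. Whether the original code performs such a check is not visible in this diff.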

|

||||

|

||||

|

||||

@invocation(

|