Things like `isProcessing` are no longer relevant with the queue. I've removed them all and updated everything to be appropriate for the queue. There may be a few small quirks I've missed...

Supersedes #4574

The invocation cache provides simple node memoization functionality. Nodes that use the cache are memoized and not re-executed if their inputs haven't changed. Instead, the stored output is returned.

## Results

This feature provides anywhere from a significant to a massive performance improvement.

The improvement is most marked on large batches of generations where you only change a couple of things (e.g. a different seed or prompt for each iteration), and on low-VRAM systems, where skipping an extraneous model load is a big deal.

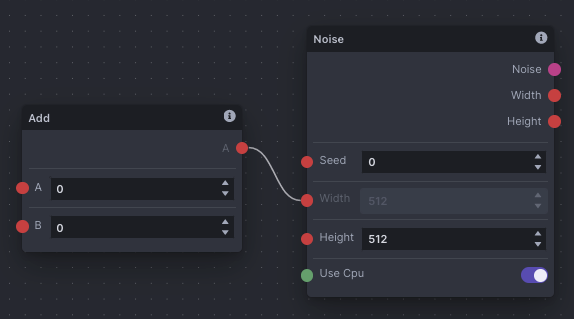

## Overview

A new `invocation_cache` service is added to handle the caching. There's not much to it.

All nodes now inherit a boolean `use_cache` field from `BaseInvocation`. This is a node field and not a class attribute, because specific instances of nodes may want to opt in or out of caching.

The recently-added `invoke_internal()` method on `BaseInvocation` is used as an entrypoint for the cache logic.

To create a cache key, the invocation is first serialized using pydantic's provided `json()` method, skipping the unique `id` field. Then Python's very fast builtin `hash()` is used to create an integer key. All implementations of `InvocationCacheBase` must provide a class method `create_key()` which accepts an invocation and outputs a string or integer key.
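A minimal sketch of the key derivation described above. It uses a stdlib `dataclass` as a hypothetical stand-in for a pydantic node (so `asdict` plus `json.dumps` play the role of pydantic's `json()`), and adds the class name to the payload on the assumption that the real serialized node carries a `type` discriminator, so two node types with identical fields don't collide.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class AddInvocation:
    """Hypothetical stand-in for a pydantic invocation model."""

    id: str
    a: int
    b: int
    use_cache: bool = True


def create_key(invocation) -> int:
    fields = asdict(invocation)
    # Drop the unique id: two nodes with identical inputs must share a key.
    fields.pop("id", None)
    # Include the node type so different node classes never share a payload.
    fields["type"] = type(invocation).__name__
    # sort_keys keeps the serialization deterministic.
    payload = json.dumps(fields, sort_keys=True)
    # hash() on a str is fast but salted per process (PYTHONHASHSEED), so these
    # keys are only stable for the lifetime of one process. That is fine for an
    # in-memory cache, but would not work for a persisted one.
    return hash(payload)
```

Two nodes with different `id`s but identical inputs produce the same key, which is exactly what lets the second one be served from the cache.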

## In-Memory Implementation

An in-memory implementation is provided. In this implementation, the node outputs are stored in memory as Python classes. The in-memory cache does not persist across application restarts.

Max node cache size is added as `node_cache_size` under the `Generation` config category.

It defaults to 512 - this number is up for discussion, but given that these are relatively lightweight pydantic models, I think it's safe to up this even higher.

Note that the cache isn't storing the big stuff: tensors and images are stored on disk, and outputs include only references to them.
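A minimal sketch of a size-bounded in-memory store. It assumes first-in, first-out eviction once `node_cache_size` entries are held (the eviction policy isn't specified above), and the class and method names are hypothetical. Outputs are stored as-is, since they only hold references to images and tensors on disk.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class MemoryInvocationCache:
    """Size-bounded in-memory cache of node outputs (FIFO eviction assumed)."""

    def __init__(self, max_size: int = 512) -> None:
        self._max_size = max_size
        self._cache: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        return self._cache.get(key)

    def save(self, key: Hashable, output: Any) -> None:
        if self._max_size <= 0:
            return  # in this sketch, a size of 0 disables caching
        if key in self._cache:
            return
        self._cache[key] = output
        # Evict the oldest entries once the configured size is exceeded.
        while len(self._cache) > self._max_size:
            self._cache.popitem(last=False)

    def __len__(self) -> int:
        return len(self._cache)
```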

## Node Definition

The default for all nodes is to use the cache. The `@invocation` decorator now accepts an optional `use_cache: bool` argument to override the default of `True`.

Non-deterministic nodes, however, should set this to `False`. Currently, all random-stuff nodes, including `dynamic_prompt`, are set to `False`.

The field name `use_cache` is now effectively a reserved field name and possibly a breaking change if any community nodes use this as a field name. In hindsight, all our reserved field names should have been prefixed with underscores or something.
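The class-default-plus-instance-override arrangement can be sketched as follows. The decorator here is a hypothetical, heavily simplified stand-in for `@invocation` (it only records the node type and its default `use_cache`), and the node types are illustrative.

```python
from typing import Callable, Optional


class BaseInvocation:
    # Class-level default, set per node class by the decorator below.
    use_cache: bool = True

    def __init__(self, use_cache: Optional[bool] = None) -> None:
        # An explicit per-instance value shadows the class default.
        if use_cache is not None:
            self.use_cache = use_cache


def invocation(invocation_type: str, use_cache: bool = True) -> Callable[[type], type]:
    """Hypothetical, simplified decorator recording a node's default use_cache."""

    def wrapper(cls: type) -> type:
        cls.type = invocation_type
        cls.use_cache = use_cache
        return cls

    return wrapper


@invocation("rand_int", use_cache=False)  # non-deterministic, so opt out
class RandomIntInvocation(BaseInvocation):
    pass


@invocation("add")  # deterministic, keeps the default of True
class AddInvocation(BaseInvocation):
    pass
```

Because `use_cache` is also a per-instance value, a workflow can still flip it for one specific node without touching the class default.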

## One Gotcha

Leaf nodes probably want to opt out of the cache, because if they are cached, their outputs are not saved again.

If you run the same graph multiple times, you only end up with a single image output, because the image storage side-effects are in the `invoke()` method, which is bypassed if we have a cache hit.
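The gotcha can be reproduced with a small sketch of the cache check around `invoke()`. The signature of `invoke_internal` here is a hypothetical simplification (the real method lives on `BaseInvocation`), and `ImageStore` is an illustrative stand-in for image storage.

```python
from typing import Any, Callable, Dict, Hashable, List


class ImageStore:
    """Hypothetical stand-in for image storage, i.e. the side effect."""

    def __init__(self) -> None:
        self.saved: List[str] = []

    def save(self, image: str) -> str:
        self.saved.append(image)
        return f"image_{len(self.saved)}"


def invoke_internal(
    key: Hashable, use_cache: bool, invoke: Callable[[], Any], cache: Dict[Hashable, Any]
) -> Any:
    # Cache hit: return the stored output. invoke() never runs, so neither
    # do any side effects inside it (such as saving an image).
    if use_cache and key in cache:
        return cache[key]
    output = invoke()
    if use_cache:
        cache[key] = output
    return output
```

Running the same node three times with `use_cache=True` saves one image and returns the same reference three times; with `use_cache=False`, each run saves a new image.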

## Linear UI

The linear graphs _almost_ just work, but due to the gotcha, we need to be careful about the final image-outputting node. To resolve this, a `SaveImageInvocation` node is added and used in the linear graphs.

This node is similar to `ImagePrimitive`, except it saves a copy of its input image, and has `use_cache` set to `False` by default.

This is now the leaf node in all linear graphs, and is the only node in those graphs with `use_cache == False` _and_ the only node with `is_intermediate == False`.

## Workflow Editor

All nodes now have a footer with a new `Use Cache [ ]` checkbox. It defaults to the value set by the invocation in its python definition, but can be changed by the user.

The workflow/node validation logic has been updated to migrate old workflows to use the new default values for `use_cache`. Users may still want to review the settings that have been chosen. In the event of catastrophic failure when running this migration, the default value of `True` is applied, as this is correct for most nodes.

Users should consider saving their workflows after loading them in and having them updated.

## Future Enhancements - Callback

A future enhancement would be to provide a callback to the `use_cache` flag that would be run as the node is executed to determine, based on its own internal state, if the cache should be used or not.

This would be useful for `DynamicPromptInvocation`, where the deterministic behaviour is determined by the `combinatorial: bool` field.

## Future Enhancements - Persisted Cache

Similar to how the latents storage is backed by disk, the invocation cache could be persisted to the database or disk. We'd need to be very careful about deserializing outputs, but it's perhaps worth exploring in the future.

This dynamic prompts parameter allows the seed to be randomized per prompt or per iteration:

- Per iteration: Use the same seed for all prompts in a single dynamic prompt expansion

- Per prompt: Use a different seed for every single prompt

"Per iteration" is appropriate for exploring the latent space with a stable starting noise, while "Per prompt" provides more variation.

Use a generator to do only as much work as is needed.

Previously, though we only ended up creating exactly as many queue items as was needed, there was still some intermediary work that calculated *all* permutations. When that number was very high, the system had a very hard time and used a lot of memory.

The logic has been refactored to use a generator. Additionally, the batch validators are optimized to return early and use less memory.
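A minimal sketch of the generator approach. It assumes a batch is a mapping of field name to a list of values and that queue items are the Cartesian product of those lists; the function names are hypothetical. `itertools.product` yields combinations one at a time, and `itertools.islice` stops as soon as enough have been produced.

```python
import itertools
from typing import Dict, Iterator, List


def iter_batch_permutations(batch: Dict[str, List[object]]) -> Iterator[Dict[str, object]]:
    """Lazily yield one {field: value} mapping per permutation of the batch."""
    keys = list(batch)
    # product() yields combinations one at a time; the full set is never built.
    for values in itertools.product(*(batch[k] for k in keys)):
        yield dict(zip(keys, values))


def take_queue_items(batch: Dict[str, List[object]], limit: int) -> List[Dict[str, object]]:
    # Only as many permutations as are actually needed are ever produced.
    return list(itertools.islice(iter_batch_permutations(batch), limit))
```

With three fields of 1,000 values each there are a billion permutations, but `take_queue_items(batch, 3)` only ever produces three of them.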

There seems to be little purpose in using non-combinatorial (random) generation for dynamic prompts. I've disabled it by hiding the option from the UI and defaulting `combinatorial` to true. If we want to enable it again in the future, it's straightforward to do so.

Due to a very messy branch with broad addition of `isort` on `main` alongside it, some git surgery was needed to get an agreeable git history. This commit represents all of the work on queued generation. See PR for notes.

`config/invokeai_config.py`:

- use `Optional` for things that are optional

- fix typing of `ram_cache_size()` and `vram_cache_size()`

- remove unused and incorrectly typed method `autoconvert_path`

- fix types and logic for `parse_args()`, in which `InvokeAIAppConfig.initconf` *must* be a `DictConfig`, but function would allow it to be set as a `ListConfig`, which presumably would cause issues elsewhere

`config/base.py`:

- use `cls` for first arg of class methods

- use `Optional` for things that are optional

- fix minor type issue related to setting of `env_prefix`

- remove unused `add_subparser()` method, which calls `add_parser()` on an `ArgumentParser` (method only available on the `_SubParsersAction` object, which is returned from `ArgumentParser.add_subparsers()`)
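A quick stdlib check of why the removed `add_subparser()` could not have worked: `add_parser()` exists on the object returned by `add_subparsers()`, not on `ArgumentParser` itself. The `run` subcommand and `--steps` flag are purely illustrative.

```python
import argparse

parser = argparse.ArgumentParser(prog="example")
# add_subparsers() returns an argparse._SubParsersAction; add_parser() lives there.
subparsers = parser.add_subparsers(dest="command")
run_parser = subparsers.add_parser("run", help="illustrative subcommand")
run_parser.add_argument("--steps", type=int, default=10)

# ArgumentParser itself has no add_parser() method.
print(hasattr(parser, "add_parser"))  # False

args = parser.parse_args(["run", "--steps", "20"])
print(args.command, args.steps)  # run 20
```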

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ ] No

## Description

A few missed translations from the translation update.

## Related Tickets & Documents

<!--
For pull requests that relate or close an issue, please include them
below.

For example having the text: "closes #1234" would connect the current
pull request to issue 1234. And when we merge the pull request, Github will
automatically close the issue.
-->

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings

<!--
Please provide steps on how to test changes, any hardware or
software specifications as well as any other pertinent information.
-->

## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ X ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ X ] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ X ] No

## Description

Mask Edge was set as the default and was producing poor results. I've updated the default back to Unmasked.

The immutable and serializable checks for redux can cause substantial performance issues. The immutable check in particular is pretty heavy. It's only run in dev mode, but it can really slow down the already-slower performance of dev mode.

The most important one for us is serializable, which has far less of a performance impact.

The immutable check is largely redundant because we use immer-backed RTK for everything and immer gives us confidence there.

Disable the immutable check, leaving serializable in.

A few weeks back, we changed how the canvas scales in response to changes in window/panel size.

This introduced a bug where, if the user hadn't already clicked the canvas tab once to initialize the stage elements, the stage's dimensions were zero, so the calculated stage scale ended up zero, then something was divided by that zero and Konva died.

This is only a problem on Chromium browsers - somehow Firefox handles it gracefully.

Now, when calculating the stage scale, never return a 0 - if it's a zero, return 1 instead. This is enough to fix the crash, but the image ends up centered on the top-left corner of the stage (the origin of the canvas).
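The guard can be sketched as below. The UI code is TypeScript; this Python version only illustrates the arithmetic, and the function name and the fit-to-container formula are assumptions.

```python
def calculate_stage_scale(
    container_width: float, container_height: float, image_width: float, image_height: float
) -> float:
    """Scale that fits the image inside the stage container (hypothetical helper)."""
    if image_width <= 0 or image_height <= 0:
        return 1.0
    scale = min(container_width / image_width, container_height / image_height)
    # Before the canvas tab has been shown, the container measures 0x0 and the
    # scale would be 0. Later code divides by it, so never return 0.
    return scale if scale > 0 else 1.0
```

As the text notes, this alone only prevents the crash: with a 0x0 stage the image still lands on the origin until the stage dimensions are measured.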

Because the canvas elements are not initialized at this point (we haven't switched tabs yet), the stage dimensions fall back to (0,0). This means the center of the stage is also (0,0) - so the image is centered on (0,0), the top-left corner of the stage.

To fix this, we need to ensure we:

- Change to the canvas tab before actually setting the image, so the stage elements are able to initialize

- Use `flushSync` to flush DOM updates for this tab change so we actually have DOM elements to work with

- Update the stage dimensions once on first load of it (so in the effect that sets up the resize observer, we update the stage dimensions)

The result now is the expected behaviour - images sent to canvas do not crash and end up in the center of the canvas.

JSX is not serializable, so it cannot be in redux. Non-serializable global state may be put into `nanostores`.

- Use `nanostores` for `customStarUI`

- Use `nanostores` for `headerComponent`

- Re-enable the serializable & immutable check redux middlewares

* Update collections.py

RangeOfSizeInvocation was not taking step into account when generating the end point of the range

* - updated the node description to reflect this mod

- added a `gt=0` constraint to ensure the range size is positive

- moved the `+ 1` onto the size, to ensure the range is the requested size in cases where the step is negative

- formatted with Black

* Removed +1 from the range calculation

---------

Co-authored-by: psychedelicious <4822129+psychedelicious@users.noreply.github.com>
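The corrected end-point calculation can be sketched with Python's `range`: the stop value has to be `start + size * step`, which also yields exactly `size` values for a negative step. The function name is hypothetical, and the zero-step check is an added assumption (Python's `range` rejects a zero step anyway).

```python
from typing import List


def range_of_size(start: int, size: int, step: int = 1) -> List[int]:
    """Return exactly `size` integers starting at `start`, spaced by `step`."""
    if size <= 0:
        raise ValueError("size must be > 0")  # mirrors the gt=0 field constraint
    if step == 0:
        raise ValueError("step must be non-zero")
    # The end point must account for step: start + size * step, not start + size.
    return list(range(start, start + size * step, step))
```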

* New classes to support the PromptsFromFileInvocation Class

- PromptPosNegOutput

- PromptSplitNegInvocation

- PromptJoinInvocation

- PromptReplaceInvocation

* - Added PromptsToFileInvocation,

- PromptSplitNegInvocation

- now tracks the bracket depth to ensure the numbers of open and close brackets match.

- checks for escaped `[` and `]` and ignores them when escaped, e.g. `\[`

- PromptReplaceInvocation now has a use-regex option, and non-regex matching is made case-insensitive

* Update prompt.py

created class PromptsToFileInvocationOutput and use it in PromptsToFileInvocation instead of BaseInvocationOutput

* Update prompt.py

* Added schema_extra title and tags for PromptReplaceInvocation, PromptJoinInvocation, PromptSplitNegInvocation and PromptsToFileInvocation

* Added PTFields Collect and Expand

* update to nodes v1

* added ui_type to file_path for PromptToFile

* update params for the primitive types used, remove the ui_type filepath, promptsToFile now only accepts collections until a fix is available

* updated the parameters for the StringOutput primitive

* moved the prompt tools nodes out of the prompt.py into prompt_tools.py

* more rework for v1

* added github link

* updated to use "@invocation"

* updated tags

* Added new nodes PromptStrength and PromptStrengthsCombine

* chore: black

* feat(nodes): add version to prompt nodes

* renamed nodes from prompt-related to string-related names and moved them into a strings.py file. Also moved and renamed the PromptsFromFileInvocation from prompt.py to strings.py. The PTFields still remain in Prompt_tool.py for now.

* added , version="1.0.0" to the invocations

* removed the PTField related nodes and the prompt-tools.py file all new nodes now live in the

* formatted prompt.py and strings.py with Black and fixed silly mistake in the new StringSplitInvocation

* - Revert Prompt.py back to original

- Update strings.py to be only StringJoin, StringJoinThree, StringReplace, StringSplitNeg, StringSplit

* applied isort to imports

* fix(nodes): typos in `strings.py`

---------

Co-authored-by: psychedelicious <4822129+psychedelicious@users.noreply.github.com>

Co-authored-by: Millun Atluri <Millu@users.noreply.github.com>
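The bracket-depth and escape handling described for `PromptSplitNegInvocation` can be sketched as follows. This is a hypothetical re-implementation: the function name, raising on unbalanced brackets, and joining negative groups with `, ` are assumptions, not necessarily what the node does.

```python
from typing import List, Tuple


def split_negative(prompt: str) -> Tuple[str, str]:
    """Move text inside unescaped [brackets] into a separate negative prompt."""
    positive: List[str] = []
    groups: List[str] = []  # one entry per top-level [...] group
    current: List[str] = []
    depth = 0
    i = 0
    while i < len(prompt):
        ch = prompt[i]
        if ch == "\\" and i + 1 < len(prompt) and prompt[i + 1] in "[]":
            # An escaped bracket such as \[ is literal text, not a delimiter.
            (current if depth else positive).append(prompt[i + 1])
            i += 2
            continue
        if ch == "[":
            if depth:  # nested brackets stay inside the negative text
                current.append(ch)
            depth += 1
        elif ch == "]":
            if depth == 0:
                raise ValueError("unmatched ']' in prompt")
            depth -= 1
            if depth:
                current.append(ch)
            else:
                groups.append("".join(current).strip())
                current = []
        else:
            (current if depth else positive).append(ch)
        i += 1
    if depth:
        raise ValueError("unmatched '[' in prompt")
    # Collapse the whitespace left behind where bracketed groups were removed.
    positive_text = " ".join("".join(positive).split())
    return positive_text, ", ".join(g for g in groups if g)
```

For example, `split_negative("a cat [blurry] in a hat [low quality]")` gives `("a cat in a hat", "blurry, low quality")`.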

This maps values to labels for multiple-choice fields.

This allows "enum" fields (i.e. `Literal["val1", "val2", ...]` fields) to use code-friendly string values for choices, but present this to the UI as human-friendly labels.
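A minimal sketch of the idea, assuming the labels live in a plain dict keyed by the literal values. The field, its values, and the labels are illustrative only, not an actual InvokeAI mapping.

```python
from typing import Dict, Literal, get_args

ResizeMode = Literal["crop", "fill", "stretch"]  # illustrative enum field

# Hypothetical value -> label map: the API keeps the code-friendly values,
# the UI displays the human-friendly labels.
RESIZE_MODE_LABELS: Dict[str, str] = {
    "crop": "Crop to Fit",
    "stretch": "Stretch",
}


def choices_with_labels(literal_type: object, labels: Dict[str, str]) -> Dict[str, str]:
    # Any value without an explicit label falls back to the raw value.
    return {value: labels.get(value, value) for value in get_args(literal_type)}
```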

* Added crop option to ImagePasteInvocation

ImagePasteInvocation extended the image with transparency when pasting outside of the base image's bounds. This introduces a new option to crop the resulting image back to the original base image.

* Updated version for ImagePasteInvocation as 3.1.1 was released.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [x] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [x] Yes

- [ ] No

## Description

There was an issue with the responsiveness of the quick links buttons in the documentation.

## Related Tickets & Documents

- Related Issue #4455

- Closes #4455

## QA Instructions, Screenshots, Recordings

- On the documentation website, go to the Home page and scroll down to the quick-links section.

[Home - InvokeAI Stable Diffusion Toolkit Docs.webm](https://github.com/invoke-ai/InvokeAI/assets/92071471/0a7095c1-9d78-47f2-8da7-9c1e796bea3d)

## Added/updated tests?

- [ ] Yes

- [x] No : _It is a minor change in the documentation website._

## [optional] Are there any post deployment tasks we need to perform? No

We need to parse the config before doing anything related to invocations to ensure that the invocations union picks up on denied nodes.

- Move that to the top of api_app and cli_app

- Wrap subsequent imports in `if True:`, as a hack to satisfy flake8 and not have to noqa every line or the whole file

- Add tests to ensure graph validation fails when using a denied node, and that the invocations union does not have denied nodes (this indirectly provides confidence that the generated OpenAPI schema will not include denied nodes)

This simply hides nodes from the workflow editor. The nodes will still work if an API request is made with them. For example, you could hide `iterate` nodes from the workflow editor, but if the Linear UI makes use of those nodes, they will still function.

- Update `AppConfig` with optional property `nodesDenylist: string[]`

- If provided, nodes are filtered out by `type` in the workflow editor

Allow denying and explicitly allowing nodes. When a not-allowed node is used, a pydantic `ValidationError` will be raised.

- When collecting all invocations, check against the allowlist and denylist first. When pydantic constructs any unions related to nodes, the denied nodes will be omitted

- Add `allow_nodes` and `deny_nodes` to `InvokeAIAppConfig`. These are `Union[list[str], None]`, and may be populated with the `type` of invocations.

- When `allow_nodes` is `None`, allow all nodes, else if it is `list[str]`, only allow nodes in the list

- When `deny_nodes` is `None`, deny no nodes, else if it is `list[str]`, deny nodes in the list

- `deny_nodes` overrides `allow_nodes`
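The allow/deny rules above reduce to a small predicate. This sketch, with hypothetical function names, applies it to a list of node `type` strings.

```python
from typing import Iterable, List, Optional


def is_node_allowed(
    node_type: str, allow_nodes: Optional[List[str]], deny_nodes: Optional[List[str]]
) -> bool:
    # allow_nodes=None: every node is allowed; otherwise only listed types.
    if allow_nodes is not None and node_type not in allow_nodes:
        return False
    # deny_nodes=None: nothing is denied. A denied type loses even if allowed.
    if deny_nodes is not None and node_type in deny_nodes:
        return False
    return True


def filter_invocations(
    node_types: Iterable[str], allow_nodes: Optional[List[str]], deny_nodes: Optional[List[str]]
) -> List[str]:
    return [t for t in node_types if is_node_allowed(t, allow_nodes, deny_nodes)]
```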

## What type of PR is this? (check all applicable)

3.1.1 Release build & updates

## Have you discussed this change with the InvokeAI team?

- [X] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [X] Yes

- [ ] No

## Description

## Related Tickets & Documents


- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings


## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [x] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Description

Adds a configuration option to fetch metadata and workflows from the API instead of the image file. Needed for commercial.

Minor corrections to spelling and grammar in the feature request template.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [x] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [x] No, because:

This PR should be self-explanatory.

## Have you updated all relevant documentation?

- [x] Yes

- [ ] No

## Description

Minor corrections to spelling and grammar in the feature request template.

No code or behavioural changes.

## Related Tickets & Documents


- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings


N/A

## Added/updated tests?

- [ ] Yes

- [x] No : _please replace this line with details on why tests have not been included_

There are no tests for the issue template.

## [optional] Are there any post deployment tasks we need to perform?

I added extra steps to update the cuDNN DLL found in the Torch package, because the bundled one wasn't optimised or wasn't the latest version. Manually updating it can speed up iterations, but the results may differ from card to card. For example, I went from 3 it/s to a steady 20 it/s.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [x] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [x] Yes

- [ ] No

## Description

## Related Tickets & Documents


- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings


## Added/updated tests?

- [x] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Description

fix(nodes): add version to iterate and collect

## Related Tickets & Documents


- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings


## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

- [x] Feature

## Have you discussed this change with the InvokeAI team?

- [x] Yes

## Description

Scale Before Processing dimensions now respect the locked aspect ratio. This makes the setting much easier to control when using it with locked ratios on the canvas.
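The locked-ratio arithmetic might look like the sketch below. It assumes the ratio is stored as width / height and that scaled dimensions are snapped to a multiple of 8; the helper name is hypothetical.

```python
def lock_scaled_height(scaled_width: int, aspect_ratio: float, multiple: int = 8) -> int:
    """Derive the scaled height from the width when the aspect ratio is locked."""
    height = scaled_width / aspect_ratio
    # Snap to the nearest multiple (assumed 8), never going below one multiple.
    return max(multiple, int(round(height / multiple)) * multiple)
```

Changing the scaled width then drives the scaled height, so the two can no longer drift away from the canvas's locked ratio.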

## What type of PR is this? (check all applicable)

- [X] Bug Fix

## Have you discussed this change with the InvokeAI team?

- [X] Yes

## Have you updated all relevant documentation?

- [X] Yes

## Description

Running the config script on Macs triggered an error due to the absence of VRAM on these machines. The VRAM setting is now skipped.

## Added/updated tests?

- [ ] Yes

- [X] No : Will add this test in the near future.


@blessedcoolant Per discussion, I have updated codeowners so that we're not force-merging things.

This will, however, necessitate a much more disciplined approval process.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [X] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [X] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ ] No

## Description

Add textfontimage node to communityNodes.md

## Related Tickets & Documents


- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings


## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Description

fix(ui): fix non-nodes validation logic being applied to nodes invoke button

For example, if you had an invalid ControlNet setup, it would prevent you from invoking on nodes, when node validation was disabled.

## Related Tickets & Documents


- Closes https://discord.com/channels/1020123559063990373/1028661664519831552/1148431783289966603

## What type of PR is this? (check all applicable)

- [x] Feature

- [x] Optimization

## Have you discussed this change with the InvokeAI team?

- [x] Yes

## Description

# Coherence Mode

A new parameter called Coherence Mode has been added to the Coherence Pass settings. This parameter controls what kind of Coherence Pass is done after inpainting and outpainting.

- Unmasked: This performs a complete unmasked image-to-image pass on the entire generation.

- Mask: This performs a masked image-to-image pass using your input mask as the coherence mask.

- Mask Edge [DEFAULT]: This performs a masked image-to-image pass on the edges of your mask to try to clear out the seams.

# Why The Coherence Masked Modes?

One of the issues with the unmasked coherence pass arises when the diffusion process is trying to align detailed or organic objects. Because image-to-image tends to change the image a little even at lower strengths, the result of the paste-back process ends up slightly misaligned. By providing the mask to the Coherence Pass, we can try to eliminate this in those cases. While it will be impossible to address this for every image out there, these options allow the user to automate a lot of it. For everything else, there's manual paint-over with inpaint.

# Graph Improvements

The graphs have now been refined quite a bit. We no longer manually blur the masks for outpainting. This is no longer needed because we now dilate the mask depending on the blur size while pasting back. As a result, we got rid of quite a few nodes that were handling this in the older graph.

The graphs are also a lot cleaner now because we tackle Scaled Dimensions and Coherence Mode completely independently.

Inpainting results seem very promising, especially with the Mask Edge mode.

---

# New Infill Methods [Experimental]

We are currently trying out various new infill methods to see which ones perform best in outpainting. We may keep all of them or none. This will be decided as we test more.

## LaMa Infill

- Re-enabled LaMa infill in the UI.

- We are trying to get this to work without a memory overhead.

In order to use LaMa, you need to manually download the LaMa JIT model and place it at `models/core/misc/lama/lama.pt`. You can download the JIT model from Sanster [here](https://github.com/Sanster/models/releases/download/add_big_lama/big-lama.pt) and rename it to `lama.pt`, or you can use the script in the original LaMa repo to convert the base model to a JIT model yourself.

## CV2 Infill

- Added a new infilling method using CV2's Inpaint.

## Patchmatch Rescaling

The Patchmatch infill input image is now downscaled before being infilled. Patchmatch can be really slow at large resolutions, and this is a pretty decent way to get around that. Additionally, downscaling might provide a better patch match by preventing large areas from being infilled with repeating patches. But that's just the theory; we're still testing it out.

## [optional] Are there any post deployment tasks we need to perform?

- If we decide to keep LaMa infill, we will need to host the model and update the installer to download it as a core model.

Adds my (@dwringer's) released nodes to the community nodes page.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [X] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [X] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [X] Yes

- [ ] No

## Description

Adds my released nodes:

- Depth Map from Wavefront OBJ
- Enhance Image
- Generative Grammar-Based Prompt Nodes
- Ideal Size Stepper
- Image Compositor
- Final Size & Orientation / Random Switch (Integers)
- Text Mask (Simple 2D)

* Consolidated saturation/luminosity adjust.

Now allows increasing and inverting.

Accepts any color PIL format and channel designation.

* Updated docs/nodes/defaultNodes.md

* shortened tags list to channel types only

* fix typo in mode list

* split features into offset and multiply nodes

* Updated documentation

* Change invert to discrete boolean.

Previous math was unclear and had issues with 0 values.

* chore: black

* chore(ui): typegen

---------

Co-authored-by: psychedelicious <4822129+psychedelicious@users.noreply.github.com>

Revised links to my node py files, replacing them with links to independent repos. Additionally I consolidated some nodes together (Image and Mask Composition Pack, Size Stepper nodes).

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [x] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [x] Yes

- [ ] No

## Description

This PR is based on #4423 and should not be merged until it is merged.

[feat(nodes): add version to node schemas](c179d4ccb7)

The `@invocation` decorator is extended with an optional `version` arg.

On execution of the decorator, the version string is parsed using the `semver` package (this was an indirect dependency and has been added to `pyproject.toml`).

All built-in nodes are set with `version="1.0.0"`.

The version is added to the OpenAPI Schema for consumption by the client.
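A minimal sketch of decorator-time version validation plus the exact-match check the UI performs. `semver` is swapped out for a deliberately simplified regex (plain `MAJOR.MINOR.PATCH`, no pre-release or build tags) so the example has no third-party dependency; the decorator shown is hypothetical and far simpler than the real `@invocation`.

```python
import re
from typing import Callable

# Simplified stand-in for semver: plain MAJOR.MINOR.PATCH, no leading zeros,
# no pre-release or build metadata.
_VERSION_RE = re.compile(r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)")


def invocation(invocation_type: str, version: str = "1.0.0") -> Callable[[type], type]:
    """Hypothetical decorator that validates and records a node's version."""
    # Validation happens when the decorator runs, i.e. when the node class is
    # defined, so a bad version string fails at import time.
    if not _VERSION_RE.fullmatch(version):
        raise ValueError(f'Invalid version "{version}" for node "{invocation_type}"')

    def wrapper(cls: type) -> type:
        cls.type = invocation_type
        cls.version = version
        return cls

    return wrapper


def versions_match(node_version: str, template_version: str) -> bool:
    # Exact match only; any difference should surface a warning in the UI.
    return node_version == template_version
```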

[feat(ui): handle node versions](03de3e4f78)

- Node versions are now added to node templates

- Node data (including in workflows) include the version of the node

- On loading a workflow, we check to see if the node and template versions match exactly. If not, a warning is logged to the console.

- The node info icon (top-right corner of the node, which you may click to open the notes editor) now shows the version and mentions any issues.

- Some workflow validation logic has been shifted around and is now executed in a redux listener.

## Related Tickets & Documents


- Closes #4393

## QA Instructions, Screenshots, Recordings


Loading old workflows should prompt a warning, and the node status icon should indicate some action is needed.

## [optional] Are there any post deployment tasks we need to perform?

I've updated the default workflows:

- Bump workflow versions from 1.0 to 1.0.1

- Add versions for all nodes in the workflows

- Test workflows

[Default Workflows.zip](https://github.com/invoke-ai/InvokeAI/files/12511911/Default.Workflows.zip)

I'm not sure where these are being stored right now @Millu

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [x] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Description

### Polymorphic Fields

Initial support for polymorphic field types. A polymorphic type is either a single value or a list of a specific type, for example `Union[str, list[str]]`.

Polymorphics do not yet support direct input in the UI (this will come in the future). They are forcibly set as Connection-only fields, so users will not be able to provide direct input to the field.

If a polymorphic field should be presented as a singleton type (which would allow direct input), the node must provide an explicit type hint.

For example, `DenoiseLatents`' `CFG Scale` is polymorphic, but in the node editor, we want to present this as a number input. In the node definition, the field is given `ui_type=UIType.Float`, which tells the UI to treat this as a `float` field.

The connection validation logic will prevent connecting a collection to `CFG Scale` in this situation, because it is typed as `float`. The workaround is to disable validation from the settings to make this specific connection. A future improvement will resolve this.
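Here is a minimal sketch of how an explicit hint can override the inferred polymorphic type, and of why validation then rejects a collection. `FieldType`, `effective_type` and `can_connect` are hypothetical stand-ins for the UI's TypeScript logic. The plain string `"float"` stands in for `UIType.Float`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldType:
    base: str  # e.g. "float", "string"
    kind: str  # "single", "collection" or "polymorphic"


def effective_type(inferred: FieldType, ui_type: Optional[str] = None) -> FieldType:
    """An explicit ui_type hint overrides the type inferred from the annotation."""
    if ui_type is not None:
        return FieldType(ui_type, "single")  # presented as a singleton, so direct input works
    return inferred


def can_connect(source: FieldType, target: FieldType, validation_enabled: bool = True) -> bool:
    if not validation_enabled:
        return True  # validation disabled in settings: allow any connection
    if source.base != target.base:
        return False
    if target.kind == "polymorphic":
        return True  # accepts a single value or a collection of the base type
    return source.kind == target.kind


# `CFG Scale` is annotated as polymorphic but hinted as a plain float.
cfg_scale = effective_type(FieldType("float", "polymorphic"), ui_type="float")
float_collection = FieldType("float", "collection")

print(can_connect(float_collection, cfg_scale))  # False
print(can_connect(float_collection, cfg_scale, validation_enabled=False))  # True
```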

### Collection Fields

This also introduces better support for collection field types. Like polymorphics, collection types are parsed automatically by the client and do not need any specific type hints.

Also like polymorphics, there is no support yet for direct input of collection types in the UI.
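The client infers these kinds from the OpenAPI schema, not from Python annotations. The same classification can still be sketched directly on type hints. `classify_field` is a hypothetical helper. It treats a union of exactly `T` and `list[T]` as polymorphic, and `list[T]` on its own as a collection.

```python
import types
from typing import Any, Tuple, Union, get_args, get_origin

# `X | Y` unions (3.10+) report types.UnionType as their origin rather than typing.Union.
_UNION_ORIGINS = (Union, getattr(types, "UnionType", Union))


def classify_field(annotation: Any) -> Tuple[str, Any]:
    """Return ("single" | "collection" | "polymorphic", base type) for a type hint."""
    origin = get_origin(annotation)
    if origin is list:
        (item,) = get_args(annotation)
        return ("collection", item)
    if origin in _UNION_ORIGINS:
        args = get_args(annotation)
        singles = [a for a in args if get_origin(a) is not list]
        lists = [a for a in args if get_origin(a) is list]
        # Polymorphic means exactly T and list[T], in either order.
        if len(singles) == 1 and len(lists) == 1 and get_args(lists[0]) == (singles[0],):
            return ("polymorphic", singles[0])
    return ("single", annotation)


print(classify_field(list[str]))  # ('collection', <class 'str'>)
print(classify_field(Union[float, list[float]]))  # ('polymorphic', <class 'float'>)
```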

### Other Changes

- Disabling validation in the workflow editor now displays the visual hints for valid connections, but lets you connect to anything.

- Added `ui_order: int` to `InputField` and `OutputField`. The UI will use this, if present, to order fields in a node UI. See usage in `DenoiseLatents` for an example.

- Updated the field colors - duplicate colors have just been lightened a bit. It's not perfect, but it was a quick fix.

- Field handles for collections are the same color as their single counterparts, but have a dark dot in the center.

- Field handles for polymorphics are a rounded square with a dot in the middle.

- Removed all fields that just render `null` from `InputFieldRenderer`, replaced with a single fallback.

- Removed logic in `zValidatedWorkflow` that checked for the existence of node templates for each node in a workflow. This logic introduced a circular dependency, due to importing the global redux `store` in order to get the node templates within a zod schema. It's actually fine to leave this out entirely; the case of a missing node template is handled by the UI. Fixing it otherwise would introduce a substantial headache.

- Fixed the `ControlNetInvocation.control_model` field default, which was a string when the field shouldn't have had a default at all.
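One plausible ordering rule for `ui_order` is sketched below, using a hypothetical `FieldInfo` type. Placing unordered fields after the ordered ones, in definition order, is an assumption. The PR only says the UI uses `ui_order` if it is present.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FieldInfo:
    name: str
    ui_order: Optional[int] = None


def order_fields(fields: List[FieldInfo]) -> List[FieldInfo]:
    """Fields with ui_order first (ascending), then the rest in definition order."""
    ordered = sorted((f for f in fields if f.ui_order is not None), key=lambda f: f.ui_order)
    unordered = [f for f in fields if f.ui_order is None]
    return ordered + unordered  # sorted() is stable, so ties keep definition order


fields = [FieldInfo("steps"), FieldInfo("cfg_scale", 1), FieldInfo("noise", 0), FieldInfo("scheduler")]
print([f.name for f in order_fields(fields)])  # ['noise', 'cfg_scale', 'steps', 'scheduler']
```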

## Related Tickets & Documents

- Closes #4266

## QA Instructions, Screenshots, Recordings

Add this polymorphic float node to the end of your `invokeai/app/invocations/primitives.py`:

```py
from typing import Union  # harmless if primitives.py already imports it


@invocation("float_poly", title="Float Poly Test", tags=["primitives", "float"], category="primitives")
class FloatPolyInvocation(BaseInvocation):
    """A float polymorphic primitive value"""

    value: Union[float, list[float]] = InputField(default_factory=list, description="The float value")

    def invoke(self, context: InvocationContext) -> FloatOutput:
        # A collection input yields its first element; a single float is passed through
        return FloatOutput(value=self.value[0] if isinstance(self.value, list) else self.value)
```

Head over to nodes and try connecting up some collection and polymorphic inputs.

The `@invocation` decorator is extended with an optional `version` arg. On execution of the decorator, the version string is parsed using the `semver` package (this was an indirect dependency and has been added to `pyproject.toml`).
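Below is a sketch of a decorator-time version check. It assumes a simplified `MAJOR.MINOR.PATCH` regex in place of the `semver` parser the PR actually uses, so pre-release and build suffixes are not handled. `versioned` and the `node_version` attribute are hypothetical names, not InvokeAI's API.

```python
import re
from typing import Callable, TypeVar

T = TypeVar("T", bound=type)

# Simplified core-version check; the real `semver` package also accepts
# pre-release and build suffixes such as "1.0.0-rc.1+build.5".
_CORE_VERSION = re.compile(r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)")


def versioned(version: str) -> Callable[[T], T]:
    """Hypothetical decorator: validate `version` eagerly, then attach it to the class."""
    if not _CORE_VERSION.fullmatch(version):
        # Raised at class-definition (import) time, so a bad version never reaches the schema.
        raise ValueError(f'Invalid version "{version}"')

    def wrapper(cls: T) -> T:
        setattr(cls, "node_version", version)
        return cls

    return wrapper


@versioned("1.0.0")
class ExampleNode:
    pass


print(ExampleNode.node_version)  # 1.0.0
```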

## What type of PR is this? (check all applicable)

- [x] Feature

## Have you discussed this change with the InvokeAI team?

- [x] No

## Description

Automatically infer the name of the model from the supplied path IF the model name field is empty. If the model name is not empty, we presume that the user has entered a model name or changed it, and we leave it untouched so as not to override user changes.
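The name inference lives in the UI, but the rule can be sketched in Python. `infer_model_name` and the list of recognized weight-file extensions are assumptions. In this sketch, folder-style (diffusers) models keep their directory name, and both `/` and `\` separators are accepted.

```python
import re

# Hypothetical list of single-file weight extensions to strip.
MODEL_EXTENSIONS = (".safetensors", ".ckpt", ".pt", ".pth", ".bin")


def infer_model_name(path: str, current_name: str) -> str:
    """Fill in a model name from its path, but never overwrite user input."""
    if current_name.strip():
        return current_name  # the user already typed something: leave it alone
    base = re.split(r"[\\/]", path.rstrip("\\/"))[-1]
    for ext in MODEL_EXTENSIONS:
        if base.lower().endswith(ext):
            return base[: -len(ext)]
    return base  # a directory, e.g. a diffusers folder, keeps its full name


print(infer_model_name("/models/sd/dreamshaper_8.safetensors", ""))  # dreamshaper_8
```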

## Related Tickets & Documents

- Addresses: #4443

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Description

fix(ui): clicking node collapse button does not bring node to front

## Related Tickets & Documents

- Related Issue: https://discord.com/channels/1020123559063990373/1130288930319761428/1147333454632071249

- Closes #4438

## What type of PR is this? (check all applicable)

- [X] Bug Fix

## Have you discussed this change with the InvokeAI team?

- [X] Yes

## Have you updated all relevant documentation?

- [X] Yes

## Description

There is a call to `cls.__annotations__` in `baseinvocation.invocation_output()`. However, in Python 3.9 not all objects have this attribute. I have worked around the limitation as described in https://docs.python.org/3/howto/annotations.html, which should produce the same results in 3.9, 3.10 and 3.11.
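The following sketches the approach from the Python annotations HOWTO. On 3.10+ it uses `inspect.get_annotations()`. On 3.9 it reads `__annotations__` from the class's own `__dict__`. `get_own_annotations` is a hypothetical helper name, not the function used in `baseinvocation.py`.

```python
import inspect
import sys
from typing import Any, Dict


def get_own_annotations(obj: Any) -> Dict[str, Any]:
    """Return the annotations defined directly on `obj` (never inherited ones)."""
    if sys.version_info >= (3, 10):
        # 3.10+: the HOWTO's recommended API; returns {} when there are none.
        return dict(inspect.get_annotations(obj))
    if isinstance(obj, type):
        # 3.9: `cls.__annotations__` may return a base class's annotations, or
        # raise AttributeError, so read the class's own namespace instead.
        ann = obj.__dict__.get("__annotations__", None)
    else:
        ann = getattr(obj, "__annotations__", None)
    return dict(ann) if ann else {}


class Base:
    x: int


class Child(Base):  # declares no annotations of its own
    pass


print(get_own_annotations(Base))  # {'x': <class 'int'>}
print(get_own_annotations(Child))  # {}
```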

## Related Tickets & Documents

See https://discord.com/channels/1020123559063990373/1146897072394608660/1146939182300799017 for the first bug report.

## What type of PR is this? (check all applicable)

- [x] Cleanup

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Description

Used https://github.com/albertas/deadcode to get a rough overview of what is unused, then checked everything manually. The app still runs.

## Related Tickets & Documents

- Closes #4424

## QA Instructions, Screenshots, Recordings

Ensure it doesn't explode when you run it.

* add StableDiffusionXLInpaintPipeline to probe list

* Blackified (?)

---------

Authored-by: Lincoln Stein <lstein@gmail.com>

Mucked about with to get it merged by: Kent Keirsey <31807370+hipsterusername@users.noreply.github.com>

Add a click handler for node wrapper component that exclusively selects that node, IF no other modifier keys are held.

Technically I believe this means we are doubling up on the selection logic, as reactflow handles this internally also. But this is by far the most reliable way to fix the UX.

## What type of PR is this? (check all applicable)

- [x] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ ] No

## Description

## Related Tickets & Documents

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings

## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Description

The logic that introduced a circular import was actually extraneous, so I have removed it entirely.

This fixes the frontend lint test.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ ] No

## Description

## Related Tickets & Documents

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings

## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

This is the 3.1.0 release candidate. Minor bugfixes will be applied here during testing and then merged into main upon release.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [x] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Description

- Workflows are saved to image files directly

- Image-outputting nodes have an `Embed Workflow` checkbox which, if enabled, saves the workflow

- `BaseInvocation` now has a `workflow: Optional[str]` field, so all nodes automatically have the field (but again, only image-outputting nodes display this in the UI)

- If this field is enabled, the workflow is stringified and set in this field when the graph is created

- Nodes should add `workflow=self.workflow` when they save their output image to have the workflow written to the image

- Uploads now have their metadata retained, so that you can upload somebody else's image and have access to that workflow

- Graphs are no longer saved to images; workflows replace them
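The PR does not say how the workflow is written into the image. Assuming PNG output, one stdlib-only option is a PNG `tEXt` chunk placed right after `IHDR`. The sketch below writes such a chunk and reads it back; an imaging library such as Pillow can do the same. The `invokeai_workflow` key and all function names are hypothetical.

```python
import json
import struct
import zlib
from typing import Any, Dict, Iterator, Optional, Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
WORKFLOW_KEY = "invokeai_workflow"  # hypothetical metadata key


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Length, type, data, then CRC-32 computed over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def iter_chunks(png: bytes) -> Iterator[Tuple[bytes, bytes]]:
    pos = len(PNG_SIGNATURE)
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos : pos + 4])
        yield png[pos + 4 : pos + 8], png[pos + 8 : pos + 8 + length]
        pos += 12 + length  # 4-byte length + 4-byte type + data + 4-byte CRC


def embed_workflow(png: bytes, workflow: Dict[str, Any]) -> bytes:
    """Return a copy of `png` with the workflow JSON in a tEXt chunk after IHDR."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    # json.dumps escapes non-ASCII by default, so the payload is valid Latin-1.
    payload = WORKFLOW_KEY.encode("latin-1") + b"\x00" + json.dumps(workflow).encode("latin-1")
    ihdr_end = len(PNG_SIGNATURE) + 4 + 4 + 13 + 4  # IHDR data is always 13 bytes
    return png[:ihdr_end] + make_chunk(b"tEXt", payload) + png[ihdr_end:]


def read_workflow(png: bytes) -> Optional[Dict[str, Any]]:
    for chunk_type, data in iter_chunks(png):
        if chunk_type == b"tEXt":
            key, _, value = data.partition(b"\x00")
            if key == WORKFLOW_KEY.encode("latin-1"):
                return json.loads(value.decode("latin-1"))
    return None


# A 1x1 grayscale PNG built by hand for the demo.
tiny_png = (
    PNG_SIGNATURE
    + make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    + make_chunk(b"IDAT", zlib.compress(b"\x00\x00"))  # filter byte + one pixel
    + make_chunk(b"IEND", b"")
)
print(read_workflow(embed_workflow(tiny_png, {"name": "demo", "nodes": []})))
# {'name': 'demo', 'nodes': []}
```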

### TODO

- Images created in the linear UI do not have a workflow saved yet. We need to write a function to build a workflow around the linear UI graph when using linear tabs. Unfortunately, it will not have the nice positioning and size data the node editor gives you when you save a workflow... we'll have to figure out how to handle this.

## Related Tickets & Documents

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings

All invocation metadata (type, title, tags and category) are now defined in decorators.

The decorators add the `type: Literal["invocation_type"] = "invocation_type"` field to the invocation.

Category is a new piece of invocation metadata, but it is not used by the frontend just yet.

- `@invocation()` decorator for invocations

```py
@invocation(
    "sdxl_compel_prompt",
    title="SDXL Prompt",
    tags=["sdxl", "compel", "prompt"],
    category="conditioning",
)
class SDXLCompelPromptInvocation(BaseInvocation, SDXLPromptInvocationBase):
    ...
```

- `@invocation_output()` decorator for invocation outputs

```py
@invocation_output("clip_skip_output")
class ClipSkipInvocationOutput(BaseInvocationOutput):
    ...
```

- update invocation docs

- add category to decorator

- regen frontend types
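The real decorators build pydantic models with a `Literal` `type` field. The hypothetical `register_invocation` below is a simplified, stdlib-only sketch of the registration idea. It attaches the type string and UI metadata to a plain class and rejects duplicate type names; the duplicate check is an assumption.

```python
from typing import Callable, Dict, List, Optional, TypeVar

T = TypeVar("T", bound=type)

# Hypothetical registry: invocation type string -> class.
INVOCATION_REGISTRY: Dict[str, type] = {}


def register_invocation(
    invocation_type: str,
    title: Optional[str] = None,
    tags: Optional[List[str]] = None,
    category: Optional[str] = None,
) -> Callable[[T], T]:
    """Attach the type string and UI metadata to a class and register it."""

    def wrapper(cls: T) -> T:
        if invocation_type in INVOCATION_REGISTRY:
            raise ValueError(f'Invocation type "{invocation_type}" is already registered')
        # Stands in for the `type: Literal[...] = ...` field the real decorator adds.
        setattr(cls, "type", invocation_type)
        setattr(cls, "ui_metadata", {"title": title or cls.__name__, "tags": list(tags or []), "category": category})
        INVOCATION_REGISTRY[invocation_type] = cls
        return cls

    return wrapper


@register_invocation("sdxl_compel_prompt", title="SDXL Prompt", tags=["sdxl", "compel", "prompt"], category="conditioning")
class SDXLPromptSketch:
    pass


print(SDXLPromptSketch.type)  # sdxl_compel_prompt
```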

## What type of PR is this? (check all applicable)

- [x] Feature

- [x] Bug Fix

## Have you discussed this change with the InvokeAI team?

- [x] Yes

## Description

- Keep Boards Modal open by default.

- Combine Coherence and Mask settings under Compositing

- Auto Change Dimensions based on model type (option)

- Size resets are now model dependent

- Add Set Control Image Height & Width to Width and Height option.

- Fix numerous color & spacing issues (especially those pertaining to sliders being too close to the bottom)

- Add Lock Ratio Option

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ ] No

## Description

## Related Tickets & Documents

## QA Instructions, Screenshots, Recordings

## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [X] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

In current main, long prompts and support for [Compel's `.and()` syntax](https://github.com/damian0815/compel/blob/main/doc/syntax.md#conjunction) are missing. This PR adds them back.

### needs Compel>=2.0.2.dev1

## What type of PR is this? (check all applicable)

- [x] Feature

## Have you discussed this change with the InvokeAI team?

- [x] Yes

## Description

Send stuff directly from canvas to ControlNet

## Usage

- Two new buttons on the canvas ControlNet let you import the image and the mask.

- Click them.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [X] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [X] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ ] No

## Description

Adds Next and Prev Buttons to the current image node

As usual, you don't have to use them 😄

## Related Tickets & Documents

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings

## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [X] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [X] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [X] No

## Description

Adds Seamless back into the options for Denoising.

## Related Tickets & Documents

- Related Issue #3975

## QA Instructions, Screenshots, Recordings

- Should test X, Y, and XY seamless tiling for all model architectures.

## Added/updated tests?

- [ ] Yes

- [X] No: Will need some guidance on automating this.

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ ] No

## Description

Allow an image and an action to be passed into the app to set its starting state.

## Related Tickets & Documents

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings

## Added/updated tests?

- [ ] Yes

- [ ] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [x] No

## Description

Fix masked generation with inpaint models

## Related Tickets & Documents

- Closes #4295

## Added/updated tests?

- [ ] Yes

- [x] No

Added a node to prompt Oobabooga Text-Generation-Webui

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [ ] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [x] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [x] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [x] Yes

- [ ] No

## Description

## Related Tickets & Documents

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings

## Added/updated tests?

- [ ] Yes

- [x] No : _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

Adds loading workflows with exhaustive validation via `zod`.

There is a load button but no dedicated save/load UI yet. Also need to add versioning to the workflow format itself.

## What type of PR is this? (check all applicable)

- [X] Refactor

- [X] Feature

## Have you discussed this change with the InvokeAI team?

- [X] Yes

## Have you updated all relevant documentation?

- [X] Yes

## Description

### Refactoring

This PR refactors `invokeai.app.services.config` to be easier to maintain by splitting the argument, environment and init file parsing code off from the InvokeAIAppConfig object. This will hopefully make it easier for people to find where the various settings are defined.

### New Features

In collaboration with @StAlKeR7779, I have renamed and reorganized the settings controlling image generation and model management to be more intuitive. The relevant portion of the init file now looks like this:

```
Model Cache:
  ram: 14.5
  vram: 0.5
  lazy_offload: true
Device:
  precision: auto
  device: auto
Generation:
  sequential_guidance: false
  attention_type: auto
  attention_slice_size: auto
  force_tiled_decode: false
```

Key differences are:

1. Split `Performance/Memory` into `Device`, `Generation` and `Model Cache`

2. Added the ability to force the `device`. The value of this option is one of {`auto`, `cpu`, `cuda`, `cuda:1`, `mps`}

3. Added the ability to force the `attention_type`. Possible values are {`auto`, `normal`, `xformers`, `sliced`, `torch-sdp`}

4. Added the ability to force the `attention_slice_size` when `sliced` attention is in use. The value of this option is one of {`auto`, `max`} or an integer between 1 and 8.
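Here is a sketch of validating the three new options against the values listed above. The function names are hypothetical. Accepting any `cuda:<index>`, not just `cuda:1`, is an assumption.

```python
import re
from typing import Union

DEVICES = {"auto", "cpu", "cuda", "mps"}
ATTENTION_TYPES = {"auto", "normal", "xformers", "sliced", "torch-sdp"}


def validate_device(value: str) -> str:
    # Assumption: any explicit CUDA index is allowed, generalizing the `cuda:1` example.
    if value in DEVICES or re.fullmatch(r"cuda:\d+", value):
        return value
    raise ValueError(f"invalid device: {value!r}")


def validate_attention_type(value: str) -> str:
    if value not in ATTENTION_TYPES:
        raise ValueError(f"invalid attention_type: {value!r}")
    return value


def validate_attention_slice_size(value: Union[str, int]) -> Union[str, int]:
    """Accept "auto", "max", or an integer from 1 to 8 inclusive."""
    if value in ("auto", "max"):
        return value
    # bool is a subclass of int, so exclude it explicitly.
    if isinstance(value, int) and not isinstance(value, bool) and 1 <= value <= 8:
        return value
    raise ValueError(f"invalid attention_slice_size: {value!r}")
```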

@StAlKeR7779 Please confirm that I wired the `attention_type` and `attention_slice_size` configuration options to the diffusers backend correctly.

In addition, I have exposed the generation-related configuration options in the TUI.

### Backward Compatibility

This refactor should be backward compatible with earlier versions of `invokeai.yaml`. If the user re-runs the `invokeai-configure` script, `invokeai.yaml` will be upgraded to the current format. Several configuration attributes had to be changed in order to preserve backward compatibility. These attributes have been changed in the code where appropriate. For the record:

| Old Name | Preferred New Name | Comment |
| ------------| ---------------|------------|
| `max_cache_size` | `ram_cache_size` | |
| `max_vram_cache` | `vram_cache_size` | |
| `always_use_cpu` | `use_cpu` | Better to check `conf.device == "cpu"` |
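Below is a minimal sketch of renaming legacy keys with the mapping from the table. It assumes a flat settings dict, although the real file groups keys under categories. `migrate_settings` is hypothetical. When both an old and a new key are present, it keeps the new one.

```python
from typing import Any, Dict

# Old name -> preferred new name, as listed in the table.
LEGACY_KEYS = {
    "max_cache_size": "ram_cache_size",
    "max_vram_cache": "vram_cache_size",
    "always_use_cpu": "use_cpu",
}


def migrate_settings(settings: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy with legacy keys renamed; an explicit new key wins."""
    migrated: Dict[str, Any] = {}
    for key, value in settings.items():
        new_key = LEGACY_KEYS.get(key, key)
        if new_key != key and new_key in settings:
            continue  # both old and new names present: keep the new one
        migrated[new_key] = value
    return migrated


print(migrate_settings({"max_cache_size": 6.0, "precision": "auto"}))
# {'ram_cache_size': 6.0, 'precision': 'auto'}
```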

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Description

[fix(stats): fix fail case when previous graph is invalid](d1d2d5a47d)

When retrieving a graph, it is parsed through pydantic. It is possible that this graph is invalid, and an error is thrown.

Handle this by deleting the failed graph from the stats if this occurs.
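The fix can be sketched under two assumptions. First, stats live in a dict keyed by graph id. Second, `retrieve_graph` stands in for the pydantic-parsing lookup that can raise. `collect_stats` is a hypothetical name.

```python
from typing import Any, Callable, Dict

GraphStats = Dict[str, Dict[str, float]]  # graph id -> node type -> seconds


def collect_stats(stats: GraphStats, retrieve_graph: Callable[[str], Any]) -> GraphStats:
    """Return stats for graphs that still parse; delete entries whose graph is invalid."""
    valid: GraphStats = {}
    for graph_id in list(stats):  # copy the keys, since we delete while iterating
        try:
            retrieve_graph(graph_id)  # parsing may raise if the graph is invalid
        except Exception:
            # Drop the failed graph so it cannot break stats for later invocations.
            del stats[graph_id]
            continue
        valid[graph_id] = stats[graph_id]
    return valid


def fake_retrieve(graph_id: str) -> Dict[str, str]:
    if graph_id == "bad":
        raise ValueError("graph failed validation")
    return {"id": graph_id}


stats: GraphStats = {"good": {"img_resize": 0.12}, "bad": {"img_resize": 0.05}}
print(collect_stats(stats, fake_retrieve))  # {'good': {'img_resize': 0.12}}
print(list(stats))  # ['good']
```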

[fix(stats): fix InvocationStatsService types](1b70bd1380)

- move docstrings to ABC

- `start_time: int` -> `start_time: float`

- remove class attribute assignments in `StatsContext`

- add `update_mem_stats()` to ABC

- add class attributes to ABC, because they are referenced in instances of the class. If they should not be on the ABC, then maybe there needs to be some restructuring
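The following is a cut-down sketch of the type changes. The ABC carries the docstrings and declares `update_mem_stats()`, per-execution state lives on the `StatsContext` instance, and `start_time` is a `float` because `time.time()` returns one. Apart from `StatsContext` and `update_mem_stats`, every name and signature here is hypothetical.

```python
import time
from abc import ABC, abstractmethod
from typing import Dict


class StatsServiceBase(ABC):
    """Hypothetical cut-down stats ABC; docstrings live here, not on subclasses."""

    @abstractmethod
    def record_time(self, node_type: str, seconds: float) -> None:
        """Accumulate wall-clock time spent in a node type."""

    @abstractmethod
    def update_mem_stats(self, ram_used_gb: float, ram_changed_gb: float) -> None:
        """Record RAM usage after a node finishes."""


class InMemoryStats(StatsServiceBase):
    def __init__(self) -> None:
        self.timings: Dict[str, float] = {}
        self.ram_used_gb = 0.0
        self.ram_changed_gb = 0.0

    def record_time(self, node_type: str, seconds: float) -> None:
        self.timings[node_type] = self.timings.get(node_type, 0.0) + seconds

    def update_mem_stats(self, ram_used_gb: float, ram_changed_gb: float) -> None:
        self.ram_used_gb = ram_used_gb
        self.ram_changed_gb = ram_changed_gb


class StatsContext:
    """Times one node execution; state is per instance, not class attributes."""

    def __init__(self, node_type: str, service: StatsServiceBase) -> None:
        self.node_type = node_type
        self.service = service
        self.start_time: float = 0.0  # time.time() returns a float, hence not int

    def __enter__(self) -> "StatsContext":
        self.start_time = time.time()
        return self

    def __exit__(self, *exc_info: object) -> None:
        self.service.record_time(self.node_type, time.time() - self.start_time)
```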

## QA Instructions, Screenshots, Recordings

On `main` (not this PR), create a situation in which a graph is valid but will be rendered invalid on invoke. An easy way to do this in the node editor:

- create an `Integer Primitive` node, set value to 3

- create a `Resize Image` node and add an image to it

- route the output of `Integer Primitive` to the `width` of `Resize Image`

- Invoke - this will first cause a `Validation Error` (expected), and if you inspect the error in the JS console, you'll see it is a "session retrieval error"

- Invoke again - this will also cause a `Validation Error`, but if you inspect the error you should see it originates in the stats module (this is the error this PR fixes)

- Fix the graph by setting the `Integer Primitive` to 512

- Invoke again - you get the same `Validation Error` originating from stats, even though there are no issues

Switch to this PR, and you should only ever get the `Validation Error` that is classified as a "session retrieval error".

It is `"invocation"` for invocations and `"output"` for outputs. Clients may use this to confidently and positively identify if an OpenAPI schema object is an invocation or output, instead of using a potentially fragile heuristic.

Doing this via `BaseInvocation`'s `Config.schema_extra()` means all clients get an accurate OpenAPI schema.

Shifts the responsibility of correct types to the backend, where previously it was on the client.

Doing this via these classes' `Config.schema_extra()` method makes it unintrusive and clients will get the correct types for these properties.

Shifts the responsibility of correct types to the backend, where previously it was on the client.

The `type` property is required on all of them, but because this is defined in pydantic as a Literal, it is not required in the OpenAPI schema. Easier to fix this by changing the generated types than fiddling around with pydantic.

Previously, if an image was used in nodes and you deleted it, the entire node editor would be reset. The same was true for ControlNet.

Now it only resets the specific nodes or controlnets that used that image.
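The targeted reset can be sketched as a pass over node fields that clears only the fields referencing the deleted image. The node and field layout, and the `image_name` key, are assumptions about the data shape. `clear_deleted_image` is hypothetical.

```python
from typing import Any, Dict, List

NodeFields = Dict[str, Dict[str, Any]]  # node id -> field name -> value


def clear_deleted_image(nodes: NodeFields, deleted_image: str) -> List[str]:
    """Reset only fields that reference `deleted_image`; return the affected node ids."""
    affected: List[str] = []
    for node_id, fields in nodes.items():
        hit = False
        for field_name, value in fields.items():
            # Assumed image-field shape: {"image_name": "..."}
            if isinstance(value, dict) and value.get("image_name") == deleted_image:
                fields[field_name] = None  # replacing a value does not resize the dict
                hit = True
        if hit:
            affected.append(node_id)
    return affected


nodes = {
    "resize": {"image": {"image_name": "x.png"}, "width": 512},
    "blur": {"image": {"image_name": "y.png"}},
}
print(clear_deleted_image(nodes, "x.png"))  # ['resize']
```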

Add "nodrag", "nowheel" and "nopan" class names to interactive elements as needed. This fixes the mouse interactions and also makes the node draggable from anywhere without needing shift.

Also fixes ctrl/cmd multi-select to support deselecting.

## What type of PR is this? (check all applicable)

- [X] Feature

- [X] Bug Fix

## Have you discussed this change with the InvokeAI team?

- [X] Yes

## Have you updated all relevant documentation?

- [X] Yes

## Description

Follow symbolic links when auto-importing from a directory. Previously, links to files worked, but links to directories weren't entered during the scanning/import process.
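The PR does not show the scanning code. If the scan is based on `os.walk`, whose `followlinks` parameter defaults to `False`, passing `followlinks=True` is one way to get this behavior. The sketch also prunes directories it has already visited (by real path), so symlink cycles cannot loop forever. `scan_for_models` and the suffix list are hypothetical.

```python
import os
from pathlib import Path
from typing import Iterator, Set

MODEL_SUFFIXES = {".safetensors", ".ckpt", ".pt", ".pth", ".bin"}


def scan_for_models(root: str) -> Iterator[Path]:
    """Yield model files under `root`, descending into symlinked directories."""
    visited: Set[str] = set()
    for dirpath, dirnames, filenames in os.walk(root, followlinks=True):
        real = os.path.realpath(dirpath)
        if real in visited:
            dirnames[:] = []  # reached again through a link: prune to avoid cycles
            continue
        visited.add(real)
        for name in filenames:
            if Path(name).suffix.lower() in MODEL_SUFFIXES:
                yield Path(dirpath) / name
```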

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [ ] No, because:

## Have you updated all relevant documentation?

- [ ] Yes

- [ ] No

## Description

## Related Tickets & Documents

- Related Issue #

- Closes #

## QA Instructions, Screenshots, Recordings


## Added/updated tests?

- [ ] Yes

- [ ] No: _please replace this line with details on why tests have not been included_

## [optional] Are there any post deployment tasks we need to perform?

This should be removed once it has been added to diffusers:

https://github.com/huggingface/diffusers/pull/4599

## What type of PR is this? (check all applicable)

- [x] Feature

## Have you discussed this change with the InvokeAI team?

- [x] Yes

## Description

PR to add Seam Painting back to the Canvas.

## TODO Later

While the graph works as intended, it has become extremely large and complex. I don't know if there's a simpler way to do this. Maybe there is, but there are so many connections that visualizing the graph in my head is extremely difficult. We might need to create some kind of tooling for this, because it's only going to get crazier.

But it works for now.

## What type of PR is this? (check all applicable)

- [X] Feature

## Have you discussed this change with the InvokeAI team?

- [X] Yes

## Have you updated all relevant documentation?

- [X] Yes

## Description

This PR enhances the logging of performance statistics to include RAM and model cache information. After each generation, the following is logged; the new information appears after `TOTAL GRAPH EXECUTION TIME`.

```
[2023-08-15 21:55:39,010]::[InvokeAI]::INFO --> Graph stats: 2408dbec-50d0-44a3-bbc4-427037e3f7d4
[2023-08-15 21:55:39,010]::[InvokeAI]::INFO --> Node Calls Seconds VRAM Used
[2023-08-15 21:55:39,010]::[InvokeAI]::INFO --> main_model_loader 1 0.004s 0.000G
[2023-08-15 21:55:39,010]::[InvokeAI]::INFO --> clip_skip 1 0.002s 0.000G
[2023-08-15 21:55:39,010]::[InvokeAI]::INFO --> compel 2 2.706s 0.246G
[2023-08-15 21:55:39,010]::[InvokeAI]::INFO --> rand_int 1 0.002s 0.244G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> range_of_size 1 0.002s 0.244G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> iterate 1 0.002s 0.244G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> metadata_accumulator 1 0.002s 0.244G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> noise 1 0.003s 0.244G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> denoise_latents 1 2.429s 2.022G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> l2i 1 1.020s 1.858G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> TOTAL GRAPH EXECUTION TIME: 6.171s
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> RAM used by InvokeAI process: 4.50G (delta=0.10G)
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> RAM used to load models: 1.99G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> VRAM in use: 0.303G
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> RAM cache statistics:
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> Model cache hits: 2
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> Model cache misses: 5
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> Models cached: 5
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> Models cleared from cache: 0
[2023-08-15 21:55:39,011]::[InvokeAI]::INFO --> Cache high water mark: 1.99/7.50G
```

There may be a memory leak in InvokeAI. I'm seeing the process memory usage increase by about 100 MB with each generation, as shown by `delta=0.10G` in the example above.
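The "RAM cache statistics" lines in the log above could be backed by a few counters. This is a hypothetical sketch (class and method names invented, sizes in bytes, and 1 GB assumed to be 2**30 bytes), not the model cache's real implementation; it only shows how hits, misses, evictions, and a high water mark relate.

```python
from dataclasses import dataclass, field

GIG = 1 << 30  # assumption: GB reported as 2**30 bytes


@dataclass
class CacheStats:
    """Hypothetical counters behind the 'RAM cache statistics' log block."""

    max_bytes: int  # configured cache size
    hits: int = 0
    misses: int = 0
    cleared: int = 0
    in_use: int = 0
    high_watermark: int = 0
    cached: dict[str, int] = field(default_factory=dict)  # model key -> size in bytes

    def lookup(self, key: str, size: int) -> None:
        if key in self.cached:
            self.hits += 1
            return
        self.misses += 1
        self.cached[key] = size
        self.in_use += size
        # Peak RAM held by the cache, reported as the high water mark.
        self.high_watermark = max(self.high_watermark, self.in_use)

    def evict(self, key: str) -> None:
        self.in_use -= self.cached.pop(key)
        self.cleared += 1

    def report(self) -> list[str]:
        return [
            f"Model cache hits: {self.hits}",
            f"Model cache misses: {self.misses}",
            f"Models cached: {len(self.cached)}",
            f"Models cleared from cache: {self.cleared}",
            f"Cache high water mark: {self.high_watermark / GIG:.2f}/{self.max_bytes / GIG:.2f}G",
        ]
```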

Previously, the editor prop-drilled node data and templates to get values deep into nodes. This caused very noticeable performance degradation; for example, all text entry fields were super laggy.

Refactor the whole thing to use memoized selectors via hooks. The hooks are mostly very narrow, returning only the data needed.

Data objects are never passed down; only the node ID and field name are, and sometimes the field kind ('input' or 'output').

The end result is a *much* smoother node editor with very minimal rerenders.
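The memoized-selector idea is sketched below in Python for illustration; the frontend itself is TypeScript, where a library such as reselect's `createSelector` recomputes only when its input selectors return new references. `create_selector` and `make_field_value_selector` here are hypothetical names, and the state shape is simplified.

```python
from typing import Any, Callable


def create_selector(
    input_fn: Callable[[Any], Any], result_fn: Callable[[Any], Any]
) -> Callable[[Any], Any]:
    """Memoized selector: rerun result_fn only when input_fn returns a new object."""
    last_input: Any = object()  # sentinel that never matches a real input
    last_result: Any = None

    def selector(state: Any) -> Any:
        nonlocal last_input, last_result
        current = input_fn(state)
        if current is not last_input:  # reference check, like reselect's default
            last_input = current
            last_result = result_fn(current)
        return last_result

    return selector


def make_field_value_selector(node_id: str, field_name: str) -> Callable[[Any], Any]:
    # Components receive only IDs; the selector pulls out the single value they need.
    return create_selector(
        lambda state: state["nodes"][node_id],
        lambda node: node["inputs"][field_name],
    )
```

Because unrelated parts of the state don't change the selected node's reference, a component subscribed this way returns the cached value and does not rerender.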

There is a tricky mouse event interaction between Chakra's `useOutsideClick()` hook (used by Chakra's `<Menu />`) and reactflow: the hook doesn't work when you click the main reactflow area.

To get around this, I've used a dirty hack, copy-pasting the simple context menu component we use, and extending it slightly to respond to a global `contextMenusClosed` redux action.

- also implement pessimistic updates for starring, only changing the images that were successfully updated by the backend

- some autoformat changes crept in
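A pessimistic update waits for the backend and then touches only what it confirmed. The sketch below assumes, hypothetically, that the response lists the names of the images it actually updated; the real response shape may differ.

```python
def apply_star_update(
    images: dict[str, dict], updated_names: list[str], starred: bool
) -> dict[str, dict]:
    """Pessimistic update: change only the images the backend confirmed."""
    confirmed = set(updated_names)
    return {
        name: {**image, "starred": starred} if name in confirmed else image
        for name, image in images.items()
    }
```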

If `reactflow` initializes before the node templates are parsed, edges may not be rendered and the viewport may get reset.

- Add `isReady` state to `NodesState`. This is false when we are loading or parsing node templates and true when that is finished.

- Conditionally render `reactflow` based on `isReady`.

- Add `viewport` to `NodesState` & handlers to keep it synced. This allows `reactflow` to mount and unmount freely without losing the viewport.

Refine concept of "parameter" nodes to "primitives":

- integer

- float

- string

- boolean

- image

- latents

- conditioning

- color

Each primitive has:

- A field definition, if it is not already a Python primitive value. The field is how this primitive value is passed between nodes. Collections are lists of the field in node definitions. ex: `ImageField` & `list[ImageField]`

- A single output class. ex: `ImageOutput`

- A collection output class. ex: `ImageCollectionOutput`

- A node, which functions to load or pass on the primitive value. ex: `ImageInvocation` (in this case, `ImageInvocation` replaces `LoadImage`)

Plus a number of related changes:

- Reorganize these into `primitives.py`

- Update all nodes and logic to use primitives

- Consolidate "prompt" outputs into "string" & "mask" into "image" (there's no reason for these to be different; they function identically)

- Update default graphs & tests

- Regen frontend types & minor frontend tidy related to changes
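The four parts of a primitive can be sketched with stdlib dataclasses. The real classes are pydantic models built on InvokeAI's invocation base classes; the shapes below are simplified assumptions. Image needs its own field type, while integer, already a Python primitive, does not.

```python
from dataclasses import dataclass


# Image: not a Python primitive, so it needs a field type that is passed between nodes.
@dataclass
class ImageField:
    image_name: str  # a reference to an image on disk, not pixel data


@dataclass
class ImageOutput:
    image: ImageField


@dataclass
class ImageCollectionOutput:
    collection: list[ImageField]  # collections are lists of the field


@dataclass
class ImageInvocation:
    """Primitive node that loads an image and passes it on."""

    image: ImageField

    def invoke(self) -> ImageOutput:
        return ImageOutput(image=self.image)


# Integer: already a Python primitive, so no separate field type is needed.
@dataclass
class IntegerOutput:
    value: int


@dataclass
class IntegerCollectionOutput:
    collection: list[int]


@dataclass
class IntegerInvocation:
    value: int

    def invoke(self) -> IntegerOutput:
        return IntegerOutput(value=self.value)
```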

## What type of PR is this? (check all applicable)

- [X] Bug Fix

## Have you discussed this change with the InvokeAI team?

- [X] Yes

## Have you updated all relevant documentation?

- [X] Yes

## Description

On Windows systems, model merging was crashing at the very last step with an error related to not being able to serialize a WindowsPath object. I have converted the path that is passed to `save_pretrained` into a string, which I believe will solve the problem.

Note that I had to rebuild the web frontend and add it to the PR in order to test on my Windows VM, which does not have the full node stack installed due to space limitations.
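The general failure mode is easy to reproduce with the standard `json` encoder, which rejects path objects but accepts strings. This sketch assumes, without confirmation from the PR, that the crash came from JSON-encoding the path somewhere downstream; the path and `save_path` key are hypothetical.

```python
import json
from pathlib import PureWindowsPath


def is_json_serializable(value: object) -> bool:
    """True if the standard json encoder accepts `value` as-is."""
    try:
        json.dumps(value)
    except TypeError:
        return False
    return True


# PureWindowsPath behaves like the WindowsPath objects created on Windows,
# but can be constructed on any OS.
merged_path = PureWindowsPath(r"C:\invokeai\models\merged")

# Converting to str before handing the path on sidesteps the serialization error.
config = {"save_path": str(merged_path)}
```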

## Related Tickets & Documents

https://discord.com/channels/1020123559063990373/1042475531079262378/1140680788954861698

## What type of PR is this? (check all applicable)

- [ ] Refactor

- [ ] Feature

- [x] Bug Fix

- [ ] Optimization

- [ ] Documentation Update

- [ ] Community Node Submission

## Have you discussed this change with the InvokeAI team?

- [ ] Yes

- [x] No, because: it's smol

## Have you updated all relevant documentation?

- [ ] Yes

- [x] No

## Description

`docker_entrypoint.sh` does not quote variable expansions to prevent word splitting, causing paths with spaces to fail, as in #3913.
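The effect of quoting can be demonstrated with Python's `shlex`, which tokenizes a command line using POSIX shell rules. This is an analogy rather than the shell's actual expansion step (real word splitting happens on `$IFS` after expansion), and `some_command` and the `/mnt/My Models` path are hypothetical.

```python
import shlex

# Hypothetical value; imagine it came from an environment variable such as $ROOT.
root = "/mnt/My Models"

# Like `some_command --root $ROOT`: the expanded value is split on the space.
unquoted = shlex.split(f"some_command --root {root}")

# Like `some_command --root "$ROOT"`: the value stays a single argument.
quoted = shlex.split(f'some_command --root "{root}"')

# shlex.quote() produces a shell-safe token when building a command string in Python.
safe = shlex.split(f"some_command --root {shlex.quote(root)}")
```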

## Related Tickets & Documents

#3913


- Related Issue #3913

- Closes #3913

## QA Instructions, Screenshots, Recordings
