PR for the Model Manager UI work related to 3.0

[DONE]

- Update ModelType Config names to be specific so that the front end can parse them correctly.

- Rebuild frontend schema to reflect these changes.

- Update Linear UI Text To Image and Image to Image to work with the new model loader.

- Updated the ModelInput component in the Node Editor to work with the new changes.

[TODO REMEMBER]

- Add proper types for ModelLoaderType in `ModelSelect.tsx`

[TODO]

- Everything else.

Basically updated all slices to be more descriptive in their names. I did this to make sure there's a good naming scheme available for secondary models.

To determine whether the Load More button should work, we need to keep track of how many images are left to load for a given board or category.

The Assets tab doesn't work, though. Need to figure out a better way to handle this.
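Here is a minimal sketch of that bookkeeping. It assumes the server reports a total image count for each board or category. `BoardPagination` and its fields are hypothetical names used for illustration, not the app's actual state shape.

```python
from dataclasses import dataclass


@dataclass
class BoardPagination:
    """Hypothetical per-board (or per-category) pagination state."""

    total: int  # total images the server reports for this board/category
    loaded: int = 0  # images fetched into the client so far

    @property
    def remaining(self) -> int:
        return max(self.total - self.loaded, 0)

    @property
    def can_load_more(self) -> bool:
        # The Load More button is only useful while images remain.
        return self.remaining > 0

    def page_loaded(self, count: int) -> None:
        # Never count more images than the server says exist.
        self.loaded = min(self.loaded + count, self.total)


board = BoardPagination(total=45)
board.page_loaded(20)
board.page_loaded(20)
print(board.remaining, board.can_load_more)  # 5 True
board.page_loaded(20)
print(board.remaining, board.can_load_more)  # 0 False
```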

We need to access the initial image dimensions during the creation of the `ImageToImage` graph to determine if we need to resize the image.

Because the `initialImage` is now just an image name, we need to either store (easy) or dynamically retrieve its dimensions during graph creation (a bit less easy).

Took the easiest path. May need to revise this in the future.
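The "store it" approach can be sketched as follows. The sketch assumes that the stored dimensions are compared with the requested output size when the graph is built. `InitialImage` and `needs_resize` are hypothetical names, and the real resize decision may consider more than an exact size match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InitialImage:
    """Hypothetical state entry: the image name plus dimensions stored alongside it."""

    image_name: str
    width: int
    height: int


def needs_resize(init: InitialImage, width: int, height: int) -> bool:
    """Return True if the init image does not already match the generation size."""
    return (init.width, init.height) != (width, height)


init = InitialImage("abc123.png", width=768, height=512)
print(needs_resize(init, 512, 512))  # True
print(needs_resize(init, 768, 512))  # False
```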

Images that are used as parameters (e.g. init image, canvas images) are stored as full `ImageDTO` objects in state, separate from and duplicating any object representing those same images in the `imagesSlice`.

We cannot store only image names as parameters, then pull the full `ImageDTO` from `imagesSlice`, because if an image is not on a loaded page, it doesn't exist in `imagesSlice`. For example, if you scroll down a few pages in the gallery and send an image from there to canvas, then on reloading the app, the canvas will be unable to load that image.

We solved this temporarily by storing the full `ImageDTO` object wherever it was needed, but this is both inefficient and allows for stale `ImageDTO`s across the app.

One other possible solution was to just fetch the `ImageDTO` for all images at startup, and insert them into the `imagesSlice`, but then we run into an issue where we are displaying images in the gallery totally out of context.

For example, if an image from several pages into the gallery was sent to canvas, and the user refreshes, we'd display the first 20 images in gallery. Then to populate the canvas, we'd fetch that image we sent to canvas and add it to `imagesSlice`. Now we'd have 21 images in the gallery: 1 to 20 and whichever image we sent to canvas. Weird.

Using `rtk-query` solves this by allowing us to very easily fetch individual images in the components that need them, and not directly interact with `imagesSlice`.

This commit changes all references to images-as-parameters to store only the name of the image, and not the full `ImageDTO` object. Then, we use an `rtk-query` generated `useGetImageDTOQuery()` hook in each of those components to fetch the image.

We can use cache invalidation when we mutate any image to trigger automated re-running of the query and all the images are automatically kept up to date.

This also obviates the need for the convoluted URL fetching scheme for images that are used as parameters. Unfortunately, the `imagesSlice` still needs this handling.
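To make the invalidation idea concrete, here is a toy cache that keeps only image names in state, fetches the full DTO on demand, and drops an entry when that image is mutated. It is only a loose analogy to rtk-query's tag invalidation, not its API. In particular, rtk-query re-runs active queries automatically, whereas this sketch simply re-fetches on the next read. `ImageDTOCache` and the `board_id` field are hypothetical.

```python
from typing import Callable, Dict


class ImageDTOCache:
    """Toy cache keyed by image name: fetch on first read, drop on mutation."""

    def __init__(self, fetch: Callable[[str], dict]) -> None:
        self._fetch = fetch
        self._entries: Dict[str, dict] = {}

    def get_image_dto(self, image_name: str) -> dict:
        # State holds only the name; the full DTO is fetched on demand and cached.
        if image_name not in self._entries:
            self._entries[image_name] = self._fetch(image_name)
        return self._entries[image_name]

    def invalidate(self, image_name: str) -> None:
        # Called after any mutation of this image, so the next read re-fetches it.
        self._entries.pop(image_name, None)


# Fake server-side store and a fetch function that records each request.
server = {"a.png": {"image_name": "a.png", "board_id": None}}
calls = []


def fetch(image_name: str) -> dict:
    calls.append(image_name)
    return dict(server[image_name])


cache = ImageDTOCache(fetch)
cache.get_image_dto("a.png")
cache.get_image_dto("a.png")  # served from the cache, no second request
server["a.png"]["board_id"] = "favorites"  # a mutation on the server...
cache.invalidate("a.png")  # ...invalidates the cached DTO
print(cache.get_image_dto("a.png")["board_id"], len(calls))  # favorites 2
```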

Added SDE schedulers.

Problem: they add random noise at each step, so to get a consistent image we need to provide a seed or generator.

I've done it this way, but if you think it would be better done another way, feel free to change it.

Also made ancestral schedulers reproducible, in the same way as for the SDE schedulers.
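A toy one-dimensional loop shows why the seed or generator matters. Each step injects fresh noise, so two runs only match when that noise comes from identically seeded generators. This is not the real scheduler math. `ancestral_sample` is a hypothetical stand-in, and in the actual code the role of `random.Random` would be played by a seeded torch generator (or seed) handed to the scheduler.

```python
import random
from typing import List, Optional


def ancestral_sample(x: float, steps: int, generator: Optional[random.Random] = None) -> List[float]:
    """Toy 'ancestral' loop: each step shrinks x, then adds fresh random noise.

    Without an explicit generator, a fresh unseeded one is used and the
    trajectory differs from run to run. A generator seeded from the user's
    seed makes every step's noise, and therefore the result, repeatable.
    """
    rng = generator if generator is not None else random.Random()
    trajectory = []
    for _ in range(steps):
        x = 0.9 * x + 0.1 * rng.gauss(0.0, 1.0)  # "denoise", then inject step noise
        trajectory.append(x)
    return trajectory


run_a = ancestral_sample(1.0, 10, random.Random(1234))
run_b = ancestral_sample(1.0, 10, random.Random(1234))
print(run_a == run_b)  # True
```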

- Add graph builders for canvas txt2img & img2img. They are mostly copied from the linear graph builders, but they differ in a few ways that are very tricky to work around, so I just made totally new functions for them.

- Canvas txt2img and img2img support ControlNet (not inpaint/outpaint). There's no way to determine in real time which mode the canvas is in just yet, so we cannot disable the ControlNet UI when the mode will be inpaint/outpaint; it will always display. It's possible to determine this in near-real-time, and I will add this at some point.

- Canvas inpaint/outpaint migrated to use model loader, though inpaint/outpaint are still using the non-latents nodes.

Instead of manually creating every node and edge, we can simply copy/paste the base graph from node editor, then sub in parameters.

This is a much more intelligible process. We still need to handle seed, img2img fit and controlnet separately.
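Here is a minimal sketch of the copy-then-substitute approach. It assumes the base graph is a plain nested dict exported from the node editor. The node ids, field names and `build_text_to_image_graph` are hypothetical. The point is that the template is deep-copied and only parameter values are written into the copy.

```python
import copy
from typing import Any, Dict

# Hypothetical base graph, shaped like a node-editor export. Node ids and
# field names are illustrative, not the app's actual schema.
BASE_TEXT_TO_IMAGE_GRAPH: Dict[str, Any] = {
    "nodes": {
        "positive_conditioning": {"type": "compel", "prompt": ""},
        "noise": {"type": "noise", "width": 512, "height": 512, "seed": 0},
        "text_to_latents": {"type": "t2l", "steps": 30, "cfg_scale": 7.5},
        "latents_to_image": {"type": "l2i"},
    },
    "edges": [
        ("positive_conditioning", "text_to_latents"),
        ("noise", "text_to_latents"),
        ("text_to_latents", "latents_to_image"),
    ],
}


def build_text_to_image_graph(prompt: str, steps: int, cfg_scale: float, width: int, height: int) -> Dict[str, Any]:
    # Deep-copy so the shared template is never mutated between invocations.
    graph = copy.deepcopy(BASE_TEXT_TO_IMAGE_GRAPH)
    nodes = graph["nodes"]
    nodes["positive_conditioning"]["prompt"] = prompt
    nodes["text_to_latents"].update(steps=steps, cfg_scale=cfg_scale)
    nodes["noise"].update(width=width, height=height)
    # Seed, img2img fit and ControlNet would still be handled separately.
    return graph


g = build_text_to_image_graph("a lighthouse at dusk", steps=20, cfg_scale=6.0, width=768, height=512)
print(g["nodes"]["text_to_latents"]["steps"])  # 20
print(BASE_TEXT_TO_IMAGE_GRAPH["nodes"]["text_to_latents"]["steps"])  # 30
```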

- Ports Schedulers to use IAIMantineSelect.

- Adds ability to favorite schedulers in Settings. Favorited schedulers show up at the top of the list.

- Adds IAIMantineMultiSelect component.

- Change SettingsSchedulers component to use IAIMantineMultiSelect instead of Chakra Menus.

- remove UI-specific state (the enabled schedulers) from redux, instead derive it in a selector

- simplify logic by putting schedulers in an object instead of an array

- rename `activeSchedulers` to `enabledSchedulers`

- remove need for `useEffect()` when `enabledSchedulers` changes by adding a listener for the `enabledSchedulersChanged` action/event to `generationSlice`

- increase type safety by making `enabledSchedulers` an array of `SchedulerParam`, which is created by the zod schema for scheduler
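When schedulers are keyed by name in an object, the displayed order can be derived on demand rather than stored in Redux. Here is a sketch of such a selector. It assumes favorites are stored as a list of scheduler names. `SCHEDULER_LABELS` and its entries are illustrative, not the app's real scheduler list.

```python
from typing import Dict, List

# Hypothetical scheduler map: keying by name makes lookups trivial.
SCHEDULER_LABELS: Dict[str, str] = {
    "ddim": "DDIM",
    "euler": "Euler",
    "euler_a": "Euler Ancestral",
    "dpmpp_2m": "DPM++ 2M",
    "dpmpp_2m_sde": "DPM++ 2M SDE",
}


def scheduler_options(favorites: List[str]) -> List[str]:
    """Derive the dropdown order instead of storing it: favorites first, then the rest."""
    fav = [name for name in favorites if name in SCHEDULER_LABELS]  # ignore unknown names
    rest = [name for name in SCHEDULER_LABELS if name not in fav]
    return fav + rest


print(scheduler_options(["dpmpp_2m", "euler"]))
# ['dpmpp_2m', 'euler', 'ddim', 'euler_a', 'dpmpp_2m_sde']
```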

- `DiskImageStorage` and `DiskLatentsStorage` have now both been updated to exclusively work with `Path` objects and not rely on the `os` lib to handle pathing-related functions.

- We now also validate the existence of the required image output folders and latent output folders to ensure that the app does not break in case the required folders get tampered with mid-session.

- Just overall general cleanup.

Tested it. There doesn't seem to be anything breaking.
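Here is a sketch of the `Path`-only storage with folder validation described above. `DiskStorageSketch` is a hypothetical stand-in for `DiskImageStorage`/`DiskLatentsStorage`. Re-validating on every write is an assumption about where the check happens.

```python
import tempfile
from pathlib import Path


class DiskStorageSketch:
    """Hypothetical stand-in for DiskImageStorage/DiskLatentsStorage, using only Path."""

    def __init__(self, output_folder: Path) -> None:
        self._output_folder = Path(output_folder)
        self._validate_storage_folders()

    def _validate_storage_folders(self) -> None:
        # Create (or recreate) the folder if it is missing.
        self._output_folder.mkdir(parents=True, exist_ok=True)

    def save(self, name: str, data: bytes) -> Path:
        # Re-validate on every write in case the folder was removed mid-session.
        self._validate_storage_folders()
        path = self._output_folder / name
        path.write_bytes(data)
        return path


with tempfile.TemporaryDirectory() as tmp:
    storage = DiskStorageSketch(Path(tmp) / "outputs" / "images")
    (Path(tmp) / "outputs" / "images").rmdir()  # simulate tampering mid-session
    saved = storage.save("0001.png", b"\x89PNG")
    print(saved.exists())  # True
```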

- remove `image_origin` from most places where we interact with images

- consolidate image file storage into a single `images/` dir

Images have an `image_origin` attribute but it is not actually used when retrieving images, nor will it ever be. It is still used when creating images and helps to differentiate between internally generated images and uploads.

It was included in eg API routes and image service methods as a holdover from the previous app implementation where images were not managed in a database. Now that we have images in a db, we can do away with this and simplify basically everything that touches images.

The one potentially controversial change is to no longer separate internal and external images on disk. If we retain this separation, we have to keep `image_origin` around in a number of spots, and it makes getting image paths on disk painful.

So, I have gotten rid of this organisation. Images are now all stored in `images`, regardless of their origin. As we improve the image management features, this change will hopefully become transparent.

Diffusers is due for an update soon. #3512

Opening up a PR now with the required changes for when the new version is live.

I've tested it out on Windows and nothing has broken from what I could tell. I'd like someone to run some tests on Linux / Mac just to make sure. Refer to the PR above on how to test it or install the release branch.

```
pip install "diffusers[torch]==0.17.0"
```

Feel free to push any other changes to this PR you see fit.

There are some bugs with it that I cannot figure out related to `floating-ui` and `downshift`'s handling of refs.

Will need to revisit this component in the future.

* Testing change to LatentsToText to allow setting different cfg_scale values per diffusion step.

* Adding first attempt at float param easing node, using Penner easing functions.

* Core implementation of ControlNet and MultiControlNet.

* Added support for ControlNet and MultiControlNet to legacy non-nodal Txt2Img in backend/generator. Although backend/generator will likely disappear by v3.x, right now they are very useful for testing core ControlNet and MultiControlNet functionality while node codebase is rapidly evolving.

* Added example of using ControlNet with legacy Txt2Img generator

* Resolving rebase conflict

* Added first controlnet preprocessor node for canny edge detection.

* Initial port of controlnet node support from generator-based TextToImageInvocation node to latent-based TextToLatentsInvocation node

* Switching to ControlField for output from controlnet nodes.

* Resolving conflicts in rebase to origin/main

* Refactored ControlNet nodes so they subclass from PreprocessedControlInvocation, and only need to override run_processor(image) (instead of reimplementing invoke())

* changes to base class for controlnet nodes

* Added HED, LineArt, and OpenPose ControlNet nodes

* Added an additional "raw_processed_image" output port to controlnets, mainly so that the ImageField can be routed to a ShowImage node

* Added more preprocessor nodes for MidasDepth, ZoeDepth, MLSD, NormalBae, Pidi, LineartAnime and ContentShuffle. Removed the pil_output options, since ControlNet preprocessors should always output PIL images. Removed diagnostics and did other general cleanup.

* Prep for splitting pre-processor and controlnet nodes

* Refactored controlnet nodes: split out controlnet stuff into a separate node, and stripped controlnet stuff from image processing/analysis nodes.

* Added resizing of controlnet image based on noise latent. Fixes a tensor mismatch issue.

* More rebase repair.

* Added support for using multiple control nets. Unfortunately this breaks direct usage of Control node output port ==> TextToLatent control input port -- passing through a Collect node is now required. Working on fixing this...

* Fixed use of ControlNet control_weight parameter

* Fixed lint-ish formatting error

* Refactored controlnet node to output ControlField that bundles control info.

* Cleaning up TextToLatent arg testing

* Cleaning up mistakes after rebase.

* Removed last bits of dtype and device hardwiring from controlnet section

* Refactored ControlNet support to consolidate multiple parameters into a data struct. Also redid how multiple controlnets are handled.

* Added support for specifying which step iteration to start using each ControlNet, and which step to stop using it (specified as a fraction of total steps)

* Cleaning up prior to submitting ControlNet PR. Mostly turning off diagnostic printing. Also fixed error when there is no controlnet input.

* Added dependency on controlnet-aux v0.0.3

* Commented out ZoeDetector. Will re-instate once there's a controlnet-aux release that supports it.

* Switched ControlNet node modelname input from free text to a default list of popular ControlNet model names.

* Fix to work with current stable release of controlnet_aux (v0.0.3). Turned off pre-processor params that were added post v0.0.3. Also changed defaults for shuffle.

* Refactored most of controlnet code into its own method to declutter TextToLatents.invoke(), and make upcoming integration with LatentsToLatents easier.

* Cleaning up after ControlNet refactor in TextToLatentsInvocation

* Extended node-based ControlNet support to LatentsToLatentsInvocation.

* chore(ui): regen api client

* fix(ui): add value to conditioning field

* fix(ui): add control field type

* fix(ui): fix node ui type hints

* fix(nodes): controlnet input accepts list or single controlnet

* Moved to controlnet_aux v0.0.4, reinstated Zoe controlnet preprocessor. Also in pyproject.toml had to specify downgrade of timm to 0.6.13 _after_ controlnet-aux installs timm >= 0.9.2, because timm >0.6.13 breaks Zoe preprocessor.

* Added Mediapipe image processor for use as ControlNet preprocessor. Also hacked in ability to specify HF subfolder when loading ControlNet models from string.

* Fixed bug where MediapipeFaceProcessorInvocation was ignoring max_faces and min_confidence params.

* Added nodes for float params: ParamFloatInvocation and FloatCollectionOutput. Also added FloatOutput.

* Added mediapipe install requirement. Should be able to remove once controlnet_aux package adds mediapipe to its requirements.

* Added float to FIELD_TYPE_MAP in constants.ts

* Progress toward improvement in fieldTemplateBuilder.ts getFieldType()

* Fixed controlnet preprocessors and controlnet handling in TextToLatents to work with revised Image services.

* Cleaning up from merge, re-adding cfg_scale to FIELD_TYPE_MAP

* Making sure cfg_scale of type list[float] can be used in image metadata, to support param easing for cfg_scale

* Fixed math for per-step param easing.

* Added option to show plot of param value at each step

* Just cleaning up after adding param easing plot option, removing vestigial code.

* Modified control_weight ControlNet param to be polymorphic: it can now be either a single float weight applied for all steps, or a list of floats of size total_steps that specifies the weight for each step.

* Added more informative error message when _validate_edge() throws an error.

* Just improving param easing bar chart title to include easing type.

* Added requirement for easing-functions package

* Taking out some diagnostic prints.

* Added option to use both easing function and mirror of easing function together.

* Fixed recently introduced problem (when pulling in main), triggered by num_steps in StepParamEasingInvocation not having a default value. Just added a default.
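Several entries above (Penner easing, per-step `control_weight` lists, and begin/end step fractions) combine into one per-step weight schedule. The sketch below assumes the begin/end fractions are converted with floor/ceil and that the end step is exclusive; the real node's rounding may differ. `ease_in_out_quad` is one standard Penner curve written out by hand rather than imported from the easing-functions package, and all function names are hypothetical.

```python
import math
from typing import List, Union


def ease_in_out_quad(t: float) -> float:
    """Penner's quadratic ease-in-out, mapping t in [0, 1] to [0, 1]."""
    return 2 * t * t if t < 0.5 else 1 - (-2 * t + 2) ** 2 / 2


def eased_values(start: float, end: float, num_steps: int) -> List[float]:
    """One value per step, moving from start to end along the easing curve."""
    if num_steps == 1:
        return [start]
    return [start + (end - start) * ease_in_out_quad(i / (num_steps - 1)) for i in range(num_steps)]


def control_weight_at(step: int, total_steps: int, weight: Union[float, List[float]],
                      begin: float = 0.0, end: float = 1.0) -> float:
    """Effective ControlNet weight at a step.

    `weight` is either one float for all steps or a list with one value per step.
    `begin`/`end` are fractions of total steps; outside that window the weight is 0.
    """
    first = math.floor(begin * total_steps)
    last = math.ceil(end * total_steps)  # assumed exclusive
    if not first <= step < last:
        return 0.0
    return weight[step] if isinstance(weight, list) else weight


steps = 10
weights = eased_values(1.0, 0.0, steps)  # weight eases from 1.0 down to 0.0
print([round(w, 2) for w in weights])
# ControlNet active only for the first half of the steps:
print([round(control_weight_at(i, steps, weights, begin=0.0, end=0.5), 2) for i in range(steps)])
```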

---------

Co-authored-by: psychedelicious <4822129+psychedelicious@users.noreply.github.com>

In some cases the command line was getting parsed before the logger was initialized, causing the logger not to pick up custom logging instructions from `--log_handlers`. This PR fixes the issue.
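Here is a minimal sketch of an ordering in which `--log_handlers` is always honoured. The command line is parsed first, and then the logger's handlers are built from the parsed options. The `configure_logging` function, the logger name and the `console` / `file=<path>` handler syntax are hypothetical examples, not the real `--log_handlers` format.

```python
import argparse
import logging
from typing import List, Optional


def configure_logging(argv: Optional[List[str]] = None) -> logging.Logger:
    """Parse options first, then install handlers from them.

    The "console" / "file=<path>" handler syntax is hypothetical.
    """
    # 1. Parse the command line so that --log_handlers is known...
    parser = argparse.ArgumentParser()
    parser.add_argument("--log_handlers", nargs="*", default=["console"])
    args = parser.parse_args(argv)

    # 2. ...and only then attach handlers, so the custom settings are honoured.
    logger = logging.getLogger("invokeai_sketch")
    logger.handlers.clear()
    for spec in args.log_handlers:
        if spec == "console":
            logger.addHandler(logging.StreamHandler())
        elif spec.startswith("file="):
            logger.addHandler(logging.FileHandler(spec[len("file="):]))
    return logger


logger = configure_logging(["--log_handlers", "console"])
print([type(h).__name__ for h in logger.handlers])  # ['StreamHandler']
```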

[fix(ui): blur tab on click](93f3658a4a)

Fixes issue where after clicking a tab, using the arrow keys changes the tab instead of changing the selected image.

[fix(ui): fix canvas not filling screen on first load](68be95acbb)

[feat(ui): remove clear temp folder canvas button](813f79f0f9)

This button is nonfunctional.

Soon we will introduce a different way to handle clearing out intermediate images (likely automated).

There was an issue where for graphs w/ iterations, your images were output all at once, at the very end of processing. So if you canceled halfway through an execution of 10 nodes, you wouldn't get any images - even though you'd completed 5 images' worth of inference.

## Cause

Because graphs executed breadth-first (i.e. depth-by-depth), leaf nodes were necessarily processed last. In image generation graphs, the `LatentsToImage` nodes are leaf nodes, so they form the last depth to be executed.

For example, a `TextToLatents` graph w/ 3 iterations would execute all 3 `TextToLatents` nodes fully before moving to the next depth, where the `LatentsToImage` nodes produce output images, resulting in a node execution order like this:

1. TextToLatents

2. TextToLatents

3. TextToLatents

4. LatentsToImage

5. LatentsToImage

6. LatentsToImage

## Solution

This PR makes two changes to graph execution so that it executes as deeply as it can along each branch of the graph.

### Eager node preparation

We now prepare as many nodes as possible, instead of just a single node at a time.

We also need to change the conditions in which nodes are prepared. Previously, nodes were prepared only when all of their direct ancestors were executed.

The updated logic prepares nodes that:

- are *not* `Iterate` nodes whose inputs have *not* been executed

- do *not* have any unexecuted `Iterate` ancestor nodes

This results in graphs always being maximally prepared.

### Always execute the deepest prepared node

We now choose the next node to execute by traversing from the bottom of the graph instead of the top, choosing the first node whose inputs are all executed.

This means we always execute the deepest node possible.
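Here is a simplified model of the scheduling change. It assumes iterations have already been expanded into separate branches and that each node's depth is known. `execution_order` is hypothetical and is not the graph executor's code, but it reproduces both execution orders listed in this description.

```python
from typing import Dict, List


def execution_order(inputs: Dict[str, List[str]], depth: Dict[str, int], deepest_first: bool) -> List[str]:
    """Return the order in which already-prepared nodes would be executed.

    `inputs` maps each node to the nodes it depends on; `depth` gives each
    node's depth in the graph. A node is ready once all its inputs have run.
    deepest_first=False picks the shallowest ready node (old breadth-first
    behaviour); deepest_first=True picks the deepest ready node.
    Assumes an acyclic graph.
    """
    executed: List[str] = []
    pending = list(depth)  # dict order breaks ties between nodes at equal depth
    while pending:
        ready = [n for n in pending if all(i in executed for i in inputs.get(n, []))]
        # sorted() is stable even with reverse=True, so ties keep their order.
        node = sorted(ready, key=lambda n: depth[n], reverse=deepest_first)[0]
        executed.append(node)
        pending.remove(node)
    return executed


# Three iterations of TextToLatents -> LatentsToImage, expanded into branches.
depth = {f"TextToLatents#{i}": 0 for i in range(1, 4)}
depth.update({f"LatentsToImage#{i}": 1 for i in range(1, 4)})
inputs = {f"LatentsToImage#{i}": [f"TextToLatents#{i}"] for i in range(1, 4)}

print(execution_order(inputs, depth, deepest_first=False))  # old order
print(execution_order(inputs, depth, deepest_first=True))  # new order
```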

## Result

Graphs now execute depth-first, so instead of an execution order like this:

1. TextToLatents

2. TextToLatents

3. TextToLatents

4. LatentsToImage

5. LatentsToImage

6. LatentsToImage

... we get an execution order like this:

1. TextToLatents

2. LatentsToImage

3. TextToLatents

4. LatentsToImage

5. TextToLatents

6. LatentsToImage

Immediately after inference, the image is decoded and sent to the gallery.

Fixes #3400

This PR creates the databases directory at app startup time. It also removes a couple of debugging statements that were inadvertently left in the model manager.

# Make InvokeAI package installable by mere mortals

This commit makes InvokeAI 3.0 installable via PyPI.org and/or the installer script. The install process is now pretty much identical to the 2.3 process, including creating the launcher scripts `invoke.sh` and `invoke.bat`.

Main changes:

1. Moved static web pages into `invokeai/frontend/web` and modified the API to look for them there. This allows pip to copy the files into the distribution directory, so that the user no longer has to be in the repo root to launch, and enables PyPI installations with `pip install invokeai`.

2. Update invoke.sh and invoke.bat to launch the new web application properly. This also changes the wording for launching the CLI from "generate images" to "explore the InvokeAI node system," since I would not recommend using the CLI to generate images routinely.

3. Fix a bug in the checkpoint converter script that was identified during testing.

4. Better error reporting when checkpoint converter fails.

5. Rebuild front end.

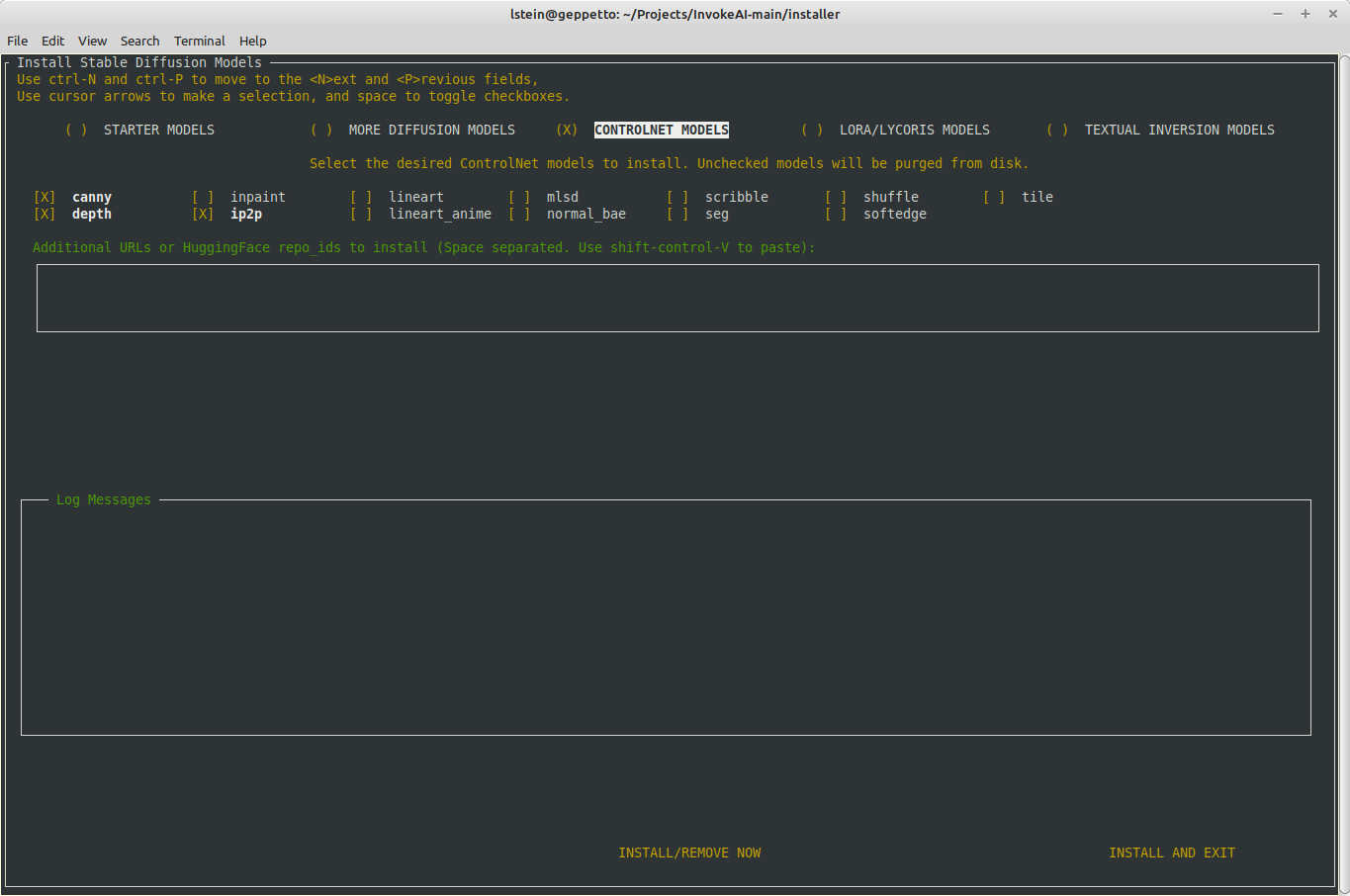

# Major improvements to the model installer.

1. The text user interface for `invokeai-model-install` has been expanded to allow the user to install controlnet, LoRA, textual inversion, diffusers and checkpoint models. The user can install interactively (without leaving the TUI), or in batch mode after exiting the application.

2. The `invokeai-model-install` command now lets you list, add and delete models from the command line:

## Listing models

```
$ invokeai-model-install --list diffusers
Diffuser models:
analog-diffusion-1.0  not loaded  diffusers  An SD-1.5 model trained on diverse analog photographs (2.13 GB)
d&d-diffusion-1.0     not loaded  diffusers  Dungeons & Dragons characters (2.13 GB)
deliberate-1.0        not loaded  diffusers  Versatile model that produces detailed images up to 768px (4.27 GB)
DreamShaper           not loaded  diffusers  Imported diffusers model DreamShaper
sd-inpainting-1.5     not loaded  diffusers  RunwayML SD 1.5 model optimized for inpainting, diffusers version (4.27 GB)
sd-inpainting-2.0     not loaded  diffusers  Stable Diffusion version 2.0 inpainting model (5.21 GB)
stable-diffusion-1.5  not loaded  diffusers  Stable Diffusion version 1.5 diffusers model (4.27 GB)
stable-diffusion-2.1  not loaded  diffusers  Stable Diffusion version 2.1 diffusers model, trained on 768 pixel images (5.21 GB)
```

```
$ invokeai-model-install --list tis
Loading Python libraries...
Installed Textual Inversion Embeddings:
EasyNegative
ahx-beta-453407d
```

## Installing models

(this example shows correct handling of a server side error at Civitai)

```
$ invokeai-model-install --diffusers https://civitai.com/api/download/models/46259 Linaqruf/anything-v3.0
Loading Python libraries...
[2023-06-05 22:17:23,556]::[InvokeAI]::INFO --> INSTALLING EXTERNAL MODELS
[2023-06-05 22:17:23,557]::[InvokeAI]::INFO --> Probing https://civitai.com/api/download/models/46259 for import
[2023-06-05 22:17:23,557]::[InvokeAI]::INFO --> https://civitai.com/api/download/models/46259 appears to be a URL
[2023-06-05 22:17:23,763]::[InvokeAI]::ERROR --> An error occurred during downloading /home/lstein/invokeai-test/models/ldm/stable-diffusion-v1/46259: Internal Server Error
[2023-06-05 22:17:23,763]::[InvokeAI]::ERROR --> ERROR DOWNLOADING https://civitai.com/api/download/models/46259: {"error":"Invalid database operation","cause":{"clientVersion":"4.12.0"}}
[2023-06-05 22:17:23,764]::[InvokeAI]::INFO --> Probing Linaqruf/anything-v3.0 for import
[2023-06-05 22:17:23,764]::[InvokeAI]::DEBUG --> Linaqruf/anything-v3.0 appears to be a HuggingFace diffusers repo_id
[2023-06-05 22:17:23,768]::[InvokeAI]::INFO --> Loading diffusers model from Linaqruf/anything-v3.0
[2023-06-05 22:17:23,769]::[InvokeAI]::DEBUG --> Using faster float16 precision
[2023-06-05 22:17:23,883]::[InvokeAI]::ERROR --> An unexpected error occurred while downloading the model: 404 Client Error. (Request ID: Root=1-647e9733-1b0ee3af67d6ac3456b1ebfc)
Revision Not Found for url: https://huggingface.co/Linaqruf/anything-v3.0/resolve/fp16/model_index.json.
Invalid rev id: fp16)
Downloading (…)ain/model_index.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████| 511/511 [00:00<00:00, 2.57MB/s]
Downloading (…)cial_tokens_map.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████| 472/472 [00:00<00:00, 6.13MB/s]
Downloading (…)cheduler_config.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████| 341/341 [00:00<00:00, 3.30MB/s]
Downloading (…)okenizer_config.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████| 807/807 [00:00<00:00, 11.3MB/s]
```

## Deleting models

```
$ invokeai-model-install --delete --diffusers anything-v3
Loading Python libraries...
[2023-06-05 22:19:45,927]::[InvokeAI]::INFO --> Processing requested deletions
[2023-06-05 22:19:45,927]::[InvokeAI]::INFO --> anything-v3...
[2023-06-05 22:19:45,927]::[InvokeAI]::INFO --> Deleting the cached model directory for Linaqruf/anything-v3.0
[2023-06-05 22:19:45,948]::[InvokeAI]::WARNING --> Deletion of this model is expected to free 4.3G
```
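The log above shows the installer deciding whether each argument is a URL or a HuggingFace repo_id. Local paths are also accepted as sources. Here is a heuristic sketch of that classification. `classify_model_source` is hypothetical and deliberately cruder than whatever the installer really does.

```python
from pathlib import Path
from urllib.parse import urlparse


def classify_model_source(source: str) -> str:
    """Rough guess at what kind of model source a command-line argument is."""
    parsed = urlparse(source)
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return "url"
    if Path(source).exists():
        return "local_path"
    parts = source.split("/")
    if len(parts) == 2 and all(parts):
        return "repo_id"  # e.g. "owner/model-name"
    return "unknown"


print(classify_model_source("https://civitai.com/api/download/models/46259"))  # url
print(classify_model_source("Linaqruf/anything-v3.0"))  # repo_id
```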

1. Contents of autoscan directory field are restored after doing an installation.

2. Activate dialogue to choose V2 parameterization when importing from a directory.

3. Remove autoscan directory from init file when its checkbox is unselected.

4. Add widget cycling behavior to install models form.

The processor is automatically selected when the model is changed.

But if the user manually changes the processor or processor settings, or disables the new `Auto configure processor` switch, auto configuration is disabled.

The user can re-enable auto configuration by turning the switch back on.

When auto configure is disabled, a small dot is overlaid on the expand button to remind the user that the system is not auto configuring the processor for them.

When auto configure is re-enabled, the processor settings are reset to the default for the selected model.
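The rules above amount to a small state machine. Here is a sketch of it. The processor names and the model-to-default-processor map are hypothetical. The two model ids are public ControlNet repos and are used only as examples.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical mapping from ControlNet model to its default processor.
DEFAULT_PROCESSORS: Dict[str, str] = {
    "lllyasviel/sd-controlnet-canny": "canny_image_processor",
    "lllyasviel/sd-controlnet-depth": "midas_depth_image_processor",
}


@dataclass
class ControlNetSketch:
    model: str
    processor: Optional[str] = None
    processor_settings: Dict[str, float] = field(default_factory=dict)
    auto_configure: bool = True

    def set_model(self, model: str) -> None:
        self.model = model
        if self.auto_configure:
            self._reset_processor()

    def set_processor(self, processor: str) -> None:
        # A manual processor change switches auto configuration off.
        self.processor = processor
        self.auto_configure = False

    def set_processor_setting(self, key: str, value: float) -> None:
        # So does a manual change to any processor setting.
        self.processor_settings[key] = value
        self.auto_configure = False

    def set_auto_configure(self, enabled: bool) -> None:
        self.auto_configure = enabled
        if enabled:
            self._reset_processor()

    def _reset_processor(self) -> None:
        # Default processor for the current model, with default settings.
        self.processor = DEFAULT_PROCESSORS.get(self.model)
        self.processor_settings = {}

    @property
    def show_reminder_dot(self) -> bool:
        # The dot reminds the user that the processor is not being auto configured.
        return not self.auto_configure


cn = ControlNetSketch(model="lllyasviel/sd-controlnet-canny")
cn.set_model("lllyasviel/sd-controlnet-depth")
print(cn.processor)  # midas_depth_image_processor
cn.set_processor("canny_image_processor")  # manual override
cn.set_model("lllyasviel/sd-controlnet-depth")
print(cn.processor, cn.show_reminder_dot)  # canny_image_processor True
cn.set_auto_configure(True)
print(cn.processor, cn.show_reminder_dot)  # midas_depth_image_processor False
```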

Add uploading to IAIDndImage

- add `postUploadAction` arg to `imageUploaded` thunk, with several currently valid options (set control image, set init, set nodes image, set canvas, or toast)

- updated IAIDndImage to optionally allow click to upload

- when the controlnet model is changed, if there is a default processor for the model set, the processor is changed.

- once a control image is selected (and processed), changing the model does not change the processor - must be manually changed

- Also fixed up the order in which the logger is created in invokeai-web, so that handlers are installed after command-line options are parsed (and not before!)

This handles the case when an image is deleted but is still in use, e.g. as an init image on canvas or as a control image. If we just deleted the image, canvas/controlnet/etc. could break (the image would simply fail to load).

When an image is deleted, the app checks to see if it is in use in:

- Image to Image

- ControlNet

- Unified Canvas

- Node Editor

The delete dialog will always open if the image is in use anywhere, and the user is advised that deleting the image will reset the feature(s).

Even if the user has ticked the box to not confirm on delete, the dialog will still show if the image is in use somewhere.
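Here is a sketch of the in-use check and the dialog rule. It assumes each feature exposes the image names it references. `AppStateSketch`, `get_image_usage` and `should_show_delete_dialog` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class AppStateSketch:
    """Hypothetical view of the image names each feature currently references."""

    init_image: Optional[str] = None
    control_images: List[str] = field(default_factory=list)
    canvas_images: List[str] = field(default_factory=list)
    node_images: List[str] = field(default_factory=list)


def get_image_usage(state: AppStateSketch, image_name: str) -> Dict[str, bool]:
    return {
        "Image to Image": state.init_image == image_name,
        "ControlNet": image_name in state.control_images,
        "Unified Canvas": image_name in state.canvas_images,
        "Node Editor": image_name in state.node_images,
    }


def should_show_delete_dialog(usage: Dict[str, bool], confirm_on_delete: bool) -> bool:
    # An in-use image always gets the dialog, even if confirmation is turned off.
    return confirm_on_delete or any(usage.values())


state = AppStateSketch(init_image="a.png", control_images=["b.png"])
usage = get_image_usage(state, "b.png")
print([feature for feature, used in usage.items() if used])  # ['ControlNet']
print(should_show_delete_dialog(usage, confirm_on_delete=False))  # True
print(should_show_delete_dialog(get_image_usage(state, "c.png"), confirm_on_delete=False))  # False
```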

- fix "bounding box region only" not being respected when saving

- add toasts for each action

- improve workflow `take()` predicates to use the requestId

- responsive changes were causing a lot of weird layout issues, had to remove the rest of them

- canvas (non-beta) toolbar now wraps

- reduces minH for prompt boxes a bit

1. Model installer works correctly under Windows 11 Terminal

2. Fixed crash when configure script hands control off to installer

3. Kill install subprocess on keyboard interrupt

4. Command-line functionality for --yes configuration and model installation restored.

5. New command-line features:

- install/delete lists of diffusers, LoRAs, controlnets and textual inversions using repo ids, paths or URLs.

Help:

```
usage: invokeai-model-install [-h] [--diffusers [DIFFUSERS ...]] [--loras [LORAS ...]] [--controlnets [CONTROLNETS ...]] [--textual-inversions [TEXTUAL_INVERSIONS ...]] [--delete] [--full-precision | --no-full-precision]
                              [--yes] [--default_only] [--list-models {diffusers,loras,controlnets,tis}] [--config_file CONFIG_FILE] [--root_dir ROOT]

InvokeAI model downloader

options:
  -h, --help            show this help message and exit
  --diffusers [DIFFUSERS ...]
                        List of URLs or repo_ids of diffusers to install/delete
  --loras [LORAS ...]   List of URLs or repo_ids of LoRA/LyCORIS models to install/delete
  --controlnets [CONTROLNETS ...]
                        List of URLs or repo_ids of controlnet models to install/delete
  --textual-inversions [TEXTUAL_INVERSIONS ...]
                        List of URLs or repo_ids of textual inversion embeddings to install/delete
  --delete              Delete models listed on command line rather than installing them
  --full-precision, --no-full-precision
                        use 32-bit weights instead of faster 16-bit weights (default: False)
  --yes, -y             answer "yes" to all prompts
  --default_only        only install the default model
  --list-models {diffusers,loras,controlnets,tis}
                        list installed models
  --config_file CONFIG_FILE, -c CONFIG_FILE
                        path to configuration file to create
  --root_dir ROOT       path to root of install directory
```
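The `--list diffusers` and `--list tis` examples earlier work even though the help text names the option `--list-models`. This is presumably because argparse accepts unambiguous prefixes of long options by default (`allow_abbrev=True`). The cut-down parser below assumes the real one is built with plain argparse and reconstructs only a few of its options.

```python
import argparse

# Cut-down, hypothetical reconstruction of a few options from the help text above.
parser = argparse.ArgumentParser(prog="invokeai-model-install")
parser.add_argument("--diffusers", nargs="*", help="List of URLs or repo_ids of diffusers to install/delete")
parser.add_argument("--delete", action="store_true", help="Delete models listed on command line rather than installing them")
parser.add_argument("--list-models", choices=["diffusers", "loras", "controlnets", "tis"], help="list installed models")

# "--list" is an unambiguous prefix of "--list-models", so argparse accepts it.
args = parser.parse_args(["--list", "diffusers"])
print(args.list_models)  # diffusers

args = parser.parse_args(["--delete", "--diffusers", "anything-v3"])
print(args.delete, args.diffusers)  # True ['anything-v3']
```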

There was a potential gotcha in the config system that was previously merged with main. The `InvokeAIAppConfig` object was configuring itself from the command line and configuration file within its initialization routine. However, this could cause it to read `argv` from the command line at unexpected times. This PR fixes the object so that it only reads from the init file and command line when its `parse_args()` method is explicitly called, which should be done at startup time in any top-level script that uses it.

In addition, using the `get_invokeai_config()` function to get a global version of the config object didn't feel pythonic to me, so I have changed this to `InvokeAIAppConfig.get_config()` throughout.

## Updated Usage

In the main script, at startup time, do the following:

```
from invokeai.app.services.config import InvokeAIAppConfig

config = InvokeAIAppConfig.get_config()
config.parse_args()
```

In non-main scripts, it is not necessary (or recommended) to call `parse_args()`:

```
from invokeai.app.services.config import InvokeAIAppConfig

config = InvokeAIAppConfig.get_config()
```

The configuration object properties can be overridden when `get_config()` is called by passing initialization values in the usual way. If a property is set this way, then it will not be changed by subsequent calls to `parse_args()`; it can only be changed by explicitly setting the property.

```
config = InvokeAIAppConfig.get_config(nsfw_checker=True)
config.parse_args(argv=['--no-nsfw_checker'])
config.nsfw_checker
# True
```

You may specify alternative argv lists and configuration files in

`parse_args()`:

```
from omegaconf import OmegaConf

config.parse_args(argv=['--no-nsfw_checker'],
                  conf=OmegaConf.load('/tmp/test.yaml'))
```

For backward compatibility, the `get_invokeai_config()` function is

still available from the module, but has been removed from the rest of

the source tree.

This PR adds long prompt support and enables compel's new `.and()` concatenation feature, which improves image quality, especially with SD2.1.

Example of a long prompt:

> a moist sloppy pindlesackboy sloppy hamblin' bogomadong, Clem Fandango

is pissed-off, Wario's Woods in background, making a noise like

ga-woink-a

The same prompt broken into fragments and concatenated using `.and()` (the syntax works like `.blend()`):

```

("a moist sloppy pindlesackboy sloppy hamblin' bogomadong",

"Clem Fandango is pissed-off",

"Wario's Woods in background",

"making a noise like ga-woink-a").and()

```

And a less silly example:

> A dream of a distant galaxy, by Caspar David Friedrich, matte

painting, trending on artstation, HQ

The same prompt broken into two fragments and concatenated:

```

("A dream of a distant galaxy, by Caspar David Friedrich, matte painting",

"trending on artstation, HQ").and()

```

As with `.blend()`, you can also weight the parts, e.g. `("a man eating an apple", "sitting on the roof of a car", "high quality, trending on artstation, 8K UHD").and(1, 0.5, 0.5)`. This assigns weight `1` to `a man eating an apple` and `0.5` to both `sitting on the roof of a car` and `high quality, trending on artstation, 8K UHD`.
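Below is a small, hypothetical Python helper that assembles the `.and()` syntax shown above from a list of fragments and optional weights. `make_and_prompt` is not part of compel or InvokeAI. It only builds the prompt string; compel still does the actual parsing.

```python
from typing import Optional, Sequence


def make_and_prompt(fragments: Sequence[str], weights: Optional[Sequence[float]] = None) -> str:
    """Hypothetical helper: join prompt fragments using the .and() syntax."""
    for fragment in fragments:
        if '"' in fragment:
            # Escaping embedded double quotes is out of scope for this sketch.
            raise ValueError("fragments must not contain double quotes")
    quoted = ", ".join(f'"{fragment}"' for fragment in fragments)
    if weights is None:
        return f"({quoted}).and()"
    if len(weights) != len(fragments):
        raise ValueError("provide exactly one weight per fragment")
    weight_list = ", ".join(f"{w:g}" for w in weights)  # 1 -> "1", 0.5 -> "0.5"
    return f"({quoted}).and({weight_list})"


print(make_and_prompt(
    ["a man eating an apple", "sitting on the roof of a car",
     "high quality, trending on artstation, 8K UHD"],
    [1, 0.5, 0.5],
))
```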

Implement `dnd-kit` for image drag and drop

- vastly simplifies logic because we can drag and drop non-serializable data (like an `ImageDTO`)

- also much prettier

- also will fix conflicts with file upload via OS drag and drop, because `dnd-kit` does not use the native HTML drag and drop API

- Implemented for init image, ControlNet, and the node editor so far

More progress on the ControlNet UI

- The invokeai.db database file has now been moved into `INVOKEAIROOT/databases`. The directory name is plural to allow for more than one database file in the future.

- Removed a few dangling debug messages that appeared during

testing.

- Rebuilt frontend to test web.

This PR provides a number of options for controlling how InvokeAI logs

messages, including options to log to a file, syslog and a web server.

Several logging handlers can be configured simultaneously.

## Controlling How InvokeAI Logs Status Messages

InvokeAI logs status messages using a configurable logging system. You

can log to the terminal window, to a designated file on the local

machine, to the syslog facility on a Linux or Mac, or to a properly

configured web server. You can configure several logs at the same time,

and control the level of message logged and the logging format (to a

limited extent).

Three command-line options control logging:

### `--log_handlers <handler1> <handler2> ...`

This option activates one or more log handlers. Options are "console",

"file", "syslog" and "http". To specify more than one, separate them by

spaces:

```bash

invokeai-web --log_handlers console syslog=/dev/log file=C:\Users\fred\invokeai.log

```

The format of these options is described below.

### `--log_format {plain|color|legacy|syslog}`

This controls the format of log messages written to the console. Only

the "console" log handler is currently affected by this setting.

* "plain" provides formatted messages like this:

```bash

[2023-05-24 23:18:50,352]::[InvokeAI]::DEBUG --> this is a debug message

[2023-05-24 23:18:50,352]::[InvokeAI]::INFO --> this is an informational messages

[2023-05-24 23:18:50,352]::[InvokeAI]::WARNING --> this is a warning

[2023-05-24 23:18:50,352]::[InvokeAI]::ERROR --> this is an error

[2023-05-24 23:18:50,352]::[InvokeAI]::CRITICAL --> this is a critical error

```

* "color" produces similar output, but the text will be color coded to

indicate the severity of the message.

* "legacy" produces output similar to InvokeAI versions 2.3 and earlier:

```bash

### this is a critical error

*** this is an error

** this is a warning

>> this is an informational messages

| this is a debug message

```

* "syslog" produces messages suitable for syslog entries:

```bash

InvokeAI [2691178] <CRITICAL> this is a critical error

InvokeAI [2691178] <ERROR> this is an error

InvokeAI [2691178] <WARNING> this is a warning

InvokeAI [2691178] <INFO> this is an informational messages

InvokeAI [2691178] <DEBUG> this is a debug message

```

(note that the date, time and hostname will be added by the syslog

system)
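The "plain" and "syslog" samples above can be approximated with the standard library's `logging.Formatter`. The two format strings below are assumptions reverse-engineered from the sample output, not copied from InvokeAI. `%(asctime)s` uses the library's default `YYYY-MM-DD HH:MM:SS,mmm` timestamp.

```python
import logging

# Hypothetical format strings that match the sample output shown above.
PLAIN_FORMAT = "[%(asctime)s]::[%(name)s]::%(levelname)s --> %(message)s"
SYSLOG_FORMAT = "%(name)s [%(process)d] <%(levelname)s> %(message)s"


def make_console_handler(fmt: str) -> logging.Handler:
    """Attach a formatter with the given format string to a stderr stream handler."""
    handler = logging.StreamHandler()
    handler.setFormatter(logging.Formatter(fmt))
    return handler


if __name__ == "__main__":
    logger = logging.getLogger("InvokeAI")
    logger.setLevel(logging.DEBUG)
    logger.addHandler(make_console_handler(PLAIN_FORMAT))
    logger.debug("this is a debug message")
    logger.warning("this is a warning")
```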

### `--log_level {debug|info|warning|error|critical}`

Providing this command-line option will cause only messages at the

specified level or above to be emitted.

## Console logging

When "console" is provided to `--log_handlers`, messages will be written

to the command line window in which InvokeAI was launched. By default,

the color formatter will be used unless overridden by `--log_format`.

## File logging

When "file" is provided to `--log_handlers`, entries will be written to

the file indicated in the path argument. By default, the "plain" format

will be used:

```bash

invokeai-web --log_handlers file=/var/log/invokeai.log

```

## Syslog logging

When "syslog" is requested, entries will be sent to the syslog system.

There are a variety of ways to control where the log message is sent:

* Send to the local machine using the `/dev/log` socket:

```

invokeai-web --log_handlers syslog=/dev/log

```

* Send to the local machine using a UDP message:

```

invokeai-web --log_handlers syslog=localhost

```

* Send to the local machine using a UDP message on a nonstandard port:

```

invokeai-web --log_handlers syslog=localhost:512

```

* Send to a remote machine named "loghost" on the local LAN using

facility LOG_USER and UDP packets:

```

invokeai-web --log_handlers syslog=loghost,facility=LOG_USER,socktype=SOCK_DGRAM

```

This can be abbreviated `syslog=loghost`, as LOG_USER and SOCK_DGRAM are

defaults.

* Send to a remote machine named "loghost" using the facility LOCAL0 and

using a TCP socket:

```

invokeai-web --log_handlers syslog=loghost,facility=LOG_LOCAL0,socktype=SOCK_STREAM

```

If no arguments are specified (just a bare "syslog"), then the logging

system will look for a UNIX socket named `/dev/log`, and if not found

try to send a UDP message to `localhost`. The Macintosh OS used to

support logging to a socket named `/var/run/syslog`, but this feature

has since been disabled.
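The sketch below shows one way to turn the `syslog=...` arguments above into keyword arguments for the standard library's `logging.handlers.SysLogHandler`. The parser `parse_syslog_spec` is hypothetical, and InvokeAI's own parsing may differ. It maps `facility=` names onto `SysLogHandler` constants and `socktype=` names onto `socket` constants, and defaults to LOG_USER, UDP and port 514.

```python
import os
import socket
from logging.handlers import SYSLOG_UDP_PORT, SysLogHandler
from typing import Any, Dict


def parse_syslog_spec(spec: str) -> Dict[str, Any]:
    """Hypothetical: turn 'address[,facility=...][,socktype=...]' into SysLogHandler kwargs."""
    kwargs: Dict[str, Any] = {
        "facility": SysLogHandler.LOG_USER,  # default facility
        "socktype": socket.SOCK_DGRAM,  # default: UDP datagrams
    }
    address = None
    for part in filter(None, spec.split(",")):
        key, sep, value = part.partition("=")
        if not sep:
            address = part  # an item without "=" is the address
        elif key == "facility":
            kwargs["facility"] = getattr(SysLogHandler, value)  # e.g. LOG_LOCAL0
        elif key == "socktype":
            kwargs["socktype"] = getattr(socket, value)  # SOCK_DGRAM or SOCK_STREAM
        else:
            raise ValueError(f"unknown syslog option: {key}")
    if address is None:
        # Bare "syslog": use /dev/log if present, else UDP to localhost.
        kwargs["address"] = "/dev/log" if os.path.exists("/dev/log") else ("localhost", SYSLOG_UDP_PORT)
    elif address.startswith("/"):
        kwargs["address"] = address  # UNIX domain socket path
    else:
        host, _, port = address.partition(":")
        kwargs["address"] = (host, int(port) if port else SYSLOG_UDP_PORT)
    return kwargs
```

For example, `SysLogHandler(**parse_syslog_spec("localhost:512"))` would build a UDP handler for the nonstandard-port case.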

## Web logging

If you have access to a web server that is configured to log messages

when a particular URL is requested, you can log using the "http" method:

```

invokeai-web --log_handlers http=http://my.server/path/to/logger,method=POST

```

The optional `[,method=]` part can be used to specify whether the URL accepts GET (default) or POST requests.

Currently password authentication and SSL are not supported.
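For illustration, the `http=URL[,method=...]` form maps naturally onto the standard library's `logging.handlers.HTTPHandler`, which takes the host and the URL path separately. `make_http_handler` is a hypothetical helper. Like the option described above, it handles neither credentials nor HTTPS.

```python
import logging.handlers
from urllib.parse import urlsplit


def make_http_handler(spec: str) -> logging.handlers.HTTPHandler:
    """Hypothetical: build an HTTPHandler from 'URL[,method=GET|POST]'."""
    url, _, option = spec.partition(",")
    method = "GET"  # GET is the default, as described above
    if option:
        key, _, value = option.partition("=")
        if key != "method":
            raise ValueError(f"unknown option: {key}")
        method = value
    parts = urlsplit(url)
    if parts.scheme != "http":
        raise ValueError("only plain http:// URLs are supported")
    # HTTPHandler upper-cases the method and rejects anything but GET or POST.
    return logging.handlers.HTTPHandler(parts.netloc, parts.path or "/", method=method)
```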

## Using the configuration file

You can set and forget logging options by adding a "Logging" section to

`invokeai.yaml`:

```
InvokeAI:
  [... other settings...]
  Logging:
    log_handlers:
      - console
      - syslog=/dev/log
    log_level: info
    log_format: color
```

1. Separated the "starter models" and "more models" sections. This gives us room to list all installed diffusers models, not just those that are on the starter list.

2. Support mouse-based paste into the textboxes with either middle

or right mouse buttons.

3. Support terminal-style cursor movement:

^A to move to beginning of line

^E to move to end of line

^K kill text to right and put in killring

^Y yank text back

4. Internal code cleanup.

The gallery could get into a state where it thought it had just reached the end of the list and endlessly fetched more images, even when there were no more images to fetch (weird, I know).

Add some logic to remove the `end reached` handler when there are no more images to load.

It doesn't work for the img2img pipelines, but the implemented conditional display could break the scheduler selection dropdown.

A simple fix until diffusers merges its fix: never use this scheduler.

Inputs with explicit values are validated by pydantic even if they also

have a connection (which is the actual value that is used).

Fix this by omitting explicit values for inputs that have a connection.
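Here is a minimal Python sketch of that rule. It assumes the graph is serialized as a dict with `nodes` keyed by node id and `edges` whose `destination` holds a `node_id` and a `field`; that payload shape is an assumption. `omit_connected_inputs` is a hypothetical name.

```python
import copy
from typing import Any, Dict


def omit_connected_inputs(graph: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical: drop explicit values for inputs that are fed by an edge.

    Assumes graph = {"nodes": {node_id: {field: value, ...}},
                     "edges": [{"source": {...}, "destination": {"node_id": ..., "field": ...}}]}.
    """
    result = copy.deepcopy(graph)  # leave the caller's graph untouched
    for edge in result.get("edges", []):
        destination = edge["destination"]
        node = result["nodes"].get(destination["node_id"])
        if node is not None:
            # The connection supplies this value, so no literal value is sent or validated.
            node.pop(destination["field"], None)
    return result
```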

The problem was that ControlNet support added `**kwargs` to method calls down in the denoising loop, and `AddsMaskLatents` didn't accept `**kwargs`. The fix simply changes it to accept and pass on `**kwargs`.
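The pass-through pattern is sketched below with a simplified, hypothetical stand-in, not the real `AddsMaskLatents` class. The wrapper transforms its positional inputs and forwards any extra keyword arguments unchanged to the wrapped callable.

```python
from typing import Any, Callable


class AddsMaskLatentsSketch:
    """Hypothetical, simplified stand-in for a mask-adding wrapper around a model call."""

    def __init__(self, forward: Callable[..., Any], mask: Any):
        self.forward = forward
        self.mask = mask

    def add_mask_channels(self, latents: Any) -> Any:
        # Placeholder: an inpainting wrapper would concatenate mask channels here.
        return (latents, self.mask)

    def __call__(self, latents: Any, t: Any, text_embeddings: Any, **kwargs: Any) -> Any:
        model_input = self.add_mask_channels(latents)
        # Pass any extra keyword arguments (e.g. ControlNet residuals) straight through.
        return self.forward(model_input, t, text_embeddings, **kwargs)
```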

This may cause minor gallery jumpiness at the very end of processing, but is necessary to prevent the progress image from sticking around if the last node in a session did not have an image output.

Some socket events should not be handled by the slice reducers. For example, generation progress should not be handled for a canceled session.

Added another layer of socket actions.

Example:

- `socketGeneratorProgress` is dispatched when the actual socket event is received

- Listener middleware exclusively handles this event and determines if the application should also handle it

- If so, it dispatches `appSocketGeneratorProgress`, which the slices can handle

Needed to fix issues related to canceling invocations.
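The frontend implements this with Redux listener middleware in TypeScript. The following is only a language-neutral sketch of the two-layer idea, written in Python. `SocketEventGate`, its methods and the `session_id` payload key are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class SocketEventGate:
    """Hypothetical listener layer between raw socket events and app reducers."""

    dispatch: Callable[[str, Dict], None]
    canceled_sessions: Set[str] = field(default_factory=set)

    def cancel(self, session_id: str) -> None:
        self.canceled_sessions.add(session_id)

    def on_generator_progress(self, payload: Dict) -> None:
        # Raw event, analogous to `socketGeneratorProgress`: only this layer sees it.
        if payload.get("session_id") in self.canceled_sessions:
            return  # progress for a canceled session never reaches the reducers
        # App-level event, analogous to `appSocketGeneratorProgress`.
        self.dispatch("appSocketGeneratorProgress", payload)
```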

Now that images are in a database and we can make filtered queries, we can do away with the cumbersome `resultsSlice` and `uploadsSlice`.

- Remove `resultsSlice` and `uploadsSlice` entirely

- Add `imagesSlice`, which fills the same role

- Convert the application to use `imagesSlice`, reducing a lot of messy logic where we had to check which category was selected

- Add a simple filter popover to the gallery, which lets you select any number of image categories

Because we dynamically insert images into the DB and UI's images state, `page`/`per_page` pagination makes loading the images awkward.

Using `offset`/`limit` pagination lets us query for images with an offset equal to the number of images already loaded (which match the query parameters).

The result is that we always get the correct next page of images when loading more.
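A small SQLite sketch of why `offset`/`limit` works here follows. It assumes a simplified, hypothetical `images` table ordered newest first. The offset passed to the query is just the number of matching images already in the UI state, so when an image is inserted at the top, the offset shifts along with the data.

```python
import sqlite3
from typing import List, Sequence


def list_image_names(conn: sqlite3.Connection, categories: Sequence[str],
                     offset: int, limit: int) -> List[str]:
    """Fetch one window of matching image names, newest first."""
    placeholders = ", ".join("?" for _ in categories)
    rows = conn.execute(
        f"SELECT image_name FROM images WHERE image_category IN ({placeholders}) "
        "ORDER BY created_at DESC LIMIT ? OFFSET ?",
        (*categories, limit, offset),
    ).fetchall()
    return [name for (name,) in rows]


def load_more(conn: sqlite3.Connection, categories: Sequence[str],
              loaded: List[str], limit: int) -> List[str]:
    # The offset is how many matching images the UI already holds, including
    # any inserted dynamically since the last page was fetched.
    return loaded + list_image_names(conn, categories, offset=len(loaded), limit=limit)
```

With `page`/`per_page`, the second request would use offset `1 * per_page` regardless of any newly inserted image, and would return one image the UI already has.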

- Update all thunks & network related things

- Update gallery

What I have not done yet is rename the gallery tabs and the relevant slices, but I believe the functionality is all there.

I also fixed several bugs along the way, but couldn't really commit them separately because I was refactoring. I can't remember exactly what they were, but they were related to gallery image switching.

- Remove `ImageType` entirely, it is confusing

- Create `ResourceOrigin`, may be `internal` or `external`

- Revamp `ImageCategory`, may be `general`, `mask`, `control`, `user`, `other`. Expect to add more as time goes on

- Update images `list` route to accept `include_categories` OR `exclude_categories` query parameters to afford finer-grained querying. All services are updated to accommodate this change.
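The following hypothetical sketch shows how the `include_categories` / `exclude_categories` choice might translate into a parameterized SQL fragment. The enum values come from the list above. `category_filter` and the column name `image_category` are assumptions.

```python
from enum import Enum
from typing import List, Optional, Sequence, Tuple


class ImageCategory(str, Enum):
    GENERAL = "general"
    MASK = "mask"
    CONTROL = "control"
    USER = "user"
    OTHER = "other"


def category_filter(
    include_categories: Optional[Sequence[ImageCategory]] = None,
    exclude_categories: Optional[Sequence[ImageCategory]] = None,
) -> Tuple[str, List[str]]:
    """Hypothetical: build a parameterized WHERE fragment; include OR exclude, never both."""
    if include_categories and exclude_categories:
        raise ValueError("pass include_categories or exclude_categories, not both")
    chosen = include_categories or exclude_categories
    if not chosen:
        return "", []  # no filtering requested
    placeholders = ", ".join("?" for _ in chosen)
    operator = "IN" if include_categories else "NOT IN"
    return f"image_category {operator} ({placeholders})", [c.value for c in chosen]
```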

The new setup should account for our types of images, including the combinations we couldn't really handle until now:

- Canvas init and masks

- Canvas when saved-to-gallery or merged

Currently only used to make names for images, but when latents, conditioning, etc. are managed in the DB, it will do the same for them.

Intended to eventually support custom naming schemes.
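Here is a minimal sketch of a pluggable naming service, assuming a UUID-based default. `NameServiceBase`, `SimpleNameService` and `create_image_name` are hypothetical names. A custom naming scheme would simply be another subclass.

```python
import uuid
from abc import ABC, abstractmethod


class NameServiceBase(ABC):
    """Hypothetical interface: one place that decides how resources are named."""

    @abstractmethod
    def create_image_name(self) -> str:
        """Return a new, unique image file name."""


class SimpleNameService(NameServiceBase):
    """Default scheme: a random UUID4 plus a .png extension."""

    def create_image_name(self) -> str:
        return f"{uuid.uuid4()}.png"
```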

MidasDepth

ZoeDepth

MLSD

NormalBae

Pidi

LineartAnime

ContentShuffle

Removed `pil_output` options; ControlNet preprocessors should always output PIL images. Removed diagnostics and did other general cleanup.


- Update the canvas graph generation to flag its uploaded init and mask images as `intermediate`.

- During canvas setup, hit the update route to associate the uploaded images with the session id.

- Organize the socketio and RTK listener middleware better. Needed to facilitate the updated canvas logic.

- Add a new action `sessionReadyToInvoke`. The `sessionInvoked` action is *only* ever run in response to this event. This lets us do any complicated setup (e.g. canvas) before explicitly invoking. Previously, invoking was tied to the socket subscribe events.

- Some minor tidying.

- `ImageType` is now restricted to `results` and `uploads`.

- Add a reserved `meta` field to nodes to hold the `is_intermediate` boolean. We can extend it in the future to support other node `meta`.

- Add an `is_intermediate` column to the `images` table to hold this. (When `latents`, `conditioning`, etc. are added to the DB, they will also have this column.)

- All nodes default to *not* intermediate. Nodes must explicitly be marked `intermediate` for their outputs to be `intermediate`.

- When building a graph, you can set `node.meta.is_intermediate=True` and it will be handled as an intermediate.

- Add a new `update()` method to the `ImageService`, and a route to call it. Updates have a strict model, currently only `session_id` and `image_category` may be updated.

- Add a new `update()` method to the `ImageRecordStorageService` to update the image record using the model.
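The "strict model" for updates can be illustrated with a dataclass that rejects any field other than `session_id` and `image_category`. This is a hypothetical sketch: `ImageRecordChanges`, `from_request` and `apply_changes` are illustrative names, and the real model may be defined differently.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict, Optional


@dataclass(frozen=True)
class ImageRecordChanges:
    """Hypothetical strict update model: only these fields may be updated."""

    session_id: Optional[str] = None
    image_category: Optional[str] = None

    @classmethod
    def from_request(cls, payload: Dict[str, Any]) -> "ImageRecordChanges":
        allowed = {f.name for f in fields(cls)}
        unknown = set(payload) - allowed
        if unknown:
            raise ValueError(f"fields cannot be updated: {sorted(unknown)}")
        return cls(**payload)


def apply_changes(record: Dict[str, Any], changes: ImageRecordChanges) -> Dict[str, Any]:
    """Return a copy of the record with every non-None change applied."""
    updated = dict(record)
    for f in fields(changes):
        value = getattr(changes, f.name)
        if value is not None:
            updated[f.name] = value
    return updated
```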

The `RangeInvocation` is a simple wrapper around `range()`, but you must provide `stop > start`.

`RangeOfSizeInvocation` replaces the `stop` parameter with `size`, so that you can just provide the `start` and `step` and get a range of `size` length.
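The two behaviours can be sketched in plain Python, assuming integer inputs and that `size` means the number of items produced. The function names are hypothetical; the real invocations are node classes.

```python
from typing import List


def range_invocation(start: int, stop: int, step: int = 1) -> List[int]:
    """Hypothetical equivalent of RangeInvocation: a checked wrapper around range()."""
    if stop <= start:
        raise ValueError("stop must be greater than start")
    return list(range(start, stop, step))


def range_of_size_invocation(start: int, size: int, step: int = 1) -> List[int]:
    """Hypothetical equivalent of RangeOfSizeInvocation: `size` replaces `stop`."""
    if size <= 0 or step == 0:
        raise ValueError("size must be positive and step must be non-zero")
    # start + size * step is the exclusive stop that yields exactly `size` items.
    return list(range(start, start + size * step, step))
```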

When returning a `FileResponse`, we must provide a valid path, else an exception is raised outside the route handler.

Add the `validate_path` method back to the service so we can validate paths before returning the file.

I don't like this, but apparently this is just how `starlette` and `fastapi` work with `FileResponse`.
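The validation step can be sketched without a framework, assuming all images live under a single directory. `validate_path`, `get_image_path` and `ImageFileNotFound` are hypothetical. In a FastAPI route, the returned path would be passed to `FileResponse`, and the exception would be mapped to a 404.

```python
from pathlib import Path


class ImageFileNotFound(Exception):
    """Hypothetical exception; a route would translate this into a 404."""


def validate_path(path: str) -> bool:
    # Only an existing file is safe to hand to FileResponse; a bad path would
    # otherwise fail later, outside the route handler.
    return Path(path).is_file()


def get_image_path(images_dir: str, image_name: str) -> str:
    path = str(Path(images_dir) / image_name)
    if not validate_path(path):
        raise ImageFileNotFound(image_name)
    return path  # in a route: return FileResponse(path)
```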

- Address database feedback:

- Remove all the extraneous tables. Only an `images` table now:

- `image_type` and `image_category` are unrestricted strings. When creating images, the provided values are checked to ensure they are a valid type and category.

- Add `updated_at` and `deleted_at` columns. `deleted_at` is currently unused.

- Use SQLite's built-in timestamp features to populate these. Add a trigger to update `updated_at` when the row is updated. Currently no way to update a row.

- Rename the `id` column in `images` to `image_name`

- Rename `ImageCategory.IMAGE` to `ImageCategory.GENERAL`

- Move all exceptions outside their base classes to make them more portable.

- Add `width` and `height` columns to the database. These store the actual dimensions of the image file, whereas the metadata's `width` and `height` refer to the respective generation parameters and are nullable.

- Make `deserialize_image_record` take a `dict` instead of `sqlite3.Row`

- Improve comments throughout

- Tidy up unused code/files and some minor organisation
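Below is a hypothetical SQLite sketch of the single `images` table described above. It uses `STRFTIME` defaults for the timestamps and an `AFTER UPDATE` trigger to refresh `updated_at`. Column types, the trigger name and `open_db` are assumptions. `deserialize_image_record` takes a plain `dict`, produced here with `dict(row)` from a `sqlite3.Row`.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS images (
    image_name TEXT NOT NULL PRIMARY KEY,
    image_type TEXT NOT NULL,      -- unrestricted string, validated by the service
    image_category TEXT NOT NULL,  -- unrestricted string, validated by the service
    width INTEGER NOT NULL,        -- actual file dimensions, not generation parameters
    height INTEGER NOT NULL,
    created_at DATETIME NOT NULL DEFAULT (STRFTIME('%Y-%m-%d %H:%M:%f', 'now')),
    updated_at DATETIME NOT NULL DEFAULT (STRFTIME('%Y-%m-%d %H:%M:%f', 'now')),
    deleted_at DATETIME            -- currently unused
);
-- The inner UPDATE does not re-fire the trigger: recursive_triggers is off by default.
CREATE TRIGGER IF NOT EXISTS tg_images_updated_at
AFTER UPDATE ON images FOR EACH ROW
BEGIN
    UPDATE images SET updated_at = STRFTIME('%Y-%m-%d %H:%M:%f', 'now')
    WHERE image_name = old.image_name;
END;
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row  # rows can be converted with dict(row)
    conn.executescript(SCHEMA)
    return conn


def deserialize_image_record(row: dict) -> dict:
    """Hypothetical: build a record from a plain dict rather than a sqlite3.Row."""
    keys = ("image_name", "image_category", "width", "height", "updated_at")
    return {key: row[key] for key in keys}
```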

feat(nodes): add ResultsServiceABC & SqliteResultsService

**Doesn't actually work because of circular imports. Can't even test it.**

- add a base class for ResultsService and SQLite implementation

- use `graph_execution_manager` `on_changed` callback to keep `results` table in sync

fix(nodes): fix results service bugs

chore(ui): regen api

fix(ui): fix type guards

feat(nodes): add `result_type` to results table, fix types

fix(nodes): do not shadow `list` builtin

feat(nodes): add results router

It still doesn't work due to circular imports

fix(nodes): Result class should use outputs classes, not fields

feat(ui): crude results router

fix(ui): send to canvas in currentimagebuttons not working

feat(nodes): add core metadata builder

feat(nodes): add design doc

feat(nodes): wip latents db stuff

feat(nodes): images_db_service and resources router

feat(nodes): wip images db & router

feat(nodes): update image related names

feat(nodes): update urlservice

feat(nodes): add high-level images service

The problem was the same seed was getting used for the seam painting pass, causing the fried look.

It's the same issue you get if you run img2img on a txt2img result with the same seed and prompt.

Thanks to @hipsterusername for teaming up to debug this. We got pretty deep into the weeds.

This commit makes InvokeAI 3.0 installable via PyPI.org and the installer script.

Main changes:

1. Move static web pages into `invokeai/frontend/web` and modify the API to look for them there. This allows pip to copy the files into the distribution directory, so that the user no longer has to be in the repo root to launch.

2. Update invoke.sh and invoke.bat to launch the new web application

properly. This also changes the wording for launching the CLI from

"generate images" to "explore the InvokeAI node system," since I would

not recommend using the CLI to generate images routinely.

3. Fix a bug in the checkpoint converter script that was identified

during testing.

4. Better error reporting when checkpoint converter fails.

5. Rebuild front end.

* added optional middleware prop and new actions needed

* accidental import

* make middleware an array

---------

Co-authored-by: Mary Hipp <maryhipp@Marys-MacBook-Air.local>

# Application-wide configuration service

This PR creates a new `InvokeAIAppConfig` object that reads

application-wide settings from an init file, the environment, and the

command line.

Arguments and fields are taken from the pydantic definition of the

model. Defaults can be set by creating a yaml configuration file that

has a top-level key of "InvokeAI" and subheadings for each of the

categories returned by `invokeai --help`.

The file looks like this:

[file: invokeai.yaml]

```
InvokeAI:
  Paths:
    root: /home/lstein/invokeai-main
    conf_path: configs/models.yaml
    legacy_conf_dir: configs/stable-diffusion
    outdir: outputs
    embedding_dir: embeddings
    lora_dir: loras
    autoconvert_dir: null
    gfpgan_model_dir: models/gfpgan/GFPGANv1.4.pth
  Models:
    model: stable-diffusion-1.5
    embeddings: true
  Memory/Performance:
    xformers_enabled: false
    sequential_guidance: false
    precision: float16
    max_loaded_models: 4
    always_use_cpu: false
    free_gpu_mem: false
  Features:
    nsfw_checker: true
    restore: true
    esrgan: true
    patchmatch: true
    internet_available: true
    log_tokenization: false
  Cross-Origin Resource Sharing:
    allow_origins: []
    allow_credentials: true
    allow_methods:
    - '*'
    allow_headers:
    - '*'
  Web Server:
    host: 127.0.0.1
    port: 8081
```

The default name of the configuration file is `invokeai.yaml`, located

in INVOKEAI_ROOT. You can use any OmegaConf dictionary by passing it to

the config object at initialization time:

```
from omegaconf import OmegaConf
from invokeai.app.services.config import InvokeAIAppConfig

omegaconf = OmegaConf.load('/tmp/init.yaml')
conf = InvokeAIAppConfig(conf=omegaconf)
```


By default, InvokeAIAppConfig will parse the contents of `sys.argv` at

initialization time. You may pass a list of strings in the optional

`argv` argument to use instead of the system argv:

```

conf = InvokeAIAppConfig(argv=['--xformers_enabled'])

```

It is also possible to set a value at initialization time. This value

has highest priority.

```

conf = InvokeAIAppConfig(xformers_enabled=True)

```

Any setting can be overridden by setting an environment variable of the form "INVOKEAI_<setting>", as in:

```

export INVOKEAI_port=8080

```

Order of precedence (from highest):

1) initialization options

2) command line options

3) environment variable options

4) config file options

5) pydantic defaults
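The precedence order can be modelled with `collections.ChainMap`, which looks keys up in its mappings from left to right. This is a hypothetical sketch, not how `InvokeAIAppConfig` is implemented. `env_settings` and `resolve_settings` are illustrative names, and environment values stay strings because the sketch does no type conversion. The case-insensitive matching follows the behaviour described further below for `INVOKEAI_` variables.

```python
import os
from collections import ChainMap
from typing import Any, Dict, Mapping


def env_settings(environ: Mapping[str, str], prefix: str = "INVOKEAI_") -> Dict[str, str]:
    """Collect INVOKEAI_<setting> variables, matching the prefix case-insensitively."""
    return {
        key[len(prefix):].lower(): value
        for key, value in environ.items()
        if key.upper().startswith(prefix)
    }


def resolve_settings(
    init: Dict[str, Any],
    cli: Dict[str, Any],
    file: Dict[str, Any],
    defaults: Dict[str, Any],
    environ: Mapping[str, str] = os.environ,
) -> ChainMap:
    # Highest priority first: init > command line > environment > file > defaults.
    return ChainMap(init, cli, env_settings(environ), file, defaults)
```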

Typical usage:

```

from invokeai.app.services.config import InvokeAIAppConfig

# get global configuration and print its nsfw_checker value

conf = InvokeAIAppConfig()

print(conf.nsfw_checker)

```

Finally, the configuration object is able to recreate its (modified)

yaml file, by calling its `to_yaml()` method:

```

conf = InvokeAIAppConfig(outdir='/tmp', port=8080)

print(conf.to_yaml())

```

# Legacy code removal and porting

This PR replaces Globals with the InvokeAIAppConfig system throughout,

and therefore removes the `globals.py` and `args.py` modules. It also

removes `generate` and the legacy CLI. ***The old CLI and web servers

are now gone.***

I have ported the functionality of the configuration script, the model

installer, and the merge and textual inversion scripts. The `invokeai`

command will now launch `invokeai-node-cli`, and `invokeai-web` will

launch the web server.

I have changed the continuous invocation tests to accommodate the new command syntax in `invokeai-node-cli`. As a convenience, you can also pass invocations to `invokeai-node-cli` (or its alias `invokeai`) on the command line or as standard input:

```

invokeai-node-cli "t2i --positive_prompt 'banana sushi' --seed 42"

invokeai < invocation_commands.txt

```

- Make environment variable settings case InSenSiTive:

INVOKEAI_MAX_LOADED_MODELS and InvokeAI_Max_Loaded_Models

environment variables will both set `max_loaded_models`

- Updated realesrgan to use new config system.

- Updated textual_inversion_training to use new config system.

- Discovered a race condition when InvokeAIAppConfig is created

at module load time, which makes it impossible to customize

or replace the help message produced with --help on the command

line. To fix this, moved all instances of get_invokeai_config()

from module load time to object initialization time. Makes code

cleaner, too.

- Added `--from_file` argument to `invokeai-node-cli` and changed

github action to match. CI tests will hopefully work now.

- invokeai-configure updated to work with new config system

- migrate invokeai.init to invokeai.yaml during configure

- replace legacy invokeai with invokeai-node-cli

- add ability to run an invocation directly from invokeai-node-cli command line

- update CI tests to work with new invokeai syntax

* refetch images list if error loading

* tell user to refresh instead of refetching

* unused import

* feat(ui): use `useAppToaster` to make toast

* fix(ui): clear selected/initial image on error

---------

Co-authored-by: Mary Hipp <maryhipp@Marys-MacBook-Air.local>

Co-authored-by: psychedelicious <4822129+psychedelicious@users.noreply.github.com>

The `ModelsList` OpenAPI schema is generated as being keyed by plain strings. This means that API consumers do not know the shape of the dict. It _should_ be keyed by the `SDModelType` enum.

Unfortunately, `fastapi` does not actually handle this correctly yet; it still generates the schema with plain string keys.

I'm adding this anyway in the hope that it will be resolved upstream and we can get the correct schema. Until then, I'll implement the (simple but annoying) logic on the frontend.

https://github.com/pydantic/pydantic/issues/4393

1. If an external VAE is specified in config file, then

get_model(submodel=vae) will return the external VAE, not the one

burnt into the parent diffusers pipeline.

2. The mechanism in (1) is generalized such that you can now have

"unet:", "text_encoder:" and similar stanzas in the config file.

Valid formats of these subsections:

unet:

repo_id: foo/bar

unet:

path: /path/to/local/folder

unet:

repo_id: foo/bar

subfolder: unet

In the near future, these will also be used to attach external

parts to the pipeline, generalizing VAE behavior.

3. Accommodate callers (i.e. the WebUI) that are passing the

model key ("diffusers/stable-diffusion-1.5") to get_model()

instead of the tuple of model_name and model_type.

4. Fixed bug in VAE model attaching code.

5. Rebuilt web front end.
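The following hypothetical helper shows how the three stanza formats described in item 2 could be told apart once the YAML has been loaded into a dict. `resolve_submodel` and its return shape are assumptions for illustration, not the model manager's actual API.

```python
from typing import Dict, Optional, Tuple


def resolve_submodel(stanza: Dict[str, str]) -> Tuple[str, str, Optional[str]]:
    """Hypothetical: interpret a 'unet:' / 'vae:' / 'text_encoder:' stanza.

    Returns (kind, location, subfolder), where kind is "repo" or "path".
    """
    if "repo_id" in stanza and "path" in stanza:
        raise ValueError("use either repo_id or path, not both")
    if "path" in stanza:
        return ("path", stanza["path"], None)  # local folder
    if "repo_id" in stanza:
        # Optional subfolder inside the repo.
        return ("repo", stanza["repo_id"], stanza.get("subfolder"))
    raise ValueError("stanza needs a repo_id or a path")
```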

This PR improves the logging module a tad bit along with the

documentation.

**New Look:**

## Usage

**General Logger**

InvokeAI has a module-level logger. You can call it this way.

In the example below, you will use the default logger `InvokeAI`, and all your messages will be logged under that name.

```python
from invokeai.backend.util.logging import logger

logger.critical("Critical Message")  # In Bold Red
logger.error("Error Message")  # In Red
logger.warning("Warning Message")  # In Yellow
logger.info("Info Message")  # In Grey
logger.debug("Debug Message")  # In Grey
```

Results:

```
[12-05-2023 20]::[InvokeAI]::CRITICAL --> Critical Message [In Bold Red]
[12-05-2023 20]::[InvokeAI]::ERROR --> Error Message [In Red]
[12-05-2023 20]::[InvokeAI]::WARNING --> Warning Message [In Yellow]
[12-05-2023 20]::[InvokeAI]::INFO --> Info Message [In Grey]
[12-05-2023 20]::[InvokeAI]::DEBUG --> Debug Message [In Grey]
```

**Custom Logger**

If you want to use a custom logger for your module, you can import it

the following way.

```python
from invokeai.backend.util.logging import logging

logger = logging.getLogger(name='Model Manager')

logger.critical("Critical Message")  # In Bold Red
logger.error("Error Message")  # In Red
logger.warning("Warning Message")  # In Yellow
logger.info("Info Message")  # In Grey
logger.debug("Debug Message")  # In Grey
```

Results:

```
[12-05-2023 20]::[Model Manager]::CRITICAL --> Critical Message [In Bold Red]
[12-05-2023 20]::[Model Manager]::ERROR --> Error Message [In Red]
[12-05-2023 20]::[Model Manager]::WARNING --> Warning Message [In Yellow]
[12-05-2023 20]::[Model Manager]::INFO --> Info Message [In Grey]
[12-05-2023 20]::[Model Manager]::DEBUG --> Debug Message [In Grey]
```

**When to use custom logger?**

It is recommended to use a custom logger if your module is not a part of

base InvokeAI. For example: custom extensions / nodes.

1. If retrieving an item from the queue raises an exception, the InvocationProcessor thread crashes, but the API continues running in a non-functional state. This fixes the issue.

2. When there are no items in the queue, sleep 1 second before checking again.

3. Also ensures the thread isn't crashed if an exception is raised from

invoker, and emits the error event

Intentionally using base Exceptions because for now we don't know which

specific exception to expect.

Fixes (sort of)? #3222
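The three fixes can be sketched as a polling loop that never lets an exception escape. `process_queue`, `invoke` and `emit_error` are hypothetical names. `stop.wait(idle_sleep)` stands in for the one-second sleep so the loop can also be stopped promptly. The broad `except Exception` mirrors the PR's choice to catch base exceptions.

```python
import queue
import threading
from typing import Any, Callable, Optional


def process_queue(
    q: "queue.Queue[Any]",
    invoke: Callable[[Any], None],
    emit_error: Callable[[Optional[Any], BaseException], None],
    stop: threading.Event,
    idle_sleep: float = 1.0,
) -> None:
    """Hypothetical processor loop that reports errors instead of dying."""
    while not stop.is_set():
        try:
            item = q.get(block=False)
        except queue.Empty:
            # Nothing queued: wait before polling again instead of spinning.
            stop.wait(idle_sleep)
            continue
        except Exception as e:  # retrieving the item itself failed
            emit_error(None, e)
            continue
        try:
            invoke(item)
        except Exception as e:  # broad on purpose: the failure type is unknown
            emit_error(item, e)
        finally:
            q.task_done()
```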

- do not show canvas intermediates in gallery

- do not show progress image in uploads gallery category

- use custom dark mode `localStorage` key (prevents collision with the commercial product)

- use variable font (reduce bundle size by factor of 10)

- change how custom headers are used

- use style injection for building package

- fix tab icon sizes

When building the package, all CSS is bundled into the JS files; when used as a package, it is then injected into the page. This is a bit of a hack to work around missing CSS in the commercial product.

**Features:**

- Add UniPC Scheduler

- Add Euler Karras Scheduler

- Add DPMPP_2 Karras Scheduler

- Add DEIS Scheduler

- Add DDPM Scheduler

**Other:**

- Renamed schedulers to their accurate names: _a = Ancestral, _k =

Karras

- Fix scheduler not defaulting correctly to DDIM.

- Code split SCHEDULER_MAP so it's consistently loaded from the same place.

**Known Bugs:**

- `dpmpp_2s` does not work in img2img for denoising values < 0.8. This seems to be an upstream bug, so I've disabled it in img2img and canvas until the upstream bug is fixed.

https://github.com/huggingface/diffusers/issues/1866
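Here is a hypothetical sketch of a single shared scheduler map with a DDIM fallback and an img2img exclusion list. Class names are kept as strings so the block runs without diffusers installed. The entries and the `use_karras_sigmas` override are assumptions for illustration, not a copy of InvokeAI's `SCHEDULER_MAP`.

```python
from typing import Dict, List, Tuple

# Hypothetical subset: scheduler name -> (diffusers class name, config overrides).
SCHEDULER_MAP: Dict[str, Tuple[str, dict]] = {
    "ddim": ("DDIMScheduler", {}),
    "ddpm": ("DDPMScheduler", {}),
    "deis": ("DEISMultistepScheduler", {}),
    "euler": ("EulerDiscreteScheduler", {}),
    "euler_k": ("EulerDiscreteScheduler", {"use_karras_sigmas": True}),
    "euler_a": ("EulerAncestralDiscreteScheduler", {}),
    "dpmpp_2s": ("DPMSolverSinglestepScheduler", {}),
    "unipc": ("UniPCMultistepScheduler", {}),
}
DEFAULT_SCHEDULER = "ddim"
IMG2IMG_DISABLED = {"dpmpp_2s"}  # hidden in img2img and canvas until fixed upstream


def get_scheduler(name: str) -> Tuple[str, dict]:
    """Look up a scheduler, falling back to DDIM for unknown names."""
    return SCHEDULER_MAP.get(name, SCHEDULER_MAP[DEFAULT_SCHEDULER])


def img2img_schedulers() -> List[str]:
    """Scheduler names offered in img2img and canvas."""
    return [name for name in SCHEDULER_MAP if name not in IMG2IMG_DISABLED]
```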

This PR updates to `xformers ~= 0.0.19` and `torch ~= 2.0.0`, which

together seem to solve the non-deterministic image generation issue that

was previously seen with earlier versions of `xformers`.

Update the push trigger with the branch which should deploy the docs,

also bring over the updates to the workflow from the v2.3 branch and:

- remove main and development branch from trigger

- they would fail without the updated toml

- cache pip environment

- update install method (`pip install ".[docs]"`)

Hi there, love the project! I noticed a small typo when going over the install process.

When copying the automated install instructions from the docs into a terminal, the line to install the Python packages failed because it was missing the `-y` flag.


Seems like this is the only change needed for the existing inpaint code

to work as a node. Kyle said on Discord that inpaint shouldn't be a

node, so feel free to just reject this if this code is going to be gone

soon.

# Intro

This commit adds invokeai.backend.util.logging, which provides support

for formatted console and logfile messages that follow the status

reporting conventions of earlier InvokeAI versions:

```

### A critical error

*** A non-fatal error

** A warning

>> Informational message

| Debugging message

```

Internally, the invokeai logging module creates a new default logger named "invokeai" so that its logging does not interfere with other modules' use of the vanilla logging module. So `logging.error("foo")` will go through the regular logging path and not add InvokeAI's informational message decorations, while `ialog.error("foo")` will add the decorations.
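The prefix scheme above can be reproduced with a small `logging.Formatter` subclass attached to a dedicated named logger. This is a hypothetical sketch; `LegacyFormatter` and `get_legacy_logger` are not InvokeAI's names. Setting `propagate = False` is a design choice here. It keeps root-logger handlers from printing undecorated duplicates of the same records.

```python
import logging

# Prefixes used by earlier InvokeAI versions, as listed above.
LEGACY_PREFIXES = {
    logging.CRITICAL: "###",
    logging.ERROR: "***",
    logging.WARNING: "**",
    logging.INFO: ">>",
    logging.DEBUG: "|",
}


class LegacyFormatter(logging.Formatter):
    """Hypothetical formatter that decorates messages with the legacy prefixes."""

    def format(self, record: logging.LogRecord) -> str:
        prefix = LEGACY_PREFIXES.get(record.levelno, "")
        return f"{prefix} {record.getMessage()}"


def get_legacy_logger(name: str = "invokeai") -> logging.Logger:
    """Return a named logger with the legacy formatter attached exactly once."""
    logger = logging.getLogger(name)
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(LegacyFormatter())
        logger.addHandler(handler)
        # Don't also pass records up to the root logger's handlers.
        logger.propagate = False
    return logger
```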

# Usage:

This is a thin wrapper around the standard Python logging module. It can

be used in several ways:

## Module-level logging style

This style logs everything through a single default logging object and is identical to using Python's `logging` module. The commonly used module-level logging functions are implemented as simple pass-throughs to logging:

```
import logging

import invokeai.backend.util.logging as logger

logger.debug('this is a debugging message')
logger.info('this is an informational message')
logger.log(logging.CRITICAL, 'get out of dodge')
logger.disable(level=logging.INFO)
logger.basicConfig(filename='/var/log/invokeai.log')
logger.error('this will be logged to console and to invokeai.log')
```

Internally these functions all go through a custom logging object named

"invokeai". You can access it to perform additional customization in

either of these ways:

```
ia_logger = logger.getLogger()            # the default "invokeai" logger
ia_logger = logger.getLogger('invokeai')  # or, equivalently, by name
```

## Object-oriented style

For more control, the logging module's object-oriented logging style is

also supported. The API is identical to the vanilla logging usage. In

fact, the only thing that has changed is that the getLogger() method

adds a custom formatter to the log messages.

```

import logging

from invokeai.backend.util.logging import InvokeAILogger

logger = InvokeAILogger.getLogger(__name__)

fh = logging.FileHandler('/var/invokeai.log')

logger.addHandler(fh)

logger.critical('this will be logged to both the console and the log file')

```

## Within the nodes API

From within the nodes API, the logger module is stored in the `logger`

slot of InvocationServices during dependency initialization. For

example, in a router, the idiom is:

```

from ..dependencies import ApiDependencies

logger = ApiDependencies.invoker.services.logger

logger.warning('uh oh')

```

Currently, to change the logger used by the API, one must change the

logging module passed to `ApiDependencies.initialize()` in `api_app.py`.

However, this will eventually be replaced with a method to select the

preferred logging module using the configuration file (dependent on

merging of PR #3221)

- I've sorted out the issues that make *not* persisting troublesome, these will be rolled out with canvas

- Also realized that persisting gallery images very quickly fills up localStorage, so we can't really do it anyways

This vastly improves gallery performance when many images are loaded.

- `react-virtuoso` to do the virtualized list

- `overlayscrollbars` for a scrollbar
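A virtualized list stays fast because it renders only the rows that intersect the viewport, plus a small overscan margin, no matter how many images are loaded. Below is a minimal sketch of that index calculation, written in Python for consistency with the rest of this document. It assumes fixed-height rows. `react-virtuoso` also handles variable-height items, so this simplifies the idea and is not its algorithm. The `visible_range` function and its `overscan` parameter are hypothetical.

```python
import math
from typing import Tuple


def visible_range(
    scroll_top: float,
    viewport_height: float,
    row_height: float,
    total_rows: int,
    overscan: int = 2,
) -> Tuple[int, int]:
    """Return the [start, end) row indices to render for a fixed-row-height list."""
    if total_rows == 0:
        return (0, 0)
    # First row whose top edge is at or above the top of the viewport.
    first = int(scroll_top // row_height)
    # One past the last row that is at least partially visible.
    last = math.ceil((scroll_top + viewport_height) / row_height)
    # Render a few extra rows on each side so fast scrolling does not flash blanks.
    start = max(0, first - overscan)
    end = min(total_rows, last + overscan)
    return (start, end)
```

For example, with 10,000 rows of 100px in a 600px viewport, only about ten rows are mounted at any scroll position. This is why the gallery's cost stays roughly constant as more images load.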

On hyperthreaded CPUs, we get two threads operating on the queue by default on each core. This causes two threads to process queue items, which results in PyTorch errors and sometimes generates garbage.

Locking this to a single thread makes sense because we are bound by the number of GPUs in the system, not by CPU cores. To parallelize across GPUs, we should just start multiple processors (and use async instead of threading).

Fixes #3289
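The fix amounts to having exactly one thread drain the work queue. Below is a minimal sketch using the standard `queue` and `threading` modules. The `QueueProcessor` class, its sentinel-based shutdown, and the `handle` callback are assumptions for illustration, not InvokeAI's processor code.

```python
import queue
import threading
from typing import Callable


class QueueProcessor:
    """Drain a work queue on exactly one background thread (hypothetical)."""

    _STOP = object()  # sentinel that tells the worker to exit

    def __init__(self, handle: Callable[[object], None]) -> None:
        self._queue: "queue.Queue[object]" = queue.Queue()
        self._handle = handle
        # One worker, regardless of how many CPU cores or hyperthreads exist:
        # throughput is bounded by the GPU, not by CPU count.
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def put(self, item: object) -> None:
        self._queue.put(item)

    def stop(self) -> None:
        # Items queued before the sentinel are still processed, in order.
        self._queue.put(self._STOP)
        self._thread.join()

    def _run(self) -> None:
        while True:
            item = self._queue.get()
            if item is self._STOP:
                break
            self._handle(item)
```

Scaling to several GPUs would then mean running one such processor per GPU, rather than adding threads to a single queue.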

- `disabledParametersPanels` -> `disabledFeatures`

- handle disabling `faceRestore`, `upscaling`, `lightbox`, `modelManager` and OSS header links/buttons

- wait until models are loaded to hide loading screen

- also wait until schema is parsed if `nodes` is an enabled tab
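The last two bullets combine into a single readiness condition for hiding the loading screen. Below is a sketch of that logic in Python for consistency with this document (the real check lives in the TypeScript UI). The function name `should_hide_loading_screen` and its parameters are hypothetical.

```python
from typing import Iterable


def should_hide_loading_screen(
    models_loaded: bool,
    schema_parsed: bool,
    enabled_tabs: Iterable[str],
) -> bool:
    """Hypothetical readiness check for hiding the loading screen."""
    nodes_enabled = "nodes" in set(enabled_tabs)
    # Models must always be loaded; the schema only matters when the
    # nodes tab is actually available to the user.
    return models_loaded and (schema_parsed or not nodes_enabled)
```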

When the gallery was empty (and there was therefore no selected image), no progress images were displayed.

- fix by correcting the logic in `CurrentImageDisplay`

- also fix an app crash introduced by fixing the first bug

Prevent legacy CLI crash caused by removal of convert option

- Compensatory change to the CLI that prevents it from crashing when it tries to import a model.

- The bug was introduced when the "convert" option was removed from the model manager.

- Fix the update script so that it works again, and resolve the ambiguity between updating to a tag and updating to a branch by making these two operations explicitly separate.

- Remove dangling functions and arguments related to legacy checkpoint conversion. These are no longer needed now that all legacy models are either converted at import time or converted on the fly in RAM.

I noticed that the current `invokeai-new.py` was using almost all of a CPU core. After a bit of profiling, I noticed that there were many thousands of calls to `epoll()`, which suggested to me that something wasn't sleeping properly in asyncio's loop.

A bit of further investigation with Python profiling revealed that the `__dispatch_from_queue()` method in `FastAPIEventService` (`app/api/events.py:33`) was also being called thousands of times.

I believe the `asyncio.sleep(0.001)` in that method is too aggressive (it means that the queue will be polled every 1ms), and that 0.1 (100ms) would still be entirely reasonable.
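The cost difference is easy to quantify. Sleeping 1ms between empty polls wakes the event loop up to roughly 1,000 times per second even when idle, while 100ms caps it at roughly 10. Below is a minimal sketch of a polling dispatcher with the interval as a parameter, so the two settings can be compared. `dispatch_from_queue`, the stop event, and the use of a thread-safe `queue.Queue` are assumptions, not the code in `app/api/events.py`.

```python
import asyncio
import queue
from typing import Callable


async def dispatch_from_queue(
    q: "queue.Queue[object]",
    emit: Callable[[object], None],
    stop: asyncio.Event,
    poll_interval: float = 0.1,
) -> int:
    """Poll a thread-safe queue and emit events; return the number of wakeups."""
    wakeups = 0
    while not stop.is_set():
        wakeups += 1
        try:
            event = q.get(block=False)
        except queue.Empty:
            # Nothing queued: yield to the event loop for poll_interval seconds.
            # 0.001 means up to ~1000 idle wakeups/s; 0.1 means about 10.
            await asyncio.sleep(poll_interval)
            continue
        emit(event)
    return wakeups
```

Queued events are still drained back-to-back without sleeping. The interval only bounds how quickly a new event is noticed after an idle period, so 100ms trades a small worst-case delay for far less CPU.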

Currently translated at 100.0% (512 of 512 strings)

translationBot(ui): update translation (Russian)

Currently translated at 100.0% (512 of 512 strings)

translationBot(ui): update translation (English)

Currently translated at 100.0% (512 of 512 strings)

translationBot(ui): update translation (Ukrainian)

Currently translated at 100.0% (506 of 506 strings)

translationBot(ui): update translation (Russian)

Currently translated at 100.0% (506 of 506 strings)

translationBot(ui): update translation (Russian)

Currently translated at 100.0% (506 of 506 strings)

Co-authored-by: System X - Files <vasyasos@gmail.com>

Translate-URL: https://hosted.weblate.org/projects/invokeai/web-ui/en/

Translate-URL: https://hosted.weblate.org/projects/invokeai/web-ui/ru/

Translate-URL: https://hosted.weblate.org/projects/invokeai/web-ui/uk/

Translation: InvokeAI/Web UI

Currently translated at 100.0% (512 of 512 strings)

translationBot(ui): update translation (Italian)

Currently translated at 100.0% (511 of 511 strings)

translationBot(ui): update translation (Italian)

Currently translated at 100.0% (506 of 506 strings)

Co-authored-by: Riccardo Giovanetti <riccardo.giovanetti@gmail.com>

Translate-URL: https://hosted.weblate.org/projects/invokeai/web-ui/it/

Translation: InvokeAI/Web UI

Currently translated at 100.0% (512 of 512 strings)

translationBot(ui): update translation (Spanish)

Currently translated at 100.0% (511 of 511 strings)

translationBot(ui): update translation (Spanish)

Currently translated at 100.0% (506 of 506 strings)

Co-authored-by: gallegonovato <fran-carro@hotmail.es>

Translate-URL: https://hosted.weblate.org/projects/invokeai/web-ui/es/

Translation: InvokeAI/Web UI

* If `shouldFetchImages` is passed in, the UI will make an additional request to get a valid image URL when an invocation is complete

* This is necessary in order to support optional authorization for images

- Style the Minimap

- Made the Node UI Legend Responsive

- Set Min Width for nodes on Spawn so resize doesn't snap.

- Initial Implementation of Node Search

- Added FuseJS to handle the node filtering
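Node search boils down to ranking node types by how closely they match a typed query. The sketch below uses Python's standard `difflib.SequenceMatcher` as a swapped-in stand-in for FuseJS. FuseJS uses its own approximate-matching algorithm, so real rankings will differ. The node names, the `threshold` value, and the substring shortcut are illustrative assumptions.

```python
from difflib import SequenceMatcher
from typing import List


def search_nodes(query: str, node_types: List[str], threshold: float = 0.4) -> List[str]:
    """Return node types ranked by fuzzy similarity to `query` (hypothetical)."""
    q = query.lower().strip()
    if not q:
        return list(node_types)  # empty query: show every node
    scored = []
    for name in node_types:
        n = name.lower()
        # Exact substring hits rank first; otherwise use a similarity ratio in [0, 1].
        score = 1.0 if q in n else SequenceMatcher(None, q, n).ratio()
        if score >= threshold:
            scored.append((score, name))
    # Highest score first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored]
```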

The first draft of a Responsive Mobile Layout for InvokeAI, with some basic documentation to help contributors. // Notes from: @blessedcoolant

---

The whole rework needs to be done using the `mobile first` concept, where the base design caters to mobile and we add responsive changes as we scale up to larger screens.

**Added**

- Basic breakpoints have been added to the `theme.ts` file; they define the widths at which Chakra applies responsive changes.

- A basic `useResolution` hook has been added that returns either `mobile`, `tablet` or `desktop` based on the breakpoint. We can customize this hook further to do more complex checks for us if need be.
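Below is a language-neutral sketch of what a `useResolution`-style hook computes. It is written in Python to match this document's other examples; the real hook is TypeScript/React. Only `xl = 80em` (1280px) is stated in these notes. The other thresholds are Chakra UI's default breakpoints, and the mapping to `mobile`/`tablet`/`desktop` is an assumption. Neither is necessarily what `theme.ts` defines.

```python
# Assumed breakpoints in em (Chakra UI defaults); only xl = 80em is confirmed above.
BREAKPOINTS_EM = {"base": 0, "sm": 30, "md": 48, "lg": 62, "xl": 80, "2xl": 96}
PX_PER_EM = 16  # assumes the default 16px root font size


def current_breakpoint(width_px: float) -> str:
    """Largest breakpoint whose min-width the viewport satisfies (mobile first)."""
    width_em = width_px / PX_PER_EM
    active = "base"
    for name, min_em in BREAKPOINTS_EM.items():  # ascending order
        if width_em >= min_em:
            active = name
    return active


def use_resolution(width_px: float) -> str:
    """Hypothetical mapping: base/sm -> mobile, md/lg -> tablet, xl+ -> desktop."""
    bp = current_breakpoint(width_px)
    if bp in ("base", "sm"):
        return "mobile"
    if bp in ("md", "lg"):
        return "tablet"
    return "desktop"
```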

**Syntax**

- Any Chakra component can take different values for the breakpoints set in our `theme.ts` file. These can be passed in a few ways, the most descriptive being an object. For example:

`flexDir={{ base: 'column', xl: 'row' }}` - This sets the flex direction to `column` from `0em` and above, then switches it to `row` automatically at `xl` and above, which in our case is `80em` (1280px). The same format applies to any Chakra element.

`flexDir={['column', null, null, 'row', null]}` - Responsive values can also be passed to the property as an array, with each element corresponding, in order, to one of our breakpoints. Setting `null` simply skips that breakpoint. This is a handy shorthand, but I think we should stick to the object syntax for readability.

**Note**: I've modified a few elements here and there, for reference, to give an idea of how the responsive syntax works.
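Under the mobile-first model, a responsive value resolves to the nearest value set at or below the active breakpoint. That is why `null` in the array form just means "keep the previous value". Below is a Python sketch of that resolution rule. The breakpoint order is assumed to be Chakra's default (`base`, `sm`, `md`, `lg`, `xl`, `2xl`) and may not match a customized `theme.ts`. With that assumed order, index 3 of an array maps to `lg`. The helper names `from_array` and `resolve` are hypothetical.

```python
from typing import Dict, List, Optional

# Assumed breakpoint order (Chakra UI defaults); theme.ts may customize it.
ORDER = ["base", "sm", "md", "lg", "xl", "2xl"]


def from_array(values: List[Optional[str]]) -> Dict[str, str]:
    """Convert array syntax to object syntax, dropping null (None) entries."""
    return {ORDER[i]: v for i, v in enumerate(values) if v is not None}


def resolve(responsive: Dict[str, str], breakpoint: str) -> Optional[str]:
    """Value in effect at `breakpoint`: the last one set at or below it."""
    result = None
    for name in ORDER[: ORDER.index(breakpoint) + 1]:
        if name in responsive:
            result = responsive[name]
    return result
```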

---

**Problems to be solved** @SammCheese

- Some issues you might run into are with the Resizable components. We've decided not to use resizable components at smaller resolutions, since they don't make sense there, so you'll need to render these conditionally.

- Some components that need custom layouts for different screens might be better ported over to `Grid`, using `gridTemplateAreas` to swap out the layout. I've demonstrated an example of this in one of my commits. I'll let you judge where we might need it.

- The header will probably need to be converted to a burger menu of some sort, with model switching handled correctly UX-wise. We'll discuss this on Discord.

---

Anyone willing to contribute to this PR is welcome to join the discussion on Discord:

https://discord.com/channels/1020123559063990373/1020839344170348605/threads/1097323866780606615

* feat(ui): add axios client generator and simple example

* fix(ui): update client & nodes test code w/ new Edge type

* chore(ui): organize generated files

* chore(ui): update .eslintignore, .prettierignore

* chore(ui): update openapi.json

* feat(backend): fixes for nodes/generator

* feat(ui): generate object args for api client

* feat(ui): more nodes api prototyping

* feat(ui): nodes cancel

* chore(ui): regenerate api client

* fix(ui): disable OG web server socket connection

* fix(ui): fix scrollbar styles typing and prop

Just noticed the typo and made the types stronger.

* feat(ui): add socketio types

* feat(ui): wip nodes

- extract api client method arg types instead of manually declaring them

- update example to display images

- general tidy up

* start building out node translations from frontend state and add notes about missing features

* use reference to sampler_name

* use reference to sampler_name

* add optional apiUrl prop

* feat(ui): start hooking up dynamic txt2img node generation, create middleware for session invocation

* feat(ui): write separate nodes socket layer, txt2img generating and rendering with a single node

* feat(ui): img2img implementation

* feat(ui): get intermediate images working but types are stubbed out

* chore(ui): add support for package mode

* feat(ui): add nodes mode script

* feat(ui): handle random seeds

* fix(ui): fix middleware types

* feat(ui): add rtk action type guard

* feat(ui): disable NodeAPITest

This was polluting the network/socket logs.

* feat(ui): fix parameters panel border color

This commit should be elsewhere, but I don't want to break my flow.

* feat(ui): make thunk types more consistent

* feat(ui): add type guards for outputs

* feat(ui): load images on socket connect

Rudimentary

* chore(ui): bump redux-toolkit

* docs(ui): update readme

* chore(ui): regenerate api client

* chore(ui): add typescript as dev dependency

I am having trouble with TS versions since VS Code updated and now uses TS 5. `madge` installed TS 3.9.10, and for whatever reason my VS Code wants to use that. Manually specifying 4.9.5 and then setting VS Code to use it as the workspace TS version fixes the issue.

* feat(ui): begin migrating gallery to nodes

Along the way, migrate to RTK `createEntityAdapter` for gallery images, and split `results` and `uploads` into separate slices. Much cleaner this way.
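RTK's `createEntityAdapter` keeps a collection in normalized form: an ordered `ids` array plus an `entities` lookup keyed by ID, with helpers such as `addOne`, `upsertMany`, and `removeOne`. The Python class below only mirrors that state shape, to show why it suits a gallery: O(1) lookup by image name, stable ordering, and no duplicates. It is not RTK's API. Keying images by `image_name` and the method names are assumptions.

```python
from typing import Any, Dict, Iterable, List

Entity = Dict[str, Any]


class EntityStore:
    """Normalized collection: ordered ids + id -> entity map (RTK-like shape)."""

    def __init__(self, key: str = "image_name") -> None:
        self.key = key  # assumed ID field for gallery images
        self.ids: List[str] = []
        self.entities: Dict[str, Entity] = {}

    def add_one(self, entity: Entity) -> None:
        entity_id = entity[self.key]
        if entity_id in self.entities:
            return  # like addOne: an existing entity is left untouched
        self.ids.append(entity_id)
        self.entities[entity_id] = dict(entity)

    def upsert_many(self, entities: Iterable[Entity]) -> None:
        for entity in entities:
            entity_id = entity[self.key]
            if entity_id in self.entities:
                # Shallow-merge the new fields into the existing entity.
                self.entities[entity_id].update(entity)
            else:
                self.add_one(entity)

    def remove_one(self, entity_id: str) -> None:
        if self.entities.pop(entity_id, None) is not None:
            self.ids.remove(entity_id)

    def select_all(self) -> List[Entity]:
        return [self.entities[i] for i in self.ids]
```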

* feat(ui): clean up & comment results slice